Powerful processors, in the form of FPGAs or other chips, are opening up many more uses of vision technology, and this is especially the case with 3D imaging. A number of high-speed 3D smart cameras were being shown at the Vision trade fair in Stuttgart, including Teledyne Dalsa’s Z-Trak2 5GigE 3D profile sensor for in-line applications, which can deliver scan speeds of up to 45,000 profiles per second, and Automation Technology’s C6 laser triangulation sensor, able to reach a profile speed of 38kHz. Elsewhere, Nerian Vision, now owned by the TKH Group, which also owns 3D profiling provider LMI, was showing stereo vision cameras incorporating an FPGA for real-time 3D imaging.

Inder Kohli, Senior Product Manager of Vision Solutions at Teledyne Dalsa, explains that today’s 3D vision technologies help solve several inspection challenges that are difficult, if not impossible, for 2D imaging techniques. He cites the examples of “variation in height, defects caused by indentation or bubbling of laminate, measuring objects’ thickness, coplanarity of adjoining surfaces, uniformity, or asymmetry of extruded parts.”

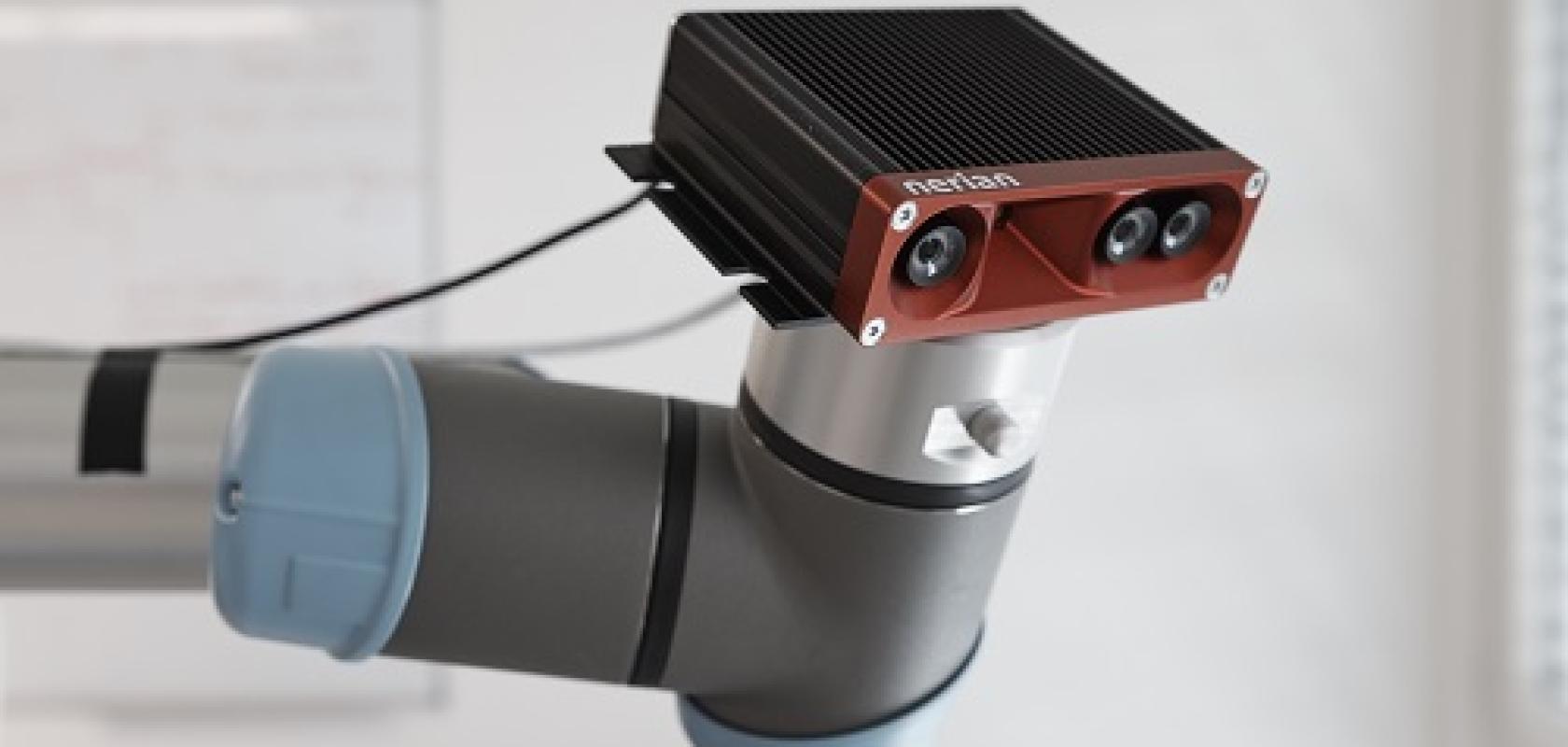

Denise Müller from Nerian Vision adds: “If volumes or obstacles are to be detected, 2D image processing often reaches its limits. If you want to answer questions like, how far away is that obstacle, or find out if a package is really filled to the fill level, then 3D image processing technologies can be used wherever depth information is required for further automation steps.”

Although 2D vision technology itself is still innovating, sometimes applying 3D vision can eliminate issues associated with the inherent limitations of 2D. For example, changes in illumination, or a lack of it, can cause problems for 2D vision systems, making images blurry or unclear. Here, 3D systems can step in as they can record depth information, generating a point cloud, and a more accurate, clear image.

In addition, “the biggest advantage of 3D scanning and processing over traditional 2D is the ability to perform measurements on low-contrasted parts, where most of the depth information would be lost if captured from a 2D sensor,” says Kohli. “For example, measuring the depth of a drilled hole in a manufactured metal part is simply impossible to achieve using a 2D image. 3D image processing allows very high precision measurements on objects with many types and shapes.”

However, creating a 3D image is much more data heavy. Knowing when 3D vision needs to be applied, and when it doesn’t, is key for industry. Collecting data just because you can is expensive in terms of computer time, storage, and energy.

Four key 3D technologies

It is not just a matter of knowing when 3D vision technology is needed, but also which type. Müller explains that there are now four key 3D technologies to take note of. The first is stereo vision, which is the most like human vision: two cameras mimic human eyes and take 2D images of an object from different angles to extract depth information.
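As a rough illustration of how depth falls out of two views, the standard pinhole relation for a rectified stereo pair is depth = focal length x baseline / disparity. The sketch below is a minimal example under that textbook model; the focal length, baseline and disparity values are hypothetical and do not describe any camera mentioned in this article.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair (pinhole model).

    disparity_px is the horizontal shift of the same point between
    the left and right images, in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means a point at infinity")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 1,000 px focal length, 10 cm between the two cameras.
    for disparity in (50.0, 10.0, 2.0):
        depth = depth_from_disparity(1000.0, 0.10, disparity)
        print(f"disparity {disparity:5.1f} px -> depth {depth:6.2f} m")
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for near ones, which is one reason resolution and matching quality are so important in stereo systems.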

Secondly, there is laser triangulation, or profiling, in which a laser line is projected onto a moving object and a camera set at an angle to the beam measures how the line is displaced by the object’s surface. Thirdly, structured light, a whole-field method, uses a light pattern projector to provide an entire 3D image of the object, and not just a single cross-sectional line.
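To make the triangulation principle concrete, here is a minimal sketch of the simplified geometry often used to explain it: the laser points straight down at the part, the camera looks at it from an angle, and a height change shifts the laser line on the sensor. The function name and all the numbers are illustrative assumptions, and real sensors rely on full calibration rather than this small-angle model.

```python
import math


def height_from_shift(shift_px: float, pixel_size_um: float,
                      magnification: float, angle_deg: float) -> float:
    """Height change (in micrometres) implied by a laser-line shift on the sensor.

    Simplified model: laser along the surface normal, camera axis tilted by
    angle_deg from the laser, constant optical magnification.
    """
    shift_on_sensor_um = shift_px * pixel_size_um
    return shift_on_sensor_um / (magnification * math.sin(math.radians(angle_deg)))


if __name__ == "__main__":
    # Hypothetical setup: 5 um pixels, 0.5x magnification, 30 degree camera angle.
    dz = height_from_shift(shift_px=10, pixel_size_um=5.0,
                           magnification=0.5, angle_deg=30.0)
    print(f"10 px shift corresponds to about {dz:.1f} um of height")
```

A larger camera angle gives more sensor shift per unit of height, and so finer height resolution, but it also increases the risk of occlusion.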

Stereo vision, laser triangulation and structured light techniques all take measurements in the spatial domain. Time-of-flight (ToF) and lidar (light detection and ranging) are based in the time domain.

Time-of-flight sensors emit a light pulse, often in the infrared. Objects in the field of view reflect this light signal back to the sensor. The sensor measures the time of flight and uses this to create 3D images. “Large scenes can thus be recorded and scanned, which is especially relevant for volume measurements,” explains Müller. “The sensors are also particularly compact. However, light pulses are susceptible to reflections and lighting conditions. High frame rates are achievable, but at moderate image resolutions.”
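The underlying arithmetic is simple: the pulse travels to the object and back, so distance is the speed of light multiplied by the measured time, divided by two. The sketch below shows that relation for a pulsed measurement; the function names and example values are illustrative, and many commercial ToF sensors measure phase shift rather than timing a single pulse directly.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target from the round-trip time of a light pulse."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def round_trip_for_distance_s(distance_m: float) -> float:
    """Round-trip time a pulse needs to cover the given distance and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    print(f"20 ns round trip -> {tof_distance_m(20e-9):.3f} m")
    # Timing difference the sensor must resolve to tell apart 1 mm of depth.
    print(f"1 mm of depth    -> {round_trip_for_distance_s(0.001) * 1e12:.2f} ps")
```

The second figure shows why depth precision is demanding for time-domain methods: millimetre-level differences correspond to picosecond-level timing differences.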

Lidar also uses time-of-flight detection. It sends out a pulsed eye-safe laser to detect the environment and retrieve depth information. Müller notes, “a big advantage is that lidar can also offer rotation and thus 360° circular detection, which is a special requirement in applications for autonomous driving.”
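For a rotating scanner, each reading is a range at a known angle, so a full sweep converts to a 2D map with basic trigonometry. The following sketch assumes evenly spaced readings over one 360° rotation; the function name and data layout are hypothetical and not tied to any particular lidar interface.

```python
import math


def scan_to_points(ranges_m, start_angle_deg=0.0):
    """Convert evenly spaced range readings from one full rotation to (x, y) points."""
    step_deg = 360.0 / len(ranges_m)
    points = []
    for index, distance in enumerate(ranges_m):
        angle = math.radians(start_angle_deg + index * step_deg)
        points.append((distance * math.cos(angle), distance * math.sin(angle)))
    return points


if __name__ == "__main__":
    # Four hypothetical readings taken at 0, 90, 180 and 270 degrees.
    for x, y in scan_to_points([1.0, 2.0, 1.0, 2.0]):
        print(f"x = {x:+.2f} m, y = {y:+.2f} m")
```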

When developing a machine vision system, which technology to use depends on the application. In some cases, getting the machine to see what you want it to requires a combination of techniques.

Human vision copycat

In mimicking human vision, one of the great advantages of stereo vision is that it has a passive mode of operation. As with our eyes, this form of machine vision does not require signals to be emitted to generate depth information. Müller notes that “this makes stereo vision technologies irreplaceable in outdoor applications.” In addition, as it is not disturbed by other light signals, a 3D stereo camera can be used to supplement other 3D vision techniques.

However, stereo vision techniques are particularly computationally intensive, and Müller points out that “many manufacturers have to rely on additional computing power in the form of servers or graphics cards.”

“At Nerian, we pursue the innovative approach of implementing the image processing on the hardware side in a field-programmable gate array (FPGA). This is very complex in terms of implementation and programming, but for the user, 3D image processing becomes possible in real time and without additional load on other computing units, even at high image resolutions.”

Nerian’s SceneScan stereo vision sensor has the processing power required for real-time stereo vision in challenging lighting conditions – in bright daylight or at long distances. It is also available in a small and energy-efficient system.

In an example of how vision systems can be integrated to fit different applications, Nerian’s latest Ruby 3D depth camera is a hybrid solution of active illumination and passive stereo vision. It uses an infrared laser dot projector and integrates two monochrome sensors for depth perception with a colour sensor. This means it can act like a stereo vision camera, working well outdoors and sensing colour, but can also perform precise measurements on difficult surfaces.

When it comes to mobile robotics, the Ruby 3D can achieve solid obstacle and path detection and provides reliable 3D depth data to control autonomous vehicles or robots. Its combined depth and colour information also gives it applications in agricultural robotics, such as checking soil conditions and gathering information on crop growth status. It can also detect challenging and bulk materials in bin-picking applications. In addition, Ruby 3D has an inertial sensor (IMU) and, together with its robustness against dark and bright light conditions, this means it can be used on construction sites, for example, for navigation, mapping, and obstacle detection.

Profiling with precision

In contrast to stereo vision, laser profiling techniques generally require the objects they are seeing to be in continuous linear motion. This makes them an ideal technology for production lines where objects move along a conveyor belt. As laser light is monochromatic, detectors are calibrated to only detect light deflected from the laser and therefore these systems can be used in both dark and illuminated conditions.
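Because each profile is a single cross-section, resolution along the direction of travel is set by how far the object moves between profiles. The sketch below works through that relation; the belt speeds are hypothetical, while the 45,000 profiles-per-second figure is the Z-Trak2 maximum quoted in this article.

```python
def profile_spacing_um(belt_speed_m_s: float, profile_rate_hz: float) -> float:
    """Distance the object travels between consecutive profiles, in micrometres."""
    return belt_speed_m_s / profile_rate_hz * 1e6


def max_belt_speed_m_s(spacing_um: float, profile_rate_hz: float) -> float:
    """Fastest belt speed that still keeps profiles within the required spacing."""
    return spacing_um * 1e-6 * profile_rate_hz


if __name__ == "__main__":
    rate_hz = 45_000  # maximum profile rate quoted for the Z-Trak2
    for speed in (0.5, 1.0, 2.0):  # hypothetical conveyor speeds in m/s
        print(f"{speed:.1f} m/s -> one profile every "
              f"{profile_spacing_um(speed, rate_hz):.1f} um")
```

This is why profile rate is such a prominent specification: doubling it either halves the spacing between profiles or lets the line run twice as fast at the same spacing.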

Teledyne Dalsa’s Z-Trak2 3D profile sensor can reach scan speeds of 45,000 profiles per second. Credit: Teledyne Dalsa

“3D systems are becoming more reliable, and affordable, and are easier to deploy and maintain for factory applications that require 100% inline inspection,” says Kohli. He introduces the Teledyne Dalsa Z-Trak2 high-performance 3D profiler sensor as being “top of the range” for high-speed in-line measurement and inspection applications. “Its features include a high frame rate, single scan high dynamic range (HDR), and multiple automated optical inspection (AOI).” In addition, “Z-Trak2 offers the ability to combine multiple units to help expand the field of view without sacrificing aspects of resolution; it can remove occlusion and unwanted reflections or create a 360-degree view of the object.”

With its ability to output both 3D and 2D data concurrently, Z-Trak2 also combines vision technologies to meet the needs of packaging, logistics, and identification applications. It offers a cost-effective solution, where “expensive” 3D data does not need to be collected and processed if not required. The factory-calibrated Z-Trak2 also overcomes some of the key challenges that face laser profilers, including occlusion, spurious reflections, and laser speckle, which makes it easier to set up and maintain, and a more attractive 3D option for factories.

Imaging at WARP speed

Athinodoros Klipfel from Automation Technology, another leader in 3D sensor tech, highlights that “one of the key limitations with laser profiling is speed.” Profiling with a laser can create millions of data points, which slows processing.

To tackle this issue, Automation Technology has developed a sensor chip for what it calls Widely Advanced Rapid Profiling (WARP). Klipfel notes that, “instead of transferring the complete image and all data points for processing, with WARP, only the region with the laser line is output and this vastly accelerates the frame rate and profile speed of the 3D sensor.”

WARP allows high resolution to be combined with high profile speed, which leads to optimised surface inspection: smaller defects can be found at higher production speeds. Automation Technology’s new C6 sensor is a 3D laser triangulation sensor that combines speed and resolution. The sensor chip incorporates WARP technology, allowing the camera to reach a profile speed of up to 38kHz at an image size of 3,072 x 201 pixels, and with a resolution of 3,072 points per profile. WARP technology is unique to Automation Technology, making it the global leader in high-resolution, high-speed profiling.
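The quoted figures give a sense of the data volumes involved. The sketch below simply multiplies them out, comparing the pixels in the 3,072 x 201 image window at 38kHz with the 3,072-point profiles that are ultimately delivered. It is back-of-the-envelope arithmetic on the published numbers, not a description of how the WARP chip works internally.

```python
def pixels_per_second(width_px: int, height_px: int, profile_rate_hz: int) -> int:
    """Pixels in the image window that must be evaluated each second."""
    return width_px * height_px * profile_rate_hz


def points_per_second(points_per_profile: int, profile_rate_hz: int) -> int:
    """3D profile points delivered each second."""
    return points_per_profile * profile_rate_hz


if __name__ == "__main__":
    window = pixels_per_second(3072, 201, 38_000)
    profiles = points_per_second(3072, 38_000)
    print(f"image window: {window / 1e9:.2f} gigapixels per second")
    print(f"profile data: {profiles / 1e6:.2f} million points per second")
    print(f"reduction   : {window // profiles}x")
```

Every row added to the image window increases the pixel load proportionally, which illustrates why restricting output to the region around the laser line has such a direct effect on achievable profile speed.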

Klipfel adds: “WARP is revolutionising profiling, and is taking inspections in industries such as semiconductors, steel or transportation, to new levels.”

The future of 3D

Time-of-flight and lidar technology are still in their nascent phases. They allow 3D images to be captured in real time and have potential applications in Industry 4.0, robotics, logistics, surveillance, intelligent traffic systems (ITS), mapping/building, and automated guided vehicles including drones.

But at present, ToF cameras are complex and often have low spatial resolution. To reach their full potential, further development in camera technology is needed, together with seamless software and system integration.

Kohli notes: “With ToF, we will see future developments to improve accuracy and precision. In combination, this will allow greater distance and reflectivity range, minimise the stray light effect, and enable systems to evolve autonomously and to take real-time decisions in complex conditions and changing environments.”

Müller thinks it is important for the future of 3D technology that sensors become more reliable, faster, and more accurate. “Furthermore, there is a big trend towards AI through machine learning. When combined with 3D vision technologies in the right way, this will open doors for many more applications.” She points to areas like agriculture and forestry automation, and in the emerging smart factories of Industry 4.0. She concludes: “In this way we can also ensure that the development of automation technology takes place in harmony with safety-relevant aspects and offers greater value to society.”

More dimensions in Stuttgart

Optomotive presented a newly engineered 3D high-speed smart sensor series called LOM at the Vision trade fair. The LOM series consists of customisable and user-programmable high-speed laser triangulation sensors based on Optomotive’s FPGA technology. The series can achieve inspection rates up to 10kHz. Optimised optical design, in-camera Peak Detection and blue laser light combine to generate high-quality data from shiny and other challenging surfaces.

The camera includes an Arm system-on-chip, combined with an industrial Ams image sensor and laser line projector. The in-camera Peak Detection IP core processes images to produce profiles with 8-bit subpixel resolution.
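Peak detection means finding, for every sensor column, the row where the laser line is brightest, ideally to a fraction of a pixel. One widely used approach is a centre-of-gravity (intensity-weighted mean) calculation, sketched below. This is a generic illustration rather than Optomotive’s algorithm, and reading “8-bit subpixel” as 1/256-pixel steps is an assumption made for the example.

```python
def peak_centre_of_gravity(column, threshold=0):
    """Sub-pixel row position of the laser line in one sensor column.

    Returns the intensity-weighted mean row of all pixels brighter than
    threshold, or None if no pixel qualifies.
    """
    weighted_sum = 0.0
    intensity_sum = 0.0
    for row, value in enumerate(column):
        if value > threshold:
            weighted_sum += row * value
            intensity_sum += value
    if intensity_sum == 0:
        return None
    return weighted_sum / intensity_sum


def to_fixed_point(position, fractional_bits=8):
    """Encode a sub-pixel position as an integer with the given fractional bits."""
    return round(position * (1 << fractional_bits))


if __name__ == "__main__":
    # Hypothetical grey values down one column; the line is near row 4.
    column = [0, 0, 10, 60, 100, 60, 30, 0]
    peak = peak_centre_of_gravity(column)
    print(f"peak at row {peak:.3f}, fixed-point code {to_fixed_point(peak)}")
```

Running this step in the camera means only one position per column leaves the sensor, instead of the whole image.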

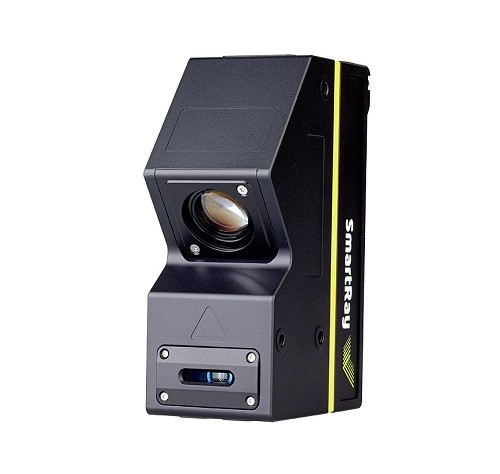

Other 3D profile sensors released at the trade fair include SmartRay’s Ecco X 25 3D sensor, offering 4,096 points of resolution and a scan rate of 40kHz. The Ecco X targets automated optical inspection in electronics and other challenging industries.

SmartRay’s Ecco X sensor. Credit: SmartRay

With a measurement range of 20mm and a stand-off distance of 65mm, the Ecco X 25 delivers a typical vertical resolution of 0.9 to 1.4µm and a typical lateral resolution of circa 5.0 to 7.0µm. The z-linearity is 0.005%, and z-repeatability is 0.2µm, targeting inspection of miniaturised electronics and precision-machined or 3D-printed mechanical parts. A scan at full field of view and 40kHz delivers up to 163 million points per second.
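The throughput figure follows directly from the other specifications: 4,096 points per profile at 40,000 profiles per second. The short sketch below reproduces that calculation, and also converts the z-linearity percentage into micrometres on the assumption, not stated in the source, that it is expressed relative to the 20mm measurement range.

```python
def points_per_second(points_per_profile: int, scan_rate_hz: int) -> int:
    """3D points delivered each second at full field of view."""
    return points_per_profile * scan_rate_hz


def linearity_um(percent_of_range: float, measurement_range_mm: float) -> float:
    """Linearity error in micrometres, ASSUMING the percentage refers to the
    full measurement range (an assumption, not confirmed by the source)."""
    return percent_of_range / 100.0 * measurement_range_mm * 1000.0


if __name__ == "__main__":
    print(f"{points_per_second(4096, 40_000) / 1e6:.2f} million points per second")
    print(f"z-linearity of about {linearity_um(0.005, 20.0):.1f} um over 20 mm")
```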

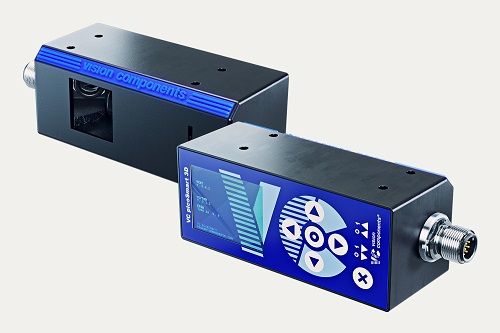

Vision Components launched the OEM laser profiler, VC PicoSmart 3D, with integrated processors for 3D profile data analysis. The profiler is made up of an embedded vision system with a 1MP image sensor, an FPGA pre-programmed for 3D computation, a high-end FPU processor, and a line laser module with a 130mW blue laser. The system is enclosed in an industrial-grade housing measuring 100 x 40 x 41mm.

Vision Components’ VC PicoSmart 3D laser profiler. Credit: Vision Components

Scanners for bin picking were also on display. Zivid announced the second member of its Zivid Two 3D camera family, the Zivid Two L100. The L100 has been developed to be able to tackle the larger, deeper bins typically seen in the manufacturing industry.

The camera has a focus distance of 100cm, a recommended working distance of 60 to 160cm, a field of view of 105 x 62cm at 100cm distance, and 2.3MP resolution. It has a spatial resolution of 0.56mm at 100cm distance, dynamic range of 90dB, and dimensional trueness of greater than 99.7%.

Zivid’s VP of sales, Mikkel Orheim, told Imaging and Machine Vision Europe at Vision Stuttgart that trueness – whether the camera is both precise and accurate at producing 3D coordinates – is the real test of whether a camera is reliable enough for manufacturers to deploy at scale. He said trueness in 3D cameras for bin picking is hard to find.

Zivid tests its cameras for use in industrial environments, making sure there’s no drift in precision or accuracy over a temperature range of 0-45°C, and after being exposed to the kind of vibration and rapid acceleration commonly found when mounted on a robot arm.

Finally, MVTec showed a deep-learning bin-picking application based on its AnyPicker tool in Halcon software. In the demonstration, a robot system picked up arbitrary objects with unknown shapes with the help of Halcon.

The application combines 3D vision and deep learning for the first time with the aim of robustly detecting gripping surfaces. In contrast to typical bin-picking applications, there is no need to teach object surfaces – no prior knowledge about the objects is required. MVTec says that this enables typical applications, for instance in logistics, to be implemented in a shorter time and more cost-efficiently.