Last year’s announcements that Nvidia plans to acquire Arm, and that AMD plans to buy Xilinx, will inevitably affect embedded vision developers. In an opinion piece reacting to the news, Jonathan Hou, president of Pleora Technologies, said that vision developers will benefit from a unified programming interface from each vendor, but that they will have to commit to a specific platform, more so than before.

What the next generation of computer chips from the combined forces of Nvidia and Arm, and AMD and Xilinx will look like remains to be seen. But those building embedded vision products today have a reasonably broad choice of embedded boards, as well as MIPI camera modules, according to Jan-Erik Schmitt, VP of sales at Vision Components. He said that how easy it is to develop an embedded vision system depends on the engineer’s knowledge, but that it is now a lot easier than it was 25 years ago, which is how long Vision Components has been making embedded vision components and systems. Most systems are based on Linux, which many computer vision programmers are familiar with and for which there is a good choice of CPUs, mainly Arm-based.

Schmitt sees interest in embedded vision in the medical field, for analytical devices used in applications such as DNA sequencing and blood analysis. Here, the move is usually from PC systems using USB cameras to embedded processing boards, which removes the need for the PC. There are also many new applications, often based on AI, Schmitt noted: devices for smart cities and smart appliances, for example. AI is also finding uses in medical devices.

The rise of MIPI CSI-2

Building an embedded vision system involves connecting a camera to a processing board, and for this the MIPI CSI-2 interface is becoming more important, Schmitt said. MIPI CSI-2 has its origins in the consumer sector, where it is used in mobile phones, tablets, and the cameras built into PCs and laptops. It is a low-power solution, which matters for edge devices and embedded platforms, where power consumption has to be kept low. It has started showing up in industrial and semi-industrial applications as companies like Qualcomm and Nvidia expand beyond the consumer sector, bringing the MIPI interface with them on their boards. Areas such as automotive, with autonomous driving, and IoT are thought to be the next big markets for computer chips.

The data rate of MIPI CSI-2 is around 1.5Gb/s per lane, and depending on the system and sensors there can typically be up to four lanes, giving around 6Gb/s bandwidth. The cable length from the image sensor to the processing platform is limited to about 200mm for robust data transmission, which is fine for most embedded applications.
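
As a rough worked example of what those figures mean in practice, the sketch below estimates the highest frame rate a CSI-2 link could carry for a given sensor resolution and raw bit depth. It assumes the ~1.5Gb/s per lane quoted above and treats the whole link as usable; real links lose some capacity to packet headers and blanking, which the hypothetical `efficiency` parameter can be lowered to approximate.

```python
def max_frame_rate(width, height, bits_per_pixel, lanes, gbps_per_lane=1.5, efficiency=1.0):
    """Theoretical upper bound on frames per second over a MIPI CSI-2 link.

    gbps_per_lane defaults to the ~1.5Gb/s per-lane figure quoted in the text.
    efficiency (0..1] is an assumed factor for protocol overhead;
    1.0 means every bit on the link carries pixel data.
    """
    link_bits_per_second = lanes * gbps_per_lane * 1e9 * efficiency
    bits_per_frame = width * height * bits_per_pixel
    return link_bits_per_second / bits_per_frame


if __name__ == "__main__":
    # Four lanes (~6Gb/s) carrying a 1080p sensor at 10 bits per pixel (RAW10)
    print(f"1080p RAW10: {max_frame_rate(1920, 1080, 10, lanes=4):.0f} fps")
    # The same link carrying a 12 megapixel sensor at 12 bits per pixel (RAW12)
    print(f"12MP RAW12: {max_frame_rate(4000, 3000, 12, lanes=4):.1f} fps")
```

With four lanes this gives roughly 289fps for 1080p RAW10 and about 42fps for a 12 megapixel RAW12 sensor, before any overhead; in practice the sensor’s own readout speed may be the tighter limit.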

‘The MIPI interface is a good one, but the percentage of standardisation is quite low,’ Schmitt said. ‘This has many advantages, but in terms of speed of development it can be a disadvantage.’

One thing that Schmitt says embedded vision developers need to be aware of is that there is no common MIPI camera control interface for processing units, so processors aren’t easily interchangeable. As a result, every combination of MIPI camera and processor needs a specific driver. ‘As long as the driver is offered together with the MIPI camera module, then development is quite easy – it’s a standard Linux OS and a Linux driver to access the camera,’ Schmitt said. ‘Where issues can occur is in making sure the driver is available, or having a MIPI camera manufacturer that offers a driver together with the hardware.’
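
Once such a driver is loaded, the camera normally appears to Linux applications as a Video4Linux2 (V4L2) device. The sketch below, which assumes a Linux system with sysfs mounted at the usual location, lists the device nodes the kernel has registered and the name each driver reports, a quick way to check whether a camera’s driver is actually present. How frames are then captured (directly from the node, or through a vendor ISP framework) depends on the board.

```python
import os

SYSFS_V4L2 = "/sys/class/video4linux"


def list_v4l2_devices(sysfs_root=SYSFS_V4L2):
    """Return (device_node, driver_name) pairs for registered V4L2 devices.

    Returns an empty list if the sysfs directory does not exist
    (e.g. on non-Linux systems) or no video driver is loaded.
    """
    devices = []
    if not os.path.isdir(sysfs_root):
        return devices
    for entry in sorted(os.listdir(sysfs_root)):
        name_path = os.path.join(sysfs_root, entry, "name")
        try:
            with open(name_path) as f:
                name = f.read().strip()
        except OSError:
            name = "<unknown>"  # entry without a readable name attribute
        devices.append((f"/dev/{entry}", name))
    return devices


if __name__ == "__main__":
    for node, name in list_v4l2_devices():
        print(node, name)
```

An empty result on a board where a MIPI camera is physically connected usually points to the driver problem Schmitt describes rather than to the application code.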

Engineers, at least those outside the industrial vision space, tend to start by choosing the processor board and then picking the image sensor. Vision Components offers pure MIPI camera modules supplied with standard drivers in source code that can be adapted, giving the engineer full flexibility in the choice of processor.

Other interfaces, such as LVDS, are also often used, but MIPI is now included on many newer embedded boards. Meanwhile, on the image sensor side, Sony has developed its SLVS-EC (Scalable Low-Voltage Signalling with Embedded Clock) high-speed interface, which has potential for embedded vision system design, although specific drivers are still needed. In the industrial vision world, EmVision, a standardisation initiative led by the European Machine Vision Association, aims to develop a camera API standard for embedded vision systems.

Choosing a platform

According to Schmitt, four platforms are getting attention at the moment: Nvidia; Raspberry Pi, for rapid prototyping; NXP, which is attracting growing interest; and FPGA-based solutions.

FPGA is the most complex of these four, Schmitt said, but there are companies very familiar with FPGA programming, and a MIPI camera module attached to an FPGA can give a product with a long lifespan.

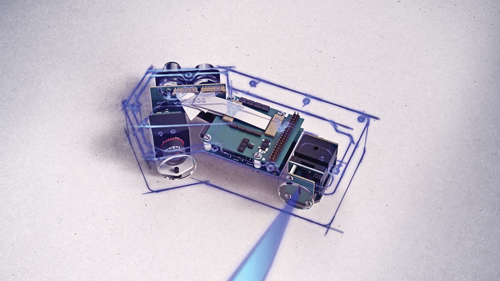

A compact embedded vision system using Vision Components modules. Credit: Vision Components

Schmitt added that the other three platforms are fairly similar to develop on, as long as the drivers are available. ‘The Raspberry Pi is still a closed system in some ways, because if you are not using the official Raspberry camera, you have no access to the internal ISP. It’s easy, but it’s limited,’ he said. ‘Nvidia Jetson is a bit more complex, but you have many more options – you have access to the GPU and ISP functions.

‘I wouldn’t say any of the three other platforms stand out as being easier to use, because for each there are advantages and disadvantages.’

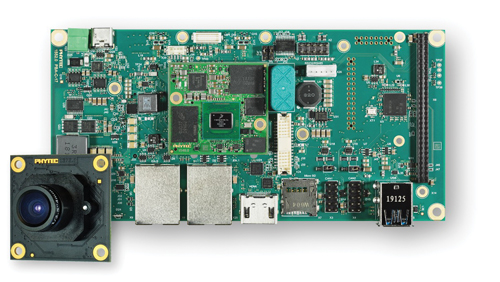

The German firm Phytec concentrates on NXP solutions for its embedded vision offerings. It has 26 years’ experience building embedded vision solutions and, like Vision Components, ensures that drivers for its cameras are provided in its board support package (BSP). This package also supports various vision middleware, including OpenCV, MVTec’s Halcon library, and TensorFlow Lite. Cameras with MIPI interfaces are available, and the company now offers the NXP i.MX 8M Plus processor, which contains a neural processing unit to accelerate AI applications.

Along with design, customisation and rapid prototyping services, Phytec also supplies development kits to give customers easy access to embedded imaging and to let them start developing immediately. Susan Remmert, responsible for strategic partnerships at Phytec, noted that all components in the kit are industrial-grade, so customers can make a prototype very close to what might be the final product.

Various industrial vision suppliers are now offering embedded vision development kits, including Basler, The Imaging Source, and Allied Vision. All three have kits based on the Nvidia Jetson Nano developer kit. Allied Vision and MVTec have teamed up on their starter kit to offer Allied Vision’s 1.2 megapixel CSI-2 camera, Alvium 1500 C-120, along with Halcon software. The Imaging Source also provides a kit based on Raspberry Pi, and has recently introduced MIPI CSI-2 and FPD-Link-III board cameras, as well as FPD-Link-III cameras with IP67-rated housing. The board camera range includes monochrome and colour versions with sensors from Sony and On Semiconductor, and resolutions from 0.3 to 8.3 megapixels.

Remmert said the questions Phytec receives about embedded imaging concern aspects such as lens specification (Phytec offers M12 or C/CS-mount lens holders on its camera modules, or can create a custom camera with an integrated lens), how rugged the components are, and their maintenance and lifetime. Part of developing an embedded vision product is validating the image acquisition chain: the optics, the lighting and the image sensor. The algorithm also has to be validated, and then comes the choice of hardware for the end product and the validation of that software and hardware combination.

PhyBoard-Pollux embedded imaging kit with i.MX8M Plus module and VM-016 MIPI CSI-2 camera module. Credit: Phytec

‘Image processing development takes time,’ Schmitt said. ‘Creating a prototype can be reasonably fast, but the validation of the final solution takes time. And then companies have to bring this into mass production.’

Building an image processing system involves a lot of image acquisition and a lot of testing, Schmitt explained. The device has to be tested in the real world, which can throw up problems that force the developer to alter the algorithm or the image acquisition chain. This takes time, and the cycle of adapting the system may have to be repeated several times.

‘If you are fast, you can do everything from scratch in one year,’ Schmitt said. ‘If you are making a commercial product, it’s between one and four years.’

Sometimes the software development is already done and the customer is moving from a PC to an embedded platform. In that case, the work involves porting the software to the final mass-production system and optimising it there.

Schmitt added that, as long as the engineer is working with an embedded Linux solution based on Arm processors, it is easy to assess performance on a standard platform, such as a Raspberry Pi, and then estimate how fast or slow it will run on a different platform.
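
A minimal way to make that kind of assessment is to time the processing step on the reference board and scale the result. The sketch below measures the median time per frame for any processing function. The scaling step assumes a single relative-speed factor between the two boards (for example, obtained by running the same benchmark on both), a simplification that holds best for CPU-bound code on the same Arm architecture and breaks down once memory bandwidth, caches or hardware accelerators dominate. The `threshold` function is a hypothetical stand-in for a real algorithm.

```python
import statistics
import time


def median_frame_time(process, frame, repeats=50, warmup=5):
    """Median wall-clock seconds taken by process(frame) over several runs."""
    for _ in range(warmup):  # let caches and CPU frequency settle first
        process(frame)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        process(frame)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def estimate_on_target(reference_seconds, relative_speed):
    """Scale a reference timing by an assumed speed ratio (target / reference)."""
    return reference_seconds / relative_speed


def threshold(frame, level=128):
    """Hypothetical stand-in workload: binarise an 8-bit greyscale frame."""
    return bytes(255 if p >= level else 0 for p in frame)


if __name__ == "__main__":
    frame = bytes(range(256)) * (640 * 480 // 256)  # synthetic VGA frame
    t_ref = median_frame_time(threshold, frame, repeats=10)
    print(f"reference: {t_ref * 1e3:.1f} ms/frame, {1 / t_ref:.1f} fps")
    # Assume the target board has been measured as 1.8x faster on this workload
    t_target = estimate_on_target(t_ref, relative_speed=1.8)
    print(f"estimated target: {t_target * 1e3:.1f} ms/frame")
```

Using the median rather than the mean keeps one-off stalls (a background process, a page fault) from skewing the estimate.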

Throwing AI into the mix

The performance of the system also depends on whether the engineer is using AI or rule-based image processing algorithms. AI is highly dependent on hardware acceleration, and there are differences between accelerators from Intel and Google, GPUs, and FPGAs, according to Schmitt. The type of neural network the developer uses also makes a huge difference. Engineers will often already have made this choice, Schmitt said; it is taken at the beginning of the project because someone has expertise in TensorFlow, for example, or knows all the tools in the Nvidia toolchain.

Artificial intelligence adds another layer of complexity to an embedded vision project. The hardware platforms are now available, which is helping companies to start working on vision AI projects, noted Dimitris Kastaniotis, product manager at Irida Labs. However, for real-world vision-AI solutions, a lot of effort beyond connecting libraries and hardware platforms is needed to create and support a successful vision-AI application, he said.

Kastaniotis said there are several pain points that need to be addressed during the development of an AI vision solution, such as defining the problem in the first place, the availability of data, the hardware optimisation, and the AI lifecycle management.

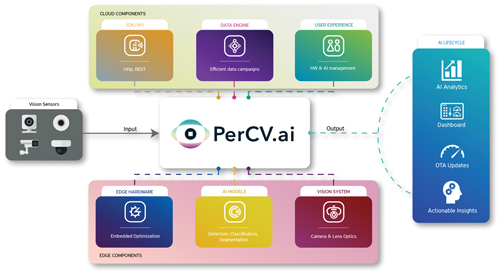

Irida Labs has developed an end-to-end AI software and services platform, PerCV.ai, to shorten development time for building embedded vision AI systems. It is designed to help customers define a problem, and deploy and manage vision devices.

According to Kastaniotis, the first step when starting an AI computer vision project is determining what the customer needs, by getting a detailed specification from them. This is the first point at which delays can be introduced, as customers can have differing expectations of what can and cannot be achieved.

Irida Labs’ PerCV.ai workflow: an AI software and services platform that supports the full vision-AI product lifecycle. Credit: Irida Labs

‘You have to create a common understanding with the customer, and do that quickly,’ Kastaniotis said. ‘The definition of the problem can not only affect the performance, but also the specifications of the vision system and the hardware platform selection. In addition, when working with AI you have to describe the problem in terms of data, as this is what neural networks understand.’

Once the problem has been defined, the next step is a pilot project with customer samples in order to gauge whether the vision solution is solving the problem according to the expectations of the customer.

Then comes the data campaign, which can be time consuming and expensive, in Kastaniotis’s experience.

Cameras have to be installed to collect the data, which then has to be annotated. PerCV.ai aims to keep data campaigns to a minimum by collecting the right data and minimising annotation effort.
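
The article does not say how PerCV.ai reduces annotation effort. One widely used generic technique is uncertainty sampling from active learning: run the current model over unlabelled frames and send only those it is least sure about to human annotators. The sketch below ranks frames by the margin between the model’s two highest class probabilities; the input format (a mapping from frame ID to a list of class probabilities) is an assumption for illustration.

```python
def margin(probabilities):
    """Gap between the two most likely classes; a small gap means an uncertain prediction."""
    ranked = sorted(probabilities, reverse=True)
    second = ranked[1] if len(ranked) > 1 else 0.0
    return ranked[0] - second


def select_for_annotation(predictions, budget):
    """Return up to `budget` frame IDs whose predictions are the least certain.

    predictions: dict mapping frame ID -> list of class probabilities
    """
    by_uncertainty = sorted(predictions, key=lambda frame_id: margin(predictions[frame_id]))
    return by_uncertainty[:budget]


if __name__ == "__main__":
    predictions = {
        "frame_001": [0.92, 0.05, 0.03],  # confident: little value in labelling
        "frame_002": [0.48, 0.44, 0.08],  # ambiguous between two classes
        "frame_003": [0.60, 0.30, 0.10],
    }
    print(select_for_annotation(predictions, budget=2))
```

Here only frame_002 and frame_003 would go to annotators; the confident frame_001 is skipped, which is where the saving in labelling effort comes from.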

The next stage is to move from the first pilot installation to deployment, while continuing to gather feedback from the customer. ‘When you start to scale up the system with more sensors and more feedback loops, then you definitely need a platform that will help roll out updates, get feedback, and also detect drift in the distribution of the data,’ Kastaniotis said. The PerCV.ai platform can help with these aspects, so that the vision system can be scaled up and maintained over its lifetime.
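
Kastaniotis does not describe how PerCV.ai detects drift. As a generic illustration, one simple statistic is the population stability index (PSI), which compares the histogram of a monitored quantity in live data with the histogram seen during training: PSI = Σ (a_i − e_i) ln(a_i / e_i), where e_i and a_i are the fractions of reference and live samples in bin i. The sketch assumes the monitored quantity is each frame’s mean brightness (a hypothetical choice; model confidence or embedding statistics are common alternatives) and uses the often-quoted rule of thumb that a PSI above about 0.25 signals significant drift, a heuristic rather than a standard.

```python
import bisect
import math


def bin_fractions(values, edges):
    """Fraction of values falling in each bin defined by ascending edges."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1  # clamp out-of-range values into the end bins
    return [c / len(values) for c in counts]


def population_stability_index(reference, current, edges, eps=1e-6):
    """PSI = sum((a - e) * ln(a / e)) over bins; eps avoids log(0) for empty bins."""
    expected = bin_fractions(reference, edges)
    actual = bin_fractions(current, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


if __name__ == "__main__":
    edges = list(range(0, 257, 32))  # eight brightness bins covering 0..255
    training = [i % 256 for i in range(1000)]     # hypothetical per-frame mean brightness
    live = [min(255, b + 100) for b in training]  # e.g. scenes have become much brighter
    score = population_stability_index(training, live, edges)
    print(f"PSI = {score:.2f}", "-> drift" if score > 0.25 else "-> stable")
```

In a deployed fleet, a check like this would typically run per device on a rolling window of recent frames, flagging units whose data no longer resembles what the model was trained on.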

‘By solving the three main bottlenecks in embedded AI vision development – defining the problem, the data campaign, and updating the system during deployment – we saw a significant improvement in the time-to-market of an embedded vision solution,’ Kastaniotis added.

‘With our platform, we are able to go from six months or one year for a standard embedded vision project, to four to eight weeks for developing and implementing a first system.’

Ultimately, according to Schmitt, the toolsets available to vision engineers now are far better than they were 10 or 15 years ago. There are also ways to speed up prototyping, for instance by evaluating the image acquisition chain in parallel with the choice of CPU. ‘Choices you make in software may automatically influence the choice of hardware and vice versa,’ Schmitt said. But, he added, ‘grabbing images and validating the image acquisition equipment – image sensor, lens, and lighting – can be determined completely independently from the processing platform, using any standard cameras.’
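
Because that validation is independent of the processing platform, it can be scripted on a PC against images from any standard camera. The sketch below computes two simple, commonly used checks on a greyscale image: the fraction of clipped pixels (a sign of over- or under-exposure, often a lighting problem) and the variance of the Laplacian, a widely used focus measure where lower values suggest a blurrier image. The image is assumed to be a list of rows of 8-bit values, and any pass/fail thresholds would have to be set for the specific application.

```python
import statistics


def exposure_report(img, max_value=255):
    """Mean level plus fractions of pixels clipped at black and at white."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    return {
        "mean": sum(pixels) / n,
        "clipped_black": sum(p <= 0 for p in pixels) / n,
        "clipped_white": sum(p >= max_value for p in pixels) / n,
    }


def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over interior pixels (needs >= 3x3)."""
    responses = []
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            responses.append(
                img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
            )
    return statistics.pvariance(responses)


if __name__ == "__main__":
    flat = [[128] * 8 for _ in range(8)]  # featureless, like a badly defocused image
    checker = [[255 if (x + y) % 2 else 0 for x in range(8)] for y in range(8)]  # fine detail
    print(laplacian_variance(flat), laplacian_variance(checker))
    print(exposure_report(checker))
```

The focus measure is only meaningful when comparing images of the same scene, for example while adjusting a lens, since a scene with little texture also scores low.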

In addition, upgrading a Linux-on-Arm system is relatively easy. Moving to a quad-core Arm processor, or to a dual-core Arm with a higher clock rate, ‘wouldn’t impact your development time,’ Schmitt said, adding: ‘you don’t have to start everything from scratch.’

--

Vision at Embedded World

The Embedded World exhibition has gone digital, with embedded vision technology and conference talks presented virtually from 1 to 5 March.

The embedded vision conference track will run on 4 and 5 March, and will include case studies covering agricultural livestock monitoring, wildfire surveillance, and a system for detecting anomalies during welding. There will be two sessions on system integration, with talks from the Indian Institute of Technology in Mumbai, Allied Vision, Arm, Neil Trevett at The Khronos Group, Xilinx, and Core Avionics and Industrial. Intel will speak about its OpenVINO platform, Basler about optimising neural networks, and Toradex about tuning performance.

VDMA Machine Vision will organise a panel discussion on embedded vision, asking what the factors for success are when developing embedded vision systems, and what the barriers to entry are.

Products on display at the digital trade fair include the VC PicoSmart from Vision Components, which will premiere at the show. The board measures 22 x 23.5mm, about the size of a postage stamp.

Developing with the OpenCV AI kit

To celebrate OpenCV’s 20th anniversary, the open source computer vision library is running a Kickstarter campaign for its OpenCV AI kit (OAK).

At the time of writing, the campaign, which launched last summer, has raised more than $1.3m.

OAK combines MIT-licensed open source software with hardware based on the Myriad X vision processor.

Backers pledging $99 will receive an OAK 12 megapixel rolling shutter camera module capable of 60fps, while a $149 pledge secures the OAK-D, a stereovision camera with synchronised global shutter sensors.

The cameras can be programmed using Python, OpenCV and the DepthAI package. They are supplied with neural networks covering: Covid-19 mask or no-mask detection; age recognition; emotion recognition; face detection; detection of facial features, such as the corners of the eyes, mouth and chin; general object detection (20-class); pedestrian detection; and vehicle detection. Those wanting to train models based on public datasets can use OpenVINO to deploy them on OAK.

There are two ways to generate spatial AI results from OAK-D: monocular neural inference fused with stereo depth, where the neural network is run on a single camera and fused with disparity depth results; and stereo neural inference, where the neural network is run in parallel on OAK-D’s left and right cameras to produce 3D position data directly with the neural network.
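
Both approaches ultimately rest on the standard relationship for a rectified stereo pair: depth Z equals the focal length (in pixels) times the baseline, divided by the disparity, which is the horizontal offset of the same point between the left and right images. The sketch below applies it to a keypoint found in both images, as in the stereo neural inference case. It is not the DepthAI API, and the calibration numbers are hypothetical; on real hardware they would come from the device’s own calibration.

```python
from dataclasses import dataclass


@dataclass
class StereoCalibration:
    """Hypothetical rectified-stereo calibration."""
    focal_px: float    # focal length in pixels, shared by both rectified cameras
    baseline_m: float  # distance between the two camera centres, in metres
    cx: float          # principal point x, in pixels
    cy: float          # principal point y, in pixels


def triangulate(calib, left_xy, right_xy):
    """3D position (metres, left-camera frame) of a point seen at left_xy and right_xy."""
    x_left, y_left = left_xy
    x_right, _ = right_xy  # rows match after rectification
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = calib.focal_px * calib.baseline_m / disparity
    x = (x_left - calib.cx) * z / calib.focal_px
    y = (y_left - calib.cy) * z / calib.focal_px
    return x, y, z


if __name__ == "__main__":
    calib = StereoCalibration(focal_px=800.0, baseline_m=0.075, cx=640.0, cy=400.0)
    # The same keypoint (e.g. a detected facial landmark) located in each image
    print(triangulate(calib, left_xy=(700.0, 400.0), right_xy=(680.0, 400.0)))
```

With these assumed values, a 20-pixel disparity puts the point about 3m away; halving the disparity doubles the distance, which is why stereo depth precision falls off with range.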

OpenCV is running a second spatial AI competition, inviting users to submit solutions to real-world problems using the OAK-D module. A similar competition last summer featured winning projects including systems to aid the visually impaired, a device for monitoring fish, and a mechanical weeding robot for organic farming.