‘If you were complaining about how fast technology is moving today, it will never be slower than it is right now. The rate of advancement is escalating.’ This comment was made by Jason Carlson, CEO of computing firm Congatec, during a panel discussion about vision technology at the Embedded World trade fair in Nuremberg at the end of February. Embedded World brought together the big players in computer hardware – Arm, Intel, AMD, Nvidia and Microsoft, to name a few – with suppliers of software, electronic displays and everything in between. Machine vision firms, including Basler, Allied Vision, Framos, MVTec Software, Imago Technologies, Stemmer Imaging and Vision Components, were among the exhibitors. They are looking to sell into areas outside factory automation, such as retail, where customers are using powerful yet small computing boards to build smart devices with built-in imaging capabilities.

The accelerating performance of embedded boards that Carlson referred to is being driven by large markets such as automotive, with its heavy investment in self-driving cars, and by other consumer sectors. Some analysts say the rate of change is faster than Moore’s law, which states that the number of transistors on an integrated circuit doubles every two years. ‘The big companies like Google and Amazon are driving high levels of performance and volume applications. It drives knowledge and experience and price-performance for the rest of the industry in a way that’s good,’ Carlson said.

Augmented and virtual reality (AR and VR), along with other personal computing devices, were identified during the panel discussion as another area that could advance computer vision. The aim is to get these systems to run on battery power while still using sensors to image the scene and track eye movement. Michael Gielda, of computing company Antmicro, said that he didn’t think AR and VR would be the ‘new smartphone’, but that this new wave of devices would force costs down and make sensors smaller.

For embedded developers, incorporating vision is relatively new, depending on the market: smartphone and car makers know all about imaging, but others might not. Olaf Munkelt, managing director of MVTec Software, made the point that there are lots of components available with higher bandwidth and specialised processors, but what’s lacking is a blueprint for integrating all these components into an embedded vision system. He said that customers are coming to MVTec asking for complete solutions.

‘We’re at the beginning stages of this,’ Carlson said. ‘This is not a mature and defined market.’ He added that Congatec’s speciality is embedded computing, so it doesn’t make sense for the company to try to become vision experts. ‘It’s all about creating an ecosystem of partners: camera, sensor, software. Together, as an ecosystem, we have the best chance of helping the customer get their solutions to market faster.’

Paul Maria Zalewski, director of product management at Allied Vision, agreed that partnerships are necessary to lower the effort of building an embedded vision system, to make sure sensor alignment is accurate, that the lens is suited to the application, and to deal with image processing.

Congatec has partnered with Basler to develop embedded vision systems, while Antmicro is working with Allied Vision and its Alvium cameras. Speaking to Imaging and Machine Vision Europe on the show floor, Gerrit Fischer, head of product market management at Basler, said that Basler is shifting its strategy to become a full solution supplier, at least in terms of embedded imaging, rather than just selling cameras. Basler began in 1988 as a solution provider, but switched to being a component supplier five years later, and from then on built its business on industrial cameras. Now, however, it is moving back to developing complete solutions, this time for embedded devices; it acquired embedded solutions expert Mycable in 2017 with this in mind.

Fischer observed that the embedded vision market is much more complex than the industrial sector: there are few standards, the customers are new, and embedded imaging requires a new sales approach, one based on providing complete solutions rather than components. He said that Basler will offer consultancy on developing embedded products.

Congatec and Basler, along with NXP Semiconductors and Irida Labs, were demonstrating a proof-of-concept system for the retail sector at the show, designed to identify items in a shopping basket for automated checkout. It uses an NXP i.MX 8QuadMax system-on-chip (SoC) mounted on a Conga-SMX8 Smarc 2.0 computer-on-module from Congatec, a Smarc 2.0 carrier board, and Basler’s 13-megapixel Dart Bcon for MIPI camera module. Irida Labs trained the neural network to recognise the items in the shopping basket.

Panellists at the embedded vision discussion. From left: Paul Maria Zalewski, Allied Vision; Michael Gielda, Antmicro; James Tornes, On Semiconductor; Jason Carlson, Congatec; and Olaf Munkelt, MVTec Software. Credit: Nürnberg Messe / VDMA

Fischer also said that Basler had decided not to include pre-processing inside the camera, because the board computers have their own image signal processors. ‘It’s a contradiction to keep processing on the camera,’ he commented. Its Dart camera module, available in various resolutions, has a Bcon for MIPI interface; those wanting to build an imaging device can experiment with the Dart development kit, which is equipped with a Qualcomm Snapdragon 820 SoC.

Basler is targeting volumes of 1,000 to 20,000 units for custom projects, Fischer told Imaging and Machine Vision Europe, adding that a full project could take a year to develop.

Some embedded system developers already have vision expertise, such as Phytec, which has been operating an embedded imaging group for the past 25 years. Phytec is an NXP partner, which means it gets early access to NXP processors to develop system-on-modules; it recently released the Phycore-i.MX 8 family for development on i.MX 8 boards. It also builds custom solutions. Examples include a vision device for SSB Wind Systems that measures how the rotor blades of wind turbines bend, which took a year to develop, and a handheld instrument for Nextsense designed to measure train wheels during safety inspections.

Open communities

The complexity of embedded vision is ‘only going to get worse in some sense’, at least in the short term, observed Gielda, of Antmicro, during the panel discussion. ‘Right now we’re seeing so many different new and exciting technologies… but as a side effect you get a lot of confusion,’ he said. ‘There is no single element that will make it all work suddenly. You see the entrance of players like Google or Amazon, and they’re not necessarily making it easier because they have new ideas.’

Gielda advocates open-source communities as a way to address the complexity of developing embedded systems. One way to gain knowledge and experience of building embedded systems is to learn from others working on similar projects, whether hobbyists or industrial players. All the computer hardware firms have their own open-source communities, and then there are platforms like RISC-V, an open-source hardware instruction set architecture based on reduced instruction set computer (RISC) principles. ‘RISC-V is enabling innovation; it’s now more about openness,’ Gielda said.

Intel was promoting its OpenVINO toolkit for developing computer vision solutions at Embedded World; the toolkit includes software libraries, OpenCV, and model optimisers for convolutional neural networks (CNNs). Intel has also published reference implementations on GitHub, for example for building a face access control solution (https://github.com/intel-iot-devkit/face-access-control) and for people counting (https://github.com/intel-iot-devkit/people-counter). Machine vision firms, including Allied Vision and Basler, also publish information on GitHub. Xilinx has its developer forums, including its ReVision zone, while Arm has contributed to 200 open-source projects, according to Arm product manager Radhika Jagtap, who presented during the embedded vision conference programme.

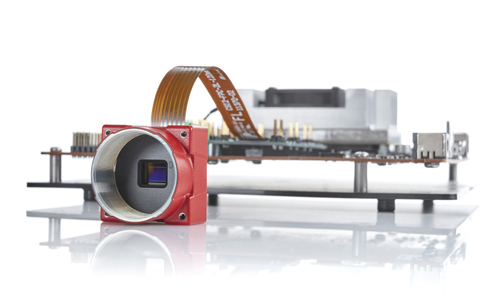

Basler’s Dart Bcon for MIPI development kit won an award at Embedded World. Credit: Basler

Open-source tools are a big part of the embedded sector, and they are where many developers turn to begin projects. Speaking to Imaging and Machine Vision Europe at the exhibition, Munkelt, of MVTec, said that open-source software competes with the company’s own Halcon and Merlic products, but not strongly, largely because it takes a lot of work to optimise open-source code for specific architectures. Then there is the cost of developing and maintaining the code, which in Munkelt’s view is underestimated and which is covered in the price of a professional software suite. In the end, he believes, most customers decide that it’s worth buying professional software to make the system run quickly and to shorten the product’s time-to-market.

Deep learning

The escalating rate of change in computing is most acute in artificial intelligence. Chip makers now all offer dedicated processors for running neural networks, which are widely known to be compute-intensive, along with tools for pruning and optimising models so that they are efficient enough to run on edge devices. Nick Ni, director of product marketing at Xilinx, said that AI is evolving so fast that silicon hardware design cycles can’t keep up, which, he said, is one reason to use Xilinx’s adaptive hardware based on FPGAs.
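To make ‘pruning’ concrete, the sketch below shows one common, generic technique, unstructured magnitude pruning, in which the weights with the smallest absolute values are set to zero. It is an illustrative assumption only, not the method used by any particular vendor’s toolchain mentioned here; the function name `magnitude_prune` and the `sparsity` parameter are hypothetical.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Return a copy of `weights` with the smallest-magnitude entries set to zero.

    Hypothetical helper: `sparsity` is the target fraction of weights to remove.
    Entries tied with the threshold are pruned too, so slightly more than the
    target fraction may be zeroed.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)  # number of weights to remove
    if k == 0:
        return weights.copy()
    magnitudes = np.abs(weights).ravel()
    # k-th smallest magnitude: every weight at or below it is removed
    threshold = np.partition(magnitudes, k - 1)[k - 1]
    mask = np.abs(weights) > threshold
    return np.where(mask, weights, 0.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    layer = rng.normal(size=(64, 64)).astype(np.float32)
    pruned = magnitude_prune(layer, sparsity=0.8)
    print(f"fraction of weights kept: {np.count_nonzero(pruned) / layer.size:.1%}")
```

Zeroed weights only save compute if the runtime or hardware can exploit sparsity; in practice pruning is usually followed by retraining and quantisation, which this sketch leaves out.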

Here again, the niche machine vision market might benefit from the huge amount of development going into neural networks, although questions about the technology remain, not least the black-box nature of deep learning algorithms. Gielda commented: ‘We don’t understand necessarily what deep learning algorithms are doing and why they are doing so well. That’s something we need to address.’ He cited a security system as an example, and the necessity to understand why its algorithm would flag certain people or situations as a security risk.

In the panel discussion, Munkelt noted that there are two parts to take into consideration regarding deep learning: the algorithm and the data. ‘Today the data is more important,’ he said. ‘Look at the automobile industry and at how much it spends on building up datasets. [The value] is not in the algorithm, but in the data. Customers want to own their data.’

Allied Vision’s Alvium camera has been used in embedded projects in partnership with Antmicro. Credit: Allied Vision

Nirmal Kumar Sancheti, from AllGo Systems, gave a presentation about developing its See ’n’ Sense driver monitoring device, which uses deep learning algorithms to detect signs of drowsiness in drivers. The algorithm monitors features such as head pose, gaze, blink rate and blink duration, but Sancheti noted that one challenge in building the system was collecting drowsiness data, as it’s not good enough simply to record people acting drowsy. Here, once again, data is key.
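Two of the features mentioned, blink rate and blink duration, are simple to derive once an upstream model has decided, frame by frame, whether the eyes are closed. The sketch below assumes such a hypothetical per-frame eye-closed signal and a known frame rate; it is not AllGo’s implementation, and the names `closed_runs`, `blink_stats` and `BlinkStats` are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class BlinkStats:
    blink_count: int
    blinks_per_minute: float
    mean_blink_duration_s: float


def closed_runs(eye_closed: Sequence[bool]) -> List[int]:
    """Lengths, in frames, of each contiguous run of closed-eye frames."""
    runs: List[int] = []
    current = 0
    for closed in eye_closed:
        if closed:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    if current:  # the sequence ended mid-blink
        runs.append(current)
    return runs


def blink_stats(eye_closed: Sequence[bool], fps: float) -> BlinkStats:
    """Blink rate and mean blink duration from hypothetical per-frame eye-closed flags."""
    if fps <= 0 or len(eye_closed) == 0:
        raise ValueError("need a positive frame rate and at least one frame")
    runs = closed_runs(eye_closed)
    minutes = len(eye_closed) / fps / 60.0
    count = len(runs)
    mean_s = sum(runs) / count / fps if count else 0.0
    return BlinkStats(count, count / minutes, mean_s)


if __name__ == "__main__":
    # Two seconds of video at 30fps containing two blinks (3 and 6 frames long).
    frames = [False] * 10 + [True] * 3 + [False] * 20 + [True] * 6 + [False] * 21
    print(blink_stats(frames, fps=30))
```

The hard part, as Sancheti pointed out, lies elsewhere: deciding which blink patterns genuinely indicate drowsiness requires real drowsiness data, not just the arithmetic above.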

Last a lifetime

Will all this development activity find its way into the machine vision sector? Mark Williamson, managing director of Stemmer Imaging, commented that embedded systems provide the opportunity for innovation. ‘It’s not all just about price; it’s more about the opportunity of creating systems that have advantages over traditional PC systems, because of size, power, simplicity, as well as cost.’ He added: ‘Some applications will still be best served with PCs. There always needs to be a business case.’

Stemmer Imaging first worked with embedded processing for a specific customer with high-volume and low-cost drivers – the project warranted custom software engineering, according to Williamson. ‘In recent years elements of these custom developments have given us the knowledge to create off-the-shelf software products that need little or no custom development,’ he said. ‘This makes embedded more accessible to mid-volume applications and can scale to more applications.’

Stemmer Imaging is porting more of its advanced software tools to embedded platforms. It also offers cameras with embedded interfaces, such as MIPI, and can create customer-specific subsystems combining various elements with embedded processors.

Component longevity is important for industrial systems, and chip makers are now offering it to a certain extent: Qualcomm’s Snapdragon 820E embedded platform, for example, provides availability for a minimum of 10 years. According to Carlson, 40 per cent of Congatec’s customers are from the industrial sector and make products that they have to support for 20 or 30 years. Relating this back to his comment about how fast embedded processing is changing, he remarked: ‘I say to them [industrial customers], do we really believe 30 years from now anyone will be using a system designed today? This will be like four orders of magnitude behind in performance.’
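Carlson’s ‘four orders of magnitude’ can be sanity-checked with a short calculation. Assuming, purely for illustration, that embedded performance $P$ keeps doubling every $T_2 = 2$ years (the Moore’s law cadence; actual gains vary by workload and were not quantified on the panel), the performance ratio after $t = 30$ years is:

```latex
\frac{P(t)}{P(0)} = 2^{t/T_2}, \qquad T_2 = 2~\text{years},\quad t = 30~\text{years}
\;\Rightarrow\; \frac{P(30)}{P(0)} = 2^{15} = 32\,768 \approx 10^{4.5}
```

That is roughly four and a half orders of magnitude, in line with Carlson’s estimate.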

On the question of price, low-cost cameras are available, such as the OpenMV Cam H7. Priced at $65, it has an Arm Cortex-M7 processor and an OV7725 image sensor that captures 640 × 480-pixel images at 60fps.
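The OpenMV figures give a feel for the data rate even a low-cost embedded camera produces. The sketch below multiplies resolution by frame rate, then converts to uncompressed bandwidth; the 8- and 16-bit-per-pixel formats are illustrative assumptions, not a statement of the camera’s actual output format, and the helper names are hypothetical.

```python
def pixel_rate(width: int, height: int, fps: float) -> float:
    """Pixels per second delivered at a given resolution and frame rate."""
    return width * height * fps


def raw_bandwidth_mb_s(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed data rate in megabytes per second (1 MB = 10**6 bytes)."""
    return pixel_rate(width, height, fps) * bits_per_pixel / 8 / 1e6


if __name__ == "__main__":
    rate = pixel_rate(640, 480, 60)
    print(f"{rate:,.0f} pixels/s")  # 18,432,000 pixels/s
    for bpp in (8, 16):  # assumed pixel depths, e.g. greyscale vs a 16-bit colour format
        print(f"{bpp}-bit: {raw_bandwidth_mb_s(640, 480, 60, bpp):.1f} MB/s")
```

At roughly 18 to 37 MB/s of raw data, a microcontroller-class processor has only a small budget of cycles per pixel, which is one reason such cameras suit simple vision tasks rather than heavy analytics.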

Carlson commented: ‘They [customers] want performance at the price of a Raspberry Pi, and we can’t do that, but if we can combine vision with something else – now you can give them performance for a small added cost.’ He said combining vision capabilities with other functionality in one chip will drive down prices.

He added: ‘Imagine a system … where on one board you’ve got one core of a chip being a gateway, one core being a real-time motion controller, and four cores of that same chip doing serious image [processing] – face recognition, age recognition, mood recognition – and this all on one system. Are these systems too expensive? When you consolidate all these functions into one, I think this is really going to be a change in what can be done in the embedded vision world.’

Success through collaboration

By Anne Wendel, VDMA Robotics and Automation

Hardware and software manufacturers for embedded vision components must work together for the benefit of users, in order to promote the effective use of this future-oriented technology. This was one of the conclusions of the VDMA panel discussion – Embedded vision and machine learning: new architectures and technologies boosting (new) vision applications – during the Embedded World trade fair on 27 February in Nuremberg.

All five panellists, representatives of both the classical machine vision sector and the embedded community, agreed that the potential of embedded vision in combination with deep learning is enormous. This impression seemed to be shared by the more than 100 attendees and by many of the 1,100 exhibitors: numerous demos at the booths were vision related. Without doubt, many future applications will be based on embedded vision: small, integrated image processing systems that work intelligently inside devices and enable them to see and understand. Embedded vision is made possible by compact, high-performance computing platforms that consume very little energy and, thanks to standardised interfaces to image sensors, can process increasing amounts of image data in real time. With artificial intelligence, image processing systems are becoming even more intelligent; they are learning for themselves.

According to the panellists, embedded vision technology will not completely replace traditional PC- or smart camera-based machine vision systems in the future, but it offers technically and economically interesting solutions in a multitude of application fields. The development speed of the individual components required, from sensor boards and a wide variety of embedded platforms to software, remains enormously high. As a result, embedded vision technology has now reached a level of performance sufficient to build effective systems.

However, an important step towards making embedded vision easier to develop is for component manufacturers to cooperate on standardisation and platform building. If users have to laboriously assemble sensors, processors, software and other components individually, embedded vision will not reach its full potential. Various camera, embedded board and software manufacturers have now recognised this and are cooperating in the interests of users.

Participant statements

Paul Maria Zalewski, Allied Vision: ‘Today’s biggest challenge to apply efficient vision capabilities to embedded systems is the camera itself, with all the integration efforts. New kinds of camera modules and technologies will help embedded engineers to lower NRE costs significantly.’

Olaf Munkelt, MVTec Software: ‘Deep learning on embedded devices is continuously gaining importance in the market. Yet, we do not see deep learning as a one-for-all solution, but rather as an ideal complementary technology for solving specific machine vision applications, such as classifying defects. By combining deep learning technology with other approaches, complex vision tasks, including pre- and post-processing can be solved efficiently.’

Jason Carlson, Congatec: ‘Given the continuous evolution of increased computing power at small sizes, low power advancements, and multicore processors capable of running multiple software applications, highly intelligent vision systems with centralised computing at the edge will enable a wide variety of volume applications. Vision systems powered with serious analytics, using time-sensitive networks, and delivering real-time performance, will enable the next generation of products at market-acceptable price points. The value created will be from digitisation of information.’

James Tornes, On Semiconductor: ‘[Artificial intelligence] is not simple, but it is starting to do great things. These systems are the heart of new transportation experiences with autonomous driving; they are developing higher efficiency and better quality manufacturing systems for in-line product inspection; and it [AI] is simplifying shopping experiences, from having no checkout lines to paying at vending machines with face recognition. There is a lot more to do, but real change is happening today because of improvements in imaging quality and advancements in artificial intelligence.’