Caption: Solutions for driver assistance and autonomous vehicles were on display at Embedded World. Credit: NürnbergMesse

Walking around the Embedded World trade fair in Nuremberg, it would be an overstatement to say that vision was everywhere, but among all the processor boards and software suites, vision demonstrations could be found in surprising abundance. The CTO of AMD, Mark Papermaster, in his keynote speech on the first day of the conference, highlighted medical imaging, face recognition, autonomous vehicles and industrial automation as key areas for embedded processing, all of which use vision to some extent.

AMD had brought technology from a number of its customers to the show, including an industrial inspection system from Q Technology, a Danish firm that builds camera solutions for food inspection, for tasks like grading potatoes and quantifying the amount of sediment in beer. It has been using AMD’s Accelerated Processing Units (APUs) – a combination of CPU and GPU on a single chip – in its potato inspection systems since 2012.

Papermaster’s keynote presentation was telling, both because of the breadth of areas that now use imaging and the fact that he considered industrial automation a sizable market for AMD processors.

Vision has sensing capabilities not provided by any other type of sensor, according to Jeff Bier, founder of the Embedded Vision Alliance and president of BDTI, who was speaking during a panel discussion on embedded vision. Basler’s chief marketing officer, Arndt Bake, added: ‘Vision is complicated, but we believe it is totally underutilised, and if we can crack the nut to make it more available, many more applications will start to use it.’

As well as exhibiting on its own stand at the trade fair, Basler was showing its cameras designed for embedded computing applications on the booth of Arrow Electronics, the electronic component and computing supplier with 2016 revenue of $23.82 billion. Arrow is integrating Basler’s technology into its system-on-module and boards portfolio for embedded vision developers. In addition, Basler’s line of industrial cameras is now supported on Qualcomm Technologies’ Snapdragon 820E embedded board, which has a quad-core CPU, GPU and DSP. Snapdragon processors are typically found in mobile phones and other mobile devices.

Leon Farasati, director of product management for Snapdragon embedded computing at Qualcomm, said during the panel discussion that Basler’s line of industrial cameras being supported by Snapdragon is ‘a big deal’. ‘Before, you were stuck with mobile sensors if you wanted to [build] an embedded device. A lot is changing.’

As with much of what influences the relatively small world of industrial machine vision, embedded vision is evolving out of the investment made in consumer and mobile devices, notably advances in the processing boards. Farasati said: ‘Nowadays, we talk about how PCs are trying to have the mobile experience on embedded devices. From the power and the performance perspective, we have made leaps and bounds. You don’t need the PC power anymore; we’re even beyond that. For us, [it’s] how do we make it easier for people to harness what’s inside the system-on-chip.’

Bier added that most of the processing power – especially most of the energy-efficient processing power allowing vision algorithms to run on embedded boards without a lot of cooling – comes from processing engines other than the CPU, such as GPUs, DSPs or FPGAs, all of which typically have higher performance than CPUs.

Xilinx’s Zynq 7000 series system-on-chip (SoC) combines an Arm processor with an FPGA and has been the basis of a number of machine vision smart cameras. Vision Components first introduced a smart camera based on the Zynq SoC back in 2014; at the trade fair in Nuremberg, it launched an Arm-based 3D triangulation sensor, the VC Nano 3D-Z.

Xilinx was demonstrating several vision solutions at Embedded World, including: machine learning recognition on four cameras simultaneously; video image signal processing (ISP) implementations on Zynq; ADAS computer vision capabilities for monitoring driver attention; and a multi-stream video codec. In the automotive market, Xilinx shipped more than 40 million of its chips in 2017 for integration into 26 makes of vehicle.

The inexpensive and powerful embedded processing boards being built for areas like automotive sensing and mobile devices are bound to find their way onto the factory floor in some form or another. At the moment, the vision portion of a machine is not normally connected to a network because cameras create so much data, according to Bake, at Basler. But with a switch to Industry 4.0 and the Industrial Internet of Things (IIoT), there will be more vision processing on the edge – i.e. onboard a device – Bake said, with the data being fed directly into a factory network.

Edge nodes capable of real-time processing, tracking, and part rejection can report back raw data, analysis, and findings to a central or cloud compute platform. ‘This hybrid approach enables the realisation of the benefits of centralised cloud compute, without having to sacrifice on latency and determinism,’ explained Kevin Kleine, National Instruments’ vision product manager, when speaking to Imaging and Machine Vision Europe.
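The hybrid approach Kleine describes can be pictured with a minimal Python sketch. Everything here is hypothetical and for illustration only: the `EdgeNode` class, the dark-pixel defect measure and the threshold are assumptions, not part of any National Instruments product. The point is simply that the time-critical reject decision is made on the device, while findings are queued and sent to a central platform later.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class InspectionResult:
    """Finding produced on the edge node for one inspected part."""
    part_id: int
    defect_score: float
    rejected: bool


@dataclass
class EdgeNode:
    """Hypothetical edge node: decides locally, reports centrally."""
    reject_threshold: float
    pending_reports: List[InspectionResult] = field(default_factory=list)

    def inspect(self, part_id: int, pixels: Sequence[int]) -> InspectionResult:
        """Make the reject decision on the device, with no network round trip."""
        if not pixels:
            raise ValueError("no image data for part")
        # Stand-in analysis (an assumption): fraction of dark pixels.
        dark = sum(1 for value in pixels if value < 50)
        score = dark / len(pixels)
        result = InspectionResult(part_id, score, score > self.reject_threshold)
        # Queue the finding for the central platform; it is not sent here.
        self.pending_reports.append(result)
        return result

    def flush_reports(self) -> List[InspectionResult]:
        """Hand the queued findings to a central or cloud platform in one batch."""
        batch, self.pending_reports = self.pending_reports, []
        return batch


if __name__ == "__main__":
    node = EdgeNode(reject_threshold=0.1)
    node.inspect(1, [200] * 100)             # clean part, accepted
    node.inspect(2, [10] * 20 + [200] * 80)  # 20 per cent dark pixels, rejected
    for report in node.flush_reports():
        print(report)
```

In this sketch only the findings are reported; a real system might also send raw images or intermediate analysis, as the quote suggests, depending on the bandwidth available.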

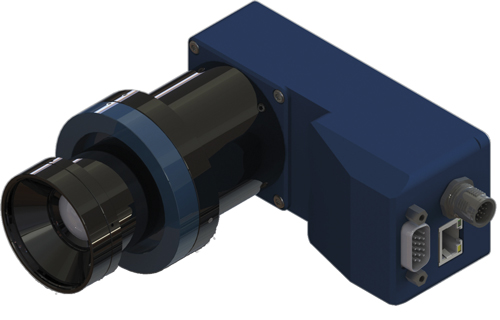

Imago Technologies' VisionCam LM line scan camera has an inbuilt Arm Cortex-A15 processor

The IIoT also creates a market opportunity that’s big enough to be attractive to chip companies like AMD, Qualcomm, Intel or Xilinx, and the ability to develop vision applications on these chips could change the way vision is deployed in factories.

Bier commented: ‘It creates an enormous opportunity, where instead of vision being a distinct stage in the manufacturing process, vision now starts to become integrated into every stage in the manufacturing process.’

Farasati explained that Qualcomm is now committed to availability and support for the Snapdragon 820 until 2025, specifically to cater for the industrial market, where product lifecycles are longer. He said that this commitment to product longevity required fundamental changes internally at Qualcomm, and that it was driven by the industrial market.

Looking outside the factory

The market potential for embedded vision outside the factory floor is much larger than that for traditional machine vision, and many machine vision companies exhibiting at Embedded World are targeting both industrial and non-industrial applications. Olaf Munkelt, managing director of MVTec Software, commented during the panel discussion that the embedded part of MVTec grew twice as fast last year as its machine vision business, largely because of non-industrial applications.

Bake said retail was one area where the use of vision was growing, for tasks ranging from people counting to fully automated shops like the Amazon Go store that has opened in Seattle, which doesn’t have checkouts but uses cameras to track what each customer picks up from the shelves and charges it to their account. ‘What we see with these applications is companies providing solutions that are really specific,’ explained Bake. ‘You have one company doing people counting, another focusing on checkouts; it’s really application-specific solutions because the market behind that is big enough.’

Amazon Go is able to operate thanks, in part, to machine learning algorithms, which can make sense of all the image data by learning, rather than being specifically programmed. Bier said that, while the hardware has improved dramatically, a key bottleneck to deploying vision has been creating the algorithms for a specific application. This is now changing, however, because of the advances in machine learning.

Chip maker Arm announced in February a new suite of processors as part of its Project Trillium, designed specifically for machine learning and neural network functionality. These are for mobile computing primarily, with the ML processor able to deliver 4.6 trillion operations per second of computing power at an efficiency of three trillion operations per second per watt. The other processor in the Project Trillium suite is for object detection and can run real-time detection with full HD processing at 60fps.
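To put those figures in context, a short Python sketch works through the arithmetic. The operations-per-second and efficiency numbers are those quoted above; the 1,920 x 1,080 pixel resolution is the usual meaning of full HD and is an assumption here, as the announcement figures quoted do not spell it out. The function names are purely illustrative.

```python
# Figures quoted for Arm's ML processor.
ML_OPS_PER_SECOND = 4.6e12           # 4.6 trillion operations per second
ML_OPS_PER_SECOND_PER_WATT = 3.0e12  # three trillion operations per second per watt

# Figures for the object detection processor: full HD at 60fps.
# Assumption: full HD means 1,920 x 1,080 pixels.
FULL_HD_WIDTH = 1920
FULL_HD_HEIGHT = 1080
FRAMES_PER_SECOND = 60


def implied_power_watts(ops_per_second: float, ops_per_second_per_watt: float) -> float:
    """Power draw implied by running at the quoted throughput and efficiency."""
    return ops_per_second / ops_per_second_per_watt


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Number of pixels arriving each second from a video stream."""
    return width * height * fps


if __name__ == "__main__":
    power = implied_power_watts(ML_OPS_PER_SECOND, ML_OPS_PER_SECOND_PER_WATT)
    pixel_rate = pixels_per_second(FULL_HD_WIDTH, FULL_HD_HEIGHT, FRAMES_PER_SECOND)
    print(f"Implied power at full throughput: {power:.2f} W")      # about 1.53 W
    print(f"Full HD at 60fps: {pixel_rate:,} pixels per second")  # 124,416,000
```

Taken at face value, the quoted throughput and efficiency imply a power draw of roughly 1.5W, which is the kind of budget that suits a mobile or embedded device without active cooling.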

‘To me, this [machine learning] is a dramatic breakthrough that, along with the hardware, is enabling vision to spread into tens of thousands of new applications,’ Bier said.

Kleine, at National Instruments, remarked that mainstream vision applications are moving toward deploying on ASICs optimised for processing convolutional neural networks. ‘While we have yet to see this technology penetrate into industrial applications, given its capabilities for increasing processing power while reducing power footprint, it has exciting potential for deployment onto embedded boards,’ he said.

Dedicated hardware for machine learning is still in its infancy, and is something Munkelt noted during the panel discussion that he would like to see more of. ‘It [machine learning] has great [potential], and I wish there was support from the hardware side in order to speed these things up,’ he said.

MVTec’s Halcon software package includes some machine learning functionality designed to make deploying neural networks easier than developing the application with open source tools like Caffe and TensorFlow. Halcon has an embedded version, and MVTec was demonstrating its software by running fast image processing on a variety of embedded boards at the Nuremberg show.

Caption: Qualcomm Technologies' Snapdragon board is designed for mobile devices, but can be used to create embedded vision systems for various applications

In embedded vision outside the factory there is a greater tendency to use open-source software tools over vision libraries. Munkelt noted that engineers have to consider the learning curve for developing the application and the speed of deployment.

‘Time to market is important,’ he said. ‘We have learnt in the mobile sector, time to market is everything. In industry, typically we have longer cycles; we are in the process of shortening these cycles. Time to market is crucial and software tools are of great help.’

Dr Ricardo Ribalda, lead firmware engineer at Q Technology, the inspection company using AMD chips, noted during the show that the AMD platform has significantly cut the time taken to build new products – new Q Technology products are now made in three months, he said. Ribalda also noted that AMD’s APUs are compatible with OpenCV for writing computer vision algorithms and with TensorFlow for neural networks.

Qualcomm’s Farasati explained that the company has invested in Linux and gives embedded vision developers access to the underlying cores inside Snapdragon. ‘We give access through the open source community and a lot of good things are coming out of that,’ he said.

The open source robotics foundation, Open Robotics, in San Francisco, has created a robotics operating system on top of Snapdragon, with access available through open source Linux. Also, when Arrow started supplying the Snapdragon board, Qualcomm purposefully used an open source standard called 96Boards created by Linaro, a Linux-on-Arm consortium. 96Boards gives pre-defined connectors that allow the user to put mezzanine cards on top of the platform. ‘As an end-user, now it’s very easy to pick up a development board and add a camera board on top; you can add a drone board, or a sensor card, or multiple [boards],’ Farasati said. ‘We see a lot of nice projects coming out of this from universities, hackathons, online courses, because the platform is very accessible, and you have a fairly large ecosystem of mezzanine cards. The camera from Basler, I think that’s going to open the doors for this type of development.’

Oliver Barz, key account manager at camera maker and electronic hardware provider Imago Technologies, told Imaging and Machine Vision Europe on the show floor that customers are now demanding Arm processors and a Linux environment for developing embedded vision applications. He said that, increasingly, customers know what the system should do and also have their own algorithms.

Imago Technologies develops intelligent cameras, with its expertise residing mainly in the industrial space. Its VisionCam models are based on dual-core Arm Cortex-A15 processors and dual-core floating point DSPs. Customers can port their own algorithms onto the devices to create their own smart camera. A real-time Ethernet function allows the cameras to support real-time Ethernet protocols such as Profinet. Imago was displaying its first smart line scan camera using the Arm Cortex-A15 processor, along with its VisionBox controller, which uses x86 processors to manage various vision tasks.

An embedded future?

Munkelt observed during the panel discussion that machine vision in industry and embedded vision, with its business-to-consumer applications, are still largely separate. ‘They don’t exchange standards,’ he said, although the G3 consortium of machine vision organisations is proposing an embedded vision standard for industrial applications based on the MIPI interface.

‘They [machine and embedded vision] need to get to know each other in order to see what the benefits are from [each] side,’ he added.

Nevertheless, embedded vision is progressing fast, largely outside factory automation. Bier remarked: ‘We’ve seen an exponential increase [in embedded vision] over the last few years. Many companies and organisations are working to provide more accessible [software] tools and development boards and other resources, and secondly to provide education to engineers so that you don’t have to have a PhD in computer vision to integrate computer vision capabilities into systems.

‘Conferences, training courses, all kinds of open-source projects and designs; the number of things that are available has increased hugely over the last couple of years,’ he added.

Bake observed that vision applications are so complex that no single company can solve the problem alone. He said it was key to cooperate with other companies: ‘The better we can cooperate, the easier it will get for customers.’ He added that educating customers is also important for growth in vision applications.

Bier concluded: ‘The technology has improved so fast – the sensors, the processors, the software – that it’s gotten a bit ahead of our imagination on how to use it. That’s the thing I’m looking for most improvement on: a growth in awareness from system developers across all industries. What can we do if we can add human-like visual perception to our products; how can we make it better, safer, easier to use, more secure, more capable? We’re at a stage with the technology where we are largely limited by what can be imagined.’