Alongside the imaging expertise on show in the exhibition halls, the trade fair will host a panel discussion on embedded vision, its development and its applications on 26 February at 1.30pm in hall 2 (2-510).

Embedded World, which runs from 25 to 27 February, covers all aspects of embedded computing, from the Internet of Things and autonomous systems to connectivity, hardware, software, system-on-chip design and everything in between.

The panel, organised by VDMA Machine Vision, will feature: Jan-Erik Schmitt, vice president of sales at Vision Components; Dr Christopher Scheubel, executive director of Cubemos, a spin-off from Framos; Arndt Bake, chief marketing officer of Basler; Jason Carlson, CEO of Congatec; Markus Levy, director of AI and machine learning technologies at NXP Semiconductors; Bengt Abel of Still, a Kion Group company specialising in automation and logistics; and Frank Schäfer of CST, an industrial inspection company.

In addition, on 27 February a technical conference session on embedded vision will run throughout the day, with speakers from Amazon Web Services, Au-Zone, MVTec Software, Allied Vision, the Khronos Group, Intel, Vision Components, ZHAW Institute of Embedded Systems, the University of Applied Sciences Augsburg, and Synopsys. Topics include machine learning-based image processing for IoT products, edge computing with OpenVino, Mipi cameras, data exchange between FPGAs and GPUs, and security for embedded vision SoCs.

The trade fair will also have a dedicated forum for startups and will present awards for innovative software and hardware. On 27 February, 1,000 final-year engineering students will gather at the fair for a programme designed to promote embedded computing and connect them with potential employers.

--

Scorpion Vision Featured product

Arducam focuses on developing open source hardware and software for single board computers such as Arduino, RPi and Nvidia Jetson. Scorpion Vision, a trusted Arducam partner, has expanded its portfolio of board-level and embedded cameras to meet growing demand.

Arducam cameras are available with fixed, M12 or C-mount lenses. Combined with Scorpion Vision’s premium range of lenses, this gives users great flexibility in their projects.

A recent release is a stereo camera for the RPi with synchronised image and video capture. Two cameras are mounted on a single board, which appears as a single camera on the RPi’s camera port for easy integration. The stereo camera is available with either 5- or 8-megapixel resolution, and its low weight makes it ideal for mounting on small robots.

With the stereo setup, users can create a depth map and experiment with 3D imaging.
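A stereo pair recovers depth by triangulation: once a matching algorithm (for example OpenCV’s StereoBM block matcher) has produced a disparity map from a rectified image pair, each pixel’s depth follows from Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two cameras and d is the disparity in pixels. The minimal Python sketch below assumes rectified images and an already computed disparity map; the focal length and baseline used in the example are hypothetical values, not Arducam specifications.

```python
import math
from typing import List


def disparity_to_depth(disparity_px: List[List[float]],
                       focal_length_px: float,
                       baseline_m: float) -> List[List[float]]:
    """Convert a disparity map (pixels) to a depth map (metres) via Z = f * B / d.

    Assumes a rectified stereo pair. Pixels with zero or negative disparity
    (no stereo match found) are set to math.inf.
    """
    depth = []
    for row in disparity_px:
        depth.append([focal_length_px * baseline_m / d if d > 0 else math.inf
                      for d in row])
    return depth
```

With a hypothetical 1,000-pixel focal length and 5cm baseline, a 10-pixel disparity corresponds to a depth of 5m and a 20-pixel disparity to 2.5m. Because depth is inversely proportional to disparity, a one-pixel matching error costs more depth precision at long range than at short range.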

--

Exhibitors

A number of exhibitors will show vision equipment designed to run on processors from NXP, Nvidia and Xilinx. Basler (2-550) will release its first camera module, along with matching add-on camera kits, to coincide with the launch of NXP’s i.MX 8M Plus applications processor.

The i.MX 8M Plus features a dual-camera image signal processor and, for the first time, a neural network accelerator.

Basler will also present its new AI vision solution kit with cloud connectivity, which gives customers direct access to Amazon Web Services cloud services.

Basler has also expanded its partnership with Nvidia: its Dart Bcon for Mipi camera modules now run on the Jetson platform, including the Jetson Nano and Jetson TX2 series.

Various live demonstrations in the areas of digital signage, security access control and motion analysis (skeleton tracking) will show the possibilities offered by Basler’s embedded vision solutions.

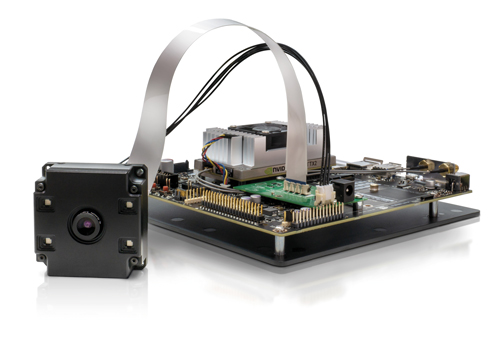

Phytec (1-438) will present its embedded imaging kit i.MX 8M, a platform for customised embedded imaging systems using MVTec’s Halcon software for embedded devices.

The kit contains pre-compiled SD card images for the Halcon demo HPeek.

With HPeek, image processing solution developers can evaluate and benchmark the performance of Halcon on NXP i.MX8 processors.

Halcon brings professional image processing routines, including deep learning algorithms, into embedded vision products.

Phytec offers matched hardware, such as camera and processor modules, as well as a wide range of services for customer-specific system development and manufacturing at its production facilities in Mainz, Germany.

At the show, Phytec will also present its development and manufacturing services for customised designs and series hardware, and visitors can hold an initial project discussion with its experts on site.

MVTec Software will have its own stand (4-203) presenting a number of live demonstrations. For example, a multi-platform setup will show how four embedded boards perform different tasks with Halcon and Merlic, thereby showcasing the wide range of platforms on which MVTec’s software runs.

Another demonstration will illustrate how Merlic software identifies and checks medical test tubes. It uses the Pallas smart camera from MVTec’s Chinese partner Daheng Imaging.

In a third demonstration, five standard deep learning applications will run simultaneously on an Nvidia Xavier: semantic segmentation, object detection, classification, optical character recognition and MVTec’s latest feature, anomaly detection.

In addition, Christoph Wagner, product manager for embedded vision at MVTec, will give a presentation about choosing the right machine vision software at the Embedded World conference on 27 February at 10.30am.

MVTec will give a presentation on Halcon deep learning for embedded devices at the accompanying exhibitors’ forum on 25 February at 1.30pm.

Other vision software on display comes from Kithara Software (4-446). The focus will be on the Kithara RealTime Suite’s functions for automation with EtherCat, real-time image capture and processing, connection to automotive interfaces, and real-time data storage.

Camera manufacturer Vision Components (2-444) will present its range of camera modules with a Mipi CSI-2 interface. These modules enable compact, repeatable OEM designs and easy connection of image sensors to more than 20 single-board computers, including Nvidia Jetson, DragonBoard, all Raspberry Pi boards and all 96Boards.

Vision Components has also integrated non-native Mipi sensors in Mipi camera modules, using a specially developed adapter board. Examples are IMX250 and IMX252 sensors from the Sony Pregius series, which are characterised by high light sensitivity and low dark noise.

The manufacturer’s Linux-based, freely programmable embedded vision systems will also be on display. These cameras and 3D line sensors are based on a Xilinx Zynq SoC, which has long-term availability.

New quad-core embedded cameras provide a performance boost thanks to the onboard Snapdragon 410 processor, with a 1.2GHz clock rate, 1GB RAM and 16GB flash memory. In addition to built-in interfaces such as GigE and 12 GPIOs, the board camera is available with optional extension boards that add an SD card slot and further interfaces: serial, I²C, RS232, DSI, an RJ45 Ethernet adapter and a power interface.

Jan-Erik Schmitt, vice president of sales, will also give a talk about Mipi cameras during the Embedded World conference on 27 February at 2pm.

E-con Systems (2-645) will showcase cameras for embedded platforms, including Nvidia’s Jetson TX1, TX2, Xavier and Nano; NXP’s i.MX 6, i.MX 7 and i.MX 8; Rockchip’s RK3399; and Xilinx FPGAs, with various camera interfaces (Mipi, GMSL and USB).

The firm will introduce its new full HD low-light Mipi camera for the Google Coral development board at the show. E-con Systems will also launch its AR0233-based GMSL2 camera, housed in a solid IP66 enclosure for water, dust and impact resistance, for outdoor applications and continuous operation. In addition, the company will demonstrate eight cameras streaming simultaneously on an Nvidia Jetson Xavier.

Imago Technologies (2-639) will be showing its VisionBox Daytona, ideal for applications that need Nvidia’s Tegra TX2 GPGPU together with camera interfaces, real-time IO and mobile access to data and images.

Users can connect the Daytona to a wifi network or use its integrated 4G modem. Typical GigE cameras can be connected with a single Ethernet cable, and the IO functionality also provides trigger-over-Ethernet.

Also on display will be the latest Arm, I-Core and multi-core DSP-based VisionBox products, now in series production, as well as a demonstration of the VisionCam event-based camera sensors.

Framos (2-647) will present its first 3D industrial camera, the D435e, along with an Intel RealSense-compatible system design kit for skeleton tracking.

The firm’s camera and sensor module portfolio allows vision engineers and developers to evaluate many image sensors on open processor platforms.

ATD Electronique (3A-539) will show Macnica’s EasyMVC camera interface, SLVS-EC Rx IP, paired with Sony’s new high-speed IMX530 sensor. The demonstration runs SLVS-EC v2.0 IP on an Intel Cyclone 10 GX platform.

A second demo pairs the Sony IMX299 sensor with SLVS-EC v1.2 IP, also from Macnica, on a Xilinx Kintex Ultrascale platform. Further demonstrations feature two sensors from Ams: the miniature Naneye M CMOS image sensor, and the new CSG14K global shutter image sensor for machine vision and AOI applications, which supports the 1-inch optical format.

In addition, ATD Electronique has organised a series of presentations in a separate conference room. Highlights include talks from Macnica on SLVS-EC IP solutions; from Sony, with insights into its new SWIR sensors; and from Ams, on small camera modules, their applications and integration, plus NIR-enhanced sensors. A limited number of seats are available on request by email to sales@atdelectronique.com.

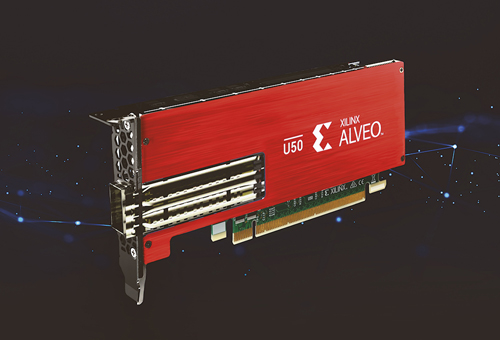

Finally, Xilinx (3A-235) will have demos highlighting its Versal adaptive compute acceleration platform (ACAP), Alveo accelerator cards, Industrial IoT, the Vitis unified software platform, and automotive solutions.

One demonstration on the Xilinx booth will show region-of-interest-based encoding using the video codec unit on the Zynq Ultrascale+ MPSoC.

When streaming video over a link with limited bandwidth, intelligent encoding is needed so that a region of interest (ROI) can be encoded at higher visual quality than the rest of the frame. Using Vitis AI, the Xilinx deep learning processor unit is integrated into the pipeline and generates the ROI mask. The video codec unit then allocates more bits to the ROIs than to the rest of the frame at a given bitrate, improving encoding efficiency. The key markets for this are video surveillance, video conferencing, medical and broadcast.
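The bit-allocation idea can be illustrated with a simplified sketch. It assumes the deep learning processor unit outputs a per-pixel binary mask, downsamples that mask to encoder blocks (a block counts as ROI if any of its pixels is marked), and splits a fixed per-frame bit budget so that each ROI block receives a hypothetical `roi_weight` times as many bits as a background block. Real encoders, including the Zynq Ultrascale+ video codec unit, steer quality through their own rate control and quantisation settings; the function names, block size and weighting here are illustrative assumptions, not the Vitis AI or video codec unit API.

```python
from typing import List


def block_roi_mask(pixel_mask: List[List[int]], block: int) -> List[List[bool]]:
    """Downsample a per-pixel ROI mask (non-zero = ROI) to a per-block mask.

    A block is marked as ROI if any pixel inside it is set.
    """
    height, width = len(pixel_mask), len(pixel_mask[0])
    rows = (height + block - 1) // block  # round up so edge pixels get a block
    cols = (width + block - 1) // block
    blocks = [[False] * cols for _ in range(rows)]
    for y in range(height):
        for x in range(width):
            if pixel_mask[y][x]:
                blocks[y // block][x // block] = True
    return blocks


def allocate_bits(roi_blocks: List[List[bool]], frame_bits: float,
                  roi_weight: float = 4.0) -> List[List[float]]:
    """Split a fixed per-frame bit budget across blocks.

    Each ROI block receives roi_weight times the bits of a background block,
    and the allocations always sum to frame_bits.
    """
    n_total = sum(len(row) for row in roi_blocks)
    n_roi = sum(1 for row in roi_blocks for is_roi in row if is_roi)
    n_background = n_total - n_roi
    unit = frame_bits / (roi_weight * n_roi + n_background)  # bits per background block
    return [[unit * (roi_weight if is_roi else 1.0) for is_roi in row]
            for row in roi_blocks]
```

With one ROI block out of four and `roi_weight=4`, a 700-bit frame budget gives the ROI block 400 bits and each background block 100 bits. The total stays fixed, which is what lets ROI encoding raise quality where it matters without exceeding the channel bitrate.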

Also on display is a machine learning inference solution for edge use cases on the Versal ACAP. Built with Xilinx tools on a heterogeneous compute platform, it uses adaptable engines to integrate live video interfaces with pre- and post-processing elements.

Xilinx will also show real-time 3D physics simulation using the Bullet physics engine on Alveo accelerator boards. Industrial applications benefit from such real-time digital models of devices.

Other demonstrations include a cloud-trained neural network on a connected Xilinx IIoT edge device, along with an automated driving demonstration and path-finding platform, and an ADAS development kit from Xylon, based on the Xilinx Zynq Ultrascale+ MPSoC device.

Xilinx will also give talks on several topics at Embedded World on 26 February, including the Versal AI Core series at 2pm, low-bit CNN implementation and optimisation on FPGAs at 3pm, and emerging SoC performance and power challenges at 4pm.

Other embedded vision product releases

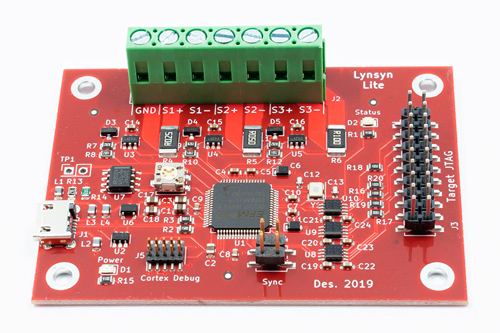

Recently released embedded computing and vision equipment includes Lynsyn Lite, a power measurement tool from Sundance Multiprocessor Technology. It can measure a system’s energy consumption based on application behaviour, identifying the causes of power issues.

The core Lynsyn technology was developed by the Norwegian University of Science and Technology (NTNU) as part of its involvement in the EU’s Tulipp project. It was designed to overcome the challenges of measuring energy consumption when building the project’s reference platform for image processing applications.

Lynsyn Lite has been engineered by NTNU in conjunction with Sundance Multiprocessor Technology, also a member of the Tulipp consortium.

Lynsyn Lite measures the power usage of individual sections of source code deployed in embedded systems. It connects over JTAG to sample the program counters of the system’s processors and correlates the power measurements with the source code, mapping each consumption sample to the application code that was executing. Sampling runs at up to 10kHz.
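The attribution step can be sketched as follows. This is not Lynsyn’s own software: it assumes each sample pairs a program counter with an instantaneous power reading (for instance the sum of the sensors’ readings), and that a symbol table of function address ranges is available, for example extracted from the application’s ELF file. Each sample is treated as representing one sampling period (0.1ms at 10kHz), so a function’s energy is the sum of power times period over the samples whose program counter falls inside it. The function name and data layout are hypothetical.

```python
import bisect
from collections import defaultdict
from typing import Dict, List, Tuple


def attribute_energy(samples: List[Tuple[int, float]],
                     symbols: List[Tuple[int, int, str]],
                     sample_rate_hz: float = 10_000.0) -> Dict[str, float]:
    """Attribute energy (joules) to functions from (program_counter, power_W) samples.

    symbols: (start_addr, end_addr_exclusive, name) tuples, sorted by start_addr.
    Each sample is assumed to represent 1 / sample_rate_hz seconds of execution.
    """
    starts = [start for start, _, _ in symbols]
    dt = 1.0 / sample_rate_hz
    energy: Dict[str, float] = defaultdict(float)
    for pc, power_w in samples:
        # Find the last symbol starting at or before the program counter.
        i = bisect.bisect_right(starts, pc) - 1
        if i >= 0 and pc < symbols[i][1]:
            name = symbols[i][2]
        else:
            name = "<unknown>"  # PC outside every known function range
        energy[name] += power_w * dt
    return dict(energy)
```

For hypothetical functions `capture` at 0x1000-0x10FF and `filter` at 0x1100-0x11FF, one 2W sample in `capture` and one 3W sample in `filter` at 10kHz attribute 0.2mJ and 0.3mJ respectively.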

Lynsyn Lite features three sensors that measure both current and voltage. Although it was designed for application power profiling, primarily of systems based on Arm Cortex-A9, A53 and A57 cores, it can also be used as a generic JTAG programming device with the Xilinx Vivado tool suite, so there is no need to purchase a separate JTAG pod.

The measurement tool is compatible with both Linux and Windows operating systems and includes open source software that both samples and visualises measurement results.

Elsewhere, Active Silicon’s 3G-SDI, HDMI and USB/HDMI autofocus-zoom block cameras deliver high-definition, low-latency video transmission and capture. Measuring 34.7 x 41.5 x 58.4mm, the cameras offer 10x optical zoom along with features including 3D noise reduction, a wide dynamic range mode and motion detection.


Active Silicon’s interface board adds features such as simultaneous HD-SDI and analogue output, simultaneous USB and HDMI output, and HD-VLC output for long-distance transmission. By customising the FPGA firmware, Active Silicon can add application-specific video processing features to the cameras, which can also be controlled over a serial or USB connection.

Finally, Lucid Vision Labs has released Helios Flex, a pre-calibrated time-of-flight Mipi module designed for easy integration into embedded platforms for industrial and robotics applications. The module features Sony’s DepthSense IMX556PLR back-illuminated ToF image sensor and connects to an embedded board based on the Nvidia Jetson TX2. Using four 850nm VCSEL laser diodes, it delivers 640 x 480 depth resolution at distances of up to 6m and runs at 30fps. A software development kit with GPU-accelerated depth processing is included.