Vision sensors with onboard AI are set to be trialled in Rome to ease some of the city's congestion.

Three devices have been installed in Via Settembre in the centre of Rome to provide real-time information about free parking spaces, identify overcrowding on buses, and warn drivers when pedestrians are crossing the road.

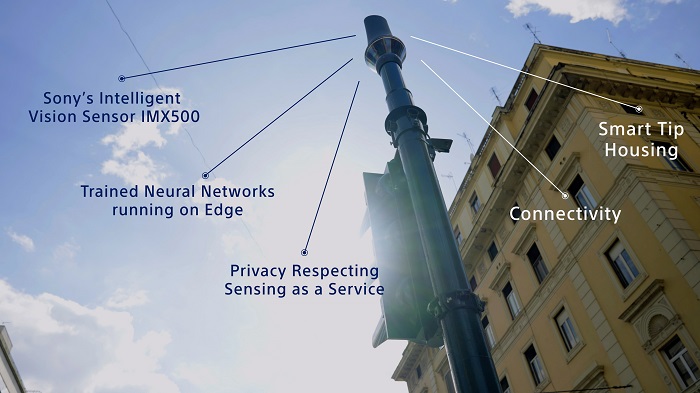

The devices, called Genius Tips, use Sony's IMX500 sensor, which was launched last year and was the first image sensor equipped with AI processing functionality. It has a logic chip for AI processing stacked below the pixel chip.

In the trial, due to begin in June, the sensor will extract metadata about where free parking spaces are located. The information will be streamed in real time, and the coordinates overlaid on a map for a driver to find the parking place.
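As a rough illustration of what such metadata could look like, the sketch below models a parking-space record and serialises only the free spaces for display on a map. The field names (`space_id`, `lat`, `lon`, `occupied`), the helper functions and the coordinates are all hypothetical; the format actually used in the trial is not described here.

```python
from dataclasses import dataclass, asdict
import json


@dataclass
class ParkingSpace:
    # Hypothetical fields, chosen for illustration only.
    space_id: str
    lat: float
    lon: float
    occupied: bool


def free_spaces(spaces):
    """Return only the spaces a driver could park in."""
    return [s for s in spaces if not s.occupied]


def to_message(spaces):
    """Serialise the free spaces as a compact JSON string (no image data)."""
    return json.dumps([asdict(s) for s in free_spaces(spaces)])


# Made-up detections for a single frame.
detections = [
    ParkingSpace("A1", 41.9030, 12.4950, occupied=True),
    ParkingSpace("A2", 41.9031, 12.4951, occupied=False),
    ParkingSpace("A3", 41.9032, 12.4952, occupied=False),
]
message = to_message(detections)
print(message)
```

A message of this kind is a few hundred bytes of text, which is the sort of payload a mapping application could plot directly.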

Antonio Avitabile, managing director of corporate alliance and investment at Sony, emphasised that the Genius Tips are not cameras as no images are stored or leave the sensor – processing happens on the sensor to convert images into data.

The edge processing means the devices put little burden on the network, as only metadata is generated. This, coupled with the fact that different neural networks can be deployed wirelessly on the same hardware, makes the solution scalable to cover the city.

In addition, there are no privacy issues, as only metadata, rather than images, is produced.

Envision, which develops infrastructure for smart cities, built the Genius Tips, which consist of two sensors looking over the road. Along with the parking application, the devices have also been trained for two other uses: to detect a pedestrian’s presence and alert a driver, and to monitor the number of people queuing at bus stops.

Data on queue length and on people getting on and off the bus are processed by the sensor. Envision's software platform aggregates this data and makes it available to operators managing the bus network.
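The aggregation step can be pictured with a minimal sketch such as the one below, which averages reported queue lengths per bus stop. It is an assumption about what aggregation might involve, not Envision's platform; `aggregate_queue_lengths`, the stop identifiers and the readings are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def aggregate_queue_lengths(readings):
    """Average the reported queue length per bus stop.

    `readings` is an iterable of (stop_id, queue_length) tuples;
    both names are hypothetical.
    """
    by_stop = defaultdict(list)
    for stop_id, queue_length in readings:
        by_stop[stop_id].append(queue_length)
    return {stop: mean(lengths) for stop, lengths in by_stop.items()}


# Made-up readings: two from one stop, one from another.
readings = [("stop_1", 4), ("stop_1", 6), ("stop_2", 10)]
summary = aggregate_queue_lengths(readings)
print(summary)
```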

The trial seeks to evaluate the effectiveness of these systems.

A Genius Tip on top of a traffic light

The pixel chip on the IMX500 sensor is back-illuminated, and has approximately 12.3 effective megapixels and a wide angle of view. In addition to the conventional image sensor operation circuit, the logic chip is equipped with Sony’s digital signal processor dedicated to AI processing, and memory for the AI model.

Signals acquired by the pixel chip are run through an image signal processor (ISP), and AI processing is then carried out on the logic chip. The extracted information is output as metadata.

The chip can perform ISP processing and AI processing – 3.1 milliseconds processing for MobileNet V1, according to Sony – on the logic chip, completing the entire process in a single video frame. This design makes it possible to deliver real-time tracking of objects while recording video.
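To see why 3.1 milliseconds fits within a single frame, the arithmetic can be sketched as follows. The 30 frames per second figure is an assumption chosen for illustration, not a number from Sony or the trial, and the check ignores the time taken by ISP processing.

```python
INFERENCE_MS = 3.1  # Sony's quoted MobileNet V1 processing time


def frame_period_ms(fps):
    """Time available per frame, in milliseconds."""
    return 1000.0 / fps


def fits_in_frame(inference_ms, fps):
    """True if inference finishes within one frame period."""
    return inference_ms <= frame_period_ms(fps)


# 30 fps is an assumed frame rate, used only for illustration.
fps = 30
print(round(frame_period_ms(fps), 1))
print(fits_in_frame(INFERENCE_MS, fps))
```

At the assumed 30 frames per second, each frame lasts about 33.3 milliseconds, so the quoted inference time uses roughly a tenth of the frame period.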

The image sensor and this smart city trial are an example of what Michael Tusch of Arm spoke about in an Embedded Vision Summit presentation in 2017 – namely that surveillance cameras will no longer produce video but data, because of the disruptive nature of embedded vision.

Speaking about the Rome trial, Avitabile said: 'We have a vision of achieving more sustainable and liveable cities, and through the IMX500 scalable platform we can substantially accelerate this process.'

The trial in Rome is being conducted with a number of start-ups in the Italian ecosystem supported by Sony Europe – TTM Group is responsible for installing the IMX500 image sensor in the smart tip; Envision developed the smart tips; and Citelum installed the devices on traffic lights.