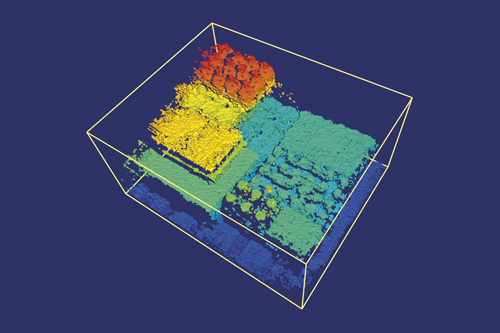

Photoneo's demo of a complete Bin Picking solution with PhoXi 3D Scanner (Image: Photoneo)

Imaging in three dimensions rather than two offers numerous advantages for machines working in the factories of the future, granting them a whole new perspective on the world. Combined with embedded processing and deep learning, this new perspective could soon allow robots to navigate and work in factories autonomously, by enabling them to detect and interact with objects, anticipate human movements and understand gesture commands. However, certain challenges must first be overcome to unlock this potential, such as ensuring standardisation across large sensing ecosystems and improving understanding within industry of what 3D vision can do.

Three-dimensional imaging can be achieved by a variety of methods, each using different mechanics to capture depth information. Imaging firm Framos was recently announced as a supplier of Intel’s RealSense stereovision technology, which uses two cameras and a special-purpose ASIC to calculate a 3D point cloud from the data captured from the two perspectives. A random dot projector can also be added to improve the accuracy of the cameras in indoor applications.
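
The article does not detail how RealSense computes depth internally, but the textbook relationship behind any rectified stereo pair is Z = f·B/d: depth equals the focal length (in pixels) times the camera baseline, divided by the disparity between the two views. The minimal Python sketch below assumes an ideal, already-rectified pinhole pair. The focal length and baseline are hypothetical values, not RealSense specifications.

```python
def stereo_depth_m(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d.

    disparity_px: horizontal shift of the same point between left and right images
    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centres in metres
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


FOCAL_PX = 640.0   # hypothetical focal length
BASELINE_M = 0.05  # hypothetical 5 cm baseline
for d in (64.0, 32.0, 16.0):
    print(f"disparity {d:.0f} px -> depth {stereo_depth_m(d, FOCAL_PX, BASELINE_M):.2f} m")
# prints depths of 0.50 m, 1.00 m and 2.00 m
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points than for near ones. Matching also needs surface texture to find corresponding pixels, which is one reason a projected random dot pattern can help on plain indoor surfaces.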

Photoneo takes a slightly different approach to 3D vision with its PhoXi 3D scanners. These use a laser pattern projector to emit a set of coding patterns onto the target scene, and a single camera interprets the patterns to reconstruct a point cloud. The PhoXi capture and processing pipeline can deliver 16 million measurements per second, either at 3.2 megapixels and five frames per second or at 0.8 megapixels and 20 frames per second.
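
Photoneo does not say which coding patterns PhoXi projects. One widely used scheme for structured light is binary-reflected Gray code. Each projector column receives a unique code, and the bits of that code are projected one stripe image at a time. Every camera pixel can then recover which column illuminated it by thresholding what it saw in each image, and pairing that column with the pixel position allows triangulation. The sketch below covers only the encode/decode step and uses hypothetical function names. It illustrates the general technique, not PhoXi's pipeline.

```python
def gray_encode(n: int) -> int:
    """Binary-reflected Gray code of n: adjacent values differ in exactly one bit."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Invert gray_encode by folding the higher bits back down."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def stripe_patterns(num_columns: int) -> list[list[int]]:
    """One binary stripe image (a row of 0/1 values) per bit, most significant bit first."""
    bits = max(1, (num_columns - 1).bit_length())
    return [
        [(gray_encode(col) >> (bits - 1 - b)) & 1 for col in range(num_columns)]
        for b in range(bits)
    ]


def decode_pixel(observed_bits: list[int]) -> int:
    """Recover the projector column from the bits one camera pixel saw, MSB first."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return gray_decode(g)


patterns = stripe_patterns(16)               # 4 patterns are enough to label 16 columns
seen_by_pixel = [p[11] for p in patterns]   # a camera pixel that happens to view column 11
print(len(patterns), decode_pixel(seen_by_pixel))  # prints: 4 11
```

Gray code is a common choice because neighbouring columns differ in a single bit. A pixel sitting on a stripe edge that misreads that bit is therefore off by at most one column.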

An image of a pallet taken using 3D stereovision. (Image: Framos)

‘All calculations of point cloud and normal vectors are made inside the device, so we don’t waste client computer power on our calculations,’ commented Branislav Puliš, vice president of sales and marketing at Photoneo. ‘Our scanners use [the] structured pattern approach so we cover a larger area in one shot. It helps us to make applications faster and meet tough cycle times.’
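
Puliš mentions that the scanner computes normal vectors as well as the point cloud. The article does not describe Photoneo's method. A simple approach for an organised (image-shaped) point cloud is to take the cross product of the vectors from a point to its right-hand and lower neighbours. The sketch below assumes the camera sits at the origin and uses hypothetical helper names. Production code would typically average over a larger neighbourhood to suppress noise.

```python
import math

Vec = tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec) -> Vec:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length == 0.0:
        raise ValueError("degenerate neighbourhood: cannot define a normal")
    return (v[0] / length, v[1] / length, v[2] / length)


def grid_normal(cloud: list[list[Vec]], row: int, col: int) -> Vec:
    """Unit normal at cloud[row][col] from its right and lower neighbours in an
    organised point cloud, oriented to face a camera at the origin."""
    p = cloud[row][col]
    n = normalize(cross(sub(cloud[row][col + 1], p), sub(cloud[row + 1][col], p)))
    # Flip if needed so the normal points back towards the sensor.
    if n[0] * p[0] + n[1] * p[1] + n[2] * p[2] > 0:
        n = (-n[0], -n[1], -n[2])
    return n


# A flat patch 1 m in front of the camera (z = 1), sampled on a 1 cm grid.
plane = [[(0.01 * c, 0.01 * r, 1.0) for c in range(3)] for r in range(3)]
print(grid_normal(plane, 0, 0))  # approximately (0, 0, -1): facing the camera
```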

PhoXi scanners are suited to robotic bin-picking applications, in which randomly placed, semi-oriented objects are picked from a container or pallet and placed on a conveyor belt.

‘High scan quality allows us to pick parts that are positioned randomly and place them directly where needed without further orientation handling,’ Puliš explained. ‘Moreover, the solution is compatible with multiple industrial robot brands.’

Flight of light

Time-of-flight (ToF) is an alternative and relatively new method of 3D imaging that relies on the constant speed of light to calculate distances and generate point clouds. It measures the time taken for light pulses from camera-mounted LEDs to reflect off objects and return to the camera’s image sensor.
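
For an idealised pulsed system, the timing relationship described above reduces to d = c·t/2. Here t is the measured round-trip time, and the factor of two accounts for the light travelling out and back. This sketch ignores how a real sensor actually measures the delay, which the article does not describe.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def tof_distance_m(t_s: float) -> float:
    """One-way distance from a pulse's round-trip time: d = c * t / 2."""
    return SPEED_OF_LIGHT_M_PER_S * t_s / 2.0


def round_trip_s(distance_m: float) -> float:
    """Round-trip time for a target at distance_m: t = 2 * d / c."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


print(f"{round_trip_s(6.0) * 1e9:.1f} ns")  # prints: 40.0 ns (target at 6 m)
print(f"{tof_distance_m(40e-9):.3f} m")     # prints: 5.996 m (echo after 40 ns)
```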

‘ToF is a direct method of capturing 3D; there’s no reconstruction required … the camera itself outputs rich 3D information,’ explained Ritchie Logan, vice president of business development at ToF specialist Odos Imaging. ‘Unlike other methods of capturing 3D data like laser triangulation or stereo reconstruction where there can be a significant requirement for post processing of data, with ToF everything is done onboard the camera.’

With internal illumination sources, ToF cameras can be offered as single-box solutions that can be integrated easily into industrial environments. ‘You can pick up a ToF camera, bolt it to something and immediately stream calibrated 3D images out of the camera,’ explained Logan. ‘There’s no setup, no calibration – it’s a fast, low-cost method of capturing 3D images quickly in real time.’

Time-of-flight can give information for gesture recognition. (Image: Basler)

Odos Imaging provides its StarForm-Swift camera for ToF imaging, offering VGA resolution at 40 frames per second, with a precision of 1cm at ranges of up to 6 metres. The platform uses seven LEDs emitting 850nm infrared light as its illumination source, and outputs a point cloud that can be used for tasks such as palletisation in logistics, carton dimensioning and profiling, completeness checking and agricultural growth management.

‘These applications are being driven by the fact that ToF is low-priced and easy to integrate,’ said Logan. ‘We also see quite a strong pull in harsh automation environments where humans are unable to work, such as cold food storage areas.’
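
The StarForm-Swift outputs its measurements as a point cloud. A common way to turn a calibrated depth image into 3D points is back-projection through a pinhole camera model: x = (u - c_x)·Z/f_x and y = (v - c_y)·Z/f_y. The intrinsics below (focal length and principal point) are hypothetical placeholders, not Odos Imaging values. The sketch also assumes that each depth value is the distance along the optical axis.

```python
def depth_to_points(depth: list[list[float]], fx: float, fy: float,
                    cx: float, cy: float) -> list[tuple[float, float, float]]:
    """Back-project a depth image through a pinhole model.

    depth[v][u] is the distance along the optical axis in metres for pixel (u, v);
    values <= 0 mark pixels with no valid return and are skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


WIDTH, HEIGHT = 640, 480                     # VGA, as on the StarForm-Swift
FX = FY = 570.0                              # hypothetical focal length in pixels
CX, CY = (WIDTH - 1) / 2, (HEIGHT - 1) / 2   # hypothetical principal point (image centre)

depth = [[2.0] * WIDTH for _ in range(HEIGHT)]  # synthetic flat wall 2 m away
depth[0][0] = 0.0                               # one pixel with no return
cloud = depth_to_points(depth, FX, FY, CX, CY)
print(len(cloud))  # prints: 307199
```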

Camera manufacturer Basler’s ToF offering also provides VGA resolution and 1cm precision, operating at 20fps over ranges of up to 13 metres. It also uses 850nm LED illumination, with one more LED than the StarForm-Swift.

Imaging in the infrared can make ToF cameras susceptible to sunlight, so to reduce this effect Basler’s ToF camera features structures around its lens that block out stray sunlight and other reflections.

‘Our time of flight camera is used in applications such as parcel inspection and categorisation,’ said Mark Hebbel, head of new business development at Basler. ‘It can also be used in production lines, for example in a factory where cupcakes are being made, where the camera would be able to determine the depths of the cupcakes to check whether they have risen properly during baking.’
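
Hebbel’s cupcake example is essentially a height check. Assume a camera looking straight down at the conveyor. A cupcake’s height is then the belt’s depth minus the depth of its top surface. The thresholds below are hypothetical. The median is one simple way (assumed here, not specified by Basler) to stop a single noisy pixel from deciding the result.

```python
from statistics import median


def has_risen(top_depths_mm: list[float], belt_depth_mm: float, min_height_mm: float) -> bool:
    """Pass/fail a cupcake from depth samples taken over its top surface.

    With the camera looking straight down, height above the belt is the belt's
    depth minus the depth of the cupcake's top.
    """
    height_mm = belt_depth_mm - median(top_depths_mm)
    return height_mm >= min_height_mm


BELT_MM = 800.0     # hypothetical camera-to-belt distance
MIN_RISE_MM = 40.0  # hypothetical minimum acceptable height

well_risen = [752.0, 755.0, 751.0, 790.0, 754.0]  # one outlier pixel at 790 mm
flat = [775.0, 778.0, 776.0, 777.0, 774.0]
print(has_risen(well_risen, BELT_MM, MIN_RISE_MM), has_risen(flat, BELT_MM, MIN_RISE_MM))
# prints: True False
```

With a precision of around 1cm, the pass threshold needs to sit comfortably above the sensor noise for a check like this to be reliable.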

According to Hebbel, 640 x 480 pixels (VGA) is the highest resolution currently available for ToF imaging.

‘Because the technology is so new at the moment, the 640 x 480 pixels resolution … can be used for most applications, because there’s simply no higher resolution ToF cameras on the market, as the sensors don’t exist,’ explained Hebbel.

‘VGA is a sweet spot that delivers sufficient angular resolution to be able to see small objects at long ranges. However, it is also small enough resolution that the data generated is easily manageable,’ Logan added.
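
Logan’s point about angular resolution can be made concrete. The average width of scene that each pixel covers grows linearly with range. The sketch assumes a hypothetical 60-degree horizontal field of view, since the article gives no field-of-view figures. It compares a 640-pixel-wide VGA sensor with a 320-pixel-wide one at the 6 m range quoted for the StarForm-Swift.

```python
import math


def pixel_footprint_mm(range_m: float, fov_deg: float, pixels: int) -> float:
    """Average width of scene covered by one pixel at range_m, for a flat scene
    perpendicular to the optical axis and a horizontal field of view of fov_deg."""
    scene_width_m = 2.0 * range_m * math.tan(math.radians(fov_deg) / 2.0)
    return 1000.0 * scene_width_m / pixels


FOV_DEG = 60.0  # hypothetical horizontal field of view
for width_px in (320, 640):
    print(f"{width_px} px: {pixel_footprint_mm(6.0, FOV_DEG, width_px):.1f} mm per pixel at 6 m")
# prints 21.7 mm for 320 px and 10.8 mm for 640 px
```

Halving the pixel count doubles the patch each pixel averages over, so small objects at long range blur into their surroundings sooner.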

Certain challenges occur when designing ToF imaging systems. ‘When taking measurements based on the speed of light, the time it takes electrons to travel around the electrical circuit needs to be taken into account,’ explained Hebbel. ‘Therefore the routing on the PCBs affects the time delay and the whole camera needs to be calibrated in order to achieve accuracies such as +/-1cm. This can be complicated to achieve, hence why there aren’t that many ToF cameras on the market right now.’
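
Hebbel’s calibration point follows from the same d = c·t/2 relationship. A ±1cm accuracy target leaves only about 67 picoseconds of round-trip timing error, so even a fraction of a nanosecond of uncompensated delay in the electronics shifts every reading. The sketch below models the simplest case: a single constant delay, removed by measuring a target at a known distance. The 0.5 ns delay is hypothetical, and a real camera calibration is likely to be considerably more involved.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def round_trip_s(d_m: float) -> float:
    """Round-trip time for a target at d_m metres: t = 2d / c."""
    return 2.0 * d_m / SPEED_OF_LIGHT_M_PER_S


def measured_distance_m(t_s: float, fixed_delay_s: float = 0.0) -> float:
    """Convert a timed round trip to distance after removing a constant signal-path delay."""
    return SPEED_OF_LIGHT_M_PER_S * (t_s - fixed_delay_s) / 2.0


# Timing budget: 1 cm of range corresponds to roughly 67 ps of round-trip time.
print(f"{round_trip_s(0.01) * 1e12:.1f} ps per cm")  # prints: 66.7 ps per cm

DELAY_S = 0.5e-9  # hypothetical constant delay introduced by PCB routing

# Uncalibrated: a target at exactly 3 m appears about 7.5 cm too far away.
timed = round_trip_s(3.0) + DELAY_S
print(f"uncalibrated: {measured_distance_m(timed):.3f} m")  # prints: uncalibrated: 3.075 m

# Calibration: attribute the excess time at the known 3 m target to a fixed offset,
# then subtract it from later measurements (here a target at 5 m).
offset_s = timed - round_trip_s(3.0)
later = round_trip_s(5.0) + DELAY_S
print(f"calibrated: {measured_distance_m(later, offset_s):.3f} m")  # prints: calibrated: 5.000 m
```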

Imaging at the edge

While ToF requires little processing to output 3D images, it still produces gigabytes of uncompressed data that need to be stored and processed.

‘This can be done either in real time at the point of imaging – at the edge – or after sending the data into the cloud to be used in an Internet of Things environment,’ said Hebbel. ‘While the cloud has enough power to process this data, transferring the data into the cloud and then retrieving it again can be a challenge.’
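
To give a sense of the volumes involved, the raw data rate is simply width × height × frame rate × bytes per pixel. The output formats below (16-bit depth values, or XYZ points stored as three 32-bit floats) are assumptions for illustration. The frame size and 40 fps rate match the StarForm-Swift figures quoted earlier.

```python
def data_rate_bytes_per_s(width: int, height: int, fps: float, bytes_per_pixel: int) -> float:
    """Uncompressed data rate for a sensor streaming every pixel of every frame."""
    return width * height * fps * bytes_per_pixel


# Hypothetical output formats; 640 x 480 at 40 fps matches the StarForm-Swift figures.
for label, bpp in (("16-bit depth only", 2), ("XYZ as three float32 values", 12)):
    rate = data_rate_bytes_per_s(640, 480, 40, bpp)
    print(f"{label}: {rate / 1e6:.1f} MB/s, {rate * 60 / 1e9:.2f} GB per minute")
# prints 24.6 MB/s and 1.47 GB per minute, then 147.5 MB/s and 8.85 GB per minute
```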

Manipulating imaging data at the edge, also known as embedded processing, is an area of increasing momentum and discussion in the vision community, and involves storing and manipulating information near the source of capture. Doing so enables more compact imaging systems, as well as a range of new applications in areas where traditional vision systems would struggle to perform.

Odos Imaging is using embedded vision to transform its camera from being a standard data collection tool to being an intelligent deliverer of information. ‘We are developing on-board processing for the StarForm-Swift, and can run applications on the current hardware,’ said Logan. ‘Instead of providing a device that’s just an acquisition source for image data, we are offering a programmable system that can make its own decisions when observing a scene. That’s something most of the industry is moving towards, it will be critical for forward-thinking applications.’

Embedded processing, while increasing the capability of vision systems, also introduces factors that must be accounted for at deployment, according to Hebbel: ‘If processing is done locally then a lot of energy is needed to run numerous processors, which requires a large battery that will affect the performance of any autonomous vehicles or drones that it’s attached to.’ This will have to be taken into consideration when equipping robots and vehicles with embedded vision systems that allow them to navigate and interact in future factory environments – a key role for vision technology in Industry 4.0.

Robotic eyes

‘Understanding of the environment by robotic systems will be a big game changer for the new industrial revolution,’ stated Puliš, of Photoneo. ‘Robots, as machines, are nearly perfect, being precise and robust, but they are blind. With 3D vision, they will get their eyes. Their usage will be much wider with improved flexibility.

‘The eyes and brains of robots will come in the form of new 3D cameras and deep learning systems that will not only be able to identify what has happened based on a past experience, but they will be able to predict what may happen in the future.’

Vision-equipped logistics robots are a potential example, offering the ability to pick up containers and move around a facility autonomously. Imaging in 3D would enable them to anticipate structures and objects, recognise where humans are, and understand where they are going and how to navigate around them safely.

Simultaneous localisation and mapping (SLAM) algorithms will play a key role in this, as they enable a machine to build a digital map of its surroundings while tracking its own position within that map. According to speakers at the recent EMVA Embedded Vision Europe conference in Stuttgart, SLAM was difficult to achieve as recently as two years ago, but recent developments have made it a reality.
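
A full SLAM system estimates the robot’s pose and the map together, which is well beyond a short example. The sketch below shows only the mapping half, under the simplifying assumption that the pose is already known: each range return is projected into a sparse occupancy grid. Running it with a deliberately wrong pose shows why localisation and mapping have to be solved jointly. The names, the 0.25 m cell size and the scan format are hypothetical.

```python
import math

Cell = tuple[int, int]


def mark_scan(grid: dict[Cell, int], pose: tuple[float, float, float],
              scan: list[tuple[float, float]], cell_m: float = 0.25) -> None:
    """Add one range scan to a sparse occupancy grid, assuming the pose is known.

    pose: (x, y, heading) of the robot in the map frame, in metres and radians.
    scan: (bearing relative to the heading in radians, range in metres) pairs.
    Each return increments a hit counter for the grid cell it lands in.
    """
    x, y, heading = pose
    for bearing, rng in scan:
        angle = heading + bearing
        hit_x = x + rng * math.cos(angle)
        hit_y = y + rng * math.sin(angle)
        cell = (math.floor(hit_x / cell_m), math.floor(hit_y / cell_m))
        grid[cell] = grid.get(cell, 0) + 1


# Correct poses: both observations of the wall land in the same cell.
grid: dict[Cell, int] = {}
mark_scan(grid, (0.0, 0.0, 0.0), [(0.0, 2.0)])  # wall straight ahead, 2 m away
mark_scan(grid, (1.0, 0.0, 0.0), [(0.0, 1.0)])  # robot has driven 1 m towards it
print(grid)  # prints: {(8, 0): 2}

# Wrong pose (robot believes it only moved 0.5 m): the wall smears across two cells.
smeared: dict[Cell, int] = {}
mark_scan(smeared, (0.0, 0.0, 0.0), [(0.0, 2.0)])
mark_scan(smeared, (0.5, 0.0, 0.0), [(0.0, 1.0)])
print(smeared)  # prints: {(8, 0): 1, (6, 0): 1}
```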

Further advances in deep learning could extend this perceptive capability so that robots understand gesture commands from humans, allowing them to stop or move out of the way when told to do so. In such applications, a 3D camera would record the spatial information of the gesture, which would then be fed into middleware for body, facial and gesture recognition and interpreted using deep learning.

‘In the future, manufacturing robots could be taught by gestures and examples to do specific tasks, such as turning a screw,’ suggested Dr Christopher Scheubel, who is responsible for IP and business development at Framos. ‘A person would demonstrate to the robot how to turn and fix a screw. The robot would then be able to perform that action with other screws of different shapes and that aren’t in exactly the same position as it had learned, and could then guide itself to each screw.’

According to Scheubel, these kinds of processes are still under development. He added that increasing processing power and the low price of 3D imaging are driving the development of these natural interactions with devices. Deep learning software is also enabling this technology, and is advancing rapidly thanks to progress made by companies such as Google, Amazon and Apple in the consumer market.

‘What we are currently doing at Framos – and where the industry is going right now – is transferring these developments over from the consumer market to the industrial sector,’ said Scheubel. ‘Enabling this natural interaction with robots through gestures and speech will be one of the next steps in industry, allowing for an easier transfer of knowledge from human to machine.’

Industrial ecosystems

The challenge of integrating 3D cameras into these future environments, according to Hebbel and Scheubel, is to combine and standardise them with other sensors. ‘Having them as part of a real-time system that changes environment as it moves around a building requires the development of huge ecosystems between a number of sensors from different partners, which is very complicated to achieve at the moment,’ confirmed Hebbel.

This ability to communicate with an intelligent factory environment is what will transform 3D cameras from being standard image capturing devices to valuable sources of information in Industry 4.0 environments, added Logan, of Odos Imaging. ‘ToF is one tool in the toolbox of 3D sensing capability,’ he said. ‘In practice, Industry 4.0 and IoT will need all of these tools.’

The jury is still out on how the IoT will operate, according to Hebbel: ‘No one company can define what the IoT is going to look like. Huge ecosystems are being built up by Microsoft and other big players to get sensor data into the cloud from multiple edge processors. It’s an exciting time that’s bringing a lot of people together.’

The barriers and future of 3D vision

One of the main challenges facing 3D vision providers is a lack of knowledge about the technology within industry, according to Photoneo’s Puliš.

‘A lot of customers have a lack of information about 3D or they have obsolete information,’ Puliš said. ‘This causes distrust of 3D and represents a barrier for further development.

‘So many times we have to be evangelists of 3D and show what is possible. Many times customers prefer 2D custom solutions because they know the technology, but at the end of the day, they pay more because of the time spent on installation and further maintenance compared to 3D.’

Hebbel agreed, adding that the higher price of 3D cameras is leading to customers attempting to solve problems using 2D alternatives. ‘They’d be better off using 3D cameras,’ he said. ‘We are in communication with sensor producers, trying to bring prices down so more cameras can be sold and a larger market generated.’

With the accuracy, range and cost of 3D imaging set to improve as the technology develops, this larger market should see 3D vision find its way into a range of new industrial and commercial applications.

‘Biometrics is a very interesting area for vision,’ continued Hebbel. ‘Once cameras become cheap enough, they’ll work their way into retail shops to observe customers and their reactions. ToF cameras could also be used in a similar way to lidar on autonomous vehicles.’

While Basler’s and Odos Imaging’s ToF cameras use infrared LEDs as their illumination source, Osela, a manufacturer of structured lighting and laser illumination systems, is introducing a new laser-based time-of-flight illuminator (TOFI). It emits nanosecond laser pulses at wavelengths of 685nm, 808nm and 860nm, and provides uniform illumination that maintains optical power density over long ranges.

The TOFI can be used in any ToF application, according to Nicolas Cadieux, CEO of Osela. However, Osela supplies the TOFI only to ToF camera manufacturers, for integration into the camera housing, or to end users who want to develop their own ToF system.

Unlike traditional LEDs, whose light diverges strongly, the TOFI’s laser source greatly reduces light loss and provides highly homogeneous illumination. This makes it well suited to high-energy pulse delivery in long-range industrial depth sensing, eye-safe gesture recognition and volume measurement.
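
The effect of divergence can be illustrated with a simple geometric model. For a uniform circular beam, the average power density at range R is P/(π·(R·tan(θ/2))²), so it drops with the square of range and with the square of tan(θ/2). The full cone angles below are hypothetical stand-ins for a wide LED emitter and a tighter laser illuminator. Only the 2W figure comes from the TOFI specification, and the model ignores emitter size and atmospheric loss.

```python
import math


def power_density_w_per_m2(power_w: float, full_angle_deg: float, range_m: float) -> float:
    """Average irradiance of a uniform circular beam at range_m.

    Assumes a point-like emitter and no atmospheric loss, so the spot radius is
    range * tan(half-angle).
    """
    spot_radius_m = range_m * math.tan(math.radians(full_angle_deg) / 2.0)
    return power_w / (math.pi * spot_radius_m ** 2)


PULSE_POWER_W = 2.0  # TOFI output power quoted in the article
wide = power_density_w_per_m2(PULSE_POWER_W, 90.0, 10.0)    # hypothetical wide LED-like cone
narrow = power_density_w_per_m2(PULSE_POWER_W, 30.0, 10.0)  # hypothetical tighter laser cone
print(f"wide: {wide:.4f} W/m^2, narrow: {narrow:.4f} W/m^2 at 10 m")
print(f"narrow cone delivers {narrow / wide:.1f}x the power density")
```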

Requiring only a 5V power supply, the TOFI’s circuitry delivers high-current pulses driven by a simple low-impedance, high-speed digital signal. This allows it to output 2W of power with rise and fall times of less than 1ns.