The 3D imaging market is set to be transformed by investment pumped into the automotive and consumer sectors. Last year, in this magazine, Yole Développement’s Dr Guillaume Girardin said those markets would push the 3D sensing market beyond $18bn by 2023.

Automotive is going through a self-driving revolution, which requires extensive sensing capability. One key technology is lidar, or light detection and ranging, which pulses out laser light and uses the returning signal to build a map of the environment. There are many variations of the technology: differences in the laser wavelength, in the method used to scan the laser light, and in other intricacies. Bill Gates is funding a lidar startup, Lumotive, whose beam-steering technology is based on metasurfaces; BMW has signed an agreement with Israeli firm Innoviz for its solid-state lidar; and Outsight, at the recent Autosens conference in Brussels, introduced a 3D camera that combines lidar with hyperspectral analysis.
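The ranging principle behind pulsed lidar fits in a few lines: a pulse travels to the target and back, so the range is half the round-trip time multiplied by the speed of light. This is a minimal sketch assuming an ideal direct time-of-flight measurement; the function names are hypothetical, and real lidar units add beam scanning, calibration and multi-echo handling on top.

```python
# Minimal sketch of direct (pulsed) time-of-flight ranging, the principle behind
# many lidars. Assumes an ideal round-trip timing measurement; scanning,
# calibration and multi-echo handling are not modelled.

SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum


def range_from_round_trip(t_round_trip_s: float) -> float:
    """Distance to the target in metres, given the pulse's round-trip time in seconds."""
    if t_round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The light travels out and back, so the one-way distance is half the path.
    return SPEED_OF_LIGHT * t_round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Inverse of range_from_round_trip: round-trip time in seconds for distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


if __name__ == "__main__":
    t = round_trip_for_range(30.0)  # a target 30 m away: roughly 200 ns round trip
    print(f"{t * 1e9:.1f} ns -> {range_from_round_trip(t):.3f} m")
```

At this timescale, 1mm of range corresponds to only about 6.7ps of round-trip time, which is why timing precision matters so much for depth accuracy.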

In the consumer market, Apple’s iPhone X and phones from Xiaomi, Huawei and others have 3D sensing technology for face recognition.

All this investment in 3D sensing bodes well for industrial imaging, as technology developed for larger markets, like consumer, trickles down to the niche machine vision sector. Sony, for instance, is developing time-of-flight (ToF) sensing technology for applications such as gesture control in cars or mobile devices. It now offers its DepthSense ToF sensor, which combines CMOS sensor technology with the ToF technology of SoftKinetic, a company Sony bought in 2015. The DepthSense sensor is already used in industrial cameras such as Lucid Vision Labs’ Helios and Basler’s Blaze 3D camera. Helios delivers 640 x 480-pixel depth resolution at a 6m working distance, using four 850nm VCSELs (vertical-cavity surface-emitting lasers).

ToF, which operates on a similar principle to lidar but without scanning the laser beam, has its place among industrial 3D solutions. The technique does not offer very precise depth data, but it is sufficient for applications in logistics, for example, for measuring the dimensions of boxes. Other 3D imaging methods include structured light, like systems provided by Isra Vision; stereovision, such as the technology from Scorpion Vision or the new Visionary S stereo camera from Sick; and triangulation, like that from LMI Technologies. Each has its benefits and flaws, and applications for which it works well.
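Many ToF cameras do not time a single pulse directly; instead they emit amplitude-modulated light and measure the phase shift of the returning signal (indirect ToF), converting it to distance with d = c·φ/(4πf). The sketch below assumes the common four-sample scheme, with correlation samples taken at 0°, 90°, 180° and 270° and modelled as c_k = B + A·cos(φ − k·π/2). It is not a description of any specific camera’s pipeline, and the 20MHz modulation frequency and helper names are illustrative assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def phase_from_samples(c0, c1, c2, c3):
    """Phase shift in radians, in [0, 2*pi), from four correlation samples.

    Assumes c_k = B + A*cos(phi - k*pi/2), so c1 - c3 is proportional to sin(phi)
    and c0 - c2 to cos(phi); the constant offset B cancels out.
    """
    return math.atan2(c1 - c3, c0 - c2) % (2.0 * math.pi)


def distance_from_phase(phi, f_mod_hz):
    """Distance in metres: d = c * phi / (4 * pi * f_mod)."""
    return SPEED_OF_LIGHT * phi / (4.0 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz):
    """Beyond c / (2 * f_mod) the phase wraps around and distances alias."""
    return SPEED_OF_LIGHT / (2.0 * f_mod_hz)


def simulate_samples(distance_m, f_mod_hz, amplitude=1.0, offset=2.0):
    """Ideal, noise-free samples for a target at distance_m (useful for testing)."""
    phi = 4.0 * math.pi * f_mod_hz * distance_m / SPEED_OF_LIGHT
    return [offset + amplitude * math.cos(phi - k * math.pi / 2.0) for k in range(4)]


if __name__ == "__main__":
    f = 20e6  # illustrative 20 MHz modulation frequency
    samples = simulate_samples(2.5, f)
    print(distance_from_phase(phase_from_samples(*samples), f))
```

Raising the modulation frequency improves depth resolution but shortens the unambiguous range: at 20MHz it is c/(2f), about 7.5m, so a target at 8m would be reported at roughly 0.5m. Combining measurements at several modulation frequencies is a common remedy.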

Slovakian firm Photoneo won the 2018 Vision Award, presented at the Vision show in Stuttgart, for its parallel structured light technology, which can capture around half a million 3D points at 20fps. Each sub-pixel in Photoneo’s sensor can be controlled by a unique modulation signal. The camera uses structured light, but rather than projecting a sequence of coded patterns, it transfers the individual coded patterns to the sensor’s sub-pixels, which are all sampled at one point in time. This means the camera projects one pattern and captures one image, but can extract multiple virtual images from it, each as though illuminated by a different light pattern. It is also possible to switch the sensor into a sequential mode to obtain the full 2-megapixel resolution with metrology-grade quality. The technology therefore offers the high resolution and accuracy of structured light, while also being able to capture objects in motion.
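The idea of getting several virtual images from one exposure can be illustrated with a generic sketch. It assumes, hypothetically, that the sensor is tiled into square super-pixels and that the sub-pixel at the same position in every tile carries the same modulation code, with each code acting as one bit of a binary stripe code. Photoneo’s actual sub-pixel layout, codes and decoding are not described here, so the tile layout, threshold and function names are all illustrative.

```python
def split_virtual_images(raw, tile):
    """Split one raw frame (list of rows) into tile*tile virtual images.

    Hypothetical layout: sub-pixel (dy, dx) inside every tile x tile super-pixel
    shares one modulation code, so gathering those sub-pixels across the frame
    yields one lower-resolution virtual image per code.
    """
    h = len(raw)
    w = len(raw[0]) if h else 0
    if h % tile or w % tile:
        raise ValueError("frame size must be a multiple of the tile size")
    return {(dy, dx): [row[dx::tile] for row in raw[dy::tile]]
            for dy in range(tile) for dx in range(tile)}


def decode_codes(virtual, order, threshold):
    """Threshold each virtual image into one bit and combine the bits per super-pixel.

    `order` lists sub-pixel positions from least- to most-significant bit; the
    result is an integer code (e.g. a projector stripe index) per super-pixel.
    """
    first = virtual[order[0]]
    h, w = len(first), len(first[0])
    codes = [[0] * w for _ in range(h)]
    for bit, key in enumerate(order):
        img = virtual[key]
        for y in range(h):
            for x in range(w):
                if img[y][x] > threshold:
                    codes[y][x] |= 1 << bit
    return codes


if __name__ == "__main__":
    raw = [[100, 0, 0, 100],
           [100, 100, 0, 0]]
    virtual = split_virtual_images(raw, 2)
    print(decode_codes(virtual, [(0, 0), (0, 1), (1, 0), (1, 1)], 50))
```

In this sketch the price of single-shot capture is spatial resolution: each virtual image has 1/tile² of the sensor’s pixels, which is one reason a sequential, full-resolution mode is useful for static scenes.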

Software solutions

There are many ways to analyse 3D data, but Luzia Beisiegel, product owner of the Halcon library at MVTec Software, recommends doing as much as possible in 2D space, ‘because it is simpler, more intuitive and faster’.

Every 3D application starts with a pre-processing step to remove unnecessary information, such as outliers, from the point cloud. Beisiegel explained that this is normally based on a standard blob analysis applied to the z-mapping, which she said is much more intuitive and faster than performing the same operations in 3D space on non-ordered point clouds.
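A blob-based outlier filter on a z-mapping can be sketched as a connected-component pass over the height grid. In this minimal sketch, which is a generic illustration and not MVTec’s implementation, two neighbouring pixels belong to the same blob only if their heights differ by at most a hypothetical `max_step`, and blobs smaller than `min_area` pixels are treated as outliers and removed; `None` marks missing data.

```python
from collections import deque


def remove_small_blobs(zmap, min_area, max_step):
    """Return a copy of zmap with small blobs of valid pixels replaced by None.

    zmap is a list of rows of heights (None marks missing data). Two 4-connected
    pixels belong to the same blob if their heights differ by at most max_step,
    so an isolated spike above a surface forms its own tiny blob.
    """
    h = len(zmap)
    w = len(zmap[0]) if h else 0
    out = [row[:] for row in zmap]
    seen = [[False] * w for _ in range(h)]
    for sy in range(h):
        for sx in range(w):
            if seen[sy][sx] or zmap[sy][sx] is None:
                continue
            # Flood-fill one blob with a breadth-first search.
            seen[sy][sx] = True
            blob, queue = [], deque([(sy, sx)])
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and zmap[ny][nx] is not None
                            and abs(zmap[ny][nx] - zmap[y][x]) <= max_step):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Blobs smaller than min_area are treated as outliers and removed.
            if len(blob) < min_area:
                for y, x in blob:
                    out[y][x] = None
    return out
```

Because the z-mapping is an ordinary 2D grid, the neighbours of each pixel are simply the adjacent array cells, which is what makes this cheaper than the same operation on an unordered cloud.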

The latest version of Halcon, 19.11, includes a function called Generic Box Finder, which allows the user to find boxes of different sizes within a predefined range of height, width and depth, based on point cloud information. It addresses applications that have to handle boxes of different, unknown sizes, such as those in the logistics and pharmaceutical industries.

‘The requirement for using the Generic Box Finder is that point clouds are ordered,’ said Beisiegel. ‘This means that 3D point cloud co-ordinates can be mapped onto 2D image co-ordinates. The resulting images are called xyz-mappings and can be attached as attributes, for instance, to the point cloud format om3d.’
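One common way to obtain such xyz-mappings is to back-project a depth image through a pinhole camera model, producing X, Y and Z images of the same size as the sensor. This is a minimal sketch assuming a distortion-free pinhole camera with hypothetical intrinsics `fx`, `fy` (focal lengths in pixels) and `cx`, `cy` (principal point), and depth measured along the optical axis; many 3D cameras deliver calibrated xyz data directly instead.

```python
def depth_to_xyz_mapping(depth, fx, fy, cx, cy):
    """Build X, Y and Z images from a depth image using a pinhole camera model.

    depth[v][u] is the distance along the optical axis, or None if missing.
    The point seen at pixel (u, v) is (X[v][u], Y[v][u], Z[v][u]), so the
    3D points stay ordered by their 2D image co-ordinates.
    """
    X, Y, Z = [], [], []
    for v, row in enumerate(depth):
        xr, yr, zr = [], [], []
        for u, z in enumerate(row):
            if z is None:
                # Keep the grid shape intact: missing pixels stay missing in all three images.
                xr.append(None)
                yr.append(None)
                zr.append(None)
            else:
                xr.append((u - cx) * z / fx)
                yr.append((v - cy) * z / fy)
                zr.append(z)
        X.append(xr)
        Y.append(yr)
        Z.append(zr)
    return X, Y, Z
```

The design point is that the three images keep the sensor’s grid: the neighbours of a 3D point are found by looking at adjacent pixels rather than by searching the cloud.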

Most of the relevant 3D operators in Halcon, such as the Sample Object Model 3D operator, have an integrated mapping mode. This mode is around five times faster than working in 3D space on non-ordered point clouds, said Beisiegel.
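Why an ordered cloud helps can be seen by comparing two generic ways of thinning out a point cloud. This is a hedged illustration, not Halcon’s implementation of Sample Object Model 3D: on an ordered grid, keeping every n-th row and column is a plain slice, whereas on an unordered list a common approach (voxel-grid sampling) has to hash every point into a 3D cell. The function names and the voxel size are assumptions.

```python
import math


def subsample_ordered(grid, step):
    """Ordered cloud (H x W grid of points): keep every step-th row and column.

    Neighbourhood is implicit in the grid, so this is a plain slice with no search.
    """
    return [row[::step] for row in grid[::step]]


def voxel_subsample(points, voxel):
    """Unordered cloud: keep the first point that falls into each cubic voxel.

    Every point has to be hashed into a 3D cell, because nothing about the list
    order says which points are spatially close.
    """
    kept = {}
    for p in points:
        key = tuple(math.floor(c / voxel) for c in p)
        kept.setdefault(key, p)
    return list(kept.values())
```

The two are not equivalent: grid striding keeps uniform density in image space rather than metric space, so distant surfaces end up sparser than near ones. Whether that matters depends on the application.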

‘On Windows systems, it is difficult to reach real-time 3D image processing,’ she added. ‘However, we are always working on improving the speed of our operators, including our 3D operators. We do this by using ordered point clouds, and we have already most of these modes integrated in Halcon.’

A 3D application in which the height image encodes the z co-ordinate, mapped onto 2D co-ordinates, as grey values can be processed on embedded hardware. According to Beisiegel, many 3D applications can be solved in this way, such as reading embossed text and numbers, detecting surface defects or measuring heights. None of these needs much memory or a powerful CPU, because they are based on 2D information. Point-cloud processing, on the other hand, requires much more memory, because there is more information to process.
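Working on a height image really is ordinary 2D processing, as this minimal sketch shows: grey values are converted to heights with a linear scale and offset, and defects are pixels that deviate from a flat reference by more than a tolerance. The scale, offset, reference and tolerance are hypothetical calibration values; real sensors document their own grey-value encoding.

```python
def grey_to_height(grey, scale_mm, offset_mm):
    """Convert a height image's grey values to millimetres: z = offset + scale * g."""
    return [[offset_mm + scale_mm * g for g in row] for row in grey]


def find_defects(height_mm, reference_mm, tolerance_mm):
    """(row, col) of every pixel deviating from a flat reference by more than tolerance."""
    return [(r, c)
            for r, row in enumerate(height_mm)
            for c, z in enumerate(row)
            if abs(z - reference_mm) > tolerance_mm]


def max_height(height_mm):
    """Highest point in the height image, e.g. for a simple height measurement."""
    return max(max(row) for row in height_mm)


if __name__ == "__main__":
    grey = [[100, 100],
            [100, 160]]
    h = grey_to_height(grey, scale_mm=0.01, offset_mm=0.0)  # hypothetical 0.01 mm per grey level
    print(find_defects(h, reference_mm=1.0, tolerance_mm=0.2), max_height(h))
```

The memory argument is simple arithmetic: a one-megapixel 16-bit height image takes 2MB, whereas the same data stored as a point cloud of three 32-bit floats per point takes 12MB.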

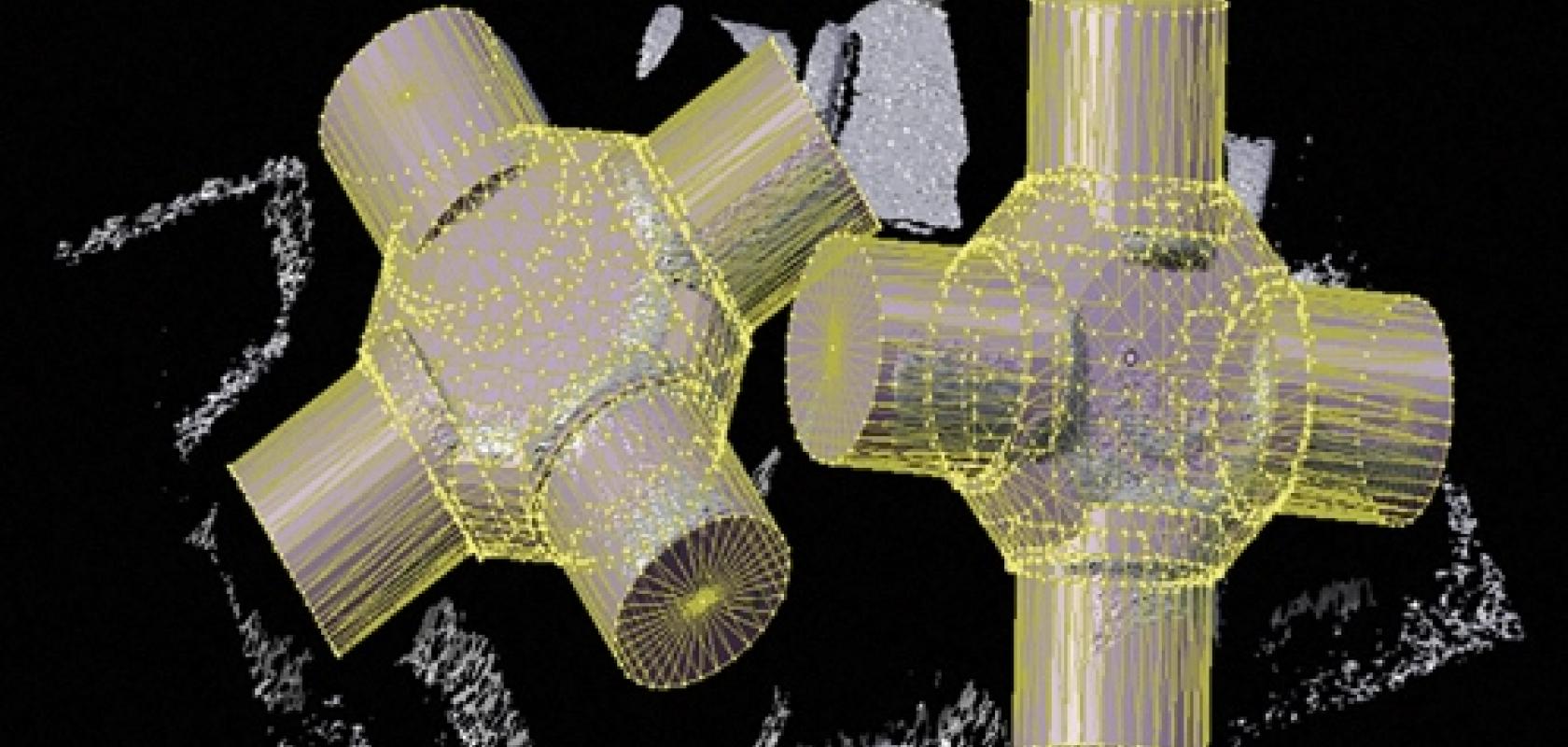

Standard 3D applications in industrial automation – such as in the assembly sector – include 3D measurement, bin picking and surface comparison. In some cases, especially for more robust bin picking, the shape of the part is known in the form of a CAD model.

Other markets include logistics and agriculture, neither of which generally has stringent accuracy requirements; here, the main challenge is handling objects of unknown shape and size.

There are also pick-and-place applications in which the shape is only partially known. A 3D surface is described by 3D point co-ordinates and normal directions. Based on those two attributes, a point cloud can be segmented according to different features, such as the difference between neighbouring normals, curvature, or the area of the surface (the number of points). In Halcon, it is possible to segment objects based on normal directions or other surface information.
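Segmentation by the difference of neighbouring normals can be sketched on an ordered cloud in two steps: estimate a normal at each pixel from the cross product of its right and lower neighbour differences, then grow regions across 4-connected pixels whose normals differ by less than an angle threshold. This is a generic region-growing sketch, not Halcon’s segmentation operator; it assumes a fully valid grid, and `max_angle_deg` is a hypothetical parameter.

```python
import math
from collections import deque


def grid_normals(X, Y, Z):
    """Unit normal per pixel of an ordered cloud from forward differences.

    The last row and column have no lower/right neighbour and get None.
    """
    h, w = len(Z), len(Z[0])
    normals = [[None] * w for _ in range(h)]
    for v in range(h - 1):
        for u in range(w - 1):
            p = (X[v][u], Y[v][u], Z[v][u])
            a = [X[v][u + 1] - p[0], Y[v][u + 1] - p[1], Z[v][u + 1] - p[2]]
            b = [X[v + 1][u] - p[0], Y[v + 1][u] - p[1], Z[v + 1][u] - p[2]]
            n = (a[1] * b[2] - a[2] * b[1],  # cross product a x b
                 a[2] * b[0] - a[0] * b[2],
                 a[0] * b[1] - a[1] * b[0])
            length = math.sqrt(sum(c * c for c in n))
            if length > 0:
                normals[v][u] = tuple(c / length for c in n)
    return normals


def segment_by_normals(normals, max_angle_deg):
    """Region growing: neighbours join a segment if their normals differ by less
    than max_angle_deg. Returns a label image; -1 means no normal available."""
    cos_limit = math.cos(math.radians(max_angle_deg))
    h, w = len(normals), len(normals[0])
    labels = [[-1] * w for _ in range(h)]
    next_label = 0
    for sv in range(h):
        for su in range(w):
            if normals[sv][su] is None or labels[sv][su] != -1:
                continue
            labels[sv][su] = next_label
            queue = deque([(sv, su)])
            while queue:
                v, u = queue.popleft()
                n = normals[v][u]
                for nv, nu in ((v - 1, u), (v + 1, u), (v, u - 1), (v, u + 1)):
                    if 0 <= nv < h and 0 <= nu < w and labels[nv][nu] == -1:
                        m = normals[nv][nu]
                        # Compare the angle via the dot product of unit normals.
                        if m is not None and sum(x * y for x, y in zip(n, m)) >= cos_limit:
                            labels[nv][nu] = next_label
                            queue.append((nv, nu))
            next_label += 1
    return labels
```

Comparing each pixel with its neighbour, rather than with the seed of the region, lets a smoothly curved surface grow into one segment while still splitting at sharp creases such as the edge between two faces of a part.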

Other software packages, such as those from Matrox Imaging and Stemmer Imaging’s Common Vision Blox, also have comprehensive libraries for working with 3D data. New in Sherlock 7 vision software from Teledyne Dalsa is a tool called Shape Extraction, which extracts raised or indented features, such as embossed characters or stamped and engraved markings.

Beisiegel said MVTec is trying to make its 3D tools as simple as possible for the user. This is the focus of the Generic Box Finder, for instance: it has a simple interface in which the user enters intuitive parameters, while more complex parameters are set to default values in the background.

‘For noisy point clouds it can be difficult to estimate default parameters,’ noted Beisiegel. Noise can come from reflective materials, for example, or from a lack of surface texture when a stereovision sensor is used without a pattern projector. In such cases, the image processing library has to be able to process noisy point clouds. ‘Automation of processes is one of the focuses of our algorithms,’ she said.

‘Imaging boxes of unknown size is challenging,’ Beisiegel added, referring to Halcon’s Generic Box Finder. Boxes are 3D objects, but viewed from above they can appear as a 2D plane. Another challenge is that when boxes are close together, the edges between them are not clear. ‘There are a lot of challenges that still need to be solved in this application,’ she concluded.
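The basic top-view measurement for a single box can be sketched on a height map: pixels higher than a floor threshold form the box’s top face, their extent gives length and width, and their median height gives the box height. This is a deliberately naive sketch assuming one axis-aligned box in view, a height map in millimetres above the floor, and a hypothetical pixel pitch; it is not how Halcon’s Generic Box Finder works.

```python
import statistics


def measure_box(height_mm, pitch_mm, min_height_mm):
    """Estimate (length, width, height) in mm of one axis-aligned box seen from above.

    height_mm: list of rows, height above the floor per pixel (None = missing).
    pitch_mm: hypothetical ground size of one pixel.
    Returns None if no pixel rises above min_height_mm.
    """
    top = [(r, c, z)
           for r, row in enumerate(height_mm)
           for c, z in enumerate(row)
           if z is not None and z > min_height_mm]
    if not top:
        return None
    rows = [r for r, _, _ in top]
    cols = [c for _, c, _ in top]
    length = (max(cols) - min(cols) + 1) * pitch_mm
    width = (max(rows) - min(rows) + 1) * pitch_mm
    # The median is robust to a few noisy pixels on the top face.
    height = statistics.median([z for _, _, z in top])
    return length, width, height
```

This sketch also exposes the edge problem Beisiegel describes: two boxes of the same height pushed together produce one merged top face and are reported as a single larger box, so a practical system has to look for narrow seams or small height steps between them, and handle rotated boxes as well.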