A blessing and a curse of 3D imaging is that there are so many ways to do it. Triangulation, stereovision, structured light, time-of-flight, the list goes on, with numerous variations on those themes. This means there’s normally a 3D vision technique that will meet the application requirements – if 3D is necessary in the first place – but that choosing the right method requires a certain degree of knowledge.

Using 3D vision can actually simplify inspection if implemented correctly, as noted by vision experts in a recent article, and there are now self-contained sensors designed to make using 3D vision easier. This doesn’t mean though that all applications can be solved with the existing solutions, or that there’s no room for innovation in 3D vision.

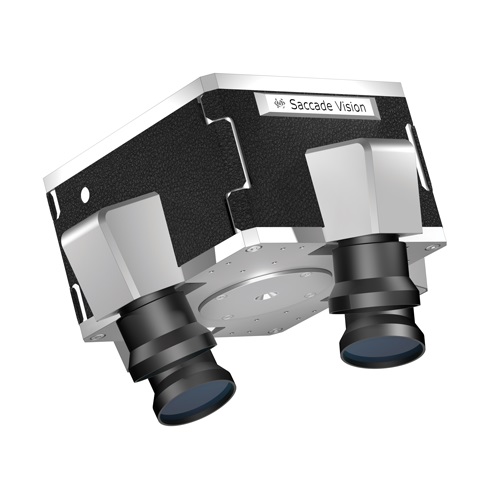

Two new techniques entering the market come from Israeli firm Saccade Vision and French spin-off Tridimeo. Saccade Vision has developed a MEMS-based 3D triangulation profiler. The MEMS-based laser illumination module can scan in multiple directions, and the device is able to create selective resolution and locally optimised scanning.

Tridimeo’s device, meanwhile, is a high-speed and multispectral 3D camera. The core technology is called a spectrally-coded-light 3D scanner. It is based on a projector that can encode data directly on the spectrum of the projected light beam. The beam makes it possible to probe both the shape and the optical spectrum of the object in the scene.

Saccade Vision is working on pilot projects with customers at the moment, both in production and as integration projects. A power electronics manufacturer is working with the system – currently as a stand-alone system – to give critical measurements of injection-moulded parts. ‘This is a difficult part to measure,’ explains Alex Shulman, co-founder and chief executive of Saccade Vision. ‘It’s black, it has thin walls, so a regular profiler can have issues with under-sampling. In our case, we can optimise the scan for different regions, so if we’re scanning a thin wall we can do it more accurately.’

The next step is to integrate the system on an injection moulding machine to make the measurements directly. ‘Instead of doing a manual inspection with callipers every two hours, which is how these parts are normally checked, the customer wants to do it automatically with high sampling rates,’ Shulman says.

The system can make a pre-scan of the entire part at low resolution to align it. It can then scan the critical features of the part in high resolution at a precision of 10µm. ‘We scan each critical dimension in such a way as to get very accurate boundaries,’ Shulman explains. ‘This is done by optimising the scanning pattern according to the feature’s specific properties.’

Scanning in different directions requires different camera positions, with the image reconstructed by combining profiles from different scans. The system can operate in configurations of one to four cameras, for two reasons: multiple cameras provide views from different angles, and a camera needs to be positioned perpendicular to the laser line to make the scan.

In another project, vision integrator Integro Technologies is developing an inspection system using the Saccade scanner. The manufacturer with which Integro is working has challenging requirements, including scanning at precisions of 10µm for some sections of an aluminium mould in high-volume production.

The customer wanted to perform 100 per cent in-process measurement of complex surfaces on a wide variety of mould segments. The moulds are precision machined from aluminium and vary in size – a general nominal size, for example, is about 12 x 6 x 2 inches, explained David Dechow, principal vision systems architect at Integro, who worked on the project.


Currently, specific mould segment features are inspected off line using manual contact measurement tools at a part sample rate of about 20 per cent of the total, Dechow continues. The process requires measurement of the features in 3D. In addition to being a slow process, the existing manual inspection is not able to achieve all the required measurements. The task is made more challenging with the tight measurement tolerances required, in some cases as little as +/- 0.001 inches.
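To compare the tolerance above with the 10µm scanning precision quoted for the same project, it helps to put both in micrometres. The short sketch below does only that unit conversion; the function and variable names are illustrative, not part of any vendor software.

```python
MICRONS_PER_INCH = 25_400  # exact by definition: 1 inch = 25.4 mm

def inches_to_microns(inches: float) -> float:
    """Convert a length in inches to micrometres."""
    return inches * MICRONS_PER_INCH

tolerance_um = inches_to_microns(0.001)  # tightest tolerance quoted in the article
scanner_precision_um = 10                # scanning precision quoted for some mould sections

# How many times the scanner precision fits into the tolerance half-width
ratio = tolerance_um / scanner_precision_um
print(f"+/- 0.001 in = +/- {tolerance_um:.1f} um ({ratio:.2f}x the scan precision)")
```

In other words, the tightest tolerance is only about two and a half times the quoted scanning precision, which gives a sense of why the measurement task is demanding.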

Dechow explains that because of the height and spacing of the 3D mould features, automated 3D imaging systems cannot extract the surfaces that must be measured. ‘In particular, features at the bottom surface of the mould are easily obscured by the vertical height of adjacent features when technologies such as laser triangulation profilometry are used,’ he says. ‘To achieve multiple angles of imaging, a single or even an opposed profilometry device would have to be scanned across the part surface at multiple angles. Furthermore, the scanning of the entire part at high resolution is time consuming, especially when only specific sections of the part are of interest for measurement.’

The Saccade-MD system is able to perform static scanning of a single field of view from multiple angles and with variable resolution. The unit can be attached to a robot arm and moved to specific areas of interest on the mould. Without further motion, the system can scan from appropriate angles to overcome 3D dropouts that happen when features are obscured, Dechow says.

The system is also able to image at variable resolutions within each scan, so that the density of data is higher only in the areas of interest, Dechow continues, and the overall size of the point cloud for that view is much smaller than would be produced if all of the scan were at high resolution.
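The effect on point-cloud size can be illustrated with a rough calculation. The sketch below assumes points on a regular square grid, and every number in it (field of view, point spacings, share of the view scanned finely) is hypothetical and chosen only for illustration; none comes from Saccade Vision or Integro.

```python
def point_count(area_mm2: float, spacing_mm: float) -> int:
    """Approximate number of points on a square grid covering an area."""
    return round(area_mm2 / spacing_mm ** 2)

# Hypothetical figures, for illustration only
fov_area = 100.0 * 100.0    # field of view in mm^2
roi_fraction = 0.05         # share of the view that needs fine sampling
coarse, fine = 0.5, 0.05    # point spacing in mm

# Whole view scanned at the fine spacing
uniform = point_count(fov_area, fine)

# Fine spacing only in the regions of interest, coarse spacing elsewhere
selective = (point_count(fov_area * (1 - roi_fraction), coarse)
             + point_count(fov_area * roi_fraction, fine))

print(uniform, selective, round(uniform / selective, 1))
```

Under these assumptions the selectively sampled view holds a small fraction of the points of the uniformly fine scan, while the areas of interest keep the same density.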

‘In execution, the robot would have motion programs for each part type to carry the Saccade-MD to the required views for a given part, and the Saccade-MD would have an imaging configuration for each view on each part,’ Dechow says. ‘Once images are acquired, standard 3D measurement tools and techniques can be employed to implement the required measurements in each view.’

Dechow notes the point clouds will initially have to be downloaded manually and the measurements run separately, but that the process can be automated with further work. He says, however, that even taking this into consideration, ‘throughput will still meet the customer requirements and allow 100 per cent of the product to be measured.’

Saccade Vision’s system uses a MEMS-based laser illumination module that can scan in multiple directions, in a setup using one to four cameras. Credit: Saccade Vision

Shulman says Saccade Vision is targeting discrete manufacturing at the moment, such as plastic injection moulding, extrusion moulding, metal forming, CNC machining or die casting. None of these operations involves a conveyor, and the part needs to be scanned while stationary. ‘In theory we can add scanning in motion, because we have everything needed for that,’ Shulman says. ‘But we are currently not investing in that; it is possible, but it’s not our focus at the moment.’

Shulman adds that the strength of Saccade Vision’s technology lies in its ability to deliver micrometre precision. He says the firm is not targeting bin picking, but is looking at precise pick-and-place applications that require 3D position accuracy of 100µm or better in precision manufacturing.

‘We’re focusing on metrology for discrete manufacturing and process analytics,’ Shulman says. ‘Industry 4.0 is data-driven manufacturing. However, data collection today, at least in discrete manufacturing, is mainly done by indirect correlated sensors – measuring vibration, acoustics, temperature – and predicting machine health or making process control measurements based on that. Direct measurements, such as from our 3D scanner, have much better fidelity and provide a better basis for decision making.

‘Solid-state MEMS lidar has brought cost reduction, higher speed and higher quality scanning than the older galvo-based lidars could provide,’ he adds. ‘We want to do the same for triangulation in manufacturing.’

Saccade Vision plans to release a commercial product in the first half of 2022. It is also working on an inspection setup guided directly from a CAD model of the part.

Spectral speed

Tridimeo, on the other hand, does consider bin picking, pick-and-place and robot guidance in general within the scope of its 3D technology, alongside quality inspection. Tridimeo was founded early in 2017 as a spin-off from the French research institute, CEA. The company designs and produces high-speed and multispectral 3D cameras and develops vision software.

‘It’s a new way of performing 3D imaging,’ said David Partouche, co-founder and chief executive of Tridimeo. ‘Tridimeo is the only provider of high-speed multispectral 3D cameras in the world to our knowledge. All our vision solutions display both 3D imaging and multispectral imaging capabilities that are not accessible to other regular 3D imaging technologies.’

The technology was invented in 2014, primarily by Rémi Michel. The company’s 3D scanner, which uses a white LED and a complex optical system to encode the patterns, is used in combination with Imec’s snapshot spectral 2D sensor.

A standard structured light projector will project a pattern of light on the object and measure the deformation in the light to get a 3D image. This works by shining a sequence of patterns onto the object.

Tridimeo, by contrast, uses one multispectral camera with 16 bands, and projects 16 patterns – each at a different wavelength – at the same time. ‘It’s snapshot imaging,’ Partouche explained. ‘It can make it much faster than regular structured light 3D scanners.’ This is the case compared to white or blue light structured-light 3D scanners, although laser-based scanners can also be very fast, thanks to the available laser power.

Tridimeo developed its initial solution for Renault: a robot guidance system for depalletising semi-random kits of car body parts to load robot islands, where the parts are welded together.

Renault wanted the camera to be attached to a robot arm, so it contains no moving parts. The car manufacturer wanted a 3D localisation precision of less than 1mm, taking less than two seconds to make the scan plus calculation per part, explains Elvis Dzamastagic, Tridimeo’s international business development manager. Renault also wanted something that’s fast to implement, that would correct for ambient light, and be robust to bright, stamped car body parts.

Tridimeo’s high-speed multispectral 3D camera. Credit: Tridimeo

In bin picking in general, one advantage of the system is it is able to distinguish between the parts to be picked and the bin itself using spectral information. This means the pickable objects can be detected and the rest of the image discarded, which makes the 3D localisation algorithm fast and avoids collisions between the robot arm and the bin. Being able to remove the bin from the data points making up the scene also makes it a much more robust solution. ‘When picking the last part at the bottom of a bin – a flat component, say a few millimetres thick – if you don’t have multispectral I don’t think it’s easy to locate the part robustly and precisely because it’s so thin,’ Partouche says.
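How spectral information can separate parts from the bin is not detailed in the article. One generic way to do it, shown here purely as an assumption and not as Tridimeo's method, is to compare each point's spectrum with a reference spectrum for the part and one for the bin using the spectral angle, and keep only the points that look more like the part. The four-band spectra below are made up for readability; the camera described above records 16 bands.

```python
import math

def spectral_angle(a, b):
    """Angle in radians between two spectra treated as vectors (0 = same shape)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    # Clamp to guard against rounding slightly outside [-1, 1]
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def keep_part_points(points, part_ref, bin_ref):
    """Keep the 3D points whose spectrum is closer to the part than to the bin."""
    return [xyz for xyz, spectrum in points
            if spectral_angle(spectrum, part_ref) < spectral_angle(spectrum, bin_ref)]

# Hypothetical reference spectra (4 bands here for brevity)
part_ref = [0.8, 0.7, 0.6, 0.5]
bin_ref = [0.2, 0.3, 0.6, 0.9]

# Each point: (x, y, z) position plus its measured spectrum
points = [
    ((0, 0, 5), [0.40, 0.35, 0.30, 0.25]),  # darker, but same shape as the part
    ((1, 0, 0), [0.10, 0.15, 0.30, 0.45]),  # same shape as the bin
    ((2, 1, 4), [0.75, 0.70, 0.55, 0.50]),  # close to the part
]

pickable = keep_part_points(points, part_ref, bin_ref)
print(pickable)
```

Because the spectral angle ignores overall brightness, a point in shadow with the same spectral shape as the part is still kept, which is one reason this kind of measure is often used for material discrimination.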

In bin picking, Tridimeo would scan the bin for each pick. ‘The specification from our customers was that you need to scan, calculate in 3D, locate the part, and calculate the robot arm trajectory with no collisions in less than three seconds,’ Partouche explains. ‘We’re targeting picking at around six parts per minute. The bin is imaged as the robot leaves the bin to calculate the next pick while the robot is placing the part elsewhere. The snapshot is a few hundred milliseconds at most.’
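The benefit of imaging the bin while the robot is already moving can be seen with simple timing arithmetic. In the sketch below, the three-second vision budget comes from the quote above, while the eight-second robot handling time is a hypothetical figure used only to show the difference between running the two steps back to back and overlapping them.

```python
def sequential_cycle(vision_s: float, robot_s: float) -> float:
    """Cycle time when the robot waits for vision before each pick."""
    return vision_s + robot_s

def overlapped_cycle(vision_s: float, robot_s: float) -> float:
    """Cycle time when vision for the next pick runs while the robot places the part."""
    return max(vision_s, robot_s)

def parts_per_minute(cycle_s: float) -> float:
    return 60.0 / cycle_s

vision_s = 3.0  # scan + 3D calculation + localisation + trajectory, upper bound from the text
robot_s = 8.0   # hypothetical pick-and-place time, for illustration only

print(parts_per_minute(sequential_cycle(vision_s, robot_s)))  # robot idles during vision
print(parts_per_minute(overlapped_cycle(vision_s, robot_s)))  # vision hidden behind motion
```

With these assumed numbers, overlapping the steps means the vision time no longer adds to the cycle, so the pick rate is limited by the robot alone.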

To make the images robust to ambient light or reflections from shiny parts, two or more acquisitions would be made if needed, either to measure and subtract ambient light, or add images at different exposures together to get a high-dynamic-range image. The solution can be installed in factories using various industrial communication protocols, like Profinet and Ethernet/IP.
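Both ideas can be sketched in a few lines. The example below is a simplified illustration, not Tridimeo's processing: ambient light is removed by subtracting a frame taken with the projector off, and exposures are merged by averaging brightness per unit exposure time over the frames in which a pixel is not saturated. Images are represented as flat lists of pixel values, and all values are made up.

```python
def subtract_ambient(lit, ambient):
    """Pixel-wise difference between projector-on and projector-off frames, clamped at zero."""
    return [max(l - a, 0) for l, a in zip(lit, ambient)]

def merge_exposures(frames, exposures_ms, saturation=255):
    """Average (value / exposure) over unsaturated frames for each pixel."""
    merged = []
    for pixel_values in zip(*frames):
        estimates = [v / t for v, t in zip(pixel_values, exposures_ms) if v < saturation]
        if estimates:
            merged.append(sum(estimates) / len(estimates))
        else:
            # Saturated in every frame: fall back to the shortest exposure
            merged.append(saturation / min(exposures_ms))
    return merged

# Ambient subtraction on three example pixels
clean = subtract_ambient([120, 255, 40], [20, 30, 10])

# Two exposures of the same scene: 1 ms and 8 ms
short_frame = [10, 100, 2]
long_frame = [80, 255, 16]   # the middle pixel is saturated in the long exposure
hdr = merge_exposures([short_frame, long_frame], [1, 8])

print(clean, hdr)
```

The middle pixel shows the point of the second step: a shiny region that saturates in the long exposure is still measured from the short one.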

Beyond robot guidance, Tridimeo’s technology can be used for quality inspection, such as detecting a sealing joint on a car body part, even though both the joint and part are a similar colour – they can be distinguished through spectral characteristics. This could be used in car manufacturing to check whether the joint is absent or present, or identify its location.

In addition, the technology can be used to inspect the colour of car parts directly after painting, where the spectral data gives a colour measurement and the 3D data measures the shape and tilt of the part to take this into account in the apparent spectral output. ‘If you don’t have the 3D, you don’t know what to compare the spectral output with,’ Partouche says. ‘That is to say, tilting the part or changing the viewing angle would change the spectrum of light reflected back to the camera, so you need 3D information.’

In the future, Dzamastagic says plastic processing and food inspection might be further application markets for Tridimeo’s technology, although at the moment its software is more suited to robot guidance.

There are now reasonably mature 3D vision products on the market; these two companies show there’s also plenty more innovation out there when it comes to measuring the third dimension.

Matrox AltiZ high-fidelity 3D profile sensors power TUNASCAN vision system, sorting up to 20 tonnes of tuna per hour with accuracy rates approaching 100 per cent

Headquartered in Spain, Marexi Marine Technology Co. has been a marine technology leader for more than 15 years. The company develops optical scanning systems for marine species for the fishing, canning and aquaculture sectors. TUNASCAN is Marexi’s most advanced machine: a high-speed, high-throughput vision system that scans and classifies tuna by species, size and quality. Visual classification of fish is challenging, especially once the fish are frozen, when differences between species become practically impossible to discern reliably without exhaustive testing. Using cutting-edge 3D profile sensors along with machine learning algorithms, TUNASCAN properly classifies and sorts tuna with accuracy rates of more than 95 per cent.

Two Matrox AltiZ 3D profile sensors (on the far left and right) scan each fish as it passes through the dual-sensor TUNASCAN system

‘We are always seeking ways to further enhance our solutions,’ notes Pau Sánchez Carratalá, vision and robotics engineer at Marexi. ‘In the interest of improving the classification algorithms used by TUNASCAN, we overhauled the entire acquisition system with the support of Matrox Imaging and Grupo Alava.’

Just keep scanning

TUNASCAN is a major fixture of Marexi’s marine technology offerings; this patented two-channel vision system can process up to 20 tonnes of frozen tuna per hour. From the reception hopper, frozen tuna are fed into and pass through the scanning section, where two Matrox AltiZ sensors perform a 3D scan and a computer classifies each tuna individually. Classification results and location data are sent to the sorting system, where each tuna is sorted into its appropriate container.

Clear fishy fishy

Upgrades to the TUNASCAN project centered upon Matrox AltiZ 3D profile sensors. Sánchez Carratalá notes: ‘We needed a reliable way to obtain 3D point clouds from objects moving at a fairly fast speed. The application also must deal with point-cloud noise and dirtiness from physical operation of the machine. In the past we used separate cameras and lasers for obtaining 3D data. Matrox AltiZ allows us to integrate these elements into a single sensor that provides exceptional robustness to the application.’

TUNASCAN employs machine learning to accurately classify frozen tuna based on features extracted from the point-cloud representation and calculated weight. Matrox Capture Works – the interactive set-up utility for Matrox AltiZ – was used to configure the sensor and provide the code snippet for the acquisition portion of the actual application.

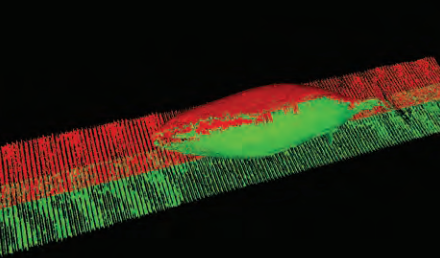

In the TUNASCAN system, the 3D devices are set to generate a point cloud. Laser lines trace the contours of the fish. Embedded algorithms then produce a point cloud, which is stitched together to create a complete 3D rendering of the tuna. The algorithm ensures greater control over invalid data, resulting in more robust 3D reproductions.

TUNASCAN also includes ultrasonic sensors that trigger 3D capture only when there is a fish available to scan. Special low-temperature infrared sensors are responsible for monitoring the temperature of each fish and assuring the proper behaviour of the system.

Fishing for results

Operator interaction with the system is minimal. In addition to sorting by species, the same species of tuna can be further sorted by weight. Every incoming fish is sorted into the selected categories by container. TUNASCAN manages multiple containers, automatically assigning a new container for output while the full container is being replaced, ensuring the system remains in continuous operation.
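The container handling described here can be pictured as a small piece of bookkeeping. The sketch below is a hypothetical illustration and not Marexi's software: the class, the weight threshold, the container capacity and the species labels are all assumptions made for the example.

```python
class ContainerBank:
    """Tracks the active container per category and swaps in a spare when one fills."""

    def __init__(self, spare_ids, capacity_kg):
        self.spares = list(spare_ids)
        self.capacity_kg = capacity_kg
        self.active = {}  # category -> [container_id, load_kg]

    def assign(self, category, weight_kg):
        """Return the container for this fish, opening a new one if needed."""
        slot = self.active.get(category)
        if slot is None or slot[1] + weight_kg > self.capacity_kg:
            if not self.spares:
                raise RuntimeError("no empty container available")
            # The full container can be replaced while sorting continues into the new one
            slot = [self.spares.pop(0), 0.0]
            self.active[category] = slot
        slot[1] += weight_kg
        return slot[0]

def category_for(species, weight_kg, threshold_kg=10.0):
    """Combine species and a weight class into one sorting category."""
    size = "large" if weight_kg >= threshold_kg else "small"
    return f"{species}/{size}"

bank = ContainerBank(["C1", "C2", "C3"], capacity_kg=25.0)
fish = [("skipjack", 4.0), ("yellowfin", 12.0), ("yellowfin", 15.0)]
routed = [bank.assign(category_for(s, w), w) for s, w in fish]
print(routed)
```

In this example the third fish would overfill the container for its category, so it is routed to a fresh one and sorting carries on without a pause.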

An onscreen rendition of the 3D point cloud generated by the two Matrox AltiZ

TUNASCAN systems are deployed in harsh environments and operate continuously, leaving very little opportunity for maintenance or calibration. ‘One of our installations has been working up to 20 hours a day, six days a week for almost three years, with barely any maintenance required,’ Sánchez Carratalá smiles. ‘All that, and with accuracy rates approaching 100%! Our clients could not be more pleased.’

In the swim of things

As part of Marexi’s commitment to continuous improvement of their products, TUNASCAN has been regularly improved and optimised. ‘We faced some challenges at the start, mainly because it is a very complex and disruptive system, and we needed to guarantee its efficacy,’ Sánchez Carratalá reports. ‘We are happy with the assistance offered by Matrox Imaging’s technical support team, as well as the help received from Grupo Alava.’

Tuna are scanned by two Matrox AltiZ before being classified; 3D classification results and location data are sent to the sorting section

Building on the success of the TUNASCAN upgrade, Marexi is currently working on a different project for the fish industry that also integrates a Matrox AltiZ, along with Matrox Imaging Library (MIL) X software.

Marexi reports that their current clients are very satisfied with TUNASCAN and the value it provides their businesses. ‘Our TUNASCAN application leverages the strengths of a Matrox AltiZ-based system,’ Sánchez Carratalá concludes. ‘Not only do the sensors deliver very accurate 3D data at really high conveyor speeds while dealing with a challenging product like frozen tuna, but the Matrox AltiZ functions optimally in extremely harsh environments and works for long periods of time without maintenance.’

Each tuna is automatically sorted into its appropriate container