This year has demonstrated more than ever the need for automation. Even before the pandemic it was clear that behavioural patterns were shifting towards online shopping. Now, with a number of countries having gone into a second lockdown in the build-up to the most significant shopping period of the year, automation will undoubtedly play a crucial role in meeting the surge in online orders. Children will still have presents to open this year, so their unwavering belief in Santa Claus can continue.

However, Amazon has said it will hire 100,000 extra staff in the US in the run-up to Christmas to keep pace with e-commerce demand, which demonstrates that processing online orders is yet to be fully automated. After all, bin picking is still a young technology that is only now beginning to meet real industry requirements.

‘High-end companies such as BMW, ThyssenKrupp, Amazon and Ocado are now using this technology, and are a lot less forgiving – demanding 100 per cent pick rates rather than 80 to 90 per cent,’ said Mikkel Orheim, senior vice president of sales and business development at 3D camera manufacturer Zivid. ‘We see across the board that customers want more automation and to be able to pick more products. They are not looking for 10 systems that need to be monitored by five people; they are looking for 20 systems that can be monitored by one person.’

With the gauntlet having been thrown down, the vision industry is answering the challenge by enabling more flexible picking solutions to handle more objects.

Revealing the truth

The ideal bin-picking solution will be able to detect, pick and place pieces that are densely packed, complexly arranged, hard to separate or have shiny and reflective surfaces, all while being faster than a human.

However, according to Orheim, several machine vision challenges must still be addressed before such universal picking can be achieved.

One such challenge he highlighted is mastering ‘trueness’, which he noted is one of the least understood components in the bin-picking industry.

‘In short, trueness describes how true your image is to the reality you are representing,’ Orheim explained. ‘Some of our most prominent customers have come to us saying that despite being able to clearly see the objects in their bins using an incumbent 3D sensor, they are not able to pick them every time.’ This is because the point-cloud images are not true: there can be scaling errors, where objects appear larger or smaller than they actually are; rotation errors; or translation errors, where objects appear to be in a different orientation or position than they are in reality.

‘Trueness is one of the hardest aspects of bin picking to get right,’ Orheim continued. ‘You will see a lot of articles and opinions saying that bin picking is about managing and compensating for errors in data, whereas our opinion is that you really need great data from the core component. This means cameras should be offering higher trueness, so minimal errors in scaling, rotation and translation.’

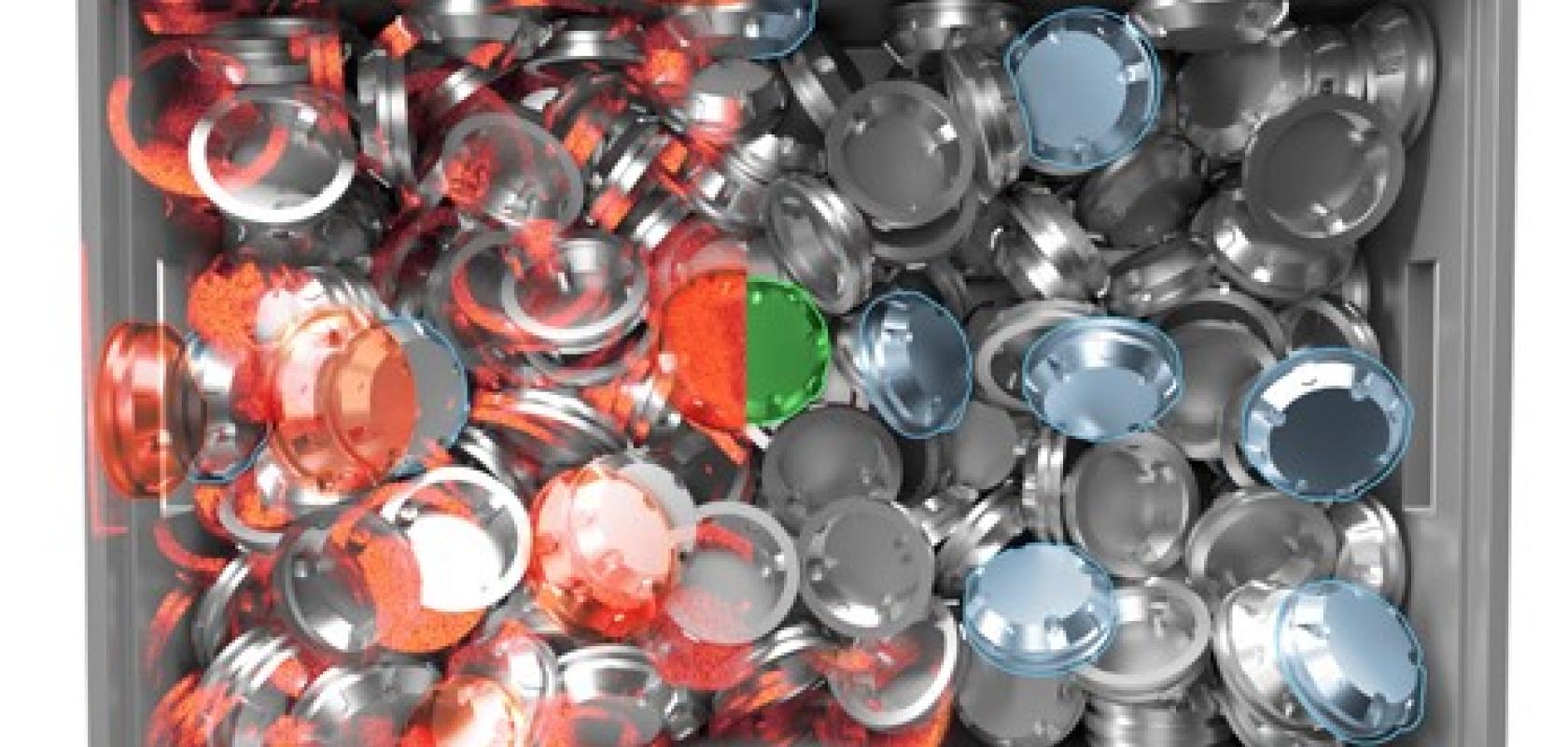

Colour is a native quality of the point cloud produced by the new Zivid Two camera. Credit: Zivid

If an image is scaled incorrectly by just 1 to 2 per cent then the gripper will miss the object, particularly if hard grippers are used, as these need to be aligned accurately when picking small or complex parts.
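To put those percentages in context, the sketch below models the three trueness errors Orheim describes (scaling, rotation and translation) and reports how far a pick point moves in the point cloud. It is a minimal illustration under stated assumptions, not Zivid's method: the function names, the 700mm working distance and the 150mm lateral offset are hypothetical, the scaling is assumed to act about the camera origin, and the rotation error is limited to the optical axis for brevity.

```python
import math


def apply_trueness_error(point, scale_error=0.0, yaw_error_deg=0.0,
                         translation_error=(0.0, 0.0, 0.0)):
    """Return where a true point (mm, camera frame) appears in a point cloud
    that suffers from the given scaling, rotation and translation errors.

    Assumptions: scaling acts about the camera origin and the rotation
    error is a yaw about the optical (z) axis.
    """
    x, y, z = point
    s = 1.0 + scale_error                      # e.g. 0.01 for a 1 per cent error
    x, y, z = s * x, s * y, s * z
    a = math.radians(yaw_error_deg)
    xr = x * math.cos(a) - y * math.sin(a)     # rotate in the image plane
    yr = x * math.sin(a) + y * math.cos(a)
    tx, ty, tz = translation_error
    return (xr + tx, yr + ty, z + tz)


def displacement(true_point, seen_point):
    """Euclidean distance (mm) between the real and the imaged position."""
    return math.dist(true_point, seen_point)


# Hypothetical part: 150 mm off-axis, 700 mm from the camera.
true_point = (150.0, 0.0, 700.0)
for err in (0.01, 0.02):
    seen = apply_trueness_error(true_point, scale_error=err)
    print(f"{err:.0%} scale error -> {displacement(true_point, seen):.1f} mm offset")
```

With these assumed numbers, a 1 per cent scaling error moves the pick point by roughly 7mm, which gives a feel for why a rigid gripper aligned to a small part may fail to close on it.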

Orheim said that trueness will play an important part when customers are looking to install hundreds of bin picking systems. This is because if a system is not consistently able to empty a bin, it will not be approved in a site acceptance test. ‘Until you can achieve consistent, accurate results across multiple systems, you will not be delivering the next wave of automation and achieve broad adoption,’ he said.

Seeing the structured light

Another machine vision challenge Orheim highlighted is that the images provided by some of the standard cameras commonly used for bin picking – he gave the example of low- to high-end stereo vision cameras – are not of high enough quality to be processed efficiently by the AI these firms are starting to use to power their detection systems.

‘While some objects can be recognised by smart detection algorithms and AI, objects that aren’t as well-defined cannot be confidently distinguished,’ he explained. ‘These cameras are remarkably good for their price point, but from a bin picking perspective, we believe they are not quite up to scratch.’

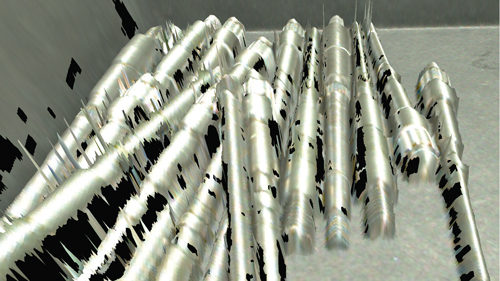

Zivid’s ART technology is able to reduce image artefacts from reflective parts when using its structured light 3D imaging. Credit: Zivid

Zivid aims to address this issue – while also achieving high dimensional trueness – using its own cameras, which use structured white light to conduct 3D imaging.

‘What sets our cameras apart is how we use colour, which comes into play when using AI,’ said Orheim. ‘Colour is becoming a much more interesting quality of the point cloud, because it can be used by an AI or deep learning algorithm as an additional factor to distinguish between objects.’

Rather than using a separate 2D camera to capture colour information and overlaying this on captured 3D data – as is currently done by a number of 3D camera vendors, according to Orheim – colour is instead a native quality in the point cloud produced by Zivid’s cameras. This prevents defects from being introduced into the images, and randomly arranged objects are easier to identify with AI thanks to the higher quality of the point cloud.

However, using structured light to produce a point cloud does introduce its own challenges when imaging shiny or reflective parts. This is because the light reflecting from the parts can create image artefacts, leading to the object being over- or under-exposed.

‘For example, an object that is cylindrical may not appear to be so, which leads to confusion in the detection algorithm and the object not being correctly identified in the bin,’ explained Orheim. ‘This has been less dominant when using stereo vision cameras, which is why these cameras have gained popularity in bin picking. However, we have now solved this issue.’

Over the past two years Zivid has been developing artefact reduction technology, called ART, which improves the quality of the 3D data captured from reflective and shiny parts. ‘This solution is a combination of innovations in both hardware and software,’ Orheim said. ‘It means that customers now get all the benefits of structured light cameras when picking very challenging reflective parts in the bin.’

ART will be available on both the firm’s Zivid One+ camera and the recently released Zivid Two camera, which can capture an image in 0.3 seconds.

Orheim concluded by hinting that one R&D project currently underway at Zivid is exploring how best to identify one object type still proving very challenging to capture accurately with structured light 3D imaging – transparent objects.

Bag picking: it’s in the bag

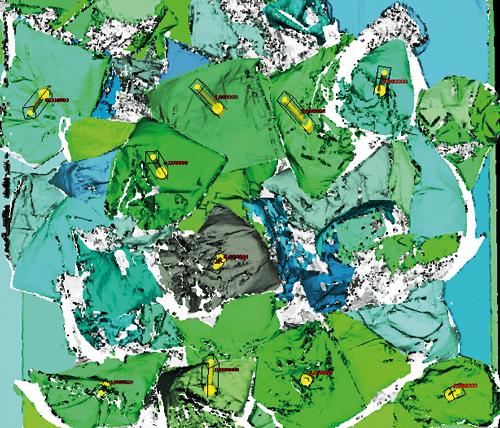

Photoneo, another 3D vision specialist, has been working on a vision solution to recognise bags in a bin, which by their nature is difficult to do. ‘They are flexible, deformable, full of wrinkles, and often transparent or semi-transparent,’ remarked the firm’s director of AI, Michal Maly. ‘Recognising boundaries between bags that are chaotically placed in a container can often be difficult even for humans. The task gets even more complicated for transparent bags. Difficult – but possible.’

Photoneo is using a neural network to recognise bags in a bin. Credit: Photoneo

Photoneo developed a neural network that is able to recognise bags that are overlapping, for instance, so that the robot won’t try to grab fully or partly covered bags.

‘AI combined with high-end 3D vision is undoubtedly the future of automation,’ Maly added. ‘It provides countless benefits – users do not need to worry about the performance with completely new items, and integrators do not need to perform any difficult 3D calculations and tasks, or have an expert knowledge of the various robotic brands on the market.’

Convolutional neural networks, such as that of Photoneo’s AnyPick solution, often come pre-trained on a large dataset of objects. They can also be trained on very specific types of items, which can increase their precision and performance. AnyPick is able to recognise objects in a fraction of a second and, with a good gripper and a fast robot, can pick more than 1,000 items per hour.
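The throughput figure translates into a time budget per pick, which the short calculation below makes explicit. It is only back-of-envelope arithmetic: the function names are hypothetical, and the 0.5-second recognition time is an assumed stand-in for ‘a fraction of a second’, not a Photoneo specification.

```python
def seconds_per_pick(items_per_hour):
    """Average cycle time (s) implied by an hourly pick rate."""
    return 3600.0 / items_per_hour


def motion_budget(items_per_hour, recognition_s):
    """Time (s) left in each cycle for robot motion and gripping,
    assuming recognition and motion run one after the other."""
    return seconds_per_pick(items_per_hour) - recognition_s


cycle = seconds_per_pick(1000)                    # 1,000 items per hour
budget = motion_budget(1000, recognition_s=0.5)   # assumed recognition time
print(f"cycle: {cycle:.1f} s, left for robot and gripper: {budget:.1f} s")
```

At 1,000 items per hour the whole cycle lasts 3.6 seconds, so the gripper and the robot, rather than the recognition step, are likely to set the pace.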

Maly said that reinforcement learning is very promising for future developments in AI, as it is able to cope with various mechanical problems – not only those related to bag recognition and position, but also the way the robot picks them. It is able to assess individual steps of an action and calculate the chance of success or failure.
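The idea of learning the chance of success from experience can be illustrated with an epsilon-greedy bandit, one of the simplest forms of reinforcement learning. This is a toy sketch and not Photoneo’s approach: the class, the strategy names and the smoothing choice are all assumptions made for illustration.

```python
import random


class GraspStrategyStats:
    """Tracks how often each grasp strategy succeeds and picks the next one."""

    def __init__(self, strategies):
        self.attempts = {s: 0 for s in strategies}
        self.successes = {s: 0 for s in strategies}

    def success_rate(self, strategy):
        # Laplace-smoothed estimate, so an untried strategy starts at 0.5.
        return (self.successes[strategy] + 1) / (self.attempts[strategy] + 2)

    def choose(self, epsilon=0.1, rng=random):
        # Explore a random strategy with probability epsilon,
        # otherwise exploit the one with the best estimated success rate.
        if rng.random() < epsilon:
            return rng.choice(list(self.attempts))
        return max(self.attempts, key=self.success_rate)

    def record(self, strategy, succeeded):
        # Update the counts after the robot reports the outcome of a pick.
        self.attempts[strategy] += 1
        self.successes[strategy] += int(succeeded)


stats = GraspStrategyStats(["top_suction", "side_suction"])  # hypothetical names
stats.record("top_suction", True)
stats.record("side_suction", False)
print(stats.choose(epsilon=0.0), stats.success_rate("top_suction"))
```

A production system would condition the estimate on the image and on the individual steps of the motion rather than on a fixed list of strategies, but the loop of acting, observing the outcome and updating an estimate of success is the same.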

Lowering the cost of 3D vision

Earlier this year Airy3D introduced a passive near-field 3D single-sensor solution with the aim of reducing the cost of 3D vision for bin picking and other applications.

The solution, called DepthIQ, uses diffraction to measure depth directly through an optical encoding transmissive diffraction mask applied on a single conventional 2D CMOS sensor. Together with image depth processing software, the solution converts a 2D colour or monochrome sensor into a 3D sensor that generates both 2D images and depth maps that are inherently correlated, resulting in depth per pixel. It requires one 2D camera module, rather than a pair of cameras, and no special lighting, both of which can add considerable cost to 3D vision approaches.

‘It enables high-quality depth sensing at a much lower cost than conventional 3D technologies,’ confirmed Dr James Mihaychuk, product manager at Airy3D. ‘It does so with very lightweight processing, as most of the computational burden is eliminated by having the physics of light diffraction contribute the disparity information on a pixel-to-pixel basis. This renders the extraction of depth information from the raw 2D image somewhat analogous to the demosaicing of a Bayer pattern colour filter array.’

A 2D image and its associated sparse depth map captured with an industrial DepthIQ-enabled sensor. Credit: Airy3D

Consequently, according to Mihaychuk, a DepthIQ-enabled sensor can deliver coordinates for robot picking independent of object size, colour or brightness, and at faster speeds and lower power consumption than conventional 3D solutions.
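How a per-pixel depth value becomes a pick coordinate can be sketched with the standard pinhole camera model. The example below is not Airy3D’s software: the function names and the intrinsic parameters (focal lengths `fx`, `fy` and principal point `cx`, `cy`, all in pixels) are hypothetical, and pixels without a depth value are marked `None` to mimic a sparse depth map.

```python
def pixel_to_camera_xyz(u, v, depth_mm, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth along the optical axis (mm)
    into camera-frame X, Y, Z coordinates using the pinhole model."""
    x = (u - cx) * depth_mm / fx
    y = (v - cy) * depth_mm / fy
    return (x, y, depth_mm)


def depth_map_to_points(depth_map, fx, fy, cx, cy):
    """Convert a sparse depth map (rows of depth values or None) to 3D points."""
    points = []
    for v, row in enumerate(depth_map):
        for u, depth in enumerate(row):
            if depth is None:      # no depth estimate at this pixel
                continue
            points.append(pixel_to_camera_xyz(u, v, depth, fx, fy, cx, cy))
    return points


# Assumed intrinsics for a VGA (640 x 480) depth map.
fx = fy = 600.0
cx, cy = 320.0, 240.0
print(pixel_to_camera_xyz(440, 240, 600.0, fx, fy, cx, cy))
```

Because the 2D image and the depth map come from the same sensor, the pixel at which an object is detected in the image can be used directly as `(u, v)` here, with no registration step between two cameras.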

Airy3D intends the technology to be an alternative to stereo vision, structured light and time-of-flight solutions in bin picking by addressing the shortcomings of each technology. It does not face the same alignment challenges as stereo vision, and it avoids some of the optical and geometrical design challenges found in time-of-flight and structured light by sensing depth using the image sensor pixel structures.

A full HD (2.1 megapixel) CMOS image sensor can achieve VGA (640 x 480 pixel) resolution as a depth sensor using Airy3D’s technology.

Automating PCB assembly

Printed circuit board assembly in electronics is largely automated, with robots carrying out SMD placement, soldering and automated optical inspection. Until now, an exception has been the through-hole mounting of wired components such as capacitors, power coils and connectors. This step is still mostly done manually, as it is a complex process that cannot be easily automated.

Now Glaub Automation and Engineering has succeeded in automating this process using a robot cell equipped with vision technology. The assembly is done by a collaborative YuMi robot from ABB, which, thanks to two arms, each equipped with four smart cameras from Cognex, can assemble the circuit boards twice as fast as a conventional robot.

Cognex’s solutions partner M-Vis developed the vision system. It uses eight Cognex smart cameras, a combination of 3D surface sensors and 2D cameras. The robot identifies a capacitor from its data matrix code, grips it, and places it precisely on the printed circuit board. The capacitor is then soldered from below.

An In-Sight 7802M vision system is used to measure the parts and provide the necessary information to correct the position of the gripper. A further system from the In-Sight 9912M series measures the circuit board and if necessary corrects the gripper’s movement when it is placing the component on the board. A 3D surface-scan camera, the 3D-A5060, is used to see the position of parts in the feed line.

The GL-THTeasy robot cell for assembling PCBs. Credit: Glaub Automation and Engineering

‘In each process step, the cameras capture the actual position of the component, the gripper and the circuit board in relation to the electronic component,’ explained Niko Glaub, CEO of Glaub Automation and Engineering. ‘In other words, the legroom of the components is aligned with the actual dimensions of the assembly positions. This allows the component to be automatically found and removed, then enables accurate through-hole mounting on the basis of actual position data.’
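The correction Glaub describes, placing on the basis of measured rather than nominal positions, can be sketched as a composition of planar poses. This is a simplified illustration and not the GL-THTeasy implementation: it assumes a flat 2D problem, and the function names and example measurements are hypothetical.

```python
import math


def compose(a, b):
    """Express pose b, given in frame a, in a's parent frame.
    Poses are (x_mm, y_mm, theta_rad)."""
    ax, ay, at = a
    bx, by, bt = b
    return (ax + bx * math.cos(at) - by * math.sin(at),
            ay + bx * math.sin(at) + by * math.cos(at),
            at + bt)


def inverse(pose):
    """Inverse of a planar pose."""
    x, y, t = pose
    c, s = math.cos(t), math.sin(t)
    return (-(x * c + y * s), -(-x * s + y * c), -t)


def corrected_gripper_pose(hole_pose, component_in_gripper):
    """Gripper pose that puts the component, as actually held, on the hole
    pattern as actually measured: gripper = hole * inverse(component offset)."""
    return compose(hole_pose, inverse(component_in_gripper))


# Assumed measurements: hole pattern on the board (robot frame) and the
# component's offset in the gripper, both as reported by the cameras.
hole = (100.0, 50.0, math.radians(90.0))
offset = (0.4, -0.2, math.radians(1.5))
target = corrected_gripper_pose(hole, offset)
print(target)
```

Composing `target` with the measured offset returns the hole pose, which is the check that the component legs would end up over the holes even though the part sits slightly askew in the gripper.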

The cycle time can be less than three seconds depending on the feed and the components to be installed.