Many advances are taking place in medical imaging, both in terms of what some would consider traditional, nuclear medical imaging – PET or SPECT scans – and in standard industrial vision systems that are being adopted and adapted for the medical sector.

Nuclear medical imaging uses low levels of radioactive material to diagnose a host of conditions, from cancers and heart disease to endocrine and neurological disorders. In positron emission tomography (PET) and single-photon emission computed tomography (SPECT), the gamma radiation is converted to visible light by a scintillator crystal, and an array of photomultiplier tubes (PMTs) detects this low-level light. In a PET scan, the tubes would be arranged in a ring around the patient; for SPECT, they would be in a 2D array.

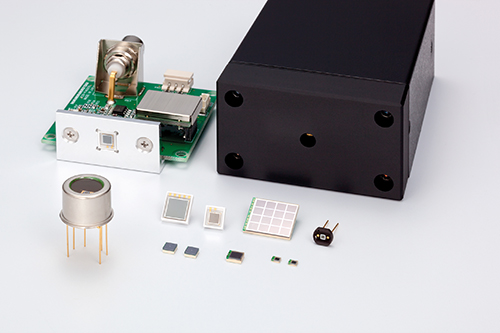

However, the PMT is starting to be replaced by the silicon photomultiplier (SiPM), a silicon-based semiconductor detector made from an array of avalanche photodiode (APD) pixels operated in Geiger mode. Richard Harvey from Hamamatsu, a leading manufacturer of PMTs, silicon photomultipliers and scientific CMOS cameras, said: ‘The SiPM has performance characteristics approaching that of the traditional PMT. Five years ago silicon PM was niche and [only a handful of] markets were interested. Today, SiPM is coming a lot closer.’

He stressed, however, that ‘for low light detection in general the PMT is probably still the gold standard because it has a very large collection area. It has very low noise [and] silicon PMs’ noise can be higher… [so] I think there will always be a role for the PMT’.

Harvey also highlighted that there are significant benefits to shifting to silicon. ‘The original PMT is handmade, so there’s a small variation from device to device; with a silicon process you have uniformity,’ he said. ‘But the real benefit comes in when you want to combine PET with MRI.’

Unlike the traditional PMT, the silicon device is immune to the considerable magnetic fields present in a magnetic resonance imaging (MRI) scanner. This ability to integrate functionality into multimodal machines – and in doing so cut both costs and required space – is highly desirable for hospitals. And while multimodal PET and MRI machines have been created before the move to SiPMs, these tended to be separate, connected via a moving gantry on which the patient lay.

Harvey commented: ‘The more multimodality they can combine on one scanner [the better the chance] of supplying a scanner to a hospital.’ Siemens, for example, recently announced a multimodal PET/CT scanner.

Time-of-flight imaging

Medical imaging is also starting to benefit from time-of-flight (ToF) PET detectors, which measure how long it takes for the gamma ray to leave the patient or object and reach the detector. These systems use very fast gamma ray detectors and data processing systems to determine more precisely the difference in time between the detection of two photons. The benefit is a significant improvement in image quality, especially through a higher signal-to-noise ratio.

Harvey remarked: ‘ToF PET is something that companies are really pushing hard.’ However, while ToF PET suits clinical imaging, scanner size matters, and the technique is not suited to pre-clinical studies on small animals such as mice. ‘The electronics are not fast enough to discriminate different pulses of light, as the bore diameter is so small,’ he explained. ‘When you’re scaling it to human size, you’re measuring a metre across ... you’re at the size where you can use ToF PET.’
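Harvey's point about bore size can be illustrated with a rough calculation. The sketch below uses the standard relation between timing resolution and position uncertainty along the line between two detectors, Δx = c·Δt/2. The 400 ps timing resolution and the 50 mm small-animal bore are illustrative assumptions, not figures from Harvey or Hamamatsu; the one-metre human-scale bore comes from his quote.

```python
# Minimal sketch: why time-of-flight helps at human scale but not in a mouse scanner.
# Assumed values (not from the article): 400 ps timing resolution, 50 mm small bore.

C_MM_PER_PS = 0.299792458  # speed of light in millimetres per picosecond


def tof_position_uncertainty_mm(timing_resolution_ps: float) -> float:
    """Position uncertainty along the line of response: dx = c * dt / 2."""
    return C_MM_PER_PS * timing_resolution_ps / 2.0


def tof_is_useful(bore_diameter_mm: float, timing_resolution_ps: float) -> bool:
    """ToF only adds information if it localises the event to less than the bore."""
    return tof_position_uncertainty_mm(timing_resolution_ps) < bore_diameter_mm


if __name__ == "__main__":
    timing_ps = 400.0  # hypothetical detector timing resolution
    bores = [("human-scale bore", 1000.0), ("small-animal bore", 50.0)]
    for label, bore_mm in bores:
        dx = tof_position_uncertainty_mm(timing_ps)
        verdict = "useful" if tof_is_useful(bore_mm, timing_ps) else "not useful"
        print(f"{label}: bore {bore_mm:.0f} mm, uncertainty {dx:.1f} mm -> {verdict}")
```

Under these assumptions the timing uncertainty corresponds to roughly 60 mm, a small fraction of a one-metre bore but larger than the whole of a mouse-sized one, which is consistent with Harvey's remark.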

Transmission standards

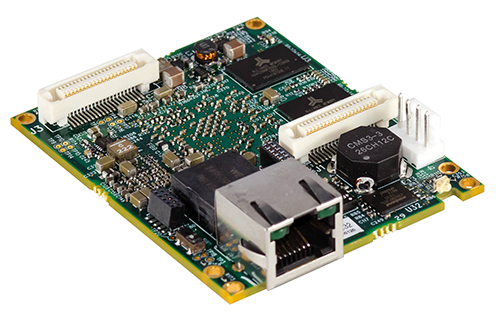

The use of real-time imaging is becoming more common in medical practices, and this, coupled with more accurate sensors and techniques, means data transmission can be a bottleneck.

Ed Goffin of Pleora, which develops video interfaces for the medical market, said: ‘Medical imaging technology is evolving from a snapshot system, to using a continuous x-ray to capture movement of a body part or a surgical instrument. These real-time demands require increased bandwidth support between the imaging device, processing unit, and a display panel.’

This sentiment is backed up by Hamamatsu’s Harvey, who said that data rates can be huge: ‘With PET, there are thousands of pixels surrounding the patient and it’s happening at a fast rate. Similarly, for applications like digital pathology, using sCMOS cameras file sizes are gigabytes, with z stack, [which] allows you to zoom in and still have a focused image.’

Pleora works predominantly with GigE for medical imaging because of the flexibility and reach of the cabling, and the ability to trigger image acquisition via IEEE 1588, all of which simplifies the overall design.

Goffin pointed out that the benefits of increased bandwidth aren’t purely about better images, but also about reducing the radiation exposure to the patient. Pleora is working with manufacturers designing 2.5Gb/s and 5Gb/s NBASE-T over GigE Vision connectivity into flat panel detectors, as well as investigating the use of multi-source image data over 10Gb/s Ethernet for 3D imaging.

3D printed prostheses

As resolutions increase, so does the ability to use the data more meaningfully. Recent reviews, such as those by Malik et al published in The Journal of Surgical Research, and Marro et al published in Current Problems in Diagnostic Radiology, have highlighted that the resolution of tomographic images is now high enough to create 3D-printed models from them, for tasks such as preoperative planning of complex surgeries, the creation of custom prostheses, and training physicians.

As per Marro’s review, data for generating these images for models typically comes from CT or MRI scans, although ultrasound can also be used. Resolution might be in the range of slice thicknesses of 0.5-1mm to reconstruct a model for maxillofacial surgery, for instance, whereas models of the pelvis and long bones can use slice thicknesses up to 2mm, the paper suggests.

Hamamatsu’s Harvey also said this was very visible at the International Dental Show in March in Cologne, Germany, with many stands having 3D printers. ‘We saw 3D printing was huge, making fillings, crowns, replacement teeth. A lot of this requires imaging; [the] jaw needs X-rays [and] on the dental side it’s huge.’

Hamamatsu is actively involved in the development of flat panel CMOS sensors for digital radiography. Harvey said the detail from a 3D composite image taken with flat panel CMOS sensors is fantastic. ‘I can perfectly see them being able to do 3D printing in the future.’

Adapting industrial vision cameras

Standard industrial vision cameras are now commonly used in medical applications, according to Ulli Lansche of Matrix Vision. Dentistry, for example, uses USB cameras, as do skin screening systems for analysing spots on skin for signs of cancer, Lansche noted. Another future imaging trend, according to Lansche, is remote diagnosis, to allow a doctor to examine the patient without the patient having to travel to the surgery.

Matrix Vision is currently working with Simi Reality Motion Systems and the gait laboratory at the Rummelsberg Hospital in Germany, which has created the world’s first image-based diagnostic system for gait disorders. The technology allows the hospital to diagnose these disorders quickly.

The system uses eight high-speed cameras running the Sony Pregius IMX174 sensor to record patients pacing up and down a 15 metre-long track, with special markers attached to specific points on their bodies. Live and recorded footage is used during the planning stage in the outpatient department, in the operating theatre, or when producing custom-fit aids in the hospital’s internal orthopaedic workshop.

Each of the system’s MvBlueCougar-XD cameras outputs 1,080 x 1,920 pixel 8-bit images at 30fps, totalling about 500MB/s across the eight cameras, with data transmitted up to eight metres to the PC positioned half-way down the track. This exceeds not only USB 3.0’s interference-free range of four metres, but also GigE’s 120MB/s transmission speeds. The system uses link aggregation to bundle two GigE cables into one, and relies on the camera’s 256MB image memory to compensate for the lack of bandwidth.
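The 500MB/s figure can be checked with simple arithmetic. The sketch below takes the resolution, bit depth, frame rate, camera count and the 120MB/s GigE figure from the text; the function names are illustrative, and the calculation ignores protocol overhead.

```python
# Minimal sketch: raw data rate of the gait-analysis camera system.
# Figures come from the article; protocol overhead is ignored.


def camera_data_rate_mb_s(width_px: int, height_px: int,
                          bits_per_pixel: int, fps: float) -> float:
    """Uncompressed data rate of one camera in megabytes per second."""
    bytes_per_frame = width_px * height_px * bits_per_pixel / 8
    return bytes_per_frame * fps / 1e6


def system_data_rate_mb_s(cameras: int, per_camera_mb_s: float) -> float:
    """Combined data rate when all cameras stream at once."""
    return cameras * per_camera_mb_s


if __name__ == "__main__":
    gige_mb_s = 120.0  # single GigE link, as quoted in the article
    per_camera = camera_data_rate_mb_s(1920, 1080, 8, 30)
    total = system_data_rate_mb_s(8, per_camera)
    print(f"per camera: {per_camera:.1f} MB/s")
    print(f"eight cameras: {total:.1f} MB/s")
    print(f"single GigE links needed for the total: {total / gige_mb_s:.1f}")
```

Each camera produces roughly 62MB/s, so the quoted 500MB/s is the combined output of all eight cameras, a little over four times what a single 120MB/s GigE link can carry.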

According to Lansche, as well as technical considerations such as the need for surgical steel housings and the ability to cope with wet environments, one of the key issues is sensor and display calibration, especially for applications such as remote diagnosis. ‘There are, for example, many different shades of red, so it’s really important that the camera snaps the same colour as the human eye [for a doctor to make an accurate diagnosis],’ he observed.

Robotic surgery

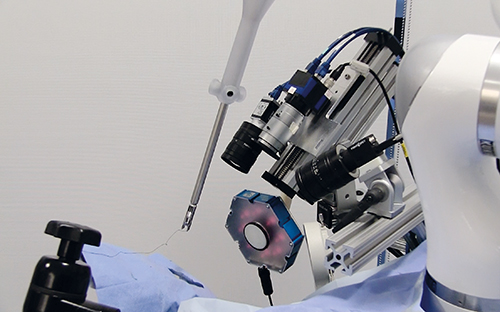

One potential future use of industrial and nuclear imaging system advances is for greater automation in the operating theatre. Limited robotic automation is already used in surgery involving rigid structures such as bones, according to Johns Hopkins University, although stitching soft tissue, which can move and change shape, is more difficult to automate, requiring a surgeon’s skill to keep suturing as tight and even as possible. The university reports 44.5 million soft tissue surgeries are performed in the United States each year.

The Smart Tissue Autonomous Robot, developed by Johns Hopkins University and Sheikh Zayed Institute for Pediatric Surgical Innovation, is a robot designed for stitching soft tissue

A move to more automated systems promises many benefits, particularly in efficacy and safety. Aside from algorithm development, what challenges might such systems face? According to Pleora’s Goffin and Matrix Vision’s Lansche, the challenges will be very similar to those faced by industrial applications. Goffin noted: ‘There are three interconnected areas to address for applications such as image-guided or robotic surgery. One is bandwidth, and the need to transmit data-intense uncompressed video between imaging sources and a processing platform. Closely tied with this is latency. In image-guided surgery, there can’t be any distinguishable delay between what a surgeon sees on a display panel and the surgical tools. Third is processing and analysis, where the computing platform has to keep pace with both the bandwidth and low latency demands of real-time imaging applications.’

Lansche agreed: ‘[Cameras need] reliable image transfer with no losses. For this it’s important that cameras have an internal image memory to buffer images if the interface has a bandwidth bottleneck.’
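How long an internal image memory can cover a bandwidth bottleneck is straightforward to estimate. In the sketch below, the 256MB memory and 120MB/s link figures are taken from the gait-laboratory system described earlier, while the 200MB/s burst rate is a purely hypothetical value chosen for illustration.

```python
# Minimal sketch: how long a camera's image memory can absorb a bandwidth shortfall.
# The 200 MB/s burst rate below is hypothetical, not a figure from the article.


def seconds_until_overflow(buffer_mb: float, source_mb_s: float,
                           link_mb_s: float) -> float:
    """Time before the buffer fills when the sensor outpaces the interface."""
    deficit_mb_s = source_mb_s - link_mb_s
    if deficit_mb_s <= 0:
        return float("inf")  # the link keeps up, so the buffer never fills
    return buffer_mb / deficit_mb_s


if __name__ == "__main__":
    buffer_mb = 256.0   # camera image memory, from the article
    link_mb_s = 120.0   # single GigE link, from the article
    burst_mb_s = 200.0  # hypothetical sensor burst rate
    t = seconds_until_overflow(buffer_mb, burst_mb_s, link_mb_s)
    print(f"buffer absorbs the burst for about {t:.1f} s")
```

With these numbers the buffer lasts a few seconds, which suggests that on-camera memory suits short bursts rather than a sustained shortfall.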

Johns Hopkins’ computer science department has teamed up with the Washington, DC-based Sheikh Zayed Institute for Pediatric Surgical Innovation to investigate how the operating room may someday be run by robots, with surgeons overseeing their moves. Writing in the journal Science Translational Medicine, the academics stated: ‘Autonomous robotic surgery – removing the surgeon’s hands – promises enhanced efficacy, safety, and improved access to optimised surgical techniques. Surgeries involving soft tissue have not been performed autonomously because of technological limitations, including lack of vision systems that can distinguish and track the target tissues in dynamic surgical environments, and lack of intelligent algorithms that can execute complex surgical tasks.’

Interface boards, such as this one from Pleora, need increased bandwidth to support real-time medical imaging

The researchers have developed a plenoptic three-dimensional and near-infrared fluorescent imaging system, as well as an autonomous suturing algorithm, which together form the Smart Tissue Autonomous Robot (STAR). Once the robot was built, the team compared its work against that of five surgeons completing the same procedure using three methods: open surgery, keyhole surgery and robot-assisted surgery.

The researchers compared the consistency of suture spacing, the pressure at which the seam leaked, the number of mistakes that required removing the needle from the tissue or restarting the robot, and completion time. The robot took longer (35 to 57 minutes) than open surgery (8 minutes) and robot-assisted surgery, but was comparable to the keyhole procedure. By all other measures, the robot’s performance was comparable to or better than the surgeons’.

According to the paper: ‘No significant differences in erroneous needle placement were noted among all surgical techniques … suggesting that STAR was as dexterous as expert surgeons in needle placement.’

With 30 per cent of the 232 million operations that take place globally having complications, according to the researchers, will STAR be used in operating theatres imminently? And will it replace surgeons? The answer to the first is not yet clear; however, the answer to the second is ‘absolutely’. Speaking at last year’s Wired Health series of TED-like talks, cancer specialist Shafi Ahmed highlighted that ‘five billion people do not have access to safe and affordable surgery’. ‘We need to produce 143 million operations and train 2.2 million surgeons … [which would] save 17 million lives every year … more than deaths from HIV, malaria and TB put together.’ According to Ahmed, the technology is still a way off, but it’s only a matter of time before robots – guided by advanced vision systems – replace surgeons.