On 19 December, if all goes to plan, a camera with a resolution of one billion pixels will be launched into space as part of the European Space Agency’s Gaia mission to survey our Galaxy. The camera, comprising a mosaic of 106 CCDs covering an area of 0.38 square metres, has the highest pixel count of any imaging device ever sent into space. Gaia will plot the positions of around one billion stars during its five-year mission.

Gaia’s focal plane uses CCDs that read out in time delay and integration (TDI) mode for imaging very faint light sources like distant stars. This in itself is not particularly new technology, but the challenge with Gaia was the scale of the focal plane array, according to David Morris, chief engineer for space imaging applications at e2v. The company, based in Chelmsford, UK, fabricated the image sensors for the Gaia spacecraft.

He said: ‘For us, the challenge was going from a typical space programme that might have a maximum of perhaps 10 detectors to one that had more than 100. The scaling-up we had to do in terms of infrastructure for assembly and test was quite significant.’

Morris added that the project has increased the company’s capacity for supplying detectors to the space industry, and that much of e2v’s infrastructure, in terms of clean rooms for semiconductor fabrication, was put in place to cope with the volume of CCD production for Gaia.

The mission requires such a large imaging device in order to see a lot of stars – one billion mapped over five years. ‘We can only do that with a large camera,’ explained Giuseppe Sarri, Gaia project manager at the European Space Agency. The mission has a finite lifespan, so observing this many stars needs a large imaging focal plane. Gaia will not take pictures in the traditional sense; instead, the spacecraft will spin and the camera will build up a map of the Galaxy based on the 3D coordinates of each star. Each star will be imaged approximately 70 times during the lifetime of the mission.

The device is able to image magnitude 20 objects; i.e. it can perceive an object around 400,000 times fainter than the faintest visible to the naked eye. This will allow the instrument to pinpoint stars that appear very faint, whether they are intrinsically dim stars close by or bright stars far away. The CCDs onboard Gaia can convert photons into electrical charge at an efficiency of up to 90 per cent, according to Sarri, which is vital for imaging the light from faint stars.
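The 400,000 figure follows from the astronomical magnitude scale, on which a difference of five magnitudes corresponds to a factor of 100 in brightness. The arithmetic can be sketched as below, assuming a naked-eye limit of about magnitude 6 (a commonly quoted dark-sky value, not one given by Sarri); the function name is purely illustrative.

```python
def flux_ratio(mag_faint, mag_bright):
    """Return how many times fainter mag_faint is than mag_bright.

    Uses the standard magnitude relation: 5 magnitudes = a factor of 100.
    """
    return 10 ** (0.4 * (mag_faint - mag_bright))


NAKED_EYE_LIMIT = 6.0   # assumed dark-sky naked-eye limit (not from the article)
GAIA_LIMIT = 20.0       # limiting magnitude quoted in the article

ratio = flux_ratio(GAIA_LIMIT, NAKED_EYE_LIMIT)
print(f"about {ratio:,.0f} times fainter")
```

With these assumed inputs the ratio comes out close to 400,000, consistent with the figure quoted above.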

The camera is so precise it can measure the degree to which starlight is deflected by the gravity of the planets and the Sun. The precision required to measure this effect is typically around 10 to 30 microarcseconds, depending on the magnitude of the star, noted Sarri. A microarcsecond is a unit of angle, one millionth of an arcsecond; 10 microarcseconds is roughly the angular size of a €1 coin on the Moon, viewed from Earth.
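The coin comparison can be checked with the small-angle approximation, in which the angle in radians is simply size divided by distance. The sketch below assumes a coin diameter of 23.25mm and a mean Earth-Moon distance of 384,400km; neither value is given in the article, and the function name is illustrative.

```python
import math

COIN_DIAMETER_M = 0.02325   # assumed diameter of a 1 euro coin (23.25 mm)
MOON_DISTANCE_M = 3.844e8   # assumed mean Earth-Moon distance (384,400 km)


def angular_size_microarcsec(size_m, distance_m):
    """Small-angle approximation: angle in radians = size / distance."""
    angle_rad = size_m / distance_m
    angle_arcsec = math.degrees(angle_rad) * 3600.0
    return angle_arcsec * 1e6


coin_angle = angular_size_microarcsec(COIN_DIAMETER_M, MOON_DISTANCE_M)
print(f"{coin_angle:.1f} microarcseconds")
```

Under these assumptions the coin subtends a little over 12 microarcseconds, the same order as the 10 microarcsecond precision quoted.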

Gaia will not only map the coordinates of the stars in the Milky Way, but will also measure their movement. This will allow the scientists to calculate the path the star has taken in the past and its path in the future, which will improve the understanding of the evolution and formation of the Galaxy.

‘The Galaxy is not only an object in which stars are rotating around a black hole in the centre, but there are groups of stars that have particular motions,’ explained Sarri.

Each of Gaia’s CCDs has a resolution of 4,500 x 2,000 pixels, with the 4,500-pixel direction being the TDI portion. A TDI sensor has the benefit of an increased integration time without degrading the resolution of the imager. ‘The sensitivity of the instrument is effectively enhanced by 4,500 times over what it would be if a single image were taken,’ explained Morris.
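To illustrate how TDI builds up signal, the toy model below moves a one-dimensional strip of sky across a column of CCD stages and shifts the charge packets in step with the image, so each packet keeps collecting light from the same patch of sky. It is a simplified sketch with no noise, smearing or saturation, not a model of e2v’s device; `tdi_readout` and its parameters are hypothetical names.

```python
def tdi_readout(scene, n_stages):
    """Simulate one TDI column.

    scene[i] is the light collected from sky element i while it sits
    over a single stage. Returns the charge read out per sky element.
    """
    charge = [0.0] * n_stages          # charge packet held in each stage
    out = []
    n_steps = len(scene) + n_stages - 1
    for t in range(n_steps):
        # Expose: at step t, stage r sits under sky element t - r.
        for r in range(n_stages):
            s = t - r
            if 0 <= s < len(scene):
                charge[r] += scene[s]
        # Shift: the last stage is read out, the rest move on one stage,
        # keeping each packet under the same sky element.
        readout = charge[-1]
        charge = [0.0] + charge[:-1]
        if t >= n_stages - 1:          # earlier readouts hold no sky signal
            out.append(readout)
    return out


signal = tdi_readout([1.0, 0.0, 2.5], n_stages=4)
print(signal)
```

Each sky element is read out with as many times its single-stage signal as there are stages; in this idealised model, 4,500 stages would give the 4,500-fold accumulation Morris describes.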

The CCD has specific features incorporated to negate the influence of space radiation. E2v also developed specific packaging and mounting for each CCD, such that individual sensors could be butted close together in the focal plane array. The imaging area is almost exactly the same size as the packaging because the sensors can be joined extremely precisely.

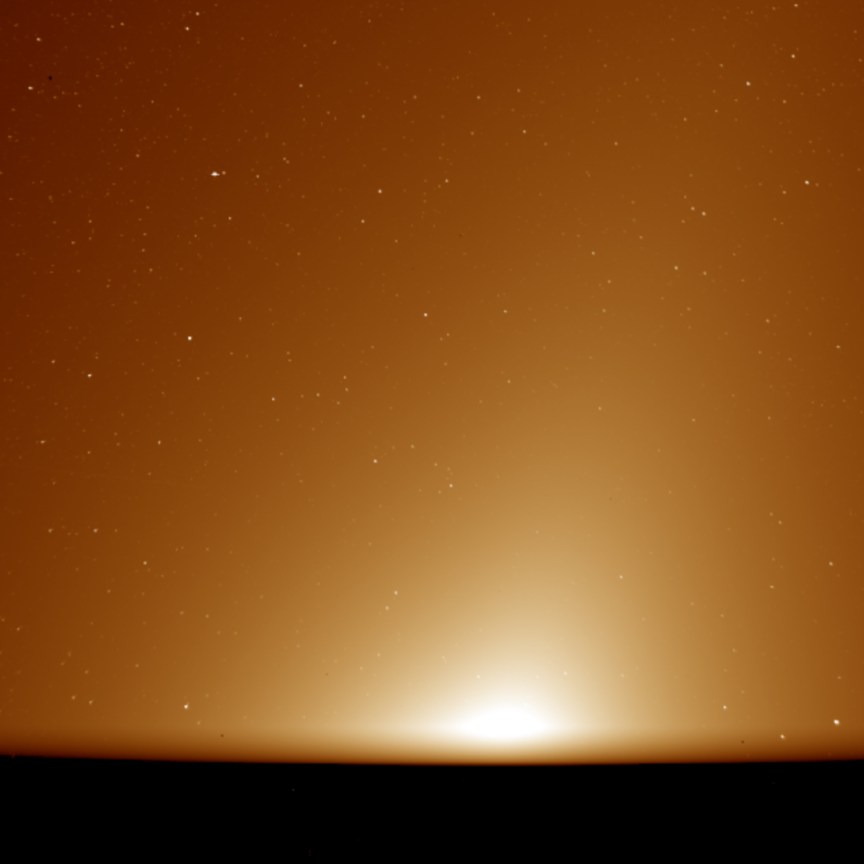

Artist's impression of the Gaia spacecraft, with the Milky Way in the background. Credit: ESA/ATG medialab; background image: ESO/S. Brunier

The sensors are also very flat so that, when mounted together on the focal plane, they create a very flat, precisely aligned mosaic of detectors. ‘In the end, the biggest challenge was not necessarily the design of the detector itself, but combining that with the complex design of the packaging to get the thermal, mechanical and optical interfaces appropriate for mounting in this type of focal plane. It’s a relatively complex job,’ commented Morris.

E2v first became involved in the Gaia project in 1997. ‘Gaia moved us [e2v] forward in terms of what materials to use for packaging the focal plane and how to make the electrical interface for the device,’ Morris continued. ‘We’ve carried on using the technique for butting the sensors together in many other programmes, both for other space missions but also quite widely now for large-scale ground-based astronomy applications.’

Currently e2v has a portfolio of around 50 projects, almost all of which are for space instrumentation. The next big programme the company is working on for ESA is for a science mission called Euclid, which will be a large space-based telescope studying dark energy and dark matter.

Solar power

E2v’s sensors are also onboard NASA’s Interface Region Imaging Spectrograph (IRIS) spacecraft, which has recently captured its first images of the Sun. The mission will observe how solar material moves, gathers energy and heats up as it travels through the Sun’s lower atmosphere.

IRIS is a high-precision instrument designed for gathering scientific data, but amateur astronomers studying the sky can provide valuable information to the astronomy community. Alan Friedman is one such amateur astronomer, whose photographs are exhibited in many space imaging galleries, but, he says, there is a scientific aspect to his pictures. ‘They are powerful images... but they are scientifically accurate as well as artistically rendered,’ he said.

He commented that it’s difficult for large observatories to study the Moon or the planets in our solar system, because their time is committed years in advance for various research programmes. However, amateur astronomers can occasionally discover things that are not seen by professional observatories simply because the large observatories are not looking. Jupiter, for instance, has had a number of impacts from asteroids and other objects striking its surface in recent years that were noticed by amateurs.

Integration of Gaia’s CCDs onto the CCD support structure. Credit: Astrium

Friedman has photographed the planets and the Moon, but he is most recognised for his images of the Sun, which he takes from a telescope in his back garden in Buffalo, USA. One of his pictures, a close-up of magnetic regions on the surface of the Sun, was runner-up in this year’s Astronomy Photographer of the Year exhibition, which is currently being shown at the Royal Observatory in Greenwich, London, UK.

‘I have small telescopes and a busy life, so the morning look at the sun has worked out well for me,’ he recalled. ‘I have been able to develop some techniques for image processing that at one point looked quite different to what other people were doing.’

Friedman attenuates the light from the Sun by placing a piece of Mylar film in front of the telescope. He also uses special filters, such as a hydrogen alpha filter, which brings out certain features like prominences (gaseous flares extending from the Sun’s surface) or the texture of the chromosphere.

Friedman uses cameras from Point Grey to capture his images in a technique called lucky imaging (for more on lucky imaging, see panel). This is where a stream of images is taken, with the ones least affected by blurring from the atmosphere combined into one high-quality image.

‘It’s the streaming feature of the camera which is most important,’ commented Friedman. ‘The biggest problem in doing any of this high resolution imaging through the atmosphere is the turbulence of the atmosphere. It’s like looking through a pond when someone throws a stone in it and trying to see the bottom. The atmosphere looks transparent, but it really messes with the image. The streaming camera allows me to take many frames in a short period of time.’ He added that, if he had to fire a shutter for each exposure, he would have to time it to coincide with a moment of stability in the atmosphere, and the chances of doing so are very slim.

The image for the Astronomy Photographer of the Year exhibition used a Point Grey Flea camera operating at 120 frames per second. Friedman spent three hours waiting for a couple of decent moments of stability in the atmosphere for the image. ‘That image is made from data collected within two or three seconds after looking for three hours. It’s mostly a hunt for stability and that’s where the streaming camera is important,’ he said. He added that the higher resolution of a monochrome sensor compared to a colour chip is also an advantage with the Point Grey camera.

Once he’s captured his images, Friedman then uses software to analyse individual frames and rank them in order of quality. The sharpest frames are averaged together to lessen the digital noise and improve the signal. ‘You have a much nicer signal-to-noise ratio once you’ve stacked the images,’ he said. ‘Then you can process them, sharpen them, and draw out the details that might not be visible in each individual frame but is in the sum of them.

‘One of the things that was recognised in my work initially when I was first noticed, was that I usually take the chromospheric detail of the Sun and invert it,’ he continued, which makes certain features easier to see.

‘It presents the Sun in a different light than it is normally presented. I continue to try and make the images tell an interesting story of the Sun... but being faithful to the reality of it so that it has scientific meaning.’
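The rank-and-stack workflow Friedman describes can be sketched in a few lines. The article does not name his software or its quality measure, so the sharpness metric here (summed squared differences between neighbouring pixels) and the names `sharpness` and `lucky_stack` are assumptions for illustration only; real lucky-imaging tools also align the frames before averaging, which this sketch omits.

```python
def sharpness(frame):
    """Assumed quality metric: sum of squared horizontal pixel differences.

    Blurred frames have smoother gradients and therefore score lower.
    """
    return sum(
        (row[i + 1] - row[i]) ** 2
        for row in frame
        for i in range(len(row) - 1)
    )


def lucky_stack(frames, keep_fraction=0.1):
    """Rank frames by sharpness and average the best fraction of them."""
    ranked = sorted(frames, key=sharpness, reverse=True)
    n_keep = max(1, int(len(ranked) * keep_fraction))
    best = ranked[:n_keep]
    height, width = len(best[0]), len(best[0][0])
    return [
        [sum(f[y][x] for f in best) / n_keep for x in range(width)]
        for y in range(height)
    ]


# Three tiny one-row "frames": two sharp, one smeared by turbulence.
frames = [[[0, 10, 0]], [[3, 4, 3]], [[0, 8, 0]]]
stacked = lucky_stack(frames, keep_fraction=0.67)
print(stacked)
```

Averaging only the sharpest frames keeps the detail while reducing the random noise, which is the improvement in signal-to-noise ratio that Friedman refers to.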

Lucky imaging, when combined with low-order adaptive optics, should deliver images at visible wavelengths on the largest ground-based telescopes that are even better than those from the Hubble Space Telescope, says Craig Mackay at Cambridge University’s Institute of Astronomy.

The Hubble Space Telescope (HST) has delivered images with a resolution eight times higher than can normally be achieved from the ground, even on the best astronomical sites. These images have revolutionised almost every branch of astronomy. As the HST nears the end of its life, astronomers will increasingly look to instrument builders to provide cameras capable of imaging with comparable or even better resolution than Hubble.

Unfortunately, atmospheric turbulence normally limits ground-based angular resolution to about one arcsecond. Adaptive optics systems try to measure the atmospheric distortion of the wavefront entering the telescope and correct for it, but this is difficult to do in the visible spectrum.

With modern CCD detectors, an old technique called ‘lucky imaging’, whereby images are taken fast enough to freeze the motions of stars and then combined to achieve higher image quality, can give results that are as sharp as HST images on HST-sized (~2.5m diameter) telescopes. The challenge then is to extend these methods to the larger telescopes, which are essential if we are to get even higher angular resolution.

Comparison images of the core of the globular cluster M 13. Natural seeing (~0.65 arcsec) on the Palomar 5m telescope (left); Hubble Advanced Camera for Surveys with ~120 milliarcsecond resolution (middle); and Lucky Camera plus Low-Order AO image with 35 milliarcsecond resolution (right).

Lucky imaging will not work on larger (>2.5m) telescopes, because the chance of finding a sharp image becomes vanishingly small. In Cambridge, we have been developing a new type of wavefront sensor that is particularly sensitive to large turbulent scales.

What we want to do is work out the instantaneous curvature of the distorted wavefront from a faint reference star. By looking at the intensity of the light from that star on either side of a conjugate pupil plane we see that the wavefront is diverging if a speckle goes from bright to dark as it passes through the pupil and converging otherwise. We can only measure intensity and this makes the propagation non-linear. So, in practice, it is better if we measure the intensity at four planes, two on each side of the pupil. These are measured at around 100Hz and the wavefront distortion computed in real time. The fitted wavefront is then scaled and used to drive a deformable mirror in the optical path to compensate for the errors. This lets us substantially reduce the effects of large-scale turbulence and restores the viability of the lucky imaging method.
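The sign test described above can be illustrated with the simplest two-plane case: the normalised difference between the intensity measured before and after the pupil. This is only a sketch of the basic idea; the instrument itself fits four planes with a non-linear method, and the function names and sample values are hypothetical.

```python
def curvature_signal(i_before, i_after):
    """Normalised intensity difference between the two measurement planes."""
    total = i_before + i_after
    if total == 0:
        return 0.0                      # no light, no information
    return (i_before - i_after) / total


def classify(i_before, i_after):
    """Apply the rule from the text: bright to dark means diverging."""
    signal = curvature_signal(i_before, i_after)
    if signal > 0:
        return "diverging"
    if signal < 0:
        return "converging"
    return "flat"


print(classify(9.0, 1.0))   # speckle dims through the pupil
print(classify(1.0, 9.0))   # speckle brightens through the pupil
```

In a real system a map of such signals across the pupil would be fitted to a wavefront shape, which is the step that drives the deformable mirror.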

The technique has already been demonstrated with a conventional adaptive optics (bright reference star) system on the Palomar 5m telescope to give the highest-resolution images ever obtained anywhere in the visible region.

We are fortunate to have been funded by the UK Science and Technology Facilities Council (STFC) to build the next-generation instrument, called AOLI, an Adaptive Optics Lucky Imager. The construction of an instrument that will work on much fainter reference stars requires very high quality optics and a fair amount of clever engineering. The field of view of these instruments is small so the drive for faint reference stars is key to enabling the largest fraction of the sky to be imaged with these techniques.

The constraints on the quality of the optical components that we use are considerable. We need to achieve near diffraction-limited imaging over a wide wavelength range (500 to 1,000nm) and so need to use high-quality components. In addition, the mechanical mounts that we use must be robust while still allowing a good degree of adjustment. The telescope environment is rather difficult with the extremes of temperature and humidity. All the components and mechanisms must work reliably and consistently for many years with minimal attention or adjustment.

We have been very fortunate to receive a substantial award from Edmund Optics following an international competition for the most exciting and innovative optics project. This will allow us to obtain the range of optical components and mounts that are key to the success of the project.

Initially we will install the instrument on the 4.2m William Herschel Telescope on La Palma in the Canary Islands, and then on the much larger GTC, also on La Palma. At 10.5 metres in diameter, the GTC should give us an angular resolution of up to 15 milliarcseconds, more than a factor of 60 better than can normally be achieved from the ground. The system will give scientists new insights into a very wide range of astronomical problems and will, we hope, provide a revolution in astronomical imaging comparable to that of Hubble.
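As a rough check on these numbers, the diffraction limit of a telescope can be estimated from its aperture and the observing wavelength. The sketch below assumes the Rayleigh criterion (1.22 times wavelength over diameter), which is a textbook approximation rather than the AOLI team’s own performance model, and evaluates it at the two ends of the 500 to 1,000nm range mentioned earlier; the function name is illustrative.

```python
import math

RAD_TO_MAS = math.degrees(1.0) * 3600.0 * 1000.0   # radians to milliarcseconds


def diffraction_limit_mas(wavelength_m, aperture_m):
    """Rayleigh criterion: theta = 1.22 * wavelength / aperture diameter."""
    return 1.22 * wavelength_m / aperture_m * RAD_TO_MAS


for name, diameter_m in [("WHT", 4.2), ("GTC", 10.5)]:
    for wavelength_nm in (500, 1000):
        limit = diffraction_limit_mas(wavelength_nm * 1e-9, diameter_m)
        print(f"{name} at {wavelength_nm} nm: {limit:.0f} mas")

# One arcsecond of typical seeing compared with 15 milliarcseconds.
seeing_gain = 1000.0 / 15.0
print(f"gain over 1 arcsecond seeing: {seeing_gain:.0f}x")
```

For a 10.5m aperture this estimate gives about 12 milliarcseconds at 500nm and twice that at 1,000nm, bracketing the 15 milliarcsecond figure, while one arcsecond divided by 15 milliarcseconds gives the factor of more than 60.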

--

Craig Mackay’s team won the European section of Edmund Optics’ (EO) 2013 Higher Education Global Grant Program, being awarded €7,000 worth of EO products. The award is given in recognition of outstanding undergraduate and graduate optics programmes, and Edmund Optics awarded more than $85,000 USD in EO products to winners in the Americas, Asia, and Europe in support of research and education activities.