Unless your camera is peering back at the Earth or staring at the Sun, imaging in space generally involves trying to capture very faint images of very distant objects with very low light. This means you need very big pixels and long integration times. What’s more, your imager has to survive the buffeting of launch and then operation in space, a bitterly cold, radiation-filled environment.

Industrial imaging, in contrast, is mostly done in a safe, generally radiation-free warehouse with steady and abundant artificial light, rapidly capturing details of successions of nearby objects. As in many consumer applications, very small pixels and short integration times are the watchwords for machine vision in an industrial setting. But despite these glaringly different requirements, space and industrial imaging have crossed paths on many occasions, often to dazzling effect.

Space to ground and back again

NASA’s Hubble Space Telescope has been churning out jaw-dropping images of the cosmos since the 1990s. Though somewhat overshadowed now by the James Webb Space Telescope, the charge-coupled device (CCD) sensors inside Hubble’s two primary camera systems – the Advanced Camera for Surveys (ACS) and the Wide Field Camera 3 (WFC3) – continue to send breath-taking views of the heavens and important scientific data down to Earth. So it is bizarre to think that mission planners once seriously considered using old-fashioned film, and sending astronauts up and down regularly to retrieve it.

Fortunately, CCDs were a fairly mature technology by the end of the 1980s, when Hubble was being prepared for its 1990 launch. The first CCD digital camera was created in 1975 by Steven Sasson at Eastman Kodak and the first camcorder – Sony’s CCD-V8 8mm – launched in 1985. Sense won out and NASA fitted Hubble with bespoke versions of the latest CCD technology. Hubble’s spectacular images of the distant universe helped popularise CCDs, which went on to replace film in a host of different applications.

In 1993, just as Hubble was receiving its first human visitors to restore its blurred vision – marred by spherical aberration in its mirror since first light – a different NASA team at the Jet Propulsion Laboratory was creating a revolutionary new imaging technology: the complementary metal oxide semiconductor (CMOS) sensor.

Led by Eric Fossum, the team was aiming to significantly miniaturise NASA’s future cameras yet maintain their scientific image quality. Their CMOS active-pixel sensor (CMOS-APS) consumed as much as 100 times less power than CCDs, allowed for smaller camera systems and could be manufactured by the same low-cost process used to build microprocessors and other semiconductor devices.

Fossum soon realised the technology held as much promise on Earth as it did in space. He and colleagues founded Photobit in 1995. By June 2000, Photobit had shipped 1 million CMOS sensors for use in popular web cameras, machine vision solutions, dental radiography, pill cameras, motion-capture and automotive applications. With the advent of camera phones, in 2008 the company (after acquisition and spin-out, then called Aptina Imaging Corporation) shipped its 1 billionth sensor.

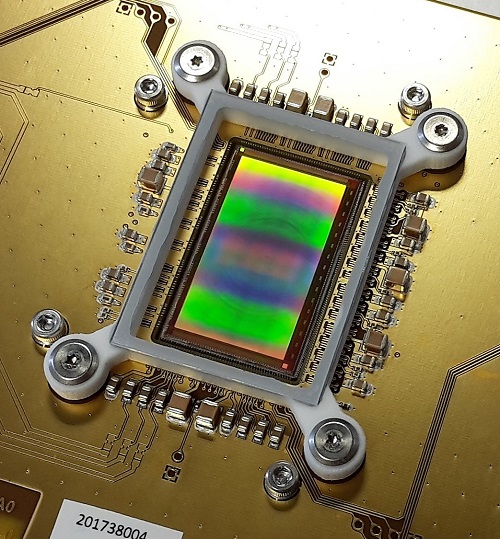

The CMOS revolution was a boon to both terrestrial applications and space-based ones, sometimes with the two overlapping. “The CMOS image sensor for the Mars Perseverance Rover was initially developed for traffic monitoring,” says Professor Guy Meynants (KU Leuven, Belgium), an expert in industrial and space image sensor design and technology who co-developed the 5,120 x 3,840-pixel resolution sensor in 2007 at the company he co-founded, Cmosis. “That was actually the first really high-resolution global shutter CMOS imager with a decent speed; 30 frames per second.”

NASA's Mars Perseverance rover acquired this image using its onboard right navigation camera (Navcam), one of nine engineering cameras using CMV20000. Credit: NASA/JPL-Caltech

In a global shutter sensor, all the pixels acquire the image at the same moment in time. This is important for autonomous driving on Mars, where all the cameras must capture images at the same moment when the rover is moving to avoid rocks and obstacles. With a more common rolling shutter sensor, like those in webcams or mobile phones, every pixel row has a slightly different exposure period, meaning fast-moving objects appear deformed.
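The difference between the two shutter types can be illustrated with a toy simulation. This is a minimal sketch, not a model of any real sensor: the scene (a vertical bar moving sideways), the function names and the `row_delay` parameter are all assumptions made for illustration. A `row_delay` of zero stands in for a global shutter, while a non-zero value samples each row at a slightly later time, as a rolling shutter does.

```python
import numpy as np

def capture(scene_at, height, width, row_delay):
    """Build a frame row by row.

    scene_at(t) returns the whole scene (height x width) at time t.
    row_delay is the time offset between successive rows:
    0.0 imitates a global shutter, > 0 imitates a rolling shutter.
    """
    frame = np.zeros((height, width))
    for row in range(height):
        # Each row is sampled from the scene as it looks at its own time.
        frame[row] = scene_at(row * row_delay)[row]
    return frame

def moving_bar(height, width, speed, bar_width=2):
    """Hypothetical scene: a vertical bar moving right at `speed` pixels per time unit."""
    def scene_at(t):
        scene = np.zeros((height, width))
        left = int(round(speed * t))
        scene[:, left:left + bar_width] = 1.0
        return scene
    return scene_at

H, W = 8, 16
scene = moving_bar(H, W, speed=1.0)

global_frame = capture(scene, H, W, row_delay=0.0)
rolling_frame = capture(scene, H, W, row_delay=1.0)

# Column at which the bar starts in each row of the captured frame.
global_edges = global_frame.argmax(axis=1)
rolling_edges = rolling_frame.argmax(axis=1)
print("global :", global_edges.tolist())   # same column in every row: bar stays vertical
print("rolling:", rolling_edges.tolist())  # column shifts row by row: bar appears slanted
```

In this sketch the global-shutter frame shows the bar in the same column on every row, whereas the rolling-shutter frame shows it shifted by one column per row, which is the kind of deformation described above.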

Despite its age, the Perseverance sensor – the CMV20000 – is still sold by AMS for a host of applications, including machine vision, 3D imaging, motion capture, and various scientific and medical purposes.

CCDs hang on in space

Today, the CMV20000 is in good company, with CMOS image sensors having overhauled CCDs in virtually every sphere. In industrial vision, for example, Sony (one of the most important international sensor manufacturers) began its phased discontinuation of CCD production as early as 2015.

“CCDs are replaced in most industrial and certainly in almost every commercial application by CMOS image sensors,” confirms Dr Nick Nelms, who has been developing detectors at ESTEC, the European Space Agency (ESA)’s Research and Technology Centre in the Netherlands, for 16 years. “And one reason for that is because they are much easier to manufacture in volume under modern semiconductor processes, they're much easier to operate and you can incorporate a lot more electronic functionality onto the actual detector itself, in terms of the operation and post-processing for instance.”

But CMOS image sensors have not enjoyed the same all-conquering success in space. Several recent missions, including ESA’s Gaia (launched in 2013) and ExoMars Trace Gas Orbiter (2016) and NASA’s Juno (2011) and Lucy (2021), feature CCD detectors. Why?

Frédéric Devrière is Business Marketing Manager at Teledyne e2v, a company that has produced image sensors for over half of ESA’s current fleet and a huge number of other space missions, and owns one of the last remaining CCD foundries in the world, based in Chelmsford, UK. He says that although CCDs are an old technology, they are easy to tweak to the desired requirements and relatively cheap in low volumes.

Moreover, CCDs have some attractive qualities for astronomy: “You have higher voltages to drive pixels, so you can capture far more light and reach some parts of the spectrum that you cannot with standard CMOS image sensors,” he explains. “Astronomers are looking to the near infrared bands above 800 nanometres and with standard CMOS, you see almost nothing in these bands.”

Another key reason is that what astronomers want bears little resemblance to what the CMOS foundries make on a day-to-day basis. “CMOS image sensors made for consumer applications have very small pixels, around a micron, even less sometimes,” Devrière continues. “For astronomy, we go up to 100-micron pixels, which would be very difficult to do in CMOS but can easily be done in CCD.”
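A rough calculation shows why pixel size and integration time matter so much for faint sources. The sketch below assumes an idealised pixel (100% fill factor and quantum efficiency, no read noise or dark current) and a made-up photon flux, so the numbers are purely illustrative. Under those assumptions the photons collected scale with pixel area and integration time, and the shot-noise-limited signal-to-noise ratio is the square root of the photon count.

```python
import math

def collected_photons(flux_per_um2_per_s, pixel_pitch_um, integration_s):
    """Mean photons collected by an ideal square pixel (assumes 100% fill factor and QE)."""
    return flux_per_um2_per_s * pixel_pitch_um ** 2 * integration_s

def shot_noise_snr(photons):
    """Shot-noise-limited SNR: Poisson statistics give SNR = sqrt(N)."""
    return math.sqrt(photons)

FLUX = 0.01  # hypothetical faint source, in photons per square micron per second

# (pixel pitch in microns, integration time in seconds)
cases = [(1.0, 1.0), (100.0, 1.0), (100.0, 100.0)]

for pitch_um, t_s in cases:
    n = collected_photons(FLUX, pitch_um, t_s)
    print(f"pitch={pitch_um:6.1f} um  t={t_s:6.1f} s  "
          f"photons={n:10.2f}  SNR={shot_noise_snr(n):7.2f}")
```

Going from a 1-micron to a 100-micron pitch increases the collecting area by a factor of 10,000, so in this idealised picture the same source yields 10,000 times more photons per exposure and a 100 times better shot-noise-limited signal-to-noise ratio.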

What’s more, CMOS foundries are simply not that interested in short-run bespoke deals when they can make huge profits from churning out sensors for the terrestrial commercial and industrial world. “We're interested in maybe two to four batches of wafers, whereas a CMOS foundry is putting through tens of thousands of wafers per month,” explains Nelms. “Whatever we would order is really of no consequence for them, so we carry very little weight in terms of saying how they should do things – so there is a divergence in what you would call commercial state of the art and what is suitable for us.”

Current cross-pollination

Space imaging falls more closely into step with industrial imaging in Earth observation. Current and future missions require data at higher temporal resolution than CCDs can muster, in the order of hundreds of frames per second. “CHIME [the Copernicus Hyperspectral Imaging Mission for the Environment] is a good example of where they're looking at 200-300 frames per second,” says Nelms. “This is not going to happen with CCDs.” ESA is working with design houses across the EU member states, including Teledyne e2v and Belgian detector developer Caeleste – which also designs high-speed industrial and professional imagers – to provide the high-end, beyond state-of-the-art CMOS image sensors required for this and other missions.

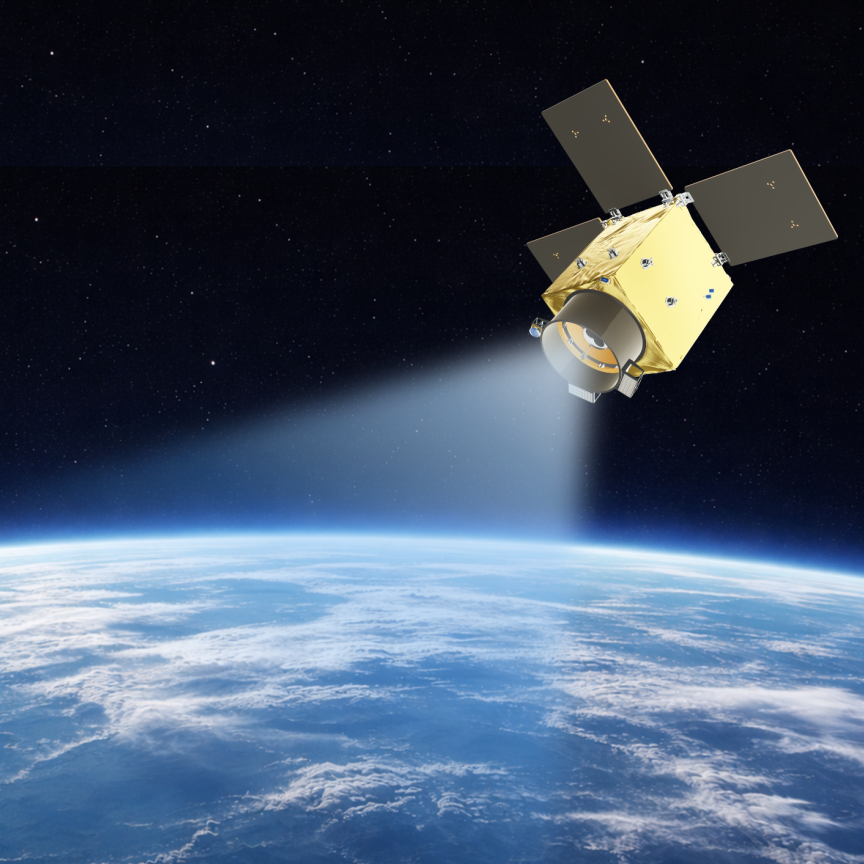

Elsewhere, Teledyne e2v has been answering the demands of the emerging NewSpace market (the private space industry) by taking a commercial off-the-shelf “plus” (COTS+) philosophy, allowing shorter development times and lower costs, while ensuring that quality control remains intact.

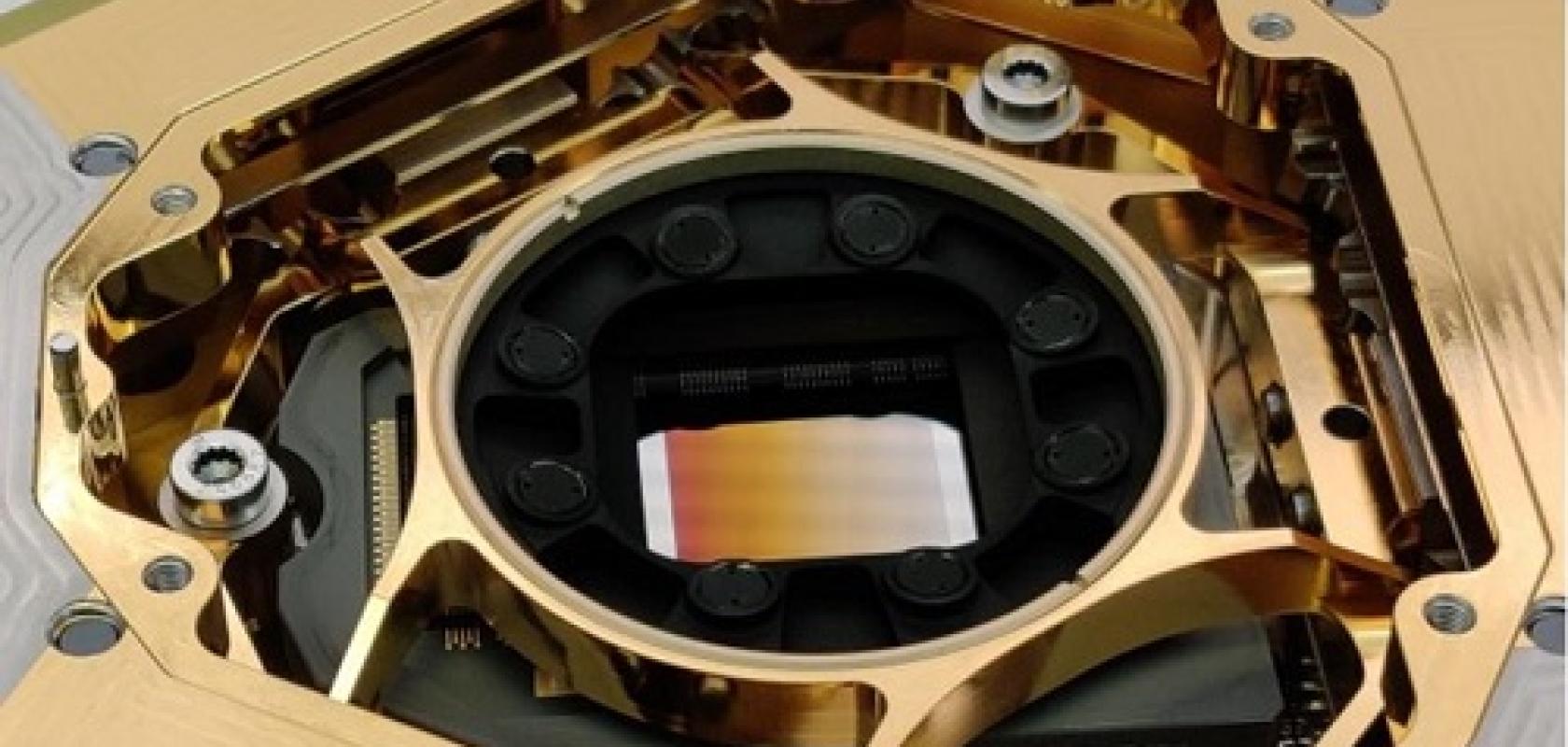

The European Low Flux Image Sensor, a large-format CMOS image sensor for space applications being developed by ESA with industrial partner Caeleste. Credit: Caeleste

“Traditionally, we've been designing sensors for very big satellites that cost a lot of money, meaning they can afford to have very custom designs,” says Devrière. “With NewSpace satellite constellations, costs must be far below $1 million.” Some of Teledyne e2v’s most popular NewSpace COTS+ imagers include 2-3MP star tracker cameras – used to determine the satellite’s orientation in space – that were originally used for security and industrial process control applications on terra firma, and 30-100MP imagers now snapping pictures of Earth from above that were originally used as machine vision systems.

Future trends

Solid-state detectors – particularly CCDs and CMOS sensors – have revolutionised both space and industrial imaging. But they are not the last word. “The perfect detector for astrophysics is one with zero noise, high quantum efficiency, extremely good bandwidth, high dynamic range, easily scalable to large arrays, and does not require cooling,” says Dr Mario Perez, NASA Astrophysics Chief Technologist. “We don’t yet have all of these desirable attributes integrated in one detector.”

Eager to try to meet these demands and better illuminate the photon-starved environment of deep space, NASA is exploring how to detect and extract as much information as possible from single photons. The choice of which kind of single-photon detector to employ on a space telescope will depend on the specific science investigation – the search for signs of life through exoplanet imaging or spectroscopy, understanding galaxy evolution over cosmic time through large-sky surveys, tracing water over protoplanetary evolution, etc – but some exciting candidates are emerging.

“Some of the devices that have shown great progress in meeting a subset of the criteria for an ‘ideal’ single-photon detector are superconducting bolometers, kinetic inductance detectors [KIDs], transition edge sensor [TES] detectors, quantum capacitance detectors [QCDs], and tunnel-junction detectors,” outlines Perez. “But the superconducting nanowire single-photon detector, known as SNSPD, particularly stands out.”

SNSPDs were first demonstrated over two decades ago and consist of a thin, narrow superconducting nanowire patterned to form a square or circular pixel. A bias current just below the maximum supercurrent that the nanowire can sustain is applied to the SNSPD, so that a single photon absorbed by the nanowire can locally break superconductivity, giving rise to a brief voltage pulse that can be detected. Because this internal physical process is inherently very fast, SNSPDs have a high instantaneous bandwidth of detection when compared to other single-photon detectors such as KIDs and TESs.

SNSPDs can be tuned across wavelengths from optical to mid-infrared, and have lower cooling requirements. But their main advantage is that they can measure photon flux (intensity) with nanosecond-level time-of-arrival accuracy, combined with low timing jitter, near-unity quantum efficiency, very low dark count rates, competitive dead times, and in some cases energy or photon-number resolution, and position information. All this adds up to a powerful combination of properties for an astronomical detector. From this, says Perez, researchers can build a 3D data cube representing all the photons arriving on the detector at different times, providing a far richer understanding of whatever cosmic object they happen to be measuring.
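One way to picture such a data cube is as a three-dimensional histogram of photon events. The sketch below assumes the cube's axes are two pixel coordinates and arrival time, and it uses randomly generated events on a made-up 4 x 4 array rather than real detector data. The function name and parameters are hypothetical, and this is not a description of how NASA processes SNSPD output.

```python
import numpy as np

def build_data_cube(x, y, t, shape, t_range, n_time_bins):
    """Bin photon events (pixel x, pixel y, arrival time t) into an (x, y, time) cube of counts."""
    width, height = shape
    edges = [
        np.arange(width + 1) - 0.5,    # one bin per pixel column
        np.arange(height + 1) - 0.5,   # one bin per pixel row
        np.linspace(t_range[0], t_range[1], n_time_bins + 1),  # equal-width time bins
    ]
    cube, _ = np.histogramdd(np.column_stack([x, y, t]), bins=edges)
    return cube

# Hypothetical event list: 1,000 photons on a 4 x 4 array within one microsecond.
rng = np.random.default_rng(0)
n_events = 1000
x = rng.integers(0, 4, n_events)
y = rng.integers(0, 4, n_events)
t = rng.uniform(0.0, 1e-6, n_events)

cube = build_data_cube(x, y, t, shape=(4, 4), t_range=(0.0, 1e-6), n_time_bins=10)

image = cube.sum(axis=2)             # collapse time: a conventional 2D image
light_curve = cube.sum(axis=(0, 1))  # collapse space: total counts per time bin
print(cube.shape, int(cube.sum()))
```

Summing the cube along the time axis recovers an ordinary image, while summing over the spatial axes gives the total count rate over time, so a single event list supports both views.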

In 2013/14, NASA trialled SNSPDs in the first demonstration of laser communication beyond Earth orbit, where the LADEE spacecraft circling the Moon communicated with two ground terminals, both equipped with SNSPD arrays. This opened the possibility of improved deep space optical communication, but where Perez hopes SNSPDs will benefit NASA most is in the agency’s astronomy and astrophysics exploration. “I think the next set of missions five to ten years from now will have single-photon detectors for deep space,” he says. “The problem is that they require very exquisite cryogenics because most of them are superconducting devices – this is being worked on now.”

Already available commercially from the likes of ID Quantique and Hamamatsu, SNSPDs have found use in various industries and research fields, including quantum communications, optics and computing, as well as lidar and spectroscopy. Providing unprecedented low-light sensing, might they be the next big advance in industrial imaging too?