According to the European Commission, transport, and road traffic in particular, accounts for almost a quarter of Europe’s greenhouse gas emissions and is the main cause of air pollution in cities. The Commission’s low-emission mobility strategy aims to tackle this by, among other things, using digital technologies to make transport networks more efficient.

A team at Siemens’ Data Analytics and Application Centre (DAAC) in Munich, Germany, is analysing driver behaviour using large volumes of traffic camera data. According to David Montgomery, implementation manager for the centre, councils could use this information to identify potential accident black spots so that preventative action can be taken. The same prescriptive analytics could also be used to generate recommendations for managing local traffic.

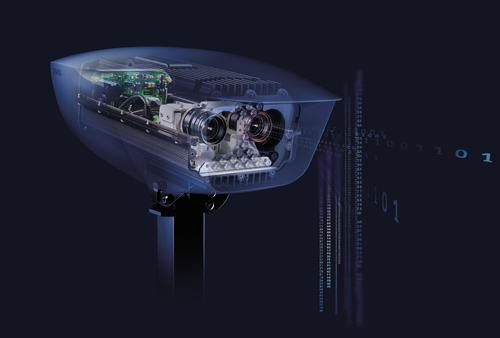

Among the images being considered are those taken by Siemens Mobility’s smart traffic camera, the Sicore II. It uses a dedicated processor to analyse the images it captures and can adjust gain, exposure, flash intensity and flash duration. This enables it to capture both the number plate and the model of multiple moving vehicles at the correct exposure, day or night, even when they are travelling at up to 300km/h. The images are captured by two HD cameras: an infrared automatic number plate recognition (ANPR) camera and a colour overview camera operating in the visible spectrum.
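To give a sense of why exposure control matters at these speeds, the short sketch below converts vehicle speed and exposure time into motion blur. It is illustrative arithmetic only: the exposure times, the 5mm blur tolerance and the `mm_per_pixel` ground sampling figure are hypothetical values, not Sicore II specifications.

```python
"""Motion-blur arithmetic for a fast-moving vehicle (illustrative only)."""


def kmh_to_ms(speed_kmh: float) -> float:
    """Convert km/h to m/s (1 m/s = 3.6 km/h)."""
    return speed_kmh / 3.6


def blur_mm(speed_kmh: float, exposure_s: float) -> float:
    """Distance in millimetres a vehicle travels while the shutter is open."""
    return kmh_to_ms(speed_kmh) * exposure_s * 1000.0


def blur_pixels(speed_kmh: float, exposure_s: float, mm_per_pixel: float) -> float:
    """Blur expressed in pixels, given a (hypothetical) ground sampling distance."""
    return blur_mm(speed_kmh, exposure_s) / mm_per_pixel


def max_exposure_s(speed_kmh: float, max_blur_mm: float) -> float:
    """Longest exposure that keeps blur within a chosen tolerance."""
    return (max_blur_mm / 1000.0) / kmh_to_ms(speed_kmh)


if __name__ == "__main__":
    for exposure_us in (50, 100, 500):
        travel = blur_mm(300, exposure_us * 1e-6)
        print(f"{exposure_us} us at 300 km/h: {travel:.1f} mm of travel")
    # Hypothetical tolerance: keep blur under 5 mm on the number plate.
    print(f"max exposure for 5 mm blur: {max_exposure_s(300, 5.0) * 1e6:.0f} us")
```

At 300km/h a vehicle covers roughly 8.3mm in 100 microseconds, so exposure (and any flash timed to it) has to be kept very short for a sharp plate read.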

Sicore II also has an integrated vision engine with machine learning capabilities. ‘The vision engine will allow us to grow the camera’s capability to detect all sorts of driver behaviour, tracking the vehicle precisely through the field of view and capturing evidence of a range of scenarios,’ explained James Riley, Siemens’ global product marketing manager for enforcement solutions. ‘We are moving towards understanding driver behaviour far better, with the aim not merely of identifying areas of risk, but also of anticipating and acting to prevent accidents altogether.’

Such preventative action could involve warning other vehicles when a Sicore II camera detects a speeding car, or a vehicle that is about to jump a red light or make an illegal turn. This could be done using Siemens’ V2X (vehicle-to-everything) technology, which enables roadside infrastructure to communicate directly with nearby vehicles over a wireless protocol.
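One plausible way such a warning could be triggered is sketched below: from a tracked vehicle’s speed and distance to the stop line, compute the constant deceleration it would need to stop, and flag it if that exceeds a plausible braking limit. The threshold, the class names and the `HazardWarning` record are all hypothetical; they stand in for whatever detection logic and V2X message set a real deployment uses.

```python
"""Hypothetical red-light-violation check feeding a V2X warning (illustrative sketch)."""
from dataclasses import dataclass
from typing import Optional


@dataclass
class VehicleTrack:
    track_id: str
    speed_ms: float                 # current speed from the camera's tracking
    distance_to_stop_line_m: float  # zero or negative once the vehicle reaches the line


@dataclass
class HazardWarning:
    """Stand-in for a roadside broadcast; NOT a real V2X message format."""
    track_id: str
    reason: str
    required_decel_ms2: float


def required_deceleration(speed_ms: float, distance_m: float) -> float:
    """Constant deceleration needed to stop within distance_m: a = v^2 / (2d)."""
    if distance_m <= 0:
        return float("inf")  # already at or past the stop line
    return speed_ms ** 2 / (2.0 * distance_m)


def check_red_light(track: VehicleTrack, signal_is_red: bool,
                    max_plausible_decel_ms2: float = 4.0) -> Optional[HazardWarning]:
    """Flag a moving vehicle that can no longer plausibly stop before a red signal.

    max_plausible_decel_ms2 is a hypothetical threshold, not a Siemens parameter.
    """
    if not signal_is_red or track.speed_ms <= 0:
        return None
    a_req = required_deceleration(track.speed_ms, track.distance_to_stop_line_m)
    if a_req > max_plausible_decel_ms2:
        return HazardWarning(track.track_id, "predicted red-light violation", a_req)
    return None


if __name__ == "__main__":
    fast = VehicleTrack("car-17", speed_ms=20.0, distance_to_stop_line_m=30.0)
    slow = VehicleTrack("car-18", speed_ms=10.0, distance_to_stop_line_m=30.0)
    for track in (fast, slow):
        print(track.track_id, check_red_light(track, signal_is_red=True))
```

Using required deceleration rather than raw speed keeps the rule independent of how far the camera sits from the junction: a car at 20m/s that is 30m from the line would need about 6.7m/s² to stop, which this sketch treats as a likely violation.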

Markus Schlitt, Siemens Mobility’s head of intelligent traffic systems, commented that improvements in traffic cameras often flow down from innovation in the consumer sector. The possible future improvements Schlitt lists include: the ability to change settings between exposures more quickly; the ability to perform multiple exposures in a single frame; and further gains in the speed, size and cost of the technology.

Schlitt added that there could also be more integration between application-layer software and the camera’s low-level FPGA, allowing a traffic camera’s analytics to interact more closely with its sensor. He also said that if a camera’s FPGA functionality were easier to customise, OEMs such as Siemens Mobility could integrate their own functionality directly in the camera; some companies are already doing this.

Lastly, Schlitt said that for many years global shutter sensors, such as the one used in the Sicore II, have been the only option able to meet the requirements of the traffic sector. However, recent improvements in the speed of rolling shutter cameras offer the possibility of significant cost reductions in the sector without compromising image quality, even at high vehicle speeds.
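One reason rolling shutter has been a poor fit for traffic work is skew: rows start their exposure one after another, so a fast vehicle moves between the top and bottom of its image. The sketch below works through that arithmetic; the line times are hypothetical round numbers, not figures for any particular sensor.

```python
"""Rolling-shutter skew for a fast-moving vehicle (illustrative arithmetic)."""


def readout_time_s(line_time_s: float, rows: int) -> float:
    """Time between the first and last of `rows` rows starting their exposure."""
    return line_time_s * rows


def skew_m(speed_kmh: float, line_time_s: float, rows_spanned: int) -> float:
    """Horizontal shift of the vehicle between its top and bottom rows.

    A global shutter exposes every row at once, which corresponds to line_time_s = 0.
    """
    return (speed_kmh / 3.6) * readout_time_s(line_time_s, rows_spanned)


if __name__ == "__main__":
    # Hypothetical line times for a 1080-row sensor: a slower and a faster readout.
    for line_time_us in (10.0, 2.0):
        s = skew_m(300, line_time_us * 1e-6, 1080)
        print(f"line time {line_time_us} us -> skew {s:.2f} m across 1080 rows at 300 km/h")
```

Cutting the line time by a factor of five cuts the skew by the same factor, which is why faster rolling shutter readout narrows the gap with global shutter.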

Seeing the light

Much of what makes a traffic camera effective comes down to exposure control and the dynamic range of the system. If a camera faces east or west, for example, the sun will at some point during the day shine directly into the lens.

At night, a car’s headlights might point straight at the camera. High-contrast scenes also occur at tunnel entrances or under bridges, where a camera must capture very bright and very dark areas simultaneously.

High dynamic range (HDR) systems handle such high-contrast scenes by capturing a sequence of narrower-range exposures and merging them, then tone mapping the result so that both the bright highlight detail and the dark shadow detail are rendered.
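A minimal sketch of the merging step, assuming a linear sensor response and pixel values normalised to 0..1: each exposure’s value is divided by its exposure time to estimate scene radiance, and the estimates are averaged with a “hat” weight that ignores near-black (noisy) and near-white (clipped) samples. This is a textbook weighted-average merge, not the algorithm of any product mentioned in this article, and the thresholds and exposure times are hypothetical.

```python
"""Weighted merge of bracketed exposures into a linear HDR radiance estimate."""
from typing import List, Sequence


def hat_weight(z: float, low: float = 0.05, high: float = 0.95) -> float:
    """Trust mid-range samples most; give zero weight to noisy or clipped ones."""
    if z <= low or z >= high:
        return 0.0
    mid = (low + high) / 2.0
    half_width = (high - low) / 2.0
    return 1.0 - abs(z - mid) / half_width


def merge_exposures(frames: Sequence[Sequence[float]],
                    exposure_times: Sequence[float]) -> List[float]:
    """Merge same-size frames (flat lists of 0..1 values) taken at different exposure times."""
    if not frames or len(frames) != len(exposure_times):
        raise ValueError("need a non-empty list of frames with one exposure time each")
    radiance: List[float] = []
    for p in range(len(frames[0])):
        num = den = 0.0
        for frame, t in zip(frames, exposure_times):
            w = hat_weight(frame[p])
            num += w * frame[p] / t  # radiance estimate from this exposure
            den += w
        if den > 0.0:
            radiance.append(num / den)
        else:
            # Every sample is clipped or in the noise floor: fall back to the
            # sample closest to mid-grey rather than dropping the pixel.
            z_best, t_best = min(zip((f[p] for f in frames), exposure_times),
                                 key=lambda zt: abs(zt[0] - 0.5))
            radiance.append(z_best / t_best)
    return radiance


if __name__ == "__main__":
    times = [0.01, 0.1, 1.0]      # short, medium, long (hypothetical, in seconds)
    short = [0.02, 0.005, 0.08]
    medium = [0.2, 0.05, 0.8]
    long_ = [1.0, 0.5, 1.0]       # first and third pixels clip in the long exposure
    print(merge_exposures([short, medium, long_], times))
```

Each pixel’s radiance comes from whichever exposures captured it cleanly, so a headlight and a shadowed cab interior can both be recovered in one merged frame.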

Pinnacle Imaging Systems recently partnered with On Semiconductor to develop a new, lower-cost HDR video surveillance solution that can capture high-contrast scenes with a dynamic range of up to 120dB and display them at 30 frames per second. The video platform, which runs on a Xilinx Zynq 7030 system-on-chip and uses On Semiconductor’s AR0239 CMOS image sensor, is designed to offer richer data to camera and AI developers.
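For context, the 120dB figure can be translated into a contrast ratio using the convention normally applied to image sensors, DR = 20·log10(max/min). The sketch assumes that convention applies to the quoted figure; the 12-bit comparison is an idealised, noise-free bound rather than a measured sensor value.

```python
"""Converting between dynamic range in decibels, contrast ratio and stops."""
import math


def db_to_ratio(db: float) -> float:
    """Image-sensor convention: DR(dB) = 20 * log10(max_signal / min_signal)."""
    return 10.0 ** (db / 20.0)


def ratio_to_db(ratio: float) -> float:
    """Inverse of db_to_ratio."""
    return 20.0 * math.log10(ratio)


def ratio_to_stops(ratio: float) -> float:
    """Number of doublings (photographic stops) spanned by the ratio."""
    return math.log2(ratio)


if __name__ == "__main__":
    ratio = db_to_ratio(120)
    print(f"120 dB = {ratio:,.0f}:1, about {ratio_to_stops(ratio):.1f} stops")
    print(f"ideal 12-bit linear range = {ratio_to_db(2 ** 12):.1f} dB")
```

In other words, 120dB corresponds to a contrast ratio of about a million to one, or roughly 20 stops, well beyond the ideal 72dB of a single 12-bit linear readout.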

‘This is a full image signal processor (ISP) reference design that can be used as the imaging unit in traffic control systems or surveillance cameras,’ commented John Omvik, vice president of marketing at Pinnacle Imaging Systems. ‘We can offer it as a full ISP, or we can provide just the HDR logic components for somebody else’s image signal processor.’

When imaging a high-contrast scene, the AR0239 sensor captures up to three different exposures for each frame: a long exposure for the shadows, a medium exposure for the mid-tones and a short exposure for the highlights.

‘Our Denali-MC ISP automatically takes the best portion of each of these images, and merges them into a single HDR frame that is then tone mapped to render the complete tonal range of the original scene so that it can be displayed on a screen or processed by an analytics engine,’ Omvik explained. ‘This is done in real time in full 1080p HD, giving traffic systems lots of resolution to work with.’
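The Denali-MC tone-mapping algorithm is not described here, so the sketch below uses a well-known substitute, Reinhard’s global operator: scale radiance by its log-average so the scene’s overall brightness maps to mid-grey, then compress with L/(1+L) into the 0..255 display range. The default key of 0.18 is a conventional choice, not a Pinnacle parameter.

```python
"""Global tone mapping (Reinhard-style) of linear HDR radiance to 8-bit values."""
import math
from typing import List, Sequence


def reinhard_tone_map(radiance: Sequence[float], key: float = 0.18,
                      eps: float = 1e-6) -> List[int]:
    """Map unbounded, non-negative radiance values to display codes 0..255."""
    if not radiance:
        return []
    # Log-average luminance summarises the scene's overall brightness.
    log_avg = math.exp(sum(math.log(eps + r) for r in radiance) / len(radiance))
    codes: List[int] = []
    for r in radiance:
        scaled = key * r / log_avg         # place the scene's key at mid-grey
        display = scaled / (1.0 + scaled)  # compress highlights smoothly into [0, 1)
        codes.append(round(255 * display))
    return codes


if __name__ == "__main__":
    # Shadow, mid-tone, bright and headlight-level radiance (arbitrary linear units).
    print(reinhard_tone_map([0.5, 2.0, 8.0, 1000.0]))
```

Because L/(1+L) never reaches 1, even a headlight-level value maps to a finite code instead of clipping, while small values keep a nearly linear response.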

High-contrast scenes need cameras with a high dynamic range to pick out detail in the image. Credit: Pinnacle Imaging Systems

Omvik added that because the multiple exposures for each frame are captured virtually simultaneously, the solution suits traffic applications such as capturing red-light violations in high-contrast conditions, where evidence must document a single, exact moment in time. The high-resolution images offered by Pinnacle and On Semiconductor’s HDR solution have also attracted interest from companies making dash cam systems for police vehicles, where requirements for the quality and integrity of the image signal are becoming stricter.

‘What is unique to our design is that we do all of our processing in what’s called the raw Bayer domain,’ Omvik explained. This includes all of the merging and tone mapping, after which the single channel of data is fed into a demosaicing block to produce an RGB image. Organising the processing this way, rather than demosaicing at the start of the pipeline as most cameras do, means there is only one channel of data to process instead of three. The aim is to improve performance and reduce computing requirements.
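The toy sketch below illustrates that ordering on a small mosaic, assuming an RGGB colour filter layout: a per-sample operation (standing in for merging and tone mapping) runs while the data is still a single channel, and demosaicing happens last. The 2×2 “superpixel” demosaic is the simplest possible method and halves the resolution; it is a placeholder, not Pinnacle’s demosaicing block. For scale, a full-resolution 1920×1080 frame holds about 2.07 million raw samples, against about 6.22 million values once demosaiced to three channels.

```python
"""Process in the raw Bayer domain first, demosaic last (toy illustration)."""
from typing import Callable, List, Tuple

Mosaic = List[List[float]]  # one value per photosite, RGGB pattern assumed
RGBImage = List[List[Tuple[float, float, float]]]


def process_raw(mosaic: Mosaic, op: Callable[[float], float]) -> Mosaic:
    """Apply a per-sample operation while the data is still a single channel."""
    return [[op(v) for v in row] for row in mosaic]


def demosaic_superpixel(mosaic: Mosaic) -> RGBImage:
    """Collapse each 2x2 RGGB block into one RGB pixel (half resolution).

    R G
    G B
    """
    rgb: RGBImage = []
    for y in range(0, len(mosaic) - 1, 2):
        row = []
        for x in range(0, len(mosaic[y]) - 1, 2):
            r = mosaic[y][x]
            g = (mosaic[y][x + 1] + mosaic[y + 1][x]) / 2.0  # average the two greens
            b = mosaic[y + 1][x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


if __name__ == "__main__":
    raw = [[0.10, 0.40, 0.12, 0.42],
           [0.38, 0.20, 0.41, 0.22]]
    # A hypothetical square-root curve stands in for merging and tone mapping.
    tone_mapped = process_raw(raw, lambda v: v ** 0.5)
    print(demosaic_superpixel(tone_mapped))
```

The tone curve is evaluated once per photosite here; applied after a full-resolution demosaic it would run three times per pixel position.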

Omvik expects the richer data offered by the new HDR solution to become increasingly important as more applications, such as traffic monitoring and analysis, are automated and rely on computers rather than humans to perform the analysis. ‘As that happens, the better the content being fed into these recognition systems, the better the results will be from them,’ he said.

Polarisation power

Another camera technology development that could be useful in traffic monitoring is the polarised image sensor Sony released last year, which many camera manufacturers have now incorporated into their products.

Imaging polarised light makes it possible to remove reflections from windscreens, revealing the vehicle’s occupants. This is useful for applications such as checking that vehicles using a carpool lane carry more than one person.

Traffic cameras have previously used a polarising filter in front of the lens, but this is only a partially effective solution, according to Stéphane Clauss, senior business development manager of Sony Image Sensing Solutions (ISS) Europe. ‘The disadvantage of using this kind of setup is that the filter is only capable of removing a very specific reflection orientation, so it was working to some degree, but not in all cases,’ he explained.

Sony released the IMX250MZR/MYR global shutter polarised imaging CMOS sensors last year, and ISS followed this with its XCG-CP510 polarised camera module.

Siemens Mobility's smart traffic monitoring camera, Sicore II, uses sensors imaging in both the visible and infrared. Credit: Siemens Mobility

‘The polarised sensors that we released last year provide a much more capable and flexible solution, due to their use of a pixel-level polarising filter structure, which offers four orientations of polarising filter, rather than the one offered by the previously used setups,’ commented Clauss. ‘The sensor is a much more flexible solution, as it is able to remove reflections from most surfaces regardless of their shape – many windscreens are now curved – and regardless of the direction that a vehicle is facing, or the direction where sunlight is coming from.’
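With four polariser orientations per pixel group, the standard linear Stokes parameters can be computed directly, giving the degree and angle of linear polarisation at each point. The sketch below uses the textbook formulas for ideal 0°, 45°, 90° and 135° filters; the physical layout of the four orientations on the IMX250MZR/MYR should be taken from Sony’s documentation, so the function simply takes the four intensities as arguments.

```python
"""Linear Stokes parameters from four polariser orientations (ideal filters assumed)."""
import math
from typing import NamedTuple


class Polarisation(NamedTuple):
    s0: float        # total intensity
    s1: float        # 0 deg vs 90 deg preference
    s2: float        # 45 deg vs 135 deg preference
    dolp: float      # degree of linear polarisation, 0 (none) to 1 (fully polarised)
    aolp_deg: float  # angle of linear polarisation, in (-90, 90] degrees


def stokes_from_four(i0: float, i45: float, i90: float, i135: float) -> Polarisation:
    """Compute Stokes parameters, DoLP and AoLP from one four-orientation pixel group."""
    s0 = (i0 + i45 + i90 + i135) / 2.0
    s1 = i0 - i90
    s2 = i45 - i135
    dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    aolp_deg = 0.5 * math.degrees(math.atan2(s2, s1))
    return Polarisation(s0, s1, s2, dolp, aolp_deg)


if __name__ == "__main__":
    print(stokes_from_four(0.5, 0.5, 0.5, 0.5))  # unpolarised light
    print(stokes_from_four(1.0, 0.5, 0.0, 0.5))  # fully polarised at 0 degrees
```

Reflections from glass tend to appear as regions of raised DoLP, which is what lets software target them without knowing the windscreen’s shape or the sun’s direction in advance.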

Thanks to their ability to remove reflected light consistently from any angle, cameras using the new polarised imaging sensors are much simpler to install. Clauss added: ‘From the integrator’s perspective, instead of having to try and position the camera in a particular location so as to avoid reflections, they can install the camera in whatever location is most convenient for them, as the camera will always be capable of filtering unwanted reflections, and focus on what needs to be inspected.’

In addition to the capabilities of the new polarised sensor architecture, the XCG-CP510 camera module offers a number of embedded features that make image acquisition more efficient. Its Precision Time Protocol (PTP) support, for example, allows multiple networked cameras to be synchronised. This is particularly important in the intelligent transportation systems (ITS) market, where images captured across a camera network must carry consistent time stamps, so that authorities examining multiple images of a particular event can establish exactly when each was captured relative to the others.
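The XCG-CP510’s own PTP implementation is not described here, but the core calculation an IEEE 1588 end-to-end slave performs is standard: from the four timestamps of a Sync and Delay_Req exchange it estimates its clock offset from the master and the path delay. A minimal sketch, assuming a symmetric network path and integer nanosecond timestamps; the class and field names are illustrative.

```python
"""IEEE 1588 (PTP) offset and path-delay calculation from one Sync/Delay_Req exchange."""
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PtpExchange:
    t1: int  # master sends Sync          (master clock, ns)
    t2: int  # camera receives Sync       (camera clock, ns)
    t3: int  # camera sends Delay_Req     (camera clock, ns)
    t4: int  # master receives Delay_Req  (master clock, ns)


def offset_and_delay(ex: PtpExchange) -> Tuple[float, float]:
    """Return (camera clock offset from master, mean one-way path delay) in ns.

    Assumes the network delay is the same in both directions.
    """
    master_to_camera = ex.t2 - ex.t1  # = delay + offset
    camera_to_master = ex.t4 - ex.t3  # = delay - offset
    offset = (master_to_camera - camera_to_master) / 2
    delay = (master_to_camera + camera_to_master) / 2
    return offset, delay


def to_master_time(camera_timestamp_ns: int, offset_ns: float) -> float:
    """Correct a camera's local image timestamp onto the shared master timebase."""
    return camera_timestamp_ns - offset_ns


if __name__ == "__main__":
    # Camera clock runs 1500 ns ahead of the master; the link adds 200 ns each way.
    ex = PtpExchange(t1=0, t2=1_700, t3=5_000, t4=3_700)
    offset, delay = offset_and_delay(ex)
    print(offset, delay, to_master_time(10_000, offset))
```

Once every camera has corrected its image timestamps onto the master timebase, images of the same event from different cameras can be compared directly.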

The software supplied with the camera also includes algorithms that remove reflections automatically, producing high-resolution, high-contrast images that can be passed on to third-party algorithms.
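Sony’s reflection-removal algorithms are not detailed here. One common, simple approach, shown below as a stand-in, is to synthesise the image an ideal polariser would record at its darkest angle for each pixel group: the minimum transmitted intensity, `(S0 - sqrt(S1**2 + S2**2)) / 2`. It assumes ideal filters and treats the polarised part of the light as the unwanted reflection; real windscreen reflections are only partly polarised, so in practice they are reduced rather than fully removed.

```python
"""Per-pixel-group reflection suppression via the minimum polarised intensity."""
import math
from typing import Iterable, List, Tuple

Quad = Tuple[float, float, float, float]  # intensities behind 0, 45, 90, 135 deg filters


def min_transmitted_intensity(i0: float, i45: float, i90: float, i135: float) -> float:
    """Intensity an ideal polariser would pass at its darkest angle for this pixel group."""
    s0 = (i0 + i45 + i90 + i135) / 2.0
    s1 = i0 - i90
    s2 = i45 - i135
    return max(0.0, (s0 - math.hypot(s1, s2)) / 2.0)


def suppress_reflections(quads: Iterable[Quad]) -> List[float]:
    """Apply the minimum-intensity estimate to every four-orientation pixel group."""
    return [min_transmitted_intensity(*q) for q in quads]


if __name__ == "__main__":
    # Cabin interior (unpolarised, intensity 0.6) plus glare polarised at 0 deg (0.8):
    # each filter sees 0.6 / 2 + 0.8 * cos^2(theta).
    glare_quad = (1.1, 0.7, 0.3, 0.7)
    print(suppress_reflections([glare_quad]))  # glare cancels, leaving about 0.3
```

In this idealised example the glare term cancels exactly, leaving only the interior as seen through a polariser; per-pixel processing is what lets it cope with curved windscreens, where the reflection’s polarisation angle varies across the glass.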

‘Providing this software with the camera will make our polarised imaging technology much easier and faster to adopt by customers looking to process the captured images with their own algorithms, for applications such as counting the number of people in vehicles or reading number plates,’ Clauss said. ‘Previous polarised imaging setups have not offered the level of efficiency required to assist this application.’ The images can also help police pick out drivers using mobile phones, or those not wearing their seatbelts.