The biological eye is a marvel of nature refined over millions of years of evolution. Machine vision manufacturers and researchers have taken inspiration from nature to produce new vision systems that emulate the way eyes see, completely overthrowing the traditional machine vision architectures used over the past half a century.

Conventional image sensors capture information by receiving signals from a timer that exposes their array of pixels for a fixed period, regardless of what happens in the field of view. This leads to data being captured unnecessarily if nothing happens in the scene, or to data being lost if an event happens very quickly between frames.

In contrast, biological vision systems are efficient, allowing the brain to process huge amounts of visual input without using too much energy. By taking inspiration from this frameless paradigm and translating it to dynamic vision sensors, image acquisition can be transferred from an array of pixels to a set of smart pixels that can control how data is captured individually.

These smart pixels are able to deliver visual information more efficiently, by responding only to occurrences within their field of view – such as a change in lighting or movement – similar to how the individual cells of an eye respond to temporal changes. They are then able to self-adjust their acquisition speeds and exposure times depending on the change: if an event happens at speed, they acquire data quickly, while if less is happening, they slow down their capture accordingly. Exposure can also be adjusted according to brightness, which removes the problems associated with over- and underexposure.

‘The pixels monitor increases and decreases in photocurrent that are beyond a threshold relative to the initial amount of illumination,’ explained Dr Simeon Bamford, CTO of Swiss neuromorphic vision firm Inivation. ‘The response of the sensor is [therefore] approximately equivalent, whether in very high illumination or very low illumination.’ This reflects the way eyes work, which react to lighting changes in a scene.

By responding only to the changes in a scene, bio-inspired sensors output a sparse stream of events rather than a sequence of images. Each event encodes the location of the pixel that sent it and whether the change was positive or negative. This results in a large reduction in data acquisition and processing, according to Luca Verre, co-founder and CEO of bio-inspired vision firm Chronocam. It also reduces information loss, as fast events can no longer fall between frames. ‘We can control the bandwidth and the sensitivity of the sensor by regions of interest, and can exclude a region of interest from sending out information … so we can actually further compress the data,’ Verre added.
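The behaviour described above can be sketched in a few lines of Python. This is a simplified, idealised model of a single pixel, not Inivation's or Chronocam's actual circuit: it assumes the pixel compares the logarithm of its intensity against a stored reference, and the `Event` record, the `pixel_events` function and the `threshold` value of 0.2 are all hypothetical names and numbers chosen for illustration.

```python
import math
from typing import List, NamedTuple, Tuple


class Event(NamedTuple):
    x: int         # pixel column
    y: int         # pixel row
    t_us: int      # timestamp in microseconds
    polarity: int  # +1 = brighter, -1 = darker


def pixel_events(x: int, y: int, samples: List[Tuple[int, float]],
                 threshold: float = 0.2) -> List[Event]:
    """Emit events for one pixel from (t_us, intensity) samples.

    Assumption: an event fires each time the log intensity moves by
    `threshold` away from the level stored at the previous event, so the
    response depends on relative change, not absolute brightness.
    """
    events: List[Event] = []
    reference = math.log(samples[0][1])
    for t_us, intensity in samples[1:]:
        delta = math.log(intensity) - reference
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append(Event(x, y, t_us, polarity))
            reference += polarity * threshold  # step the reference level
            delta = math.log(intensity) - reference
    return events


# The same doubling of brightness in a dim and in a bright scene.
dim = pixel_events(3, 7, [(0, 1.0), (100, 2.0)])
bright = pixel_events(3, 7, [(0, 1000.0), (100, 2000.0)])
print(len(dim), len(bright))
```

In this model a doubling of brightness produces the same number of events whether the pixel starts at 1 or at 1,000 intensity units, and a pixel that sees no change produces nothing at all.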

The signals from events also occur on a scale of microseconds, enabling very low latency vision, as there are no full frames to expose and transmit. Overall, this translates to imaging systems that use much less power, as little computation is needed to pick out the relevant elements of a scene.

On top of these benefits, according to Verre, by having each pixel adjust independently, a very high dynamic range can be achieved. The difference between this and our own vision’s high dynamic range is that a dynamic vision sensor can adapt to light changes in microseconds, whereas the eye can take some minutes to adapt.

Chronocam was founded three years ago with the initial objective of building a silicon model of the human retina and bringing the resulting sensor to market. Now the company produces such a sensor as well as its own software, which performs new bio-inspired methods of computer vision and machine learning that are adapted to the way the sensor captures information.

‘Chronocam’s technology is leading to high speed, real time machine vision,’ commented Verre. ‘Now we can effectively run at tens or hundreds of kiloframes per second equivalent, while producing very little data that we can process in real time.’
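A rough back-of-the-envelope calculation shows what a ‘kiloframes per second equivalent’ implies. The figures below are illustrative assumptions only, not Chronocam specifications: a 10 microsecond temporal resolution, a VGA array with one byte per pixel, eight bytes per event and one million events per second.

```python
def equivalent_fps(temporal_resolution_us: float) -> float:
    """Frame rate a conventional camera would need to match this timing."""
    return 1_000_000 / temporal_resolution_us


def frame_bytes_per_second(width: int, height: int, fps: float,
                           bytes_per_pixel: int = 1) -> float:
    """Raw data rate of a frame-based sensor that reads out every pixel."""
    return width * height * bytes_per_pixel * fps


def event_bytes_per_second(events_per_second: float,
                           bytes_per_event: int = 8) -> float:
    """Raw data rate of an event stream (hypothetical 8-byte events)."""
    return events_per_second * bytes_per_event


fps = equivalent_fps(10)                             # assumed 10 us resolution
frame_rate = frame_bytes_per_second(640, 480, fps)   # assumed VGA, 8-bit
event_rate = event_bytes_per_second(1_000_000)       # assumed event rate
print(fps, frame_rate, event_rate)
```

Under these assumptions a frame-based camera would need 100,000 frames per second and roughly 30 gigabytes per second of raw readout, against 8 megabytes per second for the event stream. The real ratio depends entirely on how much of the scene is changing.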

Inivation’s own Dynamic Vision Sensor, which carries the same name as the type of sensor it represents, came out of a line of research that stretches back at least 30 years. According to Bamford, it was the first silicon retina model to overcome the practical problems that had previously prevented such devices from being viable for machine vision.

While this sensor, Inivation’s first-generation bio-inspired offering, is purely a dynamic vision sensor, the firm’s second-generation sensor, the DAVIS (Dynamic and Active-Pixel Vision Sensor), can produce a video stream alongside its event stream.

‘Fundamentally, this is a CMOS vision sensor with a very different pixel design,’ Bamford explained. ‘In the second-generation sensors we’ve combined the standard APS global shutter circuit with the dynamic vision sensor circuit, so that you can have a global shutter exposure and, even while the exposure is happening, if there’s a fluctuation in the photocurrent, the very same pixel can produce a string of events.’

Hundreds of universities and companies have already purchased prototypes of the new sensor and are working with Inivation to develop the algorithms and applications that will enable it to be commercialised. ‘There’s a huge amount of interest because of the unique advantages of the sensor,’ commented Bamford. He added that a third-generation device is now being developed, which uses CMOS image sensor processes, micro-lenses and colour filters, and can serve a wider range of applications at higher quality.

In addition to its sensor offerings, Inivation also develops cameras, with outputs very different to standard imaging devices.

‘There’s the frame stream, which is the same as a normal camera, and then there’s the event stream in parallel, which is completely novel,’ Bamford explained. ‘Most of our prototypes also contain an inertial measurement unit, and the data from this is also built into the event stream, which is helpful for positioning and robotics applications.’

While the highest resolution camera currently achieved by Inivation is VGA standard, the dynamic vision sensing technology has a temporal resolution of microseconds. ‘In certain applications a very high temporal resolution can compensate for low spatial resolution,’ said Bamford. ‘When tracking movements, a limited number of high temporal resolution samples allow you to get a very accurate fix on a spatio-temporal plane.’
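One way to read Bamford’s remark is that the events left behind by a moving edge lie close to a plane in (x, y, t) space, and the tilt of that plane gives the edge’s speed. The sketch below fits such a plane by ordinary least squares. It illustrates the general idea only and is not Inivation’s tracking method; the function names `fit_event_plane` and `normal_velocity` and the sample data are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_event_plane(events: List[Vec3]) -> Vec3:
    """Least-squares fit of t = a*x + b*y + c to (x, y, t_us) events."""
    n = float(len(events))
    sx = sum(x for x, _, _ in events)
    sy = sum(y for _, y, _ in events)
    st = sum(t for _, _, t in events)
    sxx = sum(x * x for x, _, _ in events)
    syy = sum(y * y for _, y, _ in events)
    sxy = sum(x * y for x, y, _ in events)
    sxt = sum(x * t for x, _, t in events)
    syt = sum(y * t for _, y, t in events)
    normal = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxt, syt, st]
    d = det3(normal)
    if d == 0:
        raise ValueError("events do not span a plane")
    coeffs = []
    for col in range(3):  # Cramer's rule, one unknown at a time
        m = [row[:] for row in normal]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(det3(m) / d)
    return coeffs[0], coeffs[1], coeffs[2]


def normal_velocity(a: float, b: float) -> Tuple[float, float]:
    """Edge velocity in pixels per microsecond, normal to the edge."""
    g2 = a * a + b * b
    if g2 == 0:
        raise ValueError("flat plane: all events are simultaneous")
    return a / g2, b / g2


# Hypothetical edge sweeping along x, reaching each new column 50 us later.
events = [(float(x), float(y), 50.0 * x + 10.0)
          for x in range(5) for y in range(3)]
a, b, c = fit_event_plane(events)
vx, vy = normal_velocity(a, b)
print(a, b, c, vx, vy)
```

With only 15 events spread over five columns, the fit recovers a speed of 0.02 pixels per microsecond along x, or 20,000 pixels per second, which a conventional camera could only resolve at a very high frame rate.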

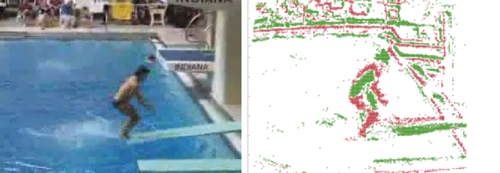

Comparison of a video frame with the output of an Inivation DAVIS camera, taken as part of the IoSiRe project. Green/red points correspond to +1/-1 (on/off) spike polarity. Credit: Andreopoulos et al

Because bio-inspired sensors can optimise data acquisition and processing, more conventional image sensor firms and computer vision companies are now exploring the approach, which is becoming more accessible thanks to modern manufacturing techniques such as 3D wafer stacking, according to Verre. Software advances are also aiding the development and uptake of bio-inspired imaging: Bamford explained that a big push for Inivation has been the recent proposal of several good simultaneous localisation and mapping (SLAM) algorithms that operate on the event-based information from dynamic vision sensors.

Despite these enabling developments, certain hindrances still prevent the full uptake of bio-inspired technologies, such as their large differences from standard vision, which make them difficult to integrate into current machine vision environments.

‘The hardware and system-on-chip integration of bio-inspired sensing is still maturing, and this creates a barrier to entry in comparison to conventional active pixel imagers,’ confirmed Dr Yiannis Andreopoulos, one of the researchers leading efforts at University College London (UCL) as part of a collaborative project that began earlier this year to explore the bandwidth, delay and energy saving advantages of using bio-inspired vision in Internet of Things (IoT) environments.

‘In addition,’ Andreopoulos continued, ‘[bio-inspired] spike-based sensing is still incompatible with the way gradient-based machine learning works, such as deep convolutional or recurrent neural networks, because the latter require a regular space-time sampling grid. However, spike-based hardware for machine learning has been produced – or upgraded – recently, for example the IBM TrueNorth chip and the Intel Loihi chip. These are a natural fit for bio-inspired spike-based sensing, even though they are also based on gradient-based machine learning methods.’
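To make the mismatch concrete, the sketch below shows one simple, lossy way of forcing an event stream onto the regular space-time grid that such networks expect: events are summed by polarity into fixed time bins per pixel. This is an illustrative baseline rather than a method proposed by Andreopoulos or used in any particular product, and the function name `events_to_grid` and its parameters are hypothetical.

```python
from typing import List, Tuple

EventTuple = Tuple[int, int, int, int]  # (x, y, t_us, polarity)


def events_to_grid(events: List[EventTuple], width: int, height: int,
                   t_start_us: int, bin_us: int,
                   num_bins: int) -> List[List[List[int]]]:
    """Accumulate events into a [time_bin][row][column] grid of sums.

    Each cell holds the net polarity seen by that pixel in that time
    bin. Events outside the grid or the time window are dropped.
    """
    grid = [[[0] * width for _ in range(height)] for _ in range(num_bins)]
    for x, y, t_us, polarity in events:
        b = (t_us - t_start_us) // bin_us
        if 0 <= b < num_bins and 0 <= x < width and 0 <= y < height:
            grid[b][y][x] += polarity
    return grid


# Four hypothetical events on a 3 x 2 pixel array, two 10 us bins.
sample = [(0, 0, 5, 1), (0, 0, 7, 1), (1, 1, 12, -1), (2, 0, 25, 1)]
grid = events_to_grid(sample, width=3, height=2,
                      t_start_us=0, bin_us=10, num_bins=2)
print(grid)
```

The cost of this convenience is visible in the example: the two events at pixel (0, 0) collapse into a single count and lose their microsecond timing, and most cells of the grid are zeros that still have to be stored and processed.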

Moving into the real world

The optimised data acquisition and processing of bio-inspired imaging has led it to be considered for a number of uses, such as autonomous driving, robotics, and medicine.

‘The autonomous driving industry is converging on lidar as a primary sensing method. However, a complementary visual solution is also needed,’ said Bamford. ‘DAVIS, in particular, is very promising because of its low latency, high dynamic range and limited data stream.’ Additionally, the wide dynamic range of dynamic vision sensors makes them suitable for the varying lighting conditions of driving, such as when entering and exiting tunnels or travelling in different levels of sunlight. Companies such as Intel, Renault and Bosch have already invested in Chronocam, with automotive applications in mind.

Inivation and its clients also see huge potential for dynamic vision sensors in robotic sensing and cooperation, as they can act as complementary safety systems for the robots by scanning the vicinity for people and obstacles. The low latency and consistent performance of the sensors in uncontrolled lighting conditions will also be key here, according to Bamford.

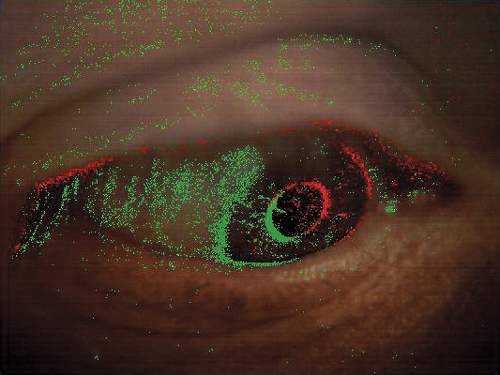

The pixel-level events recorded by Inivation's DAVIS640 dynamic vision sensor while viewing eye movement. Credit: Inivation

Industrial inspection and identification are also potential application areas for dynamic vision sensors, as their low power enables high-speed, real-time performance, whereas standard cameras, in comparison, require large amounts of power and processing to achieve the same result.

In the medical field, Chronocam has already shown how close its sensor technology lies to natural vision: two years ago it worked with Pixium Vision, connecting its sensors to retinal implants to help restore the sight of blind people. ‘We stimulate the brain using our sensor as an input device,’ Verre explained. ‘This is now commercialised around Europe for visually impaired people.’

Dynamic vision sensors will find their way into day-to-day and industrial applications in the future, according to Bamford, as chips become smaller and more advanced. ‘We see clear pathways to making the sensors even better than they already are, improving latency, data rate bandwidth and frequency response,’ he said. ‘We’re going to be able to realise the vision of very low power end-to-end systems.’

The internet of silicon retinas

The Internet of Silicon Retinas (IoSiRe) project started in July. It is a collaborative £1.4 million effort funded by the UK’s Engineering and Physical Sciences Research Council (EPSRC), involving UCL, King’s College London and Kingston University. The project aims to explore how bio-inspired vision could be used in conjunction with cloud-based analytics to achieve state-of-the-art results in image analysis, image super-resolution and a variety of other image and vision computing systems for IoT-oriented deployments in the next 10 to 20 years.

‘Essentially, the goals of the project combine the consortium’s unique expertise in sensing, machine learning and signal processing, and communications, in order to quantify the energy and latency savings in comparison to what is done today with conventional frame-based sensing, processing and transmission,’ explained Andreopoulos, of UCL.

Within the project, the consortium will be using Inivation’s Dynamic Vision Sensor among other low-power spike-based visual sensing hardware and combining it with low-power processing to create compact representations that can be processed using deep neural networks.

‘At the moment, converting spike-based sensing to representations appropriate for deep convolutional or recurrent neural networks is an open problem,’ said Andreopoulos.

‘If end-to-end resources are to be considered – sensing, on-board processing, and transmission to multiple cloud-based Docker container services – creating a framework that can provide for scalable resource tuning is a very interesting problem.’

Industrial partners of the project have expressed interest in a wide range of applications, from cognitive robotics to smart home and smart environmental sensing at very low power and latency. ‘We would be interested to learn more about industrial use cases, as this is definitely something we are keen to explore in the project and beyond,’ concluded Andreopoulos.

Mantis shrimp-inspired camera combines colour and polarisation

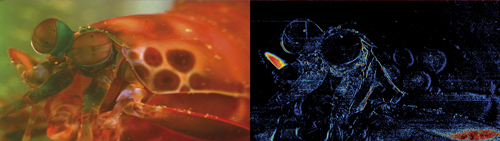

Still frame of Odontodactylus scyllarus underwater with polarised antenna scales. Left: colour image. Right: degree of linear polarisation represented in a false-colour map, where red and blue indicate highly polarised and unpolarised light respectively. Credit: Gruev et al

Many biomedical applications would benefit from being able to capture both the colour and polarisation properties of light, for example in cancerous tissue detection, which requires polarisation information to be superimposed onto anatomical features captured in colour camera images.

Unfortunately, many of today’s colour-polarisation imaging technologies are complex, bulky and expensive, and offer intolerably low polarisation performance. They are also difficult to take into the field to study naturally occurring phenomena.

Professor Viktor Gruev and graduate student Missael Garcia, from the University of Illinois and Washington University in St Louis, have designed a camera inspired by the eyes of a mantis shrimp. The camera can record both colour and polarisation imaging data on a single chip, while costing less than $100 to produce, according to the researchers.

The mantis shrimp has two apposition compound eyes, sensing 16 different spectral channels, four equally spaced linear polarisation orientations, and two circularly polarised states. It is one of the best-adapted predators in shallow waters, thanks to its visual system.

The researchers stacked multiple photodiodes on top of each other in silicon to see colour without the use of special filters. They then combined this technology with metallic nanowires to replicate the portion of the mantis shrimp visual system that allows it to sense both colour and polarisation. This created a high-resolution colour-polarisation camera with better than 30 per cent quantum efficiency in the visible spectrum and high polarisation extinction ratios.

‘By mimicking the space- and energy-efficient implementation of the mantis shrimp visual system, we have designed a compact, single-chip, low-power colour- and polarisation-sensitive imager,’ Garcia commented. Testing revealed that the new sensor captures co-registered colour and polarisation information with high accuracy, sensitivity and resolution (1,280 x 720 pixels).
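For readers unfamiliar with polarisation imaging, the degree of linear polarisation shown in false-colour maps of this kind is conventionally derived from intensity measurements behind linear polarisers at several orientations. The sketch below uses the textbook Stokes-parameter formulas and assumes four samples per pixel at 0, 45, 90 and 135 degrees. That sampling scheme and the function name `linear_polarisation` are assumptions made for illustration; the researchers’ actual processing pipeline is not described here.

```python
import math
from typing import Tuple


def linear_polarisation(i0: float, i45: float, i90: float,
                        i135: float) -> Tuple[float, float]:
    """Return (degree, angle in radians) of linear polarisation.

    Inputs are intensities behind ideal linear polarisers at 0, 45, 90
    and 135 degrees (an assumed sampling scheme).
    """
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs vertical
    s2 = i45 - i135                     # +45 vs -45 degrees
    if s0 <= 0:
        return 0.0, 0.0                 # no light: treat as unpolarised
    degree = math.hypot(s1, s2) / s0
    angle = 0.5 * math.atan2(s2, s1)
    return degree, angle


# Fully polarised at 0 degrees, then completely unpolarised light.
print(linear_polarisation(1.0, 0.5, 0.0, 0.5))
print(linear_polarisation(0.5, 0.5, 0.5, 0.5))
```

Because the colour and polarisation samples are co-registered on one chip, a calculation like this can be applied pixel by pixel and overlaid directly on the colour image.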

The technology can be used in a wide range of applications, including remote sensing, cancer imaging, label-free neural imaging and the study of new underwater phenomena and marine life behaviour.

In the future the two researchers plan to fully mimic the hyperspectral and polarisation-sensitive capabilities of the mantis shrimp’s eye, in addition to extending the multispectral concept to be able to detect multiple narrowband spectra.