At the beginning of November, the Bloodhound land speed record car moved a step closer to an attempt on the world land speed record of 763mph, reaching 491mph in a test run in the South African desert.

The jet-propelled car, rescued from administration by businessman Ian Warhurst in December 2018, is the culmination of a decade of development, in a project that's more about showcasing engineering expertise, trialling new technology and inspiring students than about breaking the record per se.

The test runs in South Africa were designed to gather data on every aspect of the vehicle, from how well the engine operates to braking and aerodynamics. Stemmer Imaging supplied cameras to test components early on in the Bloodhound project, including a high-speed camera from Optronis to study the rocket plume.

Bloodhound is an extreme example of a road vehicle – a cross between a car and a jet plane – and the cameras used in the project have to image very fast events such as jet engine ignition. But all automotive manufacturers make use of high-speed cameras to some extent, to test the performance and safety of new cars. Aicon 3D Systems, for example, has built an optical measurement system based on high-speed cameras, which records the performance of wheels being tested in prototype vehicles. WheelWatch uses Mikrotron EoSens cameras to measure parameters such as track, camber inclination, spring travel, clearance and steering angle of car wheels, all while driving at speeds of up to 155mph.

Automotive manufacturers will drive prototype vehicles millions of miles for testing. WheelWatch is used to monitor the wheels to see how they behave during extreme manoeuvres, how they handle bumps and wet roads, and whether the wheel wells provide enough room when making sharp turns.

The EoSens cameras used in this system can run at 1.3-megapixel resolution at 500 frames per second, with 90dB dynamic range. One camera is mounted per wheel, and each camera aligns itself using coded measurement targets placed on the mudguard. The system continuously recalculates its position relative to these targets, achieving positional accuracy of ±0.1mm and angular accuracy of ±0.015°. The wheel itself is fitted with a pattern of dots from which the measurement data are collected.

Up to four cameras can be synchronised with each other and with other measurement sensors. Movements in the engine block can also be detected using additional cameras.

Kaya Instruments' JetCam with a Camera Link HS interface to transfer video over fibre optic cabling

This sort of monitoring system is a typical example of where high-speed cameras are used: recording fast events when testing automotive or aerospace components. Michael Yampolsky, CEO of Kaya Instruments, which supplies high-speed cameras, defines high-speed imaging as any application requiring more than 20Gb/s of bandwidth, equivalent to, for example, 2-megapixel resolution at 1,000fps or 5 megapixels at 400fps.
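Yampolsky's two examples line up with the 20Gb/s figure if each pixel is taken to be 10 bits deep. That bit depth is an assumption on our part; the article does not state it. A minimal Python check of the raw sensor data rate, ignoring interface overhead:

```python
def data_rate_gbps(megapixels: float, fps: float, bits_per_pixel: int = 10) -> float:
    """Raw sensor data rate in gigabits per second.

    bits_per_pixel=10 is an assumption; real cameras commonly use 8, 10 or 12 bits.
    Interface overhead (line coding, packet headers) is ignored.
    """
    pixels_per_second = megapixels * 1e6 * fps
    return pixels_per_second * bits_per_pixel / 1e9


for mp, fps in [(2.0, 1_000), (5.0, 400)]:
    print(f"{mp} MP @ {fps} fps -> {data_rate_gbps(mp, fps):.1f} Gb/s")
```

At 8 bits per pixel the same resolutions and frame rates come to 16Gb/s, so the quoted pairs imply data of roughly 10 bits per pixel.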

In factories, cameras operating at high frame rates are used predominantly for troubleshooting machinery rather than for traditional inspection, according to Stephen Ferrell, director of business development, Americas, at Mikrotron. Ferrell listed operations such as conveyance transfer points, filling, capping, labelling, stamping, indexing, stacking, inserting, cutting and trimming, which can take place in milliseconds and require hundreds to thousands of frames per second to capture and analyse the motion of machine components. These processes occur across many industries, including automotive, consumer products, electronics and pharmaceuticals.
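The link between event duration and frame rate is simple arithmetic: the number of frame intervals that fit inside an event is its duration multiplied by the frame rate. The sketch below works in both directions; the 2ms stroke and the 20-frame target are illustrative values, not figures from the article.

```python
import math


def frames_during_event(duration_s: float, fps: float) -> int:
    """Whole frame intervals that fit inside an event lasting duration_s.

    A tiny tolerance guards against floating-point results such as 2.9999999.
    """
    return math.floor(duration_s * fps + 1e-9)


def min_fps_for(duration_s: float, frames_wanted: int) -> float:
    """Lowest frame rate that spreads frames_wanted frame intervals across the event."""
    return frames_wanted / duration_s


# Illustrative numbers: a 2 ms stroke of a capping or cutting head
for fps in (500, 1_000, 10_000):
    print(fps, "fps ->", frames_during_event(0.002, fps), "frames")
print("for 20 frames:", min_fps_for(0.002, 20), "fps")
```

At 500fps a 2ms event fits inside a single frame interval, which is why analysing the motion of such operations calls for thousands of frames per second.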

At fast frame rates, FPGA or GPU processors become important for analysing images in real time, said Yampolsky.

WheelWatch images are analysed on board the Mikrotron camera, which is equipped with an FPGA, before being sent to a laptop inside the car via a GigE interface. Wheel target positions and target trajectories are available shortly after image acquisition. WheelWatch also computes all six degrees of freedom of the wheel in the vehicle co-ordinate system.

‘To date, machine vision solution vendors have mostly relied on using GPUs with CPUs in high-end PC-based systems to achieve high processing throughput,’ said Ferrell. More recently, however, FPGAs have begun to be used to run image processing algorithms embedded in the camera.

‘With the highly parallel design of FPGAs, image pre-processing, image segmentation, feature extraction and image interpretation in the spatial and frequency domains, using linear and non-linear filters, can be performed in real time at hundreds or thousands of frames per second,’ Ferrell said.
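FPGAs typically reach these rates by processing pixels as a stream: a 3×3 filter keeps only the two previous image rows in on-chip line buffers rather than whole frames, so each incoming pixel can yield an output after a fixed delay. The Python model below is a behavioural sketch of that line-buffer structure for a 3×3 box (sum) filter. It is not Mikrotron's design or FPGA code, and it handles interior pixels only, with no border treatment.

```python
from typing import Iterable, List


def stream_box3x3(pixels: Iterable[int], width: int) -> List[int]:
    """3x3 sum filter over a raster-order pixel stream, FPGA line-buffer style.

    Two line buffers, addressed by column, hold rows y-1 and y-2; a 3x3
    window register shifts one column per incoming pixel. Only interior
    outputs are produced (borders are skipped).
    """
    line1 = [0] * width  # pixels of row y-1 (one block RAM on a real FPGA)
    line2 = [0] * width  # pixels of row y-2
    window = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]  # 3 columns of (y-2, y-1, y)
    out: List[int] = []
    for i, p in enumerate(pixels):
        x, y = i % width, i // width
        column = [line2[x], line1[x], p]
        # Age the line buffers: row y-1 becomes y-2, the new pixel becomes y-1
        line2[x], line1[x] = line1[x], p
        # Shift the window left by one column and insert the new column
        window = [window[1], window[2], column]
        if x >= 2 and y >= 2:
            # Window covers rows y-2..y and columns x-2..x, centred on (x-1, y-1)
            out.append(sum(sum(col) for col in window))
    return out


def direct_box3x3(image: List[List[int]]) -> List[int]:
    """Reference implementation on a full in-memory frame, for comparison."""
    h, w = len(image), len(image[0])
    return [
        sum(image[yy][xx] for yy in range(y - 1, y + 2) for xx in range(x - 1, x + 2))
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]


image = [[(3 * x + 7 * y) % 11 for x in range(6)] for y in range(5)]
flat = [v for row in image for v in row]
assert stream_box3x3(flat, width=6) == direct_box3x3(image)
```

The streaming version never holds more than two rows, which is what lets hardware like this keep pace with the sensor instead of buffering complete frames.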

Coded measurement targets are placed on the mudguard with which each camera aligns itself. Credit: Mikrotron

The latest member of Mikrotron’s EoSens line of CoaXPress cameras, the EoSens 2.0CXP2, has a Xilinx Kintex UltraScale 35 FPGA, 2GB of internal DRAM memory, and a CoaXPress version 2.0 interface.

However, Yampolsky warned that manufacturers building high-speed cameras have to contend with power dissipation in their designs. ‘While using very fast ASIC devices, sensors or FPGAs, a lot of power is consumed, which is translated to heat in cameras and this impacts image quality.’ Careful power design and heat dissipation are therefore key factors when developing high-speed cameras.

As frame rates increase, reading out all that data becomes a bottleneck to analysing the images. High-speed cameras therefore have internal memory to capture and store a fast event, and the data is read out offline afterwards. A high-end camera such as the Fastcam SA-Z from Photron, which can run at 20,000fps at 1-megapixel resolution, can generate 128GB of 12-bit image data in just under 4.5 seconds. No streaming interface can transfer this amount of data from the camera in real time, which is why these cameras contain internal memory.
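The Photron figure can be checked by dividing memory size by the data rate. This sketch assumes that 1 megapixel means 1,024 × 1,024 pixels, that 12-bit pixels are stored packed, and that 128GB means 128 × 2^30 bytes; the article states none of these conventions.

```python
def record_seconds(memory_bytes: int, width: int, height: int,
                   bits_per_pixel: int, fps: float) -> float:
    """Seconds of recording that fit in on-camera memory (no metadata overhead)."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return memory_bytes / (bytes_per_frame * fps)


# Assumed conventions: 1 MP = 1024 x 1024, packed 12-bit pixels, 128 GB = 128 * 2**30 bytes
seconds = record_seconds(128 * 2**30, 1024, 1024, 12, 20_000)
print(f"{seconds:.2f} s")  # 4.37 s
```

The result, about 4.37 seconds, agrees with "just under 4.5 seconds" and corresponds to a sustained rate of roughly 31GB/s, well beyond any of the interfaces mentioned here.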

‘Until recently, cameras with 1MB images operating faster than 1,000fps required internal DRAM memory,’ Ferrell said. He added that, with the introduction of 10 Gigabit Ethernet and CoaXPress 1.1 and 2.0 interfaces, data throughput has increased to the point where other system components, such as PC buses and GPUs, can become the limiting factors.

WheelWatch motion analysis test bench. Credit: Mikrotron

The other option is to run the cameras in burst mode, recording at maximum frame rate while the event is happening and then reading out the data while waiting for the next event, for instance while the production line brings in the next product.
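Whether burst mode keeps up comes down to a simple budget: the data recorded during one event must be read out over the interface before the next event begins. The sketch below checks that budget. The event length, frame size, link rate and cycle time in the example are hypothetical values chosen for illustration, and protocol overhead is ignored.

```python
def burst_fits(event_s: float, fps: float, frame_bits: float,
               link_gbps: float, cycle_s: float) -> bool:
    """True if a burst recorded during event_s can be read out before the next event.

    The camera records at fps for event_s, then has the rest of the machine
    cycle (cycle_s - event_s) to transfer the burst over a link of link_gbps.
    """
    burst_bits = event_s * fps * frame_bits
    readout_s = burst_bits / (link_gbps * 1e9)
    return readout_s <= cycle_s - event_s


# Hypothetical: 10 ms event at 5,000 fps, 1 MP frames at 8 bits, 10 Gb/s link,
# one product arriving every 0.5 s
print(burst_fits(0.010, 5_000, 1e6 * 8, 10.0, 0.5))
```

Here the 50-frame burst takes about 40ms to read out, comfortably inside the 490ms gap; shrinking the cycle to 40ms would make the same check fail.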

Kaya Instruments’ latest JetCam product has a Camera Link HS interface that transfers video over fibre optic cabling. The camera can image at 2.1-megapixel resolution at almost 2,400fps and record in real time for up to 40 minutes. Yampolsky said the company is working on cameras that can sustain data rates of more than 100Gb/s; today its technology reaches 55Gb/s, higher than the 20Gb/s that regular four-channel CoaXPress cameras can reach.

Neuromorphic imaging

All the cameras mentioned so far are based on CMOS sensors capturing image frames. Now, however, event-based sensors and cameras are available that record changes in a scene, rather than each pixel registering light irrespective of what is happening in front of the lens.

Prophesee has recently released an industrial event-based vision sensor in a standard commercial package. The neuromorphic vision technology – it mimics the function of biological sight – can run at the equivalent of 10,000 images per second at VGA resolution, with high dynamic range and excellent power efficiency. Camera maker Imago Technologies has integrated Prophesee’s Metavision sensor inside its VisionCam EB smart camera.

Each pixel in the Metavision sensor is independent and asynchronous, adapting to the dynamics and lighting of its own part of the scene, and each event it reports carries the pixel's co-ordinates and a time stamp. If part of the scene is static, like the floor in an industrial setting, no information is recorded. Slow changes in the scene are sampled slowly; if something fast happens, the pixel reacts quickly.
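A common simplified model of an event-based pixel, used here as an assumption rather than a description of Prophesee's circuit, is that the pixel emits an event whenever the logarithm of its intensity moves by more than a contrast threshold from the level at its previous event. The sketch applies that model to (time, intensity) samples for one pixel; the 0.2 threshold is an arbitrary illustrative value.

```python
import math
from typing import List, Tuple


def pixel_events(samples: List[Tuple[float, float]],
                 threshold: float = 0.2) -> List[Tuple[float, int]]:
    """Convert (time, intensity) samples for one pixel into (time, polarity) events.

    Simplified, assumed model: an event (+1 brighter, -1 darker) is emitted each
    time log intensity moves by more than `threshold` from the reference level
    set at the previous event. Events are stamped with the sample time; real
    sensors time-stamp each crossing individually. A static pixel emits nothing.
    """
    events: List[Tuple[float, int]] = []
    _, first_intensity = samples[0]
    ref = math.log(first_intensity)
    for t, intensity in samples[1:]:
        level = math.log(intensity)
        while level - ref > threshold:   # brightening crossings
            ref += threshold
            events.append((t, +1))
        while ref - level > threshold:   # darkening crossings
            ref -= threshold
            events.append((t, -1))
    return events


print(pixel_events([(0.0, 100), (1.0, 100), (2.0, 100)]))  # static pixel: []
print(pixel_events([(0.0, 100), (1.0, 200)]))              # brightening edge
```

In a mostly static scene most pixels behave like the first case and produce no data, which is the source of the large reduction in data volume compared with a frame-based sensor.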

Speaking to Imaging and Machine Vision Europe, Luca Verre, co-founder and CEO of Prophesee, said that the sensor produces orders of magnitude less data than a traditional frame-based sensor, because it records only dynamic events. This means that high-speed counting, for example, operating at the equivalent of thousands of frames per second, can run on a mobile system-on-chip such as a Snapdragon 845.

Carsten Strampe, general manager of Imago Technologies, noted that, using event-based cameras, high-speed applications can be rethought. ‘There are ideas for fast counting of objects, vibration analysis and the control of kinematic movements. But also surface inspection, the measurement of object velocities or the analysis of particles in liquids is part of the application spectrum,’ he said.

Measuring machine vibration frequency and amplitude in real time can give an indication of when a machine might be about to fail, for example. Prophesee is exploring ways of inspecting mobile phone screens for surface damage, by vibrating the phone and measuring how light is scattered from the surface. Light will reflect differently depending on whether the phone is scratched, or flat and undamaged.
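One way to turn event time stamps into a vibration frequency is to treat each cycle's burst of brightening (ON) events at a pixel as one tick and measure the interval between ticks. The sketch below does that under stated assumptions: one ON burst per cycle, and quiet gaps between bursts longer than `gap_s`. It is an illustration, not Prophesee's method; the 250Hz synthetic signal is invented for the example.

```python
from statistics import median
from typing import List, Tuple


def vibration_frequency(events: List[Tuple[float, int]], gap_s: float) -> float:
    """Estimate vibration frequency (Hz) from (timestamp, polarity) events at one pixel.

    Assumes each cycle produces one burst of ON (+1) events, with bursts
    separated by quiet periods longer than gap_s.
    """
    on_times = sorted(t for t, polarity in events if polarity > 0)
    if not on_times:
        raise ValueError("no ON events")
    burst_starts = [on_times[0]]
    for prev, t in zip(on_times, on_times[1:]):
        if t - prev > gap_s:
            burst_starts.append(t)
    if len(burst_starts) < 2:
        raise ValueError("need at least two bursts to measure a period")
    periods = [b - a for a, b in zip(burst_starts, burst_starts[1:])]
    return 1.0 / median(periods)  # median resists occasional missed or split bursts


# Synthetic 250 Hz vibration: three ON events, then three OFF events, per cycle
period = 1 / 250
events: List[Tuple[float, int]] = []
for k in range(20):
    start = k * period
    events += [(start + j * 1e-5, +1) for j in range(3)]
    events += [(start + period / 2 + j * 1e-5, -1) for j in range(3)]
print(round(vibration_frequency(events, gap_s=period / 4)))
```

Because each event carries its own time stamp, the period is measured directly from the events rather than being limited by a frame interval.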

A BMW test vehicle equipped with WheelWatch. Credit: Mikrotron

Imago’s VisionCam EB makes all the calculations inside the camera on a dual-core Arm Cortex-A15 processor. A stream of events arrives and is processed into information with the help of Prophesee’s Metavision software library.

Swiss firm Inivation also supplies event-based vision technology through its Dynamic Vision Platform, which operates on broadly similar principles.

Event-based imaging has been known in academia for a number of years, but Prophesee's sensor in a standard package, along with other neuromorphic vision systems, offers a new way of inspecting very fast events.

High-speed camera cuts cell analysis from days to minutes

Scientists at the Dresden University of Technology have developed a high-speed imaging technique to analyse cell samples 10,000 times faster than conventional methods.

The Real-Time Deformability Cytometry (RT-DC) system, which the team has called AcCellerator, can measure up to 1,000 cells per second. The scientists founded Zellmechanik Dresden to commercialise the flow cytometry technique.

The mechanical properties of a cell give information about its health: how a cell deforms can act as a label-free biomarker to gain an understanding of drug treatment effects, immune cell activation, stem cell differentiation, cancer prognosis, or the state and quality of cultured cells.

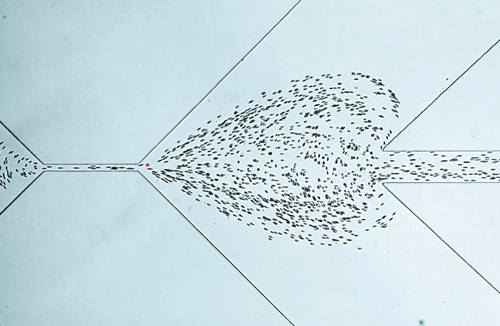

Heart-shaped flow lines are formed in the flow cytometry setup. Credit: Zellmechanik Dresden

The RT-DC technique forces cells through a micro-channel, and the pressure gradient of the fluid creates a flow profile and deforms the cells. Softer cells display greater deformations.

The cells flow through the cytometry set-up at a speed of 10cm/s and are viewed under a microscope with 400x magnification. An EoSens CL high-speed camera from Mikrotron is connected to the microscope and captures each individual cell, at up to 4,000fps.

The camera also controls the 1μs LED light impulse sent out for each image acquisition. The standard resolution of 250 x 80 pixels is automatically adjusted to the channel width.
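The flow speed, frame rate and flash duration quoted above fix two useful numbers: how far a cell moves between frames, and how far it moves while the LED is lit, which sets the motion blur. The arithmetic below ignores any variation of flow speed across the channel.

```python
FLOW_SPEED_M_S = 0.10   # 10 cm/s, from the article
FRAME_RATE_HZ = 4_000   # up to 4,000 fps
FLASH_S = 1e-6          # 1 microsecond LED pulse

# Distance travelled between consecutive frames, in micrometres
shift_per_frame_um = FLOW_SPEED_M_S / FRAME_RATE_HZ * 1e6
# Distance travelled while the LED is on, i.e. the motion blur, in micrometres
blur_um = FLOW_SPEED_M_S * FLASH_S * 1e6

print(f"displacement between frames: {shift_per_frame_um:.0f} um")  # 25 um
print(f"motion blur during flash:    {blur_um:.1f} um")              # 0.1 um
```

A 0.1μm smear is small compared with a blood cell, which is several micrometres across, so the short flash effectively freezes each cell even though it moves 25μm from one frame to the next.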

All images are transferred in real time to the computer via a Camera Link interface. A program based on National Instruments’ LabVIEW then measures the deformation of each cell; analysing a single image takes less than 250μs.
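An analysis time under 250μs per image is what allows processing to keep pace with the camera, since 1/4,000 of a second is exactly 250μs. The article does not say how deformation is computed. A minimal sketch, assuming the definition commonly used in published RT-DC work: deformation is one minus circularity, where circularity is 2√(πA)/P for a contour of area A and perimeter P, so a circle scores 0 and elongated shapes score higher. The contour here is a plain list of (x, y) points; the `ellipse` helper is hypothetical test data, whereas a real pipeline would obtain the contour by segmenting the camera image.

```python
import math
from typing import List, Tuple


def deformation(contour: List[Tuple[float, float]]) -> float:
    """Deformation = 1 - circularity, with circularity = 2*sqrt(pi*A)/P.

    Assumed RT-DC-style definition. A is the polygon area (shoelace formula)
    and P its perimeter. Returns ~0 for a circle, larger for deformed shapes.
    """
    n = len(contour)
    twice_area = 0.0
    perimeter = 0.0
    for i in range(n):
        x0, y0 = contour[i]
        x1, y1 = contour[(i + 1) % n]
        twice_area += x0 * y1 - x1 * y0
        perimeter += math.hypot(x1 - x0, y1 - y0)
    area = abs(twice_area) / 2
    return 1 - 2 * math.sqrt(math.pi * area) / perimeter


def ellipse(a: float, b: float, n: int = 360) -> List[Tuple[float, float]]:
    """Hypothetical test contour: polygon approximation of an ellipse."""
    return [(a * math.cos(2 * math.pi * k / n), b * math.sin(2 * math.pi * k / n))
            for k in range(n)]


print(f"round cell:     {deformation(ellipse(5, 5)):.4f}")  # close to 0
print(f"elongated cell: {deformation(ellipse(6, 4)):.4f}")  # larger
```

A scale-free measure of this kind compares cells of different sizes on the same footing, which suits a fingerprint built from thousands of individual cells.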

‘This process enables us to measure the mechanical properties of several hundred cells per second. In one minute, this permits us to carry out analysis that would take a week in the technologies we used before,’ said Dr Oliver Otto, CEO of Zellmechanik Dresden. ‘In 15 minutes a precise characterisation of all blood cell types, including cell activation status, is analysed. Due to the high throughput of cells, only one single drop of blood is needed for the analysis.’

Thanks to the AcCellerator, the evaluation of cell mechanics has become usable in clinical applications for the first time. In the future, mechanical fingerprinting of cells could be used for fast diagnosis and for monitoring infections, with changes in blood count or metastasising cells detected in minutes.

The technology is also opening up many new areas of application in research, by enabling scientists to examine all processes in which cytoskeleton changes are responsible for the mechanical stabilisation of the cell, including migration or cell division.