The idea of a Fourth Industrial Revolution – or Industry 4.0 – has been floating around for a while now. At its crux is interconnectedness, from the IoT platforms and the sensors picking up the data to the cloud where everything is brought together. Industry 4.0 factories will no longer be a set of different machines working on individual, independent tasks, but a coordinated mechanism fed by data and smart algorithms.

Some companies are already experiencing that future today. From Siemens calling Industry 4.0 ‘the future of industry’ to Ericsson hailing it as ‘a new era in manufacturing’, there’s no shortage of momentum around this transformation, but exactly what kind of role can machine vision play?

The edge of a new revolution

Humans are fundamentally visual creatures. All of us rely heavily on visual cues to assess situations and make decisions. Even for machines, which can use all sorts of sensors and aren’t faced with any inherent biological limitations, vision is likely to be one of the key sources of information.

‘Vision is one of the most important senses for humans, but also for machines,’ agrees Sören Böge, head of product management 2D image acquisition at Basler, a manufacturer of imaging components for computer vision applications. ‘You have several sensors in a factory – for example, photoelectric sensors or proximity sensors – but comprehensive tasks, such as sorting, quality analysis or orienting an AGV [Automated Guided Vehicle] require vision to deliver the significant data you need to make a factory smart enough to make its own decisions.’

For factories looking to incorporate machine vision, the challenge lies not so much with the sensors themselves, but rather how these sensors communicate with each other. A factory’s ability to make its own decisions based on this dialogue is what truly differentiates a smart factory.

The first prerequisite for this (and Industry 4.0 in general) is true interconnectedness. You can’t have islands or isolated components in a factory. Everything needs to be connected. This is not a new idea, but what is new is how the components connect. Increasingly, emerging communication technologies and protocols are enabling the processes that smart factories require.

‘You still have bus system-based factories but, if you look in the future, 5G, wi-fi 6 and other standards of wireless connection will play a significant role, which will allow you to interconnect broader spaces, broader areas and even multiple factories in the future,’ Böge adds. Basler’s prioritising of communication standards is also clear from the fact it is the first machine vision company to join the 5G Alliance for Connected Industries and Automation.

Even with the new standards of wireless connection, however, the industry still faces sizeable limitations. Machine vision implies a lot of information; even standard-speed cameras can stream at 120 MB per second and, if you have dozens – or hundreds – of cameras and machines and other utilities, even wi-fi 6 likely won’t be sufficient to support all this data transfer. So Böge expects we’re not quite done with wires yet, and there’s still a way to go before streaming becomes truly wireless.
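The arithmetic behind this limitation can be sketched in a few lines. The 120 MB per second figure is the one quoted above; the link rate is an assumption, taken here as the theoretical wi-fi 6 peak of 9.6 Gbit/s, which real installations will not reach. Under that optimistic assumption, a single link is saturated by about ten full-rate camera streams, well short of dozens or hundreds.

```python
# Back-of-the-envelope check: how many full-rate camera streams fit into one link?
CAMERA_MB_PER_S = 120          # per-camera stream rate quoted in the article
WIFI6_PEAK_MBIT_PER_S = 9600   # assumption: theoretical wi-fi 6 peak (9.6 Gbit/s)


def link_capacity_mb_per_s(mbit_per_s):
    """Convert a link rate from Mbit/s to MB/s (8 bits per byte)."""
    return mbit_per_s / 8


def max_cameras(link_mb_per_s, camera_mb_per_s=CAMERA_MB_PER_S):
    """Number of whole camera streams that fit into the link."""
    return int(link_mb_per_s // camera_mb_per_s)


if __name__ == "__main__":
    capacity = link_capacity_mb_per_s(WIFI6_PEAK_MBIT_PER_S)
    print(f"Link capacity: {capacity:.0f} MB/s")
    print(f"Full-rate cameras supported: {max_cameras(capacity)}")
```

Swapping in a more realistic sustained throughput for `WIFI6_PEAK_MBIT_PER_S` only lowers the camera count further.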

But there are alternatives. An important accelerator of machine vision in smart factories is edge computing, where smaller computers with sufficient calculation power are embedded into systems to do some of the pre-analysis work at the edge. Edge systems already exist, but they face important cost and security challenges. When edge systems become cost-competitive with PCs, then you can set up a truly smart and independent factory.
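To illustrate why pre-analysis at the edge eases the bandwidth problem, here is a minimal sketch in which a device reduces each frame to a small summary and transmits only that. The function names, the brightness threshold and the summary fields are all hypothetical, and a real system would run a proper vision algorithm or neural network rather than this toy brightness check.

```python
import json


def pre_analyse(frame, threshold=128):
    """Reduce a greyscale frame (rows of 0-255 pixel values) to a compact summary.

    Hypothetical stand-in for the pre-analysis an edge device might perform.
    """
    pixels = [p for row in frame for p in row]
    bright = sum(1 for p in pixels if p >= threshold)
    return {
        "mean_brightness": sum(pixels) / len(pixels),
        "bright_fraction": bright / len(pixels),
    }


def payload_bytes(summary):
    """Size of the summary once serialised as JSON for transmission."""
    return len(json.dumps(summary).encode("utf-8"))


if __name__ == "__main__":
    # A synthetic 100 x 100 frame: 10,000 bytes if sent raw at 8 bits per pixel.
    frame = [[200] * 100 for _ in range(100)]
    summary = pre_analyse(frame)
    print(summary, payload_bytes(summary), "bytes instead of", 100 * 100)
```

The design point is that only the result of the analysis crosses the network, not the image itself.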

Despite these shortcomings, however, Industry 4.0 isn’t something on the near-horizon – it’s happening already. ‘If we look at the factory, the game changer for me in Industry 4.0 is not hard cut,’ says Böge. ‘I don’t think it’s something that’s starting now, but is an evolution that began quite some time ago.’

As more large companies share their success stories, we can expect confidence to increase and the new industrial revolution to truly take shape, with machine vision as its core accelerator. When that happens, how could we expect a smart factory to work? ‘Almost like a home automation system,’ suggests Böge. The idea would be to have an automated supply store, where the operative supply management could be initialised automatically for tasks such as reordering and stock management. Then the parts would be transported to the assembly machines, where robots would load and unload them. Everything would be quality inspected and the final product put on the storage shelf, where there would be a connection to shipping and so on. ‘So, working back from the customer order, you could more or less complete production planning and, in nearly every step of this, vision systems would be a central success factor,’ adds Böge.

Artificial intelligence, real images

Industry 4.0 – and digitisation in general – have become relatively ‘fuzzy’ terms, and the terminology also has a geographical component. In the USA, for instance, IIoT (Industrial Internet of Things) is the buzzword that organisations respond to; it’s not Industry 4.0. Whatever you call it, though, it is all based on AI, and deep learning specifically.

It is widely recognised that deep learning is now the state-of-the-art in machine learning for machine vision and it is being increasingly widely deployed across industrial applications. As well as studies showing this, Nvidia, one of the key players in the vision game, also clearly states it. AI, and deep learning specifically, enable computer vision models to learn, adapt and perform comparably to a human expert in a factory, while requiring significantly less input (and lower costs in the long run). In the food industry, for instance, AI systems have already become proficient at detecting and grading various food products – in some cases with an accuracy of more than 95 per cent, or even 99 per cent, according to research by Lili Zhu and Petros Spachos et al in their recent study, Deep learning and machine vision for food processing: A survey.

‘Machine vision systems are widely used in food safety inspection, food processing monitoring, foreign object detection and other domains,’ write the authors. ‘It provides researchers and the industry with faster and more efficient working methods and makes it possible for consumers to obtain safer food. The systems’ processing capacity can be boosted to a large extent, especially when using machine learning methods.’

Methods such as those common in food detection, grading and processing can also be used in factories to deal with components or products, but significant obstacles remain. Among these is the availability of visual data. To train a deep-learning algorithm for tasks such as sorting, picking and quality inspection, you need a lot of visual data, and obtaining good quality data is rarely easy. But solutions are emerging on this front, too. Siemens’ SynthAI generates thousands of randomised annotated synthetic images just from 3D CAD data. This data can then be used for training, giving organisations fully annotated datasets to train their systems on. This not only shortens data collection and training time, but also eliminates tedious manual image capture and labelling, and produces a model that can also be used offline.
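The reason synthetic data arrives ‘fully annotated’ is that the generator already knows where it placed each object. The sketch below illustrates only that principle and is not SynthAI or its API: instead of rendering from 3D CAD data, it draws a bright rectangle at a random position on a noisy background and returns the bounding box alongside the image. All names, sizes and value ranges are hypothetical.

```python
import random


def make_sample(rng, width=64, height=64):
    """Return (image, annotation) for one randomised synthetic sample.

    The image is a list of rows of 0-255 grey values; the rectangle is a toy
    stand-in for a rendered part.
    """
    w, h = rng.randint(8, 24), rng.randint(8, 24)
    x, y = rng.randint(0, width - w), rng.randint(0, height - h)
    # Noisy dark background.
    image = [[rng.randint(0, 40) for _ in range(width)] for _ in range(height)]
    # Bright object with a randomised grey level.
    level = rng.randint(150, 255)
    for row in range(y, y + h):
        for col in range(x, x + w):
            image[row][col] = level
    # The label comes for free: the generator knows the object's position.
    annotation = {"label": "part", "bbox": (x, y, w, h)}
    return image, annotation


def make_dataset(n, seed=0):
    """Generate n annotated samples reproducibly from a fixed seed."""
    rng = random.Random(seed)
    return [make_sample(rng) for _ in range(n)]


if __name__ == "__main__":
    dataset = make_dataset(1000)
    print(len(dataset), "samples; first annotation:", dataset[0][1])
```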

Easily accessible, high-quality data is important for developing efficient algorithms, which in turn not only speed up the machine vision-based process but also make the entire system consume less power. That matters because it makes the system easier to run on edge machines and over wireless connections.

Other companies, including Basler, have demonstrators for automating various aspects of factories and are working at automating robots, often remarkably quickly. Within hours, an example demonstrator can be set up to separate various products placed randomly below the robot, even for people without any machine vision or programming experience – something that would have taken thousands of lines of code and thrown up many complications just a decade ago.

So, what’s the delay?

Given all this, why aren’t we seeing smart factories pop up everywhere? Based on signals from both industry and academia, the technology required for Industry 4.0 is already here, but there remains a significant gap between something being possible and something that supports a good business case. We know that, in general, industry is slower than research to adapt.

Perhaps the biggest current deterrent for smart factories is that, despite tangible progress being made, there are few large-scale success stories. Setting up a factory is complicated in the first place: it’s a big investment and you need everything to work smoothly. Investors are often understandably hesitant to implement the latest technology because that technology doesn’t have a track record.

There’s also a knowledge gap to bridge. Even a smart factory needs ongoing monitoring, so you need engineers who are well-versed in new wi-fi standards, machine learning algorithms and edge computing. You can’t just expect your existing engineers, who usually work with legacy systems, to be automatically confident or capable in every new technology.

It’s going to take time, but the signs are already out there. It’s perhaps just a matter of confidence and cash – and this isn’t an era when either is in plentiful supply.

It is clear that intelligent image processing opens up many new possibilities, but what does this actually mean for small and medium-sized enterprises? Find out how user-friendly systems will pave the way for the new technology true to the claim: ‘Deep learning: a game changer in automation.’

Artificial intelligence (AI) plays a key role in the digital age. Self-learning algorithms have the potential to improve processes and products in the field of machine vision and to secure competitive advantages. They are equally suited to automation and logistics as they are to monitoring products and processing goods. This is also due to the fact that classical image processing solutions work with a fixed set of rules, making organic or fast-changing objects a major challenge. Artificial intelligence, on the other hand, can handle such cases effortlessly. So, where are the challenges to technology? And what needs to be done to make it widely accepted?

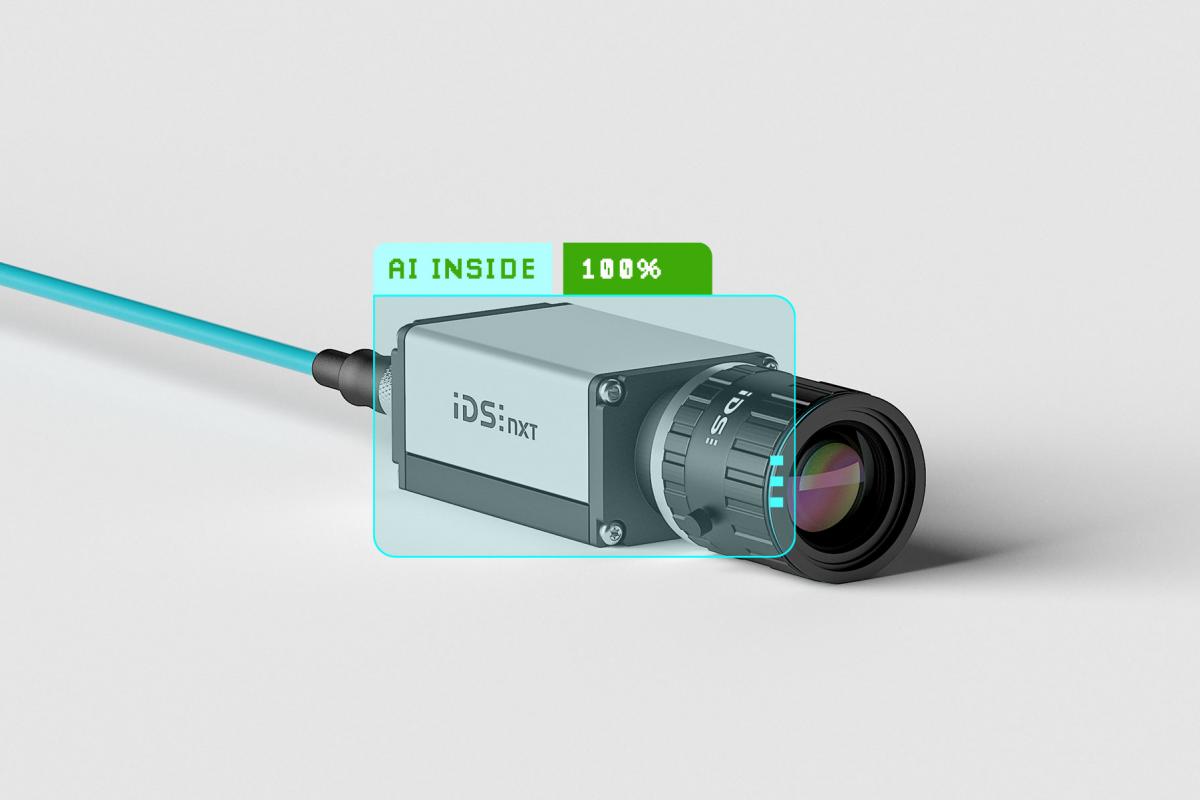

The hurdle for the application of AI-based image processing solutions is still quite high. They usually require expertise, programming efforts and investment in computer and storage hardware. Not only training a neural network, but also using it and evaluating the results require knowledge of hardware, software and interfaces. This poses challenges for many companies. IDS shows that it can be done differently: The IDS NXT AI vision system comes with all the necessary tools and workflows, allowing users to easily build intelligent vision solutions.

The IDS NXT AI vision system allows users to easily build intelligent vision solutions

Using the IDS NXT lighthouse Cloud software, even users with no prior knowledge of artificial intelligence or camera programming can train a neural network. Since it is a web application, all functions and the necessary infrastructure are immediately available. The engineer or programmer does not need to set up a development environment, but can start training a neural network straight away. Three basic steps are required for this: uploading sample images, labelling the images and starting the automatic training. The generated network can be run directly on the IDS NXT industrial cameras, which are capable of delivering the desired information or passing commands to machines via REST or OPC UA.

Deep Learning: a game changer in automation

One thing is certain: artificial intelligence is a game changer. The technology is penetrating new areas with incredible speed and enabling applications where classic image processing is too expensive, inflexible and also too complex. Dr Alexander Windberger, AI specialist at IDS Imaging Development, explains: ‘Not only has the game changed, but also the players. AI-based image processing works differently from classic rule-based image processing, because the quality of the results is no longer just the product of manually developed programme code, but is primarily determined by the quality of the data sets used.’

Thus, users need different core competences to work with AI vision, and the way vision tasks are approached and processed is changing. Domain experts are coming into the picture, as they can use their knowledge to keep creating value from data and react flexibly to shifts in data and concepts during operations.

However, many companies still have reservations about the new technology. They lack the expertise and the time to familiarise themselves with the subject in detail. At the same time, the vision community is growing from the IoT sector and the start-up scene. With the new application areas and user groups, there are inevitably other use cases and requirements. The classic programming SDK is no longer sufficient to provide the best possible support for the entire workflow, from the creation of datasets and training to the implementation of a neural network.

Looking to the future, Dr Windberger states: ‘We realise that entirely new tools are emerging today for working with AI vision, which are used by very heterogeneous user groups without AI and programming knowledge. This improves the usability of the tools and lowers the barrier to entry, which is currently significantly accelerating the spread of AI-based image processing. Ultimately, AI is a tool for humans and so it must be intuitive and efficient to use.’

Driving force behind current developments

No other component collects and interprets as much data as image processing. It allows what is seen, such as product characteristics (e.g. length, distance, number), states (presence/absence) or quality, to be monitored and processed during production, and the results to be passed on to the value-added systems in the network. This not only determines whether the inspected part meets the desired characteristics, but also triggers an intelligent action, such as automated sorting, depending on the result. Small and medium-sized enterprises in particular, which could not previously automate production competitively because of their low piece counts, benefit from this added flexibility.
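The step from inspection result to action can be sketched as a small decision function. This is a hypothetical illustration, not IDS NXT code: the `Inspection` fields mirror the characteristics named above (presence, length, number), while the nominal values, tolerance and action names are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Inspection:
    """What a vision system might report for one part (hypothetical fields)."""
    present: bool
    length_mm: float
    count: int


def sort_action(result, nominal_length_mm=50.0, tolerance_mm=0.5, expected_count=4):
    """Map an inspection result to a sorting action."""
    if not result.present:
        return "skip"      # nothing under the camera
    if abs(result.length_mm - nominal_length_mm) > tolerance_mm:
        return "reject"    # length out of tolerance
    if result.count != expected_count:
        return "rework"    # wrong number of features, e.g. holes or pins
    return "accept"


if __name__ == "__main__":
    for part in (
        Inspection(True, 50.2, 4),
        Inspection(True, 51.0, 4),
        Inspection(True, 50.0, 3),
        Inspection(False, 0.0, 0),
    ):
        print(part, "->", sort_action(part))
```

In a deployed system the returned action would be forwarded to the sorting hardware over whatever interface the plant uses.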

Object detection using the IDS NXT industrial camera

Factory automation with smart cameras therefore also offers the possibility of reacting more flexibly to difficult market conditions while guaranteeing consistently high production quality and efficiency. Companies for whom the leap to end-to-end digitisation and automation is too great can make significant progress thanks to AI-based image processing. Holistic, user-friendly systems such as IDS NXT pave the way for this.

More information

https://en.ids-imaging.com