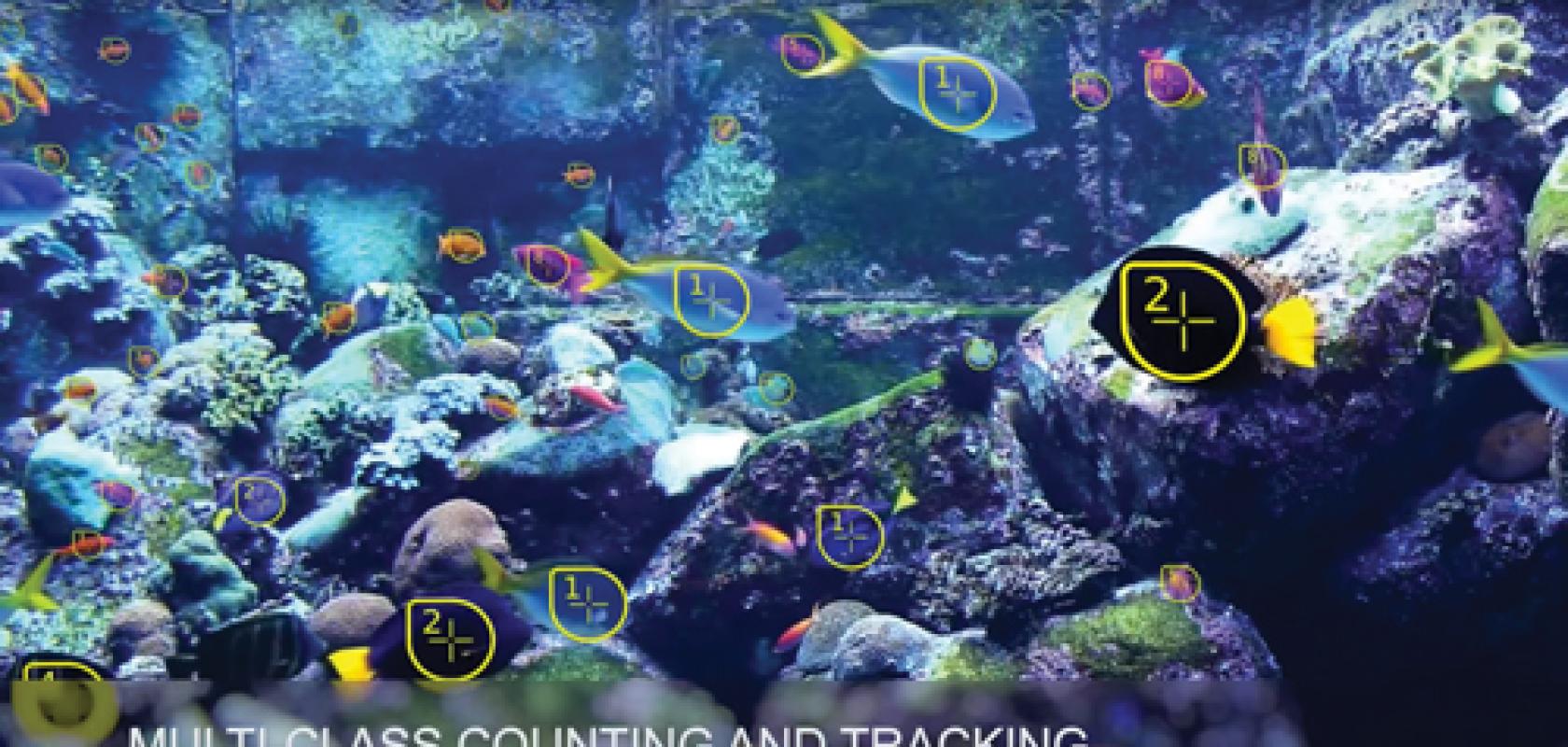

Tracking fish in real time via deep learning algorithms. Credit: Vidi Systems

Science fiction films like The Terminator might have introduced artificial intelligence (AI) to the wider public, but the concept has been around since before computers. The Turing Test – if a computer’s answers to questions cannot be distinguished from a human respondent, then it must be ‘thinking’ – was proposed in 1951. Two years before that, Alan Turing was quoted in the London Times newspaper as saying: ‘I do not see why it [the machine] should not enter any one of the fields normally covered by the human intellect, and eventually compete on equal terms. I do not think you even draw the line about sonnets, though the comparison is perhaps a little bit unfair because a sonnet written by a machine will be better appreciated by another machine.’

More than half a decade before that, McCullock and Pitts published their groundbreaking paper ‘A Logical Calculus of the Ideas Immanent in Nervous Activity’, which went on to become a foundational part of the study of artificial neural networks, with many applications in artificial intelligence research. As always, science fiction gets there before the scientists, with Issac Asimov publishing his short story Liar! in 1941. This went on to be part of the I, Robot series and included the famous ‘three laws of robotics’.

Artificial intelligence has now gone from theory to reality, brought about by deep learning algorithms and ever faster processors. One of the most high-profile examples of its progress came in 2014, with the Turing Test beaten when a supercomputer fooled people into thinking it was a 13-year-old boy at the Royal Society in London. And earlier this year, Google’s program based on deep learning algorithms, DeepMind AlphaGo, beat – in fact trounced – the South Korean master Lee Sedol in the exceptionally complex board game, Go.

These advances in deep learning have significant implications for machine vision – not just for factory automation systems, but for agriculture, transport and medicine, to name just a few.

Where AI differs from deep learning

When AlphaGo’s victory was reported, the media used the terms AI, machine learning and deep learning to describe how the program won. But the three terms, while related, are not the same thing.

As the former Wired journalist Michael Copeland put it: ‘The easiest way to think of their relationship is to visualise them as concentric circles with AI – the idea that came first – the largest; then machine learning, which blossomed later, and finally deep learning, which is driving today’s AI explosion, fitting inside both.’

Defect detection in a rotating medical screw. It is far simpler to train a system that understands how to spot what is wrong than one to identify all possibilities.

Machine learning, as Copeland described it, is a ‘narrow AI’, a way of eliminating the process of ‘hand-coding software routines with a specific set of instructions to accomplish a particular task. [Instead] the machine is “trained” using large amounts of data and algorithms that give it the ability to learn how to perform the task.’

In industrial machine vision, machine learning is well known, with algorithms that perform edge or shape detection, measurement calculation, or OCR. These require significant programming and are still prone to error if, for example, the image is blurred or obscured, or the angle of the camera is wrong.

Deep learning takes this further

Michał Czardybon, general manager of Adaptive Vision, stated: ‘Deep learning based solutions can deliver results that have not been achievable so far with standard machine vision algorithms.’

Deep learning in machine vision has been advancing quickly over the past decade. Olivier Despont is from Swiss machine vision software firm Vidi Systems, which claims to be the first to develop a deep learning-based industrial vision analysis system. Vidi Systems is shortlisted for the Vision Award for its technology, the winner of which will be announced at the Vision show in Stuttgart, Germany in November.

‘Before 2006, the way neural networks were conceived didn’t work very well and the computation power was not there,’ Despont said, adding that the change in 2006 was when British-born scientist Geoffrey Hinton developed what is now called deep learning.

Hinton’s 1978 PhD was in artificial intelligence and he is now a distinguished researcher at Google, as well as emeritus professor at the University of Toronto. He, along with Professor Yann LeCun, director of AI research at Facebook and Silver Professor at the Courant Institute, New York University, has played a key role in the field’s evolution – the results of their work can be seen in Google’s and Facebook’s ability to recognise specific people in photographs, from a huge number of angles and even when they’re very small in the image.

Vidi Systems’ software is based on the approach developed by LeCun. Despont described deep learning as the ability to teach a machine as if it were a child: ‘It really works like the human brain. You and me, if we see [something] two or three [times], we can already contextualise what [it] should look like – what eyes should look like, what a dog should look like. And that’s with two or three [instances].’

It goes without saying, however, that the systems now available and those being developed aren’t yet as advanced as the human brain.

Status for industrial vision

A straw poll of some industrial vision software firms – Adaptive Vision, Matrox Imaging, MVTec, Teledyne Dalsa and Vidi Systems – shows deep learning is playing a key part in their strategies. Most are either developing or, in the case of MVTec and Vidi Systems, have launched or are about to launch a deep learning-based product – MVTec will release its first deep learning product, Halcon 13, on 1 November.

Speaking with these groups, the importance of sample size came up again and again. Adaptive Vision’s Czardybon commented: ‘[A] typical example is identification of automotive parts delivered for refurbishment. The images contain objects at very different orientations and most of them are dirty or contain superfluous labels; some are incomplete or even broken. While the database can contain more than 10,000 references, the task of correctly stating the vendor and part number is extremely difficult with the tools we have been using so far. And here comes deep learning. It requires laborious preparation of samples, designing a dedicated structure of convolutional neural networks and training the system in the cloud, but then the final result clearly surpasses everything that we have seen in the past.’

Consumer companies are able to solve this through their users – tagging a friend in a photo on Facebook or Google helps the algorithm. The same is true for OCR and handwriting recognition with Recaptcha – now owned by Google – which gets people to prove they’re human by writing a number from a picture or a string of letters.

But it’s a different proposition for a business-to-business firm. MVTec’s Johannes Hiltner remarked: ‘Most of the applications for certain problems, like defect detection, are quite similar to each other, even though the objects are different… We are now trying to develop [the idea] that the problem of defect classification is similar to a certain problem set. We might be able to provide a pre-trained deep learning network optimised for this kind of problem and still allow our customers to add a smaller set of images – here we are talking 10 images, for example, per class.

‘This has to be usable for our customers,’ he continued. ‘[None] of our customers want to spend weeks and weeks labelling hundreds and thousands of images.’ Taking such an approach, Hiltner said, would also mean MVTec could provide a deep learning approach where its customers don’t have these hundreds of thousands of images to begin with.

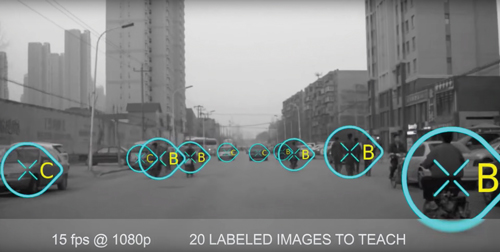

Vidi Systems' software was taught to identify the position of trucks, cars, bikes and pedestrians with just 20 images. (Credit: Vidi Systems)

The one exception to this sample size issue was Vidi Systems. Despont said: ‘We don’t need millions of images on our site. As we come from factory automation, we know that when they [factories] inspect a product we have maybe four, five, six parts, and then we need to acquire an image of this part.’

He added that Vidi Systems has some tricks, so the system can be taught even with low sample numbers of 10 or 20. Despont said the software can be ‘as sound, or sometimes even sounder’ than using countless images.

One example of this was tracking and counting different fish species swimming in a tank. With just 80 images, Despont said, the system could be trained to identify and label all eight species, no matter the size or angle to the camera.

Teledyne Dalsa’s Bruno Menard noted that, because of the large number of image samples necessary for classification, significant computing power is needed to train the machine in a reasonable time. ‘Recent advances in GPU technology, [which are] very suitable for deep learning given the parallel nature of it, tend to show that implementing deep learning-based solutions is possible today. But the biggest challenge in machine vision remains to create the training database. Companies that are willing to invest time and money in building these huge image databases might be able to end up with a commercial system very soon, given the underlying technology is present.’

Principles and technology

Deep learning is highly data intensive, but the camera technology is less so. Despont observed: ‘As long as you can see what matters – it could be infrared, x-ray, hyperspectral – if you can show the image and… a human can interpret it, then the system can.’

At the heart of any deep learning system is a fast processor. Vidi Systems specifies Nvidia’s GPUs, with the application dictating the speed of the chip, and the software can operate on PCs running either Linux or Windows.

MVTec’s Hiltner also stressed the importance of parallelisation: ‘We’ve focused a lot on optimising our algorithms on speed. We use multi-core CPUs and our library makes use of these... automatically.

‘Certain CPU manufacturers provide specific hardware and specific instruction sets… which allow us to parallelise certain calculations better.’ Hiltner said using Intel’s AVX2 – and similarly with Arm’s Neon instruction set – MVTec was able to gain a 300 per cent speed improvement.

As to image resolution, this can be a double-edged sword. Hiltner noted that larger images are ‘more precise and you can do more image processing. On the other hand, the bigger the image, the more data you have to use and… thus more time is being spent on the processing.

‘We implement a lot of ways that allow our customers to speed up the processing.’ He gave the example of a 10 megapixel image, which would be very demanding, but by creating a 100 x 100 pixel sampled-down image to undertake a pre-search this can be sped up significantly.

Vidi Systems’ Despont reiterated this approach: ‘The way we produce the image, we are not using brute force; we are using focus and attention. So, instead of analysing the image as a whole, we drive the system to analyse small parts of it. Then the system learns this and [can] focus on this very quickly.

Where will be the big gains?

Both Despont and Hiltner stressed that the overwhelming majority of current applications for Vidi Systems and MVTec are on the factory floor. But agriculture, health and smart vehicles were also highlighted.

Despont explained that, in healthcare, the system could search near instantaneously, and highly accurately, for cells infected by the malaria parasite, or interpret radiography images in order to allow the trained medical staff to spend time elsewhere. And being based on statistical probabilities, the system would be able to flag up any borderline calls for a second opinion.

Future improvements

GPU technology is advancing quickly, which means training time is coming down considerably. Despont remarked: ‘Every year the capability is doubling. And every year – or at each release – we can improve from 20 per cent up to 50 per cent the... processing and training time. Today’s technology is starting to allow some real-time applications.’

This also means that GPUs can rapidly become out of date. One possible approach to solve this is through cloud computing, where the systems are constantly updated to the latest hardware. Traditionally, cloud servers for machine learning have been better suited to hobbyist applications. Companies like Nervana Systems, now owned by Intel, may be making this a more realistic proposition for in the future.

Additionally, according to Despont, miniaturisation could be a likely next step. With Moore’s Law enabling smaller GPUs that still have huge processing power, potentially four parallel GPUs could fit into one, which would mean a phone could run deep learning vision applications. And, as smart cars become more of a reality, the image processors would also be able to deal with the environmental conditions and extract all the information really quickly.