3D vision cameras and technologies are proliferating, so attention is turning to how to transport 3D vision data in a standardised way.

The answer for most machine vision interface standards is GenDC (Generic Data Container), a module within the latest version of GenICam that can describe more complex data formats such as 3D data. GenDC defines how image data is represented, transmitted or received, independent of its format.

CoaXPress already supports GenDC in its latest version, meaning the standard now has full 3D support. GigE Vision version 2.2 will include it too when it is released at the end of the year.

The current version of GigE Vision, 2.1, introduced a multi-part payload when it was released in 2018. This lets the user put different data types into a single logical frame, enabling 3D data to be transported in one container.

For a typical 2D image, explained James Falconer, product manager at Pleora Technologies and vice chair of the GigE Vision technical group, the GigE Vision transport layer first sends a leader that describes the incoming image, then the image data, and finally a trailer that signifies the end of that frame. Multi-part means different data types can be put in one container, and that was how 3D was introduced into GigE Vision.
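The leader, payload and trailer sequence can be illustrated with a minimal Python sketch of a sender and a receiver that reassembles one frame, including a multi-part frame carrying an intensity part and a range part. The `Packet` class, its fields and the chunk size are hypothetical simplifications chosen for illustration; they are not the GVSP wire format defined by the GigE Vision specification.

```python
from dataclasses import dataclass, field


# Hypothetical, simplified packet model (NOT the real GVSP packet layout).
@dataclass
class Packet:
    kind: str                                 # "leader", "payload" or "trailer"
    block_id: int                             # which frame the packet belongs to
    part: int = 0                             # part index within a multi-part frame
    data: bytes = b""
    info: dict = field(default_factory=dict)  # leader metadata describing the frame


def send_frame(block_id, parts, chunk_size=4):
    """Yield a leader, the payload packets of every part, then a trailer."""
    yield Packet("leader", block_id, info={"parts": list(parts)})
    for index, payload in enumerate(parts.values()):
        for offset in range(0, len(payload), chunk_size):
            yield Packet("payload", block_id, index, payload[offset:offset + chunk_size])
    yield Packet("trailer", block_id)


def receive_frame(packets):
    """Reassemble one frame; only the trailer marks it as complete."""
    names, buffers = None, None
    for packet in packets:
        if packet.kind == "leader":
            names = packet.info["parts"]
            buffers = [bytearray() for _ in names]
        elif packet.kind == "payload":
            buffers[packet.part].extend(packet.data)
        elif packet.kind == "trailer":
            return dict(zip(names, (bytes(b) for b in buffers)))
    raise ValueError("frame ended without a trailer")


frame = receive_frame(send_frame(1, {"intensity": b"\x10\x20\x30", "range": bytes(range(1, 7))}))
print(sorted(frame))  # ['intensity', 'range']
```

A plain 2D image is simply the single-part case; in both cases the leader tells the receiver what to expect and the trailer tells it the frame is finished.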

The GigE Vision standard was initially released in 2006, and, as part of that effort, the GenICam technical group formed alongside the GigE Vision group to help with interoperability.

‘Typically, 3D technologies up until recently have had a proprietary transport mechanism, even if they are based on Ethernet,’ Falconer said. ‘More recently we’ve seen a large push, especially in Asia, to move that to a standards-based technology with GigE Vision as the underlying transport layer.’

Originally there was no defined way to send 3D data, Falconer explained. ‘Before the introduction of GigE Vision 2.1 there were cameras that would use GigE Vision to transmit 3D data, but they used to take an RGB pixel data and use that as a container to stuff the XYZ components (as 3D data) in R, G and B parts respectively, and then they’d reconstruct the 3D data on the host side. That made it still a closed system, a proprietary system,’ he said.
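The workaround Falconer describes can be sketched in a few lines of Python. The 16-bit-per-channel layout, the scale and the offset below are assumptions standing in for whatever a given vendor chose; the point is that the host can only recover X, Y and Z if it knows those private constants, which is what kept such systems closed. A generic GigE Vision viewer would simply display the same bytes as a colour image.

```python
import struct

# Hypothetical vendor-private constants: nothing in the RGB pixel format conveys them.
SCALE_MM = 0.1        # millimetres per count
OFFSET_MM = -3000.0   # coordinate represented by a count of 0


def encode_xyz_as_rgb16(x, y, z):
    """Pack one XYZ point (mm) into an RGB pixel with 16 bits per channel."""
    counts = [round((v - OFFSET_MM) / SCALE_MM) for v in (x, y, z)]
    if not all(0 <= c <= 0xFFFF for c in counts):
        raise ValueError("coordinate outside the encodable range")
    return struct.pack("<3H", *counts)  # R carries X, G carries Y, B carries Z


def decode_rgb16_as_xyz(pixel):
    """Host-side reconstruction; only works if the host knows SCALE_MM and OFFSET_MM."""
    r, g, b = struct.unpack("<3H", pixel)
    return tuple(OFFSET_MM + c * SCALE_MM for c in (r, g, b))


pixel = encode_xyz_as_rgb16(12.3, -45.6, 789.0)
print(decode_rgb16_as_xyz(pixel))  # approximately (12.3, -45.6, 789.0)
```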

With the multi-part payload in GigE Vision 2.1, and with GenDC coming as an alternative means of 3D transport in version 2.2, there are now more possibilities for 3D and other types of data to be transported on various standards. GenDC also extends beyond 3D, addressing other use cases such as new compression types.

‘The benefit for the user goes back to interoperability,’ Falconer said. ‘If the user wants to use image processing software from one vendor, and that vendor is standards-compliant and supports GenDC or multi-part, then they can use a GigE Vision 2.1- or 2.2-compliant camera from another vendor and still use the software.’

There’s now a desire to move to standards-based Ethernet transport, Falconer said, but many 3D camera technologies still rely on proprietary Ethernet-based transport. Pleora has released eBus Edge as part of its eBus SDK: a set of libraries that allows the user to convert a proprietary 3D camera to a standards-based transport mechanism.

With eBus Edge, the user can write an application that sends data using the multi-part payload, giving a device 3D transport capability. ‘Our libraries also allow you to customise the GenICam layer to add your own 3D-specific features and other features required to control your device or sensors,’ Falconer said.

Pleora’s eBus SDK includes a sample application that shows the user how to handle a stereoscopic 3D use case, for example, at both the GenICam and transport layers.

In embedded vision, for example, a user might want to connect one or multiple Mipi sensors to an edge device. ‘EBus Edge supports multiple sources, so you can, for example, connect two Mipi sensors to a compute device and then, on the Ethernet transport side, you have two different logical streams of 2D GigE Vision data on the same Ethernet link,’ Falconer explained. ‘Alternatively, you can connect two Mipi sensors, gather the data in memory, do some processing to compute your 3D point cloud, and with our libraries take that data and transmit it using the multi-part payload giving you a 3D GigE Vision-compliant data stream to connect to software from any GigE Vision-compliant software vendor.’
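For a rectified stereo pair, the ‘compute your 3D point cloud’ step in the second scenario can be as simple as triangulating each pixel's disparity with the pinhole model, where depth is Z = fB/d. The sketch below assumes rectified images, a disparity map already produced by some matcher, a focal length f in pixels, a baseline B in metres and a principal point (cx, cy); these parameters are illustrative, and the eBus Edge API itself is not shown. Points like these are the kind of data that would then be carried as a part of a multi-part payload.

```python
def disparity_to_points(disparity, focal_px, baseline_m, cx, cy):
    """Triangulate a rectified stereo disparity map into (X, Y, Z) points in metres."""
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= 0:  # zero or negative disparity: no valid match for this pixel
                continue
            z = focal_px * baseline_m / d   # depth from similar triangles
            x = (u - cx) * z / focal_px     # back-project the pixel column
            y = (v - cy) * z / focal_px     # back-project the pixel row
            points.append((x, y, z))
    return points


# Tiny 2 x 2 disparity map; the top-left pixel has no match.
cloud = disparity_to_points([[0, 10], [20, 5]], focal_px=1000.0, baseline_m=0.1, cx=0.0, cy=0.0)
print(len(cloud))  # 3
```

Larger disparities map to nearer points, which is why depth resolution falls off with distance for a fixed baseline.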

Using multi-part payloads, eBus Edge transmits uncompressed 3D data over Ethernet with low, predictable latency, directly to existing ports on a computer for analysis.

Alongside GenDC streaming, GigE Vision 2.2 will add multiple event data functionality. Before version 2.2, each asynchronous event message from the device to the host PC was sent separately; version 2.2 allows the user to concatenate multiple event messages into a single message.
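The difference between sending events one at a time and concatenating them can be shown with a toy encoding. The record layout (a 16-bit event ID followed by a 64-bit timestamp) and the leading count field are hypothetical and do not reproduce the GigE Vision 2.2 message format; the sketch only shows that batching turns N messages, each with its own network overhead, into one.

```python
import struct

# Hypothetical event record: 16-bit event ID followed by a 64-bit timestamp.
EVENT = struct.Struct("<HQ")


def send_separately(events):
    """Pre-2.2 style: one message per event."""
    return [EVENT.pack(event_id, timestamp) for event_id, timestamp in events]


def send_concatenated(events):
    """Batched style: a (hypothetical) 16-bit count followed by every record."""
    header = struct.pack("<H", len(events))
    return [header + b"".join(EVENT.pack(e, t) for e, t in events)]


def parse_concatenated(message):
    """Split a batched message back into (event_id, timestamp) tuples."""
    (count,) = struct.unpack_from("<H", message, 0)
    return [EVENT.unpack_from(message, 2 + i * EVENT.size) for i in range(count)]


events = [(0x9000, 1_000), (0x9001, 1_250), (0x9002, 1_400)]
print(len(send_separately(events)), "messages vs", len(send_concatenated(events)))
```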

Going beyond version 2.2, Falconer said that the GigE Vision technical committee’s next big task is to look at speeds greater than 10Gb/s.

GigE Vision ensures reliable data delivery through its own protocol-level mechanism, called packet resend. The transmitter must keep sent data in memory while the receiver processes packets, so that the receiver can request retransmission of any packets it missed. The amount of data that must be stored for packet resends therefore increases linearly with link speed.
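Because the transmitter must hold everything it sent during the window in which a resend request might still arrive, the memory needed is simply the link rate multiplied by that retention window. The 10ms window below is an arbitrary assumption chosen for illustration, not a value from the standard; with it, a 10Gb/s link needs 12.5MB of resend memory and a 100Gb/s link needs 125MB.

```python
def resend_buffer_bytes(link_gbps, retention_ms):
    """Bytes a transmitter must hold to serve resend requests for `retention_ms`."""
    bits_sent = link_gbps * 1e9 * retention_ms / 1e3
    return bits_sent / 8


RETENTION_MS = 10  # assumed retention window, for illustration only
for rate in (1, 10, 25, 50, 100):
    print(f"{rate:>3}Gb/s -> {resend_buffer_bytes(rate, RETENTION_MS) / 1e6:.2f}MB")
```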

Ethernet equipment can also ask a transmitter to stop streaming temporarily, so the transmitter must be able to buffer data to cope with pauses on the Ethernet link. During an update given at the Vision trade fair in October, Falconer said the GigE Vision committee is looking to improve the standard to reduce the memory requirement for devices, with the aim of making data transfer at speeds above 10Gb/s more robust and stable.
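Standard Ethernet flow control uses PAUSE frames, whose pause time is a 16-bit count of quanta, each quantum lasting 512 bit times (IEEE 802.3). The sketch below, with illustrative function names, estimates how much data a device accumulates during one pause if its sensor keeps producing at a given rate. At 10Gb/s a maximum-length pause lasts about 3.4ms, during which a source streaming at the full 10Gb/s builds up roughly 4.2MB.

```python
PAUSE_QUANTUM_BITS = 512   # one pause quantum = 512 bit times (IEEE 802.3 MAC Control PAUSE)
MAX_PAUSE_QUANTA = 0xFFFF  # the pause_time field is 16 bits wide


def pause_duration_s(quanta, link_gbps):
    """How long a PAUSE frame carrying `quanta` stops the transmitter."""
    return quanta * PAUSE_QUANTUM_BITS / (link_gbps * 1e9)


def pause_backlog_bytes(quanta, link_gbps, source_gbps):
    """Data the device must buffer if its sensor keeps producing during the pause."""
    return source_gbps * 1e9 * pause_duration_s(quanta, link_gbps) / 8


print(f"{pause_duration_s(MAX_PAUSE_QUANTA, 10) * 1e3:.2f}ms")
print(f"{pause_backlog_bytes(MAX_PAUSE_QUANTA, 10, 10) / 1e6:.2f}MB")
```

Repeated PAUSE frames can extend the stall, so a real device may need more headroom than a single pause suggests.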

Euresys featured product

Euresys/Sensor to Image IP Cores speed up the implementation of the GigE Vision protocol in any embedded project.

From the Top Level Design (which interfaces the imager, sensors and GigE PHY with the FPGA's internal data processing) to Working Reference Designs, as well as hardware and software toolkits, Sensor to Image IP Cores deliver top-notch performance in a small footprint while leaving the developer enough flexibility to customise their design.

The GigE Vision IP Cores are compatible with Xilinx 7 Series devices (and higher) and Intel/Altera Cyclone V devices (and higher). The toolkits exist in various configurations and come with sample applications and examples.

Sensor to Image’s offering also includes USB3 Vision, CoaXPress and CoaXPress-over-Fiber interface IP Cores as well as Image Sensor IP Cores for MIPI CSI-2 and Sony’s IMX Pregius sensors.

www.euresys.com/en/Products/IP-Cores/Vision-Standard-IP-Cores-for-FPGA/GigE-Vision-IP-Core-(1)

--

Other commercial products

New GigE Vision camera models on the market include updated versions of Teledyne Flir’s Blackfly S line and Lucid Vision Labs’ Atlas line, which has just entered production.

The additions to the Blackfly S GigE camera line, the BFS-PGE-50S4M-C and BFS-PGE-50S4C-C, are 5-megapixel models suitable for integration into handheld devices. The cameras use Sony’s IMX547 global shutter sensor; offer lossless compression, which raises the full-resolution frame rate from 24fps to 30fps; have 68 per cent quantum efficiency at 525nm; and have 2.49e- read noise.
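Raising the frame rate from 24fps to 30fps over the same link means the lossless compression must shrink frames by a factor of 30/24 = 1.25 on average. The sketch below does that arithmetic assuming a nominal 5,000,000 pixels at 8 bits per pixel (both assumptions; the exact resolution and pixel format may differ). At 24fps the uncompressed stream is then 120MB/s, close to the 125MB/s raw capacity of Gigabit Ethernet. Because the compression is lossless, the ratio actually achieved depends on image content.

```python
NOMINAL_PIXELS = 5_000_000  # assumed: nominal 5 megapixels
BYTES_PER_PIXEL = 1         # assumed: 8-bit pixels


def raw_rate_mb_s(fps):
    """Uncompressed stream rate in MB/s (1MB = 10**6 bytes)."""
    return fps * NOMINAL_PIXELS * BYTES_PER_PIXEL / 1e6


uncompressed_limit = raw_rate_mb_s(24)        # what the link carries without compression
target = raw_rate_mb_s(30)                    # what 30fps would need uncompressed
required_ratio = target / uncompressed_limit  # average lossless compression needed
print(f"{uncompressed_limit:.0f}MB/s -> {target:.0f}MB/s needs {required_ratio:.2f}:1")
```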

Teledyne Flir's Blackfly S camera line

The IP67-rated Atlas camera line from Lucid Vision Labs has a 5GigE PoE interface and uses Sony Pregius global shutter and Sony rolling shutter CMOS sensors. The first models available include the 7.1-megapixel Sony IMX420 sensor running at 74.6fps, and the 20-megapixel IMX183 sensor running at 17.8fps.

The cameras feature active sensor alignment for excellent optical performance; M12 Ethernet and M8 general purpose I/O connectors that are resistant to shock and vibration; and industrial EMC immunity. They operate from -20°C to 55°C and measure 60 x 60mm. The 5GBase-T Atlas is a GigE Vision- and GenICam-compliant camera capable of transferring data at 600MB/s over its 5Gb/s link, using CAT5e or CAT6 cables up to 100 metres long.
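A quick link-budget check shows how the quoted frame rates relate to the 600MB/s figure (5Gb/s corresponds to 625MB/s before protocol overhead). The sketch assumes 8 bits per pixel and uses the nominal megapixel counts above; under that assumption both first models fit within the link, whereas 12-bit output from the 7.1-megapixel sensor at its full 74.6fps would need roughly 794MB/s and would not.

```python
LINK_MB_S = 600  # usable data rate quoted for the 5GBase-T Atlas


def sensor_rate_mb_s(megapixels, fps, bits_per_pixel=8):
    """Uncompressed sensor output in MB/s (1MB = 10**6 bytes); 8-bit pixels assumed by default."""
    return megapixels * fps * bits_per_pixel / 8


first_models = {"IMX420": (7.1, 74.6), "IMX183": (20.0, 17.8)}
for sensor, (mp, fps) in first_models.items():
    rate = sensor_rate_mb_s(mp, fps)
    print(f"{sensor}: {rate:.0f}MB/s at 8 bits, fits in {LINK_MB_S}MB/s: {rate <= LINK_MB_S}")
```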

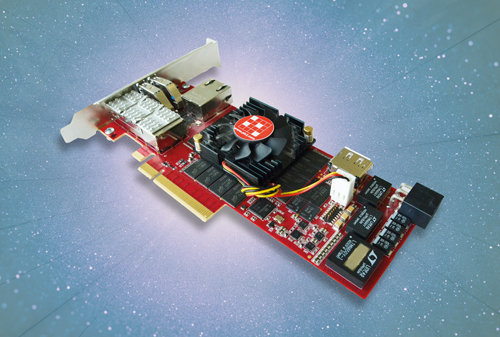

In addition, Kithara has released a real-time driver for PLC2 Design's PGC-1000 frame grabber card. The PCIe card acquires and converts GigE Vision data, while Kithara’s RealTime software suite provides the real-time functionality.

The PGC-1000 frame grabber

The PCIe plug-in card handles the entire conversion of captured GigE Vision data, relieving the CPU during image acquisition. As a result, multiple camera streams (4 x 10Gb/s or 1 x 40Gb/s) can be operated at minimal CPU load, and the captured image data can be stored on SSDs within the same real-time context. Real-time synchronisation of multiple cameras is also achievable with the Kithara PTP feature.