Dr Christos Theoharatos, chief scientific officer at Irida Labs, describes an imaging system for monitoring the melt pool in additive manufacturing, developed as part of a Horizon 2020 project

Additive Manufacturing (AM) brings significant innovation in the field of manufacturing parts with complex geometries. However, current AM technologies often produce 3D parts that need further machining to improve the final surface finish. To improve the quality of printed parts, knowledge is needed about how the main process parameters – such as laser power, laser head velocity, feed rate, and powder mass stream – influence melt pool behaviour.

Real-time monitoring of the melt pool is an essential part of AM. It helps to understand and control the thermal behaviour of the process, and to detect unexpected faults and adjust process parameters within the machine, all in situ as the part is being built.

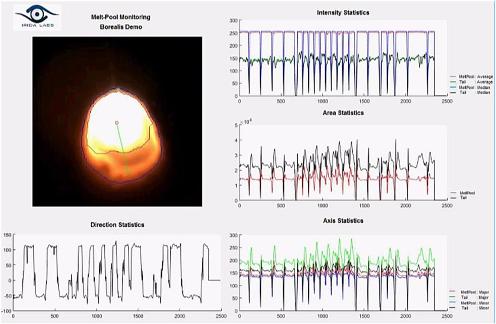

Melt-pool monitoring and analysis; extraction of geometric and intensity statistical features in real-time

The Symbionica H2020 project is a three-year, EU-funded project, ending in September 2018, which aims to develop a reconfigurable machine to make personalised bionics and prosthetics using additive and subtractive manufacturing. The Symbionica system uses a vision-based solution for closing the loop in terms of process control in AM.

The solution is based on monitoring the AM process in real time with a comprehensive vision system, which interacts with the machine process algorithms to detect and correct deposition errors. The system should improve the accuracy of printed parts and their material properties, leading towards zero-defect AM.

The interoperable vision system monitors the size, shape and intensity of the melt pool, and makes process parameter corrections, all through a numerical controller. The software implements complex image processing algorithms directly on the hardware subsystem’s FPGA for closed-loop AM melt pool process control.

The hardware subsystem comprises a high-speed camera operating at 540fps with three-megapixel resolution. It is equipped with an optical system positioned inside the machine tool head to capture images along the same axis as the laser beam.

The camera is connected through a CoaXPress interface to a frame grabber, which includes a Xilinx FPGA to run the melt pool monitoring pipeline in real time. The frame grabber focuses on high-speed image acquisition with up to four CoaXPress connectors.
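To give a sense of why the pipeline runs on the frame grabber's FPGA, the short calculation below works out the time budget per frame and the raw pixel throughput implied by the camera figures quoted above. The bit depth is an assumption, since the article does not state the pixel format, and "three-megapixel" is taken as exactly three million pixels.

```python
# Figures quoted in the article
FRAME_RATE_FPS = 540
PIXELS_PER_FRAME = 3_000_000  # "three-megapixel", taken here as 3.0e6 pixels

# Assumption (not stated in the article): 8-bit monochrome pixels
BITS_PER_PIXEL = 8


def frame_period_ms(fps):
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def raw_data_rate_gbit_s(fps, pixels, bits_per_pixel):
    """Uncompressed pixel throughput in gigabits per second."""
    return fps * pixels * bits_per_pixel / 1e9


period = frame_period_ms(FRAME_RATE_FPS)
rate = raw_data_rate_gbit_s(FRAME_RATE_FPS, PIXELS_PER_FRAME, BITS_PER_PIXEL)
print(f"{period:.2f} ms per frame, {rate:.2f} Gbit/s raw")
```

Under these assumptions each frame has to be handled in under two milliseconds, which is the kind of budget that favours processing the stream in hardware rather than on the host.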

The design process consisted of three main phases. In the first phase, the algorithm was developed and simulated using artificial data; this phase ended with the development of bit-accurate models for each hardware component in the data path. The second phase covered the implementation of the hardware components and the simulation of the whole design. Once these two phases were complete, the FPGA design tools for synthesis and place-and-route were used to produce the final bitstream file to program the FPGA. In the third phase, a C++ software application was developed to manipulate the results from FPGA processing, upload them to the host DDR memory, package them, and send them to the numerical controller.

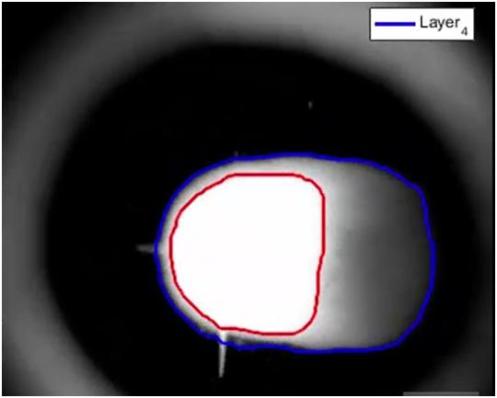

Robust segmentation of the melt-pool distribution in different layers of the deposition process

The main objective of the algorithmic pipeline for analysing the melt pool is to segment each frame into two overlapping regions, R1 and R2, where R1 is the melt pool area and R2 the entire distribution, that is, the melt pool along with the tail, which defines the direction of the laser head deposition process. This is accomplished by a robust adaptive multi-thresholding operation, which can still estimate the segmented regions if the internal physical parameters of the laser deposition process change over time, followed by a set of morphological filtering operations that smooth both regions and filter out sparks and reflections. A variety of geometrical and intensity statistics can then be extracted from the resulting melt pool regions at the back end of the image pipeline to quantify their properties. These are fed to a numerical controller, where they are translated into physical parameters of the AM process.
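The segmentation idea can be illustrated with a minimal software sketch. It is not the project's FPGA implementation: the article does not give the thresholding rule, so the sketch assumes two thresholds set at fixed fractions of each frame's own intensity range (the hypothetical `tail_frac` and `pool_frac` parameters), and uses a 3x3 morphological opening as a stand-in for the filtering stage.

```python
def adaptive_thresholds(frame, tail_frac=0.3, pool_frac=0.7):
    """Derive both thresholds from the frame's own intensity range.

    Assumption: fixed fractions of (max - min); the real rule is not
    described in the article.
    """
    pixels = [p for row in frame for p in row]
    lo, hi = min(pixels), max(pixels)
    span = hi - lo
    return lo + tail_frac * span, lo + pool_frac * span


def threshold(frame, t):
    """Binary mask: 1 where the pixel is at or above the threshold."""
    return [[1 if p >= t else 0 for p in row] for row in frame]


def _morph(mask, op):
    """Apply min (erosion) or max (dilation) over each 3x3 neighbourhood."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [mask[j][i]
                      for j in range(y - 1, y + 2)
                      for i in range(x - 1, x + 2)
                      if 0 <= j < h and 0 <= i < w]
            out[y][x] = op(window)
    return out


def opening(mask):
    """Erosion followed by dilation: removes specks smaller than 3x3."""
    return _morph(_morph(mask, min), max)


def segment(frame):
    """Return (R1, R2): melt pool mask and melt pool plus tail mask."""
    t_tail, t_pool = adaptive_thresholds(frame)
    r1 = opening(threshold(frame, t_pool))
    r2 = opening(threshold(frame, t_tail))
    return r1, r2


# Synthetic 7x10 frame: dim background, a tail, a bright pool, one spark.
frame = [[10] * 10 for _ in range(7)]
for y in range(2, 5):
    for x in range(1, 5):
        frame[y][x] = 100   # tail
    for x in range(5, 8):
        frame[y][x] = 200   # melt pool
frame[0][9] = 255           # isolated spark

r1, r2 = segment(frame)
print(sum(map(sum, r1)), sum(map(sum, r2)))
```

Because the thresholds are recomputed for every frame, the masks follow changes in overall brightness, while the opening discards the single-pixel spark from both regions. R1 is contained in R2 by construction, since the pool threshold is the higher of the two.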

The shape and intensity features extracted for both regions R1 and R2 are: the major and minor axis lengths, the area, the centre of gravity or mass, and the average intensity. Beyond these statistical parameters, other useful features can be extracted from the two distributions, such as the perimeter, the aspect ratio, the eccentricity, which quantifies the circularity of the melt pool region, the distance from the centroid of each region to the back end and front end of the distribution, or other intensity features such as the maximum, minimum and median intensities. Finally, a temporal moving average is applied to the statistical values in order to smooth out temporal variation, such as that caused by movement of the melt pool image or even a large spark that is not entirely filtered out by the morphological operator.
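As an illustration of the feature extraction step, the sketch below computes the area, centroid, axis lengths and average intensity of a binary region, then smooths one of the values over time. It rests on assumptions the article does not confirm: the axis lengths are taken as those of the ellipse with the same second central moments as the region, and the window length of the moving average is arbitrary. The names `region_features` and `MovingAverage` are hypothetical.

```python
from collections import deque
from math import sqrt


def region_features(mask, frame):
    """Geometric and intensity statistics for the pixels set in mask."""
    pts = [(x, y) for y, row in enumerate(mask)
           for x, v in enumerate(row) if v]
    area = len(pts)
    if area == 0:
        return None
    cx = sum(x for x, _ in pts) / area
    cy = sum(y for _, y in pts) / area
    # Second central moments (covariance of the pixel coordinates)
    mxx = sum((x - cx) ** 2 for x, _ in pts) / area
    myy = sum((y - cy) ** 2 for _, y in pts) / area
    mxy = sum((x - cx) * (y - cy) for x, y in pts) / area
    # Eigenvalues of the 2x2 covariance matrix
    mean = (mxx + myy) / 2
    diff = sqrt(((mxx - myy) / 2) ** 2 + mxy ** 2)
    # Full axis lengths of the ellipse with the same second moments
    major = 4 * sqrt(mean + diff)
    minor = 4 * sqrt(max(mean - diff, 0.0))
    intensity = sum(frame[y][x] for x, y in pts) / area
    return {"area": area, "centroid": (cx, cy),
            "major_axis": major, "minor_axis": minor,
            "mean_intensity": intensity}


class MovingAverage:
    """Mean of the last `window` values, updated once per frame."""

    def __init__(self, window):
        self.values = deque(maxlen=window)

    def update(self, value):
        self.values.append(value)
        return sum(self.values) / len(self.values)


# Synthetic example: a 3x5 rectangular region of uniform intensity 200.
frame = [[10] * 7 for _ in range(5)]
mask = [[0] * 7 for _ in range(5)]
for y in range(1, 4):
    for x in range(1, 6):
        frame[y][x] = 200
        mask[y][x] = 1

features = region_features(mask, frame)
print(features)

# A one-frame jump in area (for example an unfiltered spark) is damped.
smoother = MovingAverage(window=3)
smoothed = [smoother.update(a) for a in (15, 15, 45, 15)]
print(smoothed)
```

A longer window gives a steadier signal to the controller at the cost of a slower response to genuine changes in the melt pool, so in practice the length would be a trade-off tuned against the frame rate.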

The project team is currently investigating the association and correlation between the melt pool behaviour and process parameters, as well as process monitoring to gather temporal and spatial information in real time. In addition, engineers at Irida Labs are working towards integrating artificial intelligence, and more specifically machine learning and deep learning techniques, to monitor parameters related to quality and features, and to detect defects and abnormalities in real time during the laser deposition process.

Irida Labs, based in Patras, Greece, is a computer vision company using embedded platforms. It is one of 12 project partners working on the Symbionica project (www.symbionicaproject.eu) to build a reconfigurable additive and subtractive manufacturing machine to make personalised bionics and prosthetics.