Researchers at the University of Zurich (UZH) and the Swiss research consortium NCCR Robotics are using eye-inspired cameras to guide drones in low-light conditions.

The work could lead to drones being able to perform fast, agile manoeuvres in challenging environments and applications, such as search and rescue missions in urban areas at dusk or dawn.

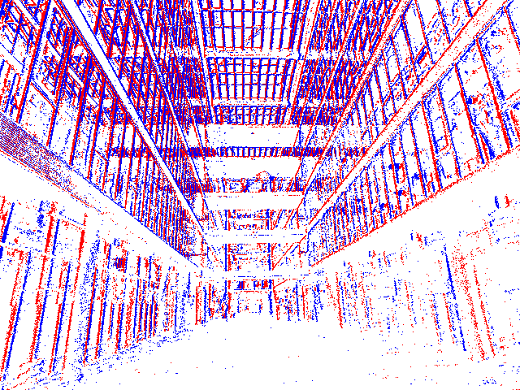

The researchers' eye-inspired camera enables drones to perform delicate manoeuvres in low-light environments. (Credit: UZH)

The vision system used is a prototype event camera, a type of camera invented at UZH that uses a bio-inspired retina to capture clear pictures without needing full illumination across the entire sensor. Unlike their standard counterparts, event cameras report only changes in brightness at each pixel and do not record colour, enabling them to capture sharp images even during fast motion or in low-light environments.

By measuring only changes in pixel brightness, event cameras are able to produce sharp images even when moving at high speeds. (Credit: UZH)
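The idea of reporting only per-pixel brightness changes can be illustrated with a minimal sketch. It is not the UZH sensor or software: a real event camera fires each pixel asynchronously in hardware, whereas this toy version compares two brightness snapshots. The function name `brightness_events`, the log-brightness comparison, and the `threshold` value are all assumptions made for illustration.

```python
import math

def brightness_events(prev, curr, threshold=0.2):
    """Return (row, col, polarity) for pixels whose brightness changed enough.

    Hypothetical helper: `prev` and `curr` are 2D lists of brightness values
    in [0, 1]; `threshold` is an assumed sensitivity, not a real sensor setting.
    """
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            # Compare in log space so the same relative change is detected
            # in dim and bright regions (small offset avoids log(0)).
            delta = math.log(q + 1e-3) - math.log(p + 1e-3)
            if abs(delta) >= threshold:
                # Polarity +1 means the pixel got brighter, -1 darker.
                events.append((r, c, 1 if delta > 0 else -1))
    return events

# Two small brightness snapshots: one pixel brightens, one darkens.
prev = [[0.10, 0.10, 0.10],
        [0.10, 0.10, 0.10]]
curr = [[0.10, 0.30, 0.10],
        [0.10, 0.10, 0.05]]

events = brightness_events(prev, curr)
print(events)
```

Only the two pixels that changed produce output; the four unchanged pixels generate no data at all, which is why such a sensor has far less to process than a camera that reads out every pixel in every frame.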

Conventional drone cameras, in contrast, only work when plenty of light is available and when the drones are travelling at limited speeds; otherwise they produce motion-blurred images that computer vision algorithms cannot interpret. Alternative sensor systems such as laser scanners can solve this problem, although they are often expensive and bulky.

The UZH researchers also designed new software that processes the event camera's output efficiently, allowing drones to fly autonomously at higher speeds and in lower light than is currently possible with commercial vision systems.

Drones equipped with an event camera and the researchers' software could assist in search and rescue scenarios in low-light conditions where normal cameras would be ineffective. They would also be able to fly faster in disaster areas, where time is critical in saving survivors.

‘This research is the first of its kind in the fields of artificial intelligence and robotics, and will soon enable drones to fly autonomously and faster than ever, including in low-light environments,’ said Professor Davide Scaramuzza, director of the Robotics and Perception Group at UZH.

Scaramuzza and his team have already taught drones to use their onboard event cameras to infer their position and orientation in space, allowing them to fly safely. This is particularly significant because drones usually rely on GPS for this, and GPS is often unreliable and only works outdoors.

According to Henri Rebecq, a PhD student working with Scaramuzza on the technology, a lot of work remains before these drones can be deployed in the real world, as the event camera is still an early prototype. The software has also yet to be proven reliable outdoors.

‘We think this is achievable, however,’ Scaramuzza added. ‘Our recent work has already demonstrated that combining a standard camera with an event-based camera improves the accuracy and reliability of the system.’