Five candidates have been shortlisted for this year’s Vision Award, Imaging and Machine Vision Europe is pleased to announce.

(Watch our exclusive webinar featuring all five shortlisted technologies)

Submissions from Brighter AI Technologies, Edge Impulse, Kitov.ai, Saccade Vision and SWIR Vision Systems were selected by the judges from 61 entries. The winner will be announced at the Vision trade fair in Stuttgart at the beginning of October.

The entries include two clever uses of machine learning; software that automates inspection planning using CAD models; a novel 3D camera containing a MEMS scanner; and disruptive shortwave infrared camera technology.

The Vision Award, sponsored by Imaging and Machine Vision Europe, recognises innovation in the field of machine vision, and has been awarded at each Vision show since 1996.

Prophesee won last year’s award for its neuromorphic approach to imaging, which Martin Wäny of the judging panel called a 'new paradigm' in imaging technology.

Along with Wäny of TechnologiesMW, the judges who selected this year’s shortlist are: Jens Michael Carstensen, Videometer; Michael Engel, Vision Components; Gabriele Jansen, Vision Ventures; Dr-Ing Ronald Mueller, Vision Markets; and Christian Ripperda, Interroll Innovation.

The five shortlisted award entries are:

Deep Natural Anonymisation

By Patrick Kern, Brighter AI Technologies

Brighter AI Technologies’ Deep Natural Anonymisation Technology (DNAT) protects personally identifiable information (PII) in image and video data. It automatically detects and anonymises personal information such as faces and vehicle number plates, generating a synthetic replacement that reflects the original attributes. The solution therefore protects identities while retaining the information needed for analytics or machine learning.

Conventional video redaction techniques, such as blurring, hide PII but destroy information and context in the image. DNAT, by contrast, replaces the original PII with a synthetic substitute that looks natural and preserves the content information of the image.

Each generated replacement is constrained to match the attributes of the source object as precisely as possible. However, this constraint is applied selectively, so the user can control which attributes to keep and which to obscure. For faces, for example, it could be important to keep attributes such as gender and age intact for further analytics. DNAT thus avoids the usual trade-off between anonymising data and preserving its usefulness.

What the judges say

'This is a topic [keeping personal information anonymous] that is very relevant within society. Anonymisation with a synthetic replacement is a potential enabler for future applications where personal information is present.'

Ground-breaking algorithm that brings real-time object detection, tracking and counting to microcontrollers

By Mihajlo Raljic, Edge Impulse

A new machine learning technique, developed by researchers at Edge Impulse, has been designed to run real-time object detection on devices with very small computation and memory capacity. Edge Impulse provides a platform for creating machine learning models for edge computing.

Called Faster Objects, More Objects (FOMO), the deep learning architecture can unlock new computer vision applications. Most object-detection deep learning models have memory and computation requirements beyond the capacity of small processors. FOMO, by contrast, requires only several hundred kilobytes of memory, which makes it well suited to TinyML, a subfield of machine learning focused on running models on microcontrollers and other memory-constrained devices that have limited or no internet connectivity.

The idea behind FOMO is that not all object-detection applications require the high-precision output afforded by state-of-the-art deep learning models. By finding the right trade-off between accuracy, speed and memory, the user can shrink deep learning models to small sizes while keeping them useful.

Instead of predicting bounding boxes, FOMO predicts each object's centre, because many object-detection applications only need to know where objects are in the frame, not how large they are. Detecting centroids is much more compute-efficient than predicting bounding boxes and requires less data.
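The centroid idea can be illustrated with a short Python sketch. It assumes the network outputs a coarse grid of per-cell object probabilities, each cell covering an 8 x 8-pixel patch of the input; the function name, threshold and cell size are hypothetical, and this is not Edge Impulse's implementation. Neighbouring cells above the threshold are merged, and each group is reported as one probability-weighted centre point rather than a bounding box.

```python
from collections import deque


def heatmap_to_centroids(heatmap, threshold=0.5, cell_size=8):
    """Turn a coarse per-cell probability grid into object centre points.

    heatmap: list of rows, each a list of floats in [0, 1].
    Returns a list of (x, y) pixel coordinates, one per detected object.
    """
    rows, cols = len(heatmap), len(heatmap[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or heatmap[r][c] < threshold:
                continue
            # Flood-fill the 4-connected group of "hot" cells starting here.
            queue = deque([(r, c)])
            seen[r][c] = True
            total = sx = sy = 0.0
            while queue:
                y, x = queue.popleft()
                w = heatmap[y][x]
                total += w
                # Weight each cell's centre (in pixels) by its probability.
                sx += w * (x + 0.5) * cell_size
                sy += w * (y + 0.5) * cell_size
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and heatmap[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            centroids.append((sx / total, sy / total))
    return centroids
```

For a 4 x 4 grid with two vertically adjacent hot cells in column 1 and a single hot cell at row 2, column 3, the function returns approximately [(12.0, 8.0), (28.0, 20.0)]: two objects, each reduced to a point, with no width or height to regress.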

FOMO can be applied to MobileNetV2, a popular deep learning model for image classification on edge devices. According to Edge Impulse, FOMO is 30 times faster than MobileNet SSD, and it can run on devices that have less than 200 kilobytes of RAM. On a Raspberry Pi 4, FOMO can detect objects at 60fps as opposed to the 2fps performance of MobileNet SSD.

Edge Impulse says that where an application might previously have needed an Nvidia Jetson Nano, it can now run on an MPU at significant cost savings. In addition, because the algorithm is implemented in C++, it is hardware-agnostic, which makes it a natural hedge against hardware volatility.

What the judges say

'The jury sees ground-breaking work between the algorithm and the [computer] architecture, with the potential to enable more embedded vision on devices with very small computation and memory capacity. Cost savings of up to 95 per cent show the potential [for the innovation].'

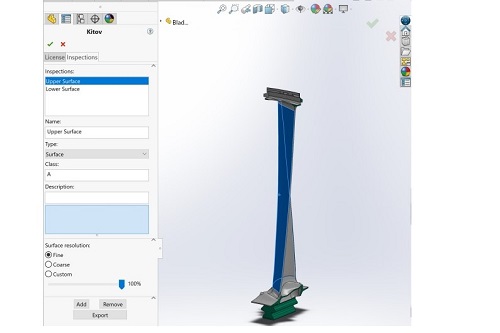

CAD2Scan: Automating visual inspection planning

By Dr Yossi Rubner, Kitov.ai

Kitov.ai's CAD2Scan software automatically takes all available information from a CAD model, including geometric and component specifications and specific inspection requirements, and uses it to plan a robot inspection task. This might include the best 3D camera and illumination directions for each inspection, and the optimal robot path.

An engineer can set inspection requirements directly in CAD software using CAD2Scan. Once all requirements are marked, CAD2Scan automatically extracts the specific geometric and semantic information for each inspection requirement. This information is passed on to the relevant semantic detectors performing the inspection tasks.

Kitov's semantic detectors include a surface detector, label detector, screw detector, existence detector, and so forth. In addition, Kitov's open software platform allows easy integration of third-party detectors.
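A plugin architecture of this kind is commonly built around a registry that maps each requirement type to a detector. The Python sketch below is a hypothetical illustration, not Kitov's API: the `Requirement` and `ImagingTask` types, the `register` decorator and the view settings are all assumptions. Each requirement is dispatched to whichever detector is registered for its type, and a third-party detector joins the planner simply by registering itself.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Requirement:
    kind: str        # requirement type, e.g. "screw" or "label" (hypothetical labels)
    feature_id: str  # identifier of the CAD feature to inspect
    params: dict = field(default_factory=dict)


@dataclass
class ImagingTask:
    feature_id: str
    detector: str
    settings: dict


Detector = Callable[[Requirement], ImagingTask]
_REGISTRY: Dict[str, Detector] = {}


def register(kind: str):
    """Decorator that plugs a detector (built-in or third-party) into the planner."""
    def wrap(fn: Detector) -> Detector:
        _REGISTRY[kind] = fn
        return fn
    return wrap


@register("screw")
def screw_detector(req: Requirement) -> ImagingTask:
    # Hypothetical imaging settings; a real detector would derive them from CAD geometry.
    return ImagingTask(req.feature_id, "screw", {"view": "top-down", **req.params})


@register("label")
def label_detector(req: Requirement) -> ImagingTask:
    return ImagingTask(req.feature_id, "label", {"view": "normal-to-surface", **req.params})


def plan(requirements: List[Requirement]) -> List[ImagingTask]:
    """Dispatch each requirement to the detector registered for its type."""
    tasks = []
    for req in requirements:
        if req.kind not in _REGISTRY:
            raise KeyError(f"no detector registered for {req.kind!r}")
        tasks.append(_REGISTRY[req.kind](req))
    return tasks
```

Keeping detectors behind a single registry means the planner never needs to know which detectors exist, which is one plausible way to make third-party integration straightforward.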

Each inspection requirement is processed by the appropriate semantic detector, which generates the imaging parameters and the requested 3D poses for the camera and light. The inspection plan is then optimised to, for instance, ensure full coverage, reduce the number of images needed, minimise the total inspection time, and find the best camera positions and illumination angles for each feature of interest.

In addition, the planner can capture multiple inspection points in a single image where possible, reducing the overall inspection cycle time.
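Capturing several inspection points per image is an instance of the set-cover problem: choose as few camera views as possible so that every point is seen at least once. The sketch below uses the classic greedy heuristic; Kitov's actual optimiser is not described, so the choice of algorithm, the function name and the input format are assumptions.

```python
def choose_views(points, views):
    """Greedy set cover: pick few camera views that together see every point.

    points: set of inspection-point ids.
    views: dict mapping a candidate view name to the set of points it can image.
    Returns the chosen view names in selection order.
    """
    uncovered = set(points)
    chosen = []
    while uncovered:
        # Pick the view that images the most still-uncovered points.
        best = max(views, key=lambda v: len(views[v] & uncovered))
        gained = views[best] & uncovered
        if not gained:
            raise ValueError(f"points not visible from any view: {sorted(uncovered)}")
        chosen.append(best)
        uncovered -= gained
    return chosen
```

With five points and four candidate views, `choose_views({1, 2, 3, 4, 5}, {"A": {1, 2}, "B": {2, 3, 4}, "C": {4, 5}, "D": {1, 5}})` picks view B first (three new points) and then D, covering everything in two images instead of five. Greedy selection is not guaranteed to be optimal, but it is simple and its result is provably within a logarithmic factor of the minimum.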

Automatic CAD-based inspection planning is ideal for industries that manufacture complex parts and products. For example, CAD2Scan technology improves the inspection of: single-material parts with complex 3D geometric shapes, such as turbine engines, blades, wheels, and metal moulding; CNC parts, where it is difficult and time-consuming to carry out full inspection manually; and custom-made or other low-volume parts, such as medical implants or 3D-printed parts.

CAD2Scan is implemented as a plugin for common CAD software and is available for SolidWorks and Creo. It also supports the evolving Quality Information Framework (QIF) ISO standard and can parse visual inspection requirements embedded in QIF data.

Kitov.ai believes CAD2Scan is the only technology that can automate general-purpose visual inspection test requirements directly from a CAD model.

What the judges say

‘Kitov.ai has developed a product highly relevant to manufacturing markets, specifically in light of the market shift towards local manufacturing and mass customisation.

‘The main USP of the company’s offering is a software platform for automating inspection planning... taking the input from a CAD drawing of the part. There’s a separate module for actual quality inspection of a part.

‘Both modules make use of a combination of rule-based and deep learning-based vision tools. With its software, Kitov is helping solve a major obstacle for the wider proliferation of vision tech in manufacturing: the need for experts to set up and fine-tune a vision system for complex inspection and measurement tasks.’

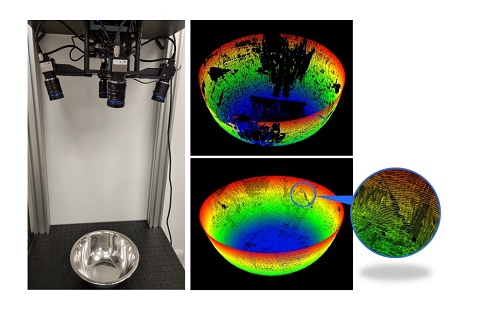

MEMS-based 3D camera for industrial inspection and robotic guidance

By Alex Shulman, Saccade Vision

Saccade-MD is a feature-based static laser line scanner that uses a MEMS scanner for complete scanning flexibility. The technology is similar to that used in solid-state lidar modules for autonomous vehicles, except that the MEMS mirror is fully controlled on both axes.

Employing patent-pending technology, Saccade imitates the saccadic movements of the human eye, whereby the brain captures an image using low-resolution receptors first, identifies objects of interest, and then captures interesting parts with high-resolution receptors.

Not only can the scan direction of Saccade-MD be optimised, so that the part doesn't have to be rotated to obtain a different viewing angle, but the system can also vary the resolution, scanning the entire part at low resolution and then homing in on certain areas at very high resolution. This flexibility means images can be acquired separately for different elements inside the field of view, and selective sub-pixel resolution can be achieved in 3D.
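The coarse-to-fine principle can be illustrated in one dimension. The Python sketch below is a hypothetical simplification, not Saccade Vision's method: it assumes a `height_at` measurement callback, treats a large jump between neighbouring coarse samples as a region of interest, and spends fine samples only inside those regions.

```python
def coarse_to_fine_scan(height_at, length, coarse_step, fine_step, edge_threshold):
    """Scan a 1-D height profile coarsely, then densely only around features.

    height_at: function returning the measured height at position x.
    Returns a sorted list of (x, height) samples.
    """
    # Pass 1: low-resolution sweep over the whole part.
    n = int(length // coarse_step)
    coarse = [(i * coarse_step, height_at(i * coarse_step)) for i in range(n + 1)]
    samples = dict(coarse)
    # Pass 2: where neighbouring coarse samples differ by more than the threshold,
    # assume a feature (e.g. an edge or step) lies between them and rescan that
    # interval at the fine step only.
    for (x0, h0), (x1, h1) in zip(coarse, coarse[1:]):
        if abs(h1 - h0) > edge_threshold:
            steps = int(round((x1 - x0) / fine_step))
            for k in range(1, steps):
                x = x0 + k * fine_step
                samples[x] = height_at(x)
    return sorted(samples.items())
```

For a 100-unit part with a single step at x = 42, a 10-unit coarse pass plus a 1-unit fine pass over the one interval containing the step yields 20 samples, compared with 101 for a uniform fine scan, while still locating the step to the fine resolution.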

The data acquisition can be locally optimised based on a CAD model analysis of the scanned sample.

Saccade Vision is currently focusing on critical dimension measurements and 3D inspection in a few segments of precise manufacturing. The first is integrated inspection and metrology for metal working machines, for tasks such as: initial alignment or precise positioning of the piece inside the manufacturing tool; post-manufacturing off-line inspection; and metrology to provide predictive process and machine analytics.

Saccade Vision has partnered with Euclid Labs, a provider of offline robot programming, to deliver an inspection system integrated with a robotic material handling system, and installed on metal working machines.

The second area Saccade is concentrating on is 3D inspection in automated assembly lines. Recently, an electronics manufacturer installed a Saccade-MD system for 3D quality inspection on its fully automated assembly line. The system has been running non-stop for the last six months and has already inspected more than one million units, with a new assembly arriving for inspection every 10 seconds.
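The throughput figures are mutually consistent. Assuming continuous operation over six months (taken here as about 182.5 days), the maximum number of assemblies the line could present for inspection is roughly:

$$N_{\max} \approx \frac{182.5\ \text{days} \times 86\,400\ \text{s/day}}{10\ \text{s/unit}} \approx 1.58 \times 10^{6}\ \text{units}$$

so more than one million inspected units is plausible for a line running at that rate.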

What the judges say

‘Saccade Vision has developed a product for 3D measurement and inspection that addresses some major challenges for line scan-based 3D technology today. Its MEMS scanner... enables orientation and density of projected lines to be individually adapted to the part and surface properties, as well as to the measurement or inspection task.

‘Only the areas of interest are targeted, and the data resolution is adapted to each specific area based on the digital CAD model analysis of the sample.

‘The Saccade approach avoids the requirement for relative movement between part and sensor, leads to very time-efficient data acquisition, and increases the 3D data quality, through the avoidance of glare or occlusions, for instance.’

Extended SWIR cameras with colloidal quantum dot sensors

SWIR Vision Systems has developed the Acuros CQD eSWIR family of cameras. These cameras with their colloidal quantum dot (CQD) sensors provide sensitivity from 400 to 2,000nm wavelength and are offered in three resolutions.

The firm’s current 2.1-megapixel camera offers the lowest cost per megapixel on the market, according to SWIR Vision Systems, with interfaces designed around international standards. The company has now extended the responsivity of these high-density, full HD focal plane arrays out to 2,000nm.

The CQD eSWIR camera sensors are fabricated with low-cost materials and CMOS-compatible fabrication techniques, an advance towards broadly accessible high-definition shortwave infrared imaging. The camera's lower price point and its non-ITAR, EAR99 export classification are expected to drive higher adoption rates globally, broadening the market for SWIR camera technology.

SWIR Vision Systems synthesises lead sulphide-based quantum dot nanoparticles and processes these into thin-layered photodiodes. The QD particles are typically designed to provide a spectral response tuned for the 400 to 1,700nm visible-SWIR wavelength band.

The company exploited a property of its CQD sensor technology: larger-diameter quantum dots respond to longer wavelengths, so increasing the particle size broadens the sensor's optical response further into the SWIR. Broader-bandwidth sensors can address a wider range of SWIR imaging applications.
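The link between particle size and wavelength follows from photon energy. A photodiode responds only to photons whose energy exceeds the absorber's effective bandgap $E_g$, so its long-wavelength cutoff is approximately

$$\lambda_c = \frac{hc}{E_g} \approx \frac{1239.84\ \text{eV nm}}{E_g}$$

Extending the cutoff from 1,700nm to 2,000nm therefore means lowering the effective bandgap from about 0.73eV to about 0.62eV. In quantum dots the effective bandgap rises as the particles shrink (quantum confinement), so larger dots give a smaller gap and a longer cutoff wavelength. These are illustrative calculations, not figures published by SWIR Vision Systems.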

Shortwave infrared cameras are already deployed to inspect silicon wafers and semiconductor die for void and edge defects. The technology is also used to detect moisture levels in packaged products; thickness and void detection on clear coat films; glass bottle imaging; bruise detection in fruits and vegetables; inspection of lumber products; detection of water or oil on metal parts; imaging through smoke and mist; surveillance and security monitoring; crop monitoring; and glucose monitoring, among many other applications.

What the judges say

‘SWIR Vision Systems has created a novel camera line – Acuros – within the much needed UVA-Vis-SWIR range. In addition to a wide spectral range, the cameras offer significantly higher resolution than comparable InGaAs-based cameras.

‘The camera line has the potential to be a game changer in many important fields, including spectral imaging, scattering reduction imaging, recycling, food and agricultural quality assessment, semiconductor inspection and medical imaging.’