Researchers have developed an optical neural network (ONN) that could lead to the development of faster, smaller and more energy-efficient image sensors.

It could reduce the need for high camera resolution and complex electronic processing in machine vision tasks.

The network, described in Nature Photonics, can filter information from an image before it is captured on camera.

The method could be applied to numerous imaging tasks. In early cancer detection, for example, it could be used to isolate cancer cells from millions or even billions of other cells.

With digital systems, images are saved and then sent to a digital electronic processor that extracts information, whereas ONNs can be used to filter the information first, saving time and power.

“Light coming into the sensor is first processed through a series of matrix-vector multiplications that compresses data to the minimum size needed,” explained Tianyu Wang, leader of the research team from Cornell University College of Engineering in New York.

This is similar to how human vision works: we notice and remember the key features of what we see, but not all the unimportant details.

“By discarding irrelevant or redundant information, an ONN can quickly sort out important information, yielding a compressed representation of the original data, which may have a higher signal-to-noise ratio per camera pixel,” Wang continued.

His team showed that the ONN pre-processors can efficiently compress data, achieving a compression ratio of up to 800-to-1 (e.g. compressing a 1,600-pixel input to just 4 pixels). Despite such compression, the ONN pre-processors can still accurately perform a variety of computer-vision tasks.
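As a rough illustration of the matrix-vector compression Wang describes, the sketch below reduces a 1,600-value input to 4 values with a single multiplication. It is a toy model, not the researchers' method: the `encode` function, the random weights and the single linear layer are all assumptions made for illustration, whereas a real ONN performs these operations optically and with weights learned for a specific task.

```python
import random

def encode(pixels, weights):
    """Compress an image with one matrix-vector multiplication.

    Each row of `weights` produces one output value, so the number
    of rows sets the size of the compressed representation.
    """
    return [sum(w * p for w, p in zip(row, pixels)) for row in weights]

random.seed(0)  # fixed seed so the toy data is reproducible

n_in, n_out = 1600, 4  # input pixels and output pixels, as in the article's example

# Hypothetical input image, flattened to a list of pixel intensities.
image = [random.random() for _ in range(n_in)]

# Hypothetical weight matrix (n_out rows, n_in columns). These values
# are random placeholders; a trained encoder would learn them.
weights = [[random.random() for _ in range(n_in)] for _ in range(n_out)]

compressed = encode(image, weights)
print(len(image), "->", len(compressed))  # prints "1600 -> 4"
```

Only the four output values would need to be read out by the camera, which is where the savings in pixels and downstream electronic processing would come from.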

Consequently, ONNs could enable image-sensing applications to “operate accurately with fewer pixels, fewer photons, higher throughput and lower latency”, according to the researchers, which could lead to the development of faster, smaller and more energy-efficient image sensors.

They could also be combined with event cameras, which are triggered only when the input signal changes, to compress both spatial and temporal information.

ONNs could therefore help reduce the need for high camera resolution and complex electronic processing in machine vision tasks, especially those with incoherent broadband illumination. They could be particularly helpful where low-power sensing or computing is required, for example in satellite-based imaging, as well as in a wide range of other vision tasks.

ONNs show promise across numerous vision applications

In their paper, the researchers claim that the ONN outperforms linear optical encoders of similar size at classifying images from flow cytometry – a method of analysing cells in a fluid stream – as well as at identifying objects in a 3D scene and on other machine-vision benchmarks.

ONNs therefore show promise across a range of imaging applications.

For example, an ONN trained to identify the physical characteristics of cancer cells may be able to detect and isolate them immediately using flow cytometry.

Doctoral student Mandar Sohoni explained: “To generate a robust sample of cells that would hold up to statistical analysis, you need to process probably 100 million cells. In this situation, the test is very specific, and an ONN can be trained to allow the detector to process those cells very quickly, which will generate a larger, better dataset.”

The researchers also created an image using data produced by ONN encoders that were trained only to classify the image. “The reconstructed images retained important features, suggesting that the compressed data contained more information than just the classification,” said Wang. “Although not perfect, this was an exciting result, because it suggests that with better training and improved models, the ONN could yield more accurate results.”

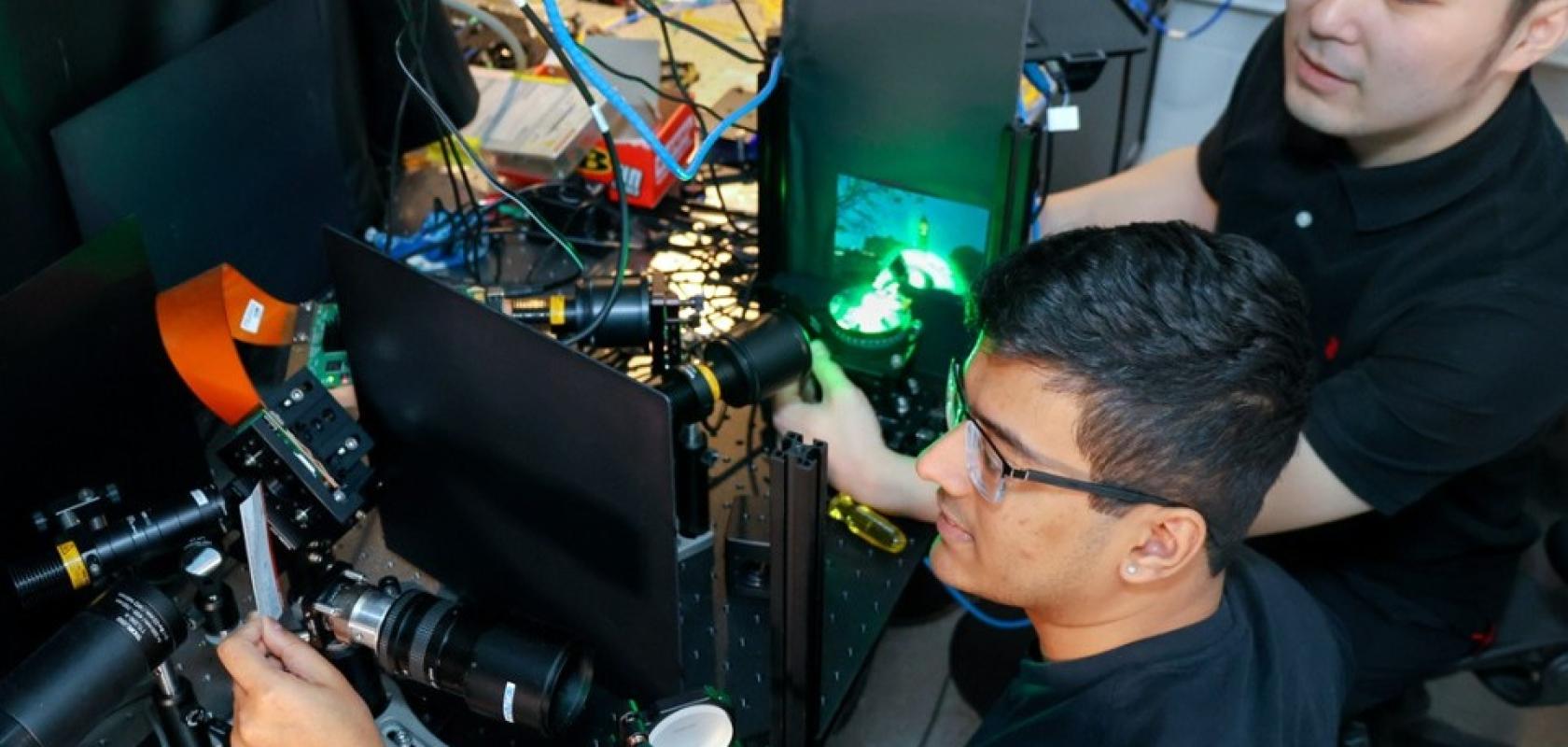

Image: Charissa King-O’Brien