Imaging is becoming ubiquitous. Image sensors and computing are now so cheap that imaging is just another function on any number of products. Autonomous cars are able to navigate and avoid collisions and obstacles thanks, in part, to vision sensors; Dyson’s new robot vacuum cleaner navigates using vision, while robots can harvest crops when they ripen and destroy weeds selectively, all using advanced imaging (more on Dyson’s robot vacuum cleaner on page 28 and automated harvesting on page 32). Embedded vision, a term meaning essentially that the image processing happens onboard the device, could be used to describe each of these examples – and it’s infiltrating all areas of society, from healthcare to transport to retail.

The machine vision sector deals in advanced imaging as does embedded vision – and machine vision has embedded products, smart cameras being one example – yet the two markets are largely separate, mainly down to price. ‘The embedded vision market has really come out of the high-volume consumer sector,’ commented Mark Williamson, director of corporate market development at machine vision supplier Stemmer Imaging. ‘Machine vision hasn’t really touched this market because the price point of what we’ve had as technology is too high. Automotive and robot companies have gone and developed vision technology from scratch. The machine vision industry is waking up to the fact that there is this whole mass market development going on that’s not running on a PC.’

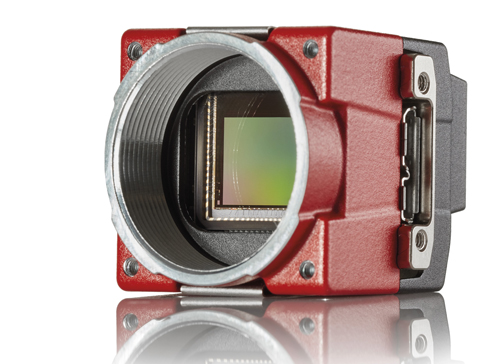

Machine vision companies are indeed waking up to the embedded market. At the Embedded Vision Summit, a computer vision conference in Santa Clara, California in May, organised by the Embedded Vision Alliance, Allied Vision launched a €99 camera targeting the embedded vision market. What’s special about the Allied Vision 1 product line is that it contains an ASIC (application-specific integrated circuit) processor, a first for any machine vision camera maker because the volumes of cameras sold into machine vision are not normally high enough to support such a system-on-chip. ASIC chips require high volumes to make them cost-effective, and so this camera aims to be a bridge between the high-performance, costly and low-volume industrial vision market and the higher-volume, lower-cost embedded market.

Andreas Gerk, Allied Vision’s CTO, told Imaging and Machine Vision Europe during the show that the typical cameras in the embedded market are not as rich in features as in the machine vision sector. Allied Vision hopes to offer some of the functionality found in machine vision to embedded vision developers through its new product line. Gerk said that the new camera platform is ‘totally different to what we have done before’.

Allied Vision is not the only machine vision supplier trying to tap into this market; other notable names exhibiting at the event in Santa Clara included Ximea, MVTec, Euresys, and Vision Components. Basler’s head of new business development, Mark Hebbel, gave a tutorial at the summit on choosing time-of-flight sensors for embedded applications, and the company is upgrading its Dart cameras with a MIPI interface that makes them more compatible with embedded vision. Stemmer Imaging’s Common Vision Blox and MVTec’s Halcon software can now both run on embedded platforms from ARM.

Some of the feedback Allied Vision received from its market analysis in the early stages of developing the 1 product line was that there was a lack of camera options available for embedded systems. Paul Maria Zalewski, product line manager at Allied Vision, said that the company has come under greater price pressure in recent years in the lower price segments for which it caters. ‘We ran into a situation where only the price matters to most of the customers in specific market segments,’ he remarked. Developing a system-on-chip means the company can ‘offer functionality in a price segment that was never reached before’.

Zalewski commented: ‘We did some calculations on the break-even point of an FPGA-based versus an ASIC-based camera. And yes, we are talking about huge and ambitious numbers for the future.’ He added: ‘In the end we wanted to create something new and revolutionary for the market and the customers. I am optimistic and, [from] the feedback we got from the Embedded Vision Summit in Santa Clara, the Allied Vision 1 product line will give engineers new options for designing embedded vision applications.’
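The kind of break-even calculation Zalewski refers to can be sketched in a few lines. The sketch below is illustrative only: it assumes a simple model in which each design has a one-off development cost plus a fixed cost per camera, and every figure in it is hypothetical rather than taken from Allied Vision.

```python
import math


def break_even_units(nre_asic, unit_cost_asic, nre_fpga, unit_cost_fpga):
    """Smallest volume at which the ASIC design's total cost is no higher
    than the FPGA design's, under a simple linear cost model:
    total cost = one-off development cost + unit cost * volume."""
    extra_development = nre_asic - nre_fpga
    saving_per_unit = unit_cost_fpga - unit_cost_asic
    if saving_per_unit <= 0:
        raise ValueError("no break-even: the ASIC is not cheaper per unit")
    if extra_development <= 0:
        return 0  # the ASIC is cheaper from the first unit
    return math.ceil(extra_development / saving_per_unit)


# Hypothetical figures, chosen only to show the shape of the calculation.
units = break_even_units(
    nre_asic=2_000_000,   # assumed one-off ASIC development cost
    unit_cost_asic=5.0,   # assumed per-camera cost of the ASIC
    nre_fpga=200_000,     # assumed one-off FPGA development cost
    unit_cost_fpga=25.0,  # assumed per-camera cost of the FPGA
)
print(units)  # 90000
```

With these assumed numbers the ASIC only pays for itself after 90,000 cameras, which illustrates why a system-on-chip needs volumes well beyond those typical of industrial vision.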

MIPI standard

Of course, €99 could still be considered a high-end price for the embedded vision market – cameras costing $10 are available. But, as Williamson at Stemmer Imaging noted, while the components might be cheap, the development cost to build an embedded vision system is a lot higher. ‘It really only makes sense if volumes are in the thousands or more,’ he said. ‘If you create a custom embedded processor for five products, it doesn’t make sense because it takes a lot longer to develop than a PC-based system would.’ Plus, in Europe, there are requirements like CE approval, which add complexity.

This is why, from a machine vision industry perspective, a standard for embedded vision is so important, to make it easier to develop embedded systems and to ensure cameras can interoperate with embedded processors. The EMVA is currently working on a machine vision standard based on the MIPI interface, which is really a consumer product interface – ARM chips are supplied with a MIPI connection and it is the interface used for cameras on a mobile phone, for example. Allied Vision’s 1 product line includes a MIPI interface.

USB 3.0 is an option for building an embedded vision system, according to Williamson, but it uses processor bandwidth because it doesn’t have direct memory access into the processor. MIPI, on the other hand, has been designed specifically by the processor manufacturers so that the camera data feeds directly into the processor. The EMVA embedded standard therefore makes embedded systems more compatible with the machine vision sector. ‘Mobile phone cameras are not machine vision cameras; they’re not asynchronous reset or global shutter, all the things a machine vision system requires,’ Williamson explained. ‘The cameras that are available to work with MIPI at the moment don’t meet many of the machine vision market needs.’

The standard is being driven by the machine vision industry. ‘From the Embedded Vision Alliance point of view, they are less interested in standards rather than promoting partnerships to realise solutions,’ Williamson added. ‘Those in the embedded vision community are interested in collaborating and trying to get people to cross-fertilise technologies. It’s the machine vision sector that’s saying “we need a standard to make the cameras interoperate better with the embedded processors”.’

While the embedded vision market represents an opportunity for machine vision suppliers, there is the question of how embedded processing will alter factory automation. Most factory inspection systems are still PC-based, something Zalewski feels will largely remain the case, in the short term at least. PCs are more powerful than embedded boards, and traditional systems can be upgraded with additional peripherals much more easily than an embedded system designed for a specific task.

Allied Vision's 1 product line contains an ASIC chip and targets the embedded vision market

However, he added that the initial cost of an embedded system is much lower than a classic PC-based system. ‘From my point of view, it is only a matter of time until higher performance will be available in embedded boards. This will accelerate the transition from PC-based systems... to embedded systems.’

To some extent, the machine vision sector already has embedded vision solutions in the form of smart cameras. ‘Inside a smart camera from Teledyne Dalsa there will be an embedded processor running algorithms with a custom processor board and custom camera interface. But it’s a product dedicated to factory automation,’ remarked Williamson. He added: ‘Some embedded processors are getting close to the performance of PCs, so you can really start to build quite powerful vision systems.

‘In the machine vision market, at the moment people are generally taking Nvidia or Odroid processors and building lower-volume embedded vision applications,’ he continued. ‘To do embedded vision properly you need to have volume. I think the majority of successful embedded vision projects will be at the volumes where it’s viable to develop a dedicated application-specific processor board. We are already seeing companies like Acrosser offer template boards, which for volume can be customised.’

New business models

Will embedded vision be a revolution or an evolution for the machine vision market? Patrick Schick, product manager at machine vision camera maker IDS Imaging Development Systems, asked this question in a presentation he gave at the UK Industrial Vision Association’s machine vision conference, which took place in Milton Keynes, UK, at the end of April. Schick concluded that it will be an evolution. He said that embedded processing will come into its own for reducing, as early as possible, the data that’s transferred to a back-end system for analysis in order to speed up processing.

So how will companies generate profit from embedded vision? Williamson asked: do engineers buying embedded components expect the same support model? ‘I predict a change so the general support is provided by the community, while the integration specialist provides paid support for custom integration required for a volume solution,’ he said.

There are already embedded vision development zones out there for those building embedded platforms – FPGA and SoC company Xilinx offers its ReVision online development zone for sharing reference designs, libraries, and experience about embedded vision, and Basler also has its own version called Imaging Hub.

Williamson warned that developers should be cautious about low-cost, off-the-shelf hardware like the Raspberry Pi, despite its ready availability. ‘The trouble is that in three months’ time the camera will be obsolete, there being no long-term availability,’ he said. ‘The challenge with proper embedded vision is you need longevity of supply, guaranteed availability of the sensors, and standards such that when the camera does change it’s easy to replace it – which is where the MIPI standard comes in.

‘Companies like Basler, IDS and Point Grey are all driving the price of machine vision cameras to the bottom. But it’s not the bottom in terms of embedded vision. The low prices are because these companies are getting involved in applications where there is embedded vision and the volumes are higher. You just have to deal with low-volume customer support in an efficient way. A new business model needs to evolve, one that already exists in other markets.’

He concluded: ‘Some people in the machine vision industry are scared to some extent of the consumer embedded vision markets coming in and taking away what they already have. I would prefer to see it as an opportunity to address a wider, higher-volume market that previously was out of reach.’

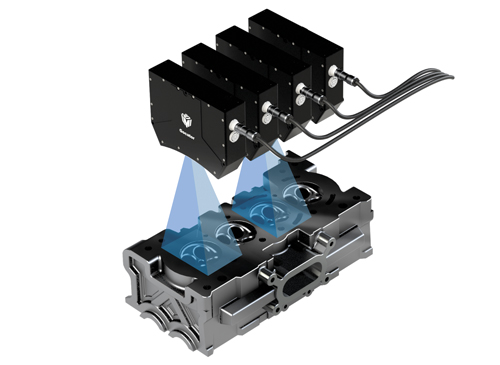

Smart cameras from Cognex or Teledyne Dalsa contain embedded processing, and there are now smart 3D sensors available that make 3D vision available as a designated product, with light source and processing all included. LMI’s Gocator 3D smart sensor is one such product. It was introduced in 2011 and has an all-in-one, precalibrated design.

LMI’s CEO, Terry Arden, commented: ‘Embedded controllers are not just suitable approaches for processing 3D data, but a necessity for handling the high speeds involved in laser profile scanning or the high data loads involved in stereo fringe projection. Scan rates can easily get up to 4kHz (laser) or 10Hz (fringe). Data rates can reach 1GB/s. Buffering and transmitting raw image data over GigE, Camera Link, or USB would introduce cost, complexity, and often result in too high a latency before a pass/fail decision can be generated.’

The embedded controllers in the Gocator 3D sensors contain both an FPGA and multicore processors that extract laser or fringe data from raw images, and then convert it to a 3D point cloud for processing in real time.
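A rough calculation shows why this on-board reduction matters. The sketch below is a back-of-the-envelope estimate, not a description of the Gocator’s internals: the sensor region, pixel depth and bytes per profile point are all hypothetical, while the 4kHz scan rate comes from Arden’s figures and the link speed is the nominal 1Gb/s of GigE before protocol overhead.

```python
# Nominal GigE throughput: 1 Gbit/s, ignoring protocol overhead.
GIGE_BYTES_PER_S = 1_000_000_000 // 8


def raw_image_rate(width_px, height_px, bytes_per_px, frames_per_s):
    """Bytes per second if every raw frame were sent off the sensor."""
    return width_px * height_px * bytes_per_px * frames_per_s


def profile_rate(width_px, bytes_per_point, profiles_per_s):
    """Bytes per second if only one height value per column is sent."""
    return width_px * bytes_per_point * profiles_per_s


# Hypothetical laser profiler: a 1280 x 200 pixel region of interest,
# 8-bit pixels, scanned at the 4kHz rate quoted above.
raw = raw_image_rate(width_px=1280, height_px=200, bytes_per_px=1,
                     frames_per_s=4000)
# Assumed output after on-board extraction: one 16-bit value per column.
reduced = profile_rate(width_px=1280, bytes_per_point=2,
                       profiles_per_s=4000)

print(raw)                       # 1024000000 bytes/s, about 1GB/s
print(reduced)                   # 10240000 bytes/s, about 10MB/s
print(raw > GIGE_BYTES_PER_S)    # True: raw frames exceed a GigE link
print(reduced < GIGE_BYTES_PER_S)  # True: extracted profiles fit easily
```

Under these assumptions the raw stream is roughly eight times what a GigE link can nominally carry, while the extracted profiles are a hundred times smaller, which is consistent with Arden’s point that the processing has to happen inside the sensor.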

LMI's Gocator 3D sensor approach to measuring cylinder heads in internal combustion engines

Arden said that the advantages of embedded 3D sensors are that the inspection system is easier to scale simply by adding more sensors, and that it makes 3D vision easier to use by process control engineers who might not have in-depth machine vision expertise. LMI has just launched a dedicated 3D measurement system for measuring cylinder heads in internal combustion engines. The Gocator 3210 with cylinder head volume checker gives a high-resolution 3D scan with an accuracy of ±0.04cm³.