Sorting and recycling waste is not only crucial for the planet, it is also big business.

‘One of the key components of waste sorting is quality of the material you are sorting, so you can resell the material – that’s getting important nowadays,’ Eric Camirand, founder and chief executive of Waste Robotics, noted during a presentation for Imaging and Machine Vision Europe as part of a webinar on hyperspectral imaging.

Recycling centres get paid to receive waste and sort it. Everything they can separate out to be recycled – or resold if possible – saves on material going to landfill. The material that’s missed and sent to landfill costs money. ‘They’re trying to avoid the landfill cost and capture quality products. It’s all about sorting out quality in the least expensive way,’ Camirand explained.

Many recycling centres still rely on hiring people to sort through waste on a conveyor, which can be inefficient and unpredictable, with material that could be reused sent to landfill. It’s also not a pleasant job. Waste Robotics, based in Trois-Rivières in Quebec, Canada, is trying to automate this process with robotic sorting. Camirand said sorting should be: inexpensive; effective at capturing quality material; precise, adaptable and reconfigurable; predictable and reliable; and something that improves the overall operation, all of which an automated system can offer.

Waste Robotics builds its own scanners incorporating multiple sensors, including RGB, 3D and hyperspectral cameras, and uses AI to make sense of the data. The company develops tailored robotic solutions for different types of waste. It has projects deployed in Canada, the US and France, including lines for sorting different plastics and for sorting construction and demolition debris.

Hyperspectral imaging lets Waste Robotics distinguish between types of plastic, or sort construction material such as wood according to whether it carries glue or paint. It provides information that the company's other imaging sensors don't.

Camirand said that near-infrared optical sorters have been used to separate waste, combined with air jets to eject material, but these sorters can't distinguish between individual objects. With hyperspectral imaging, by contrast, a container made of several plastics can be identified as a single object and set aside for further processing. Such containers are very difficult to recycle, so they are removed from the line to prevent cross-contamination of other plastics.
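To illustrate why object-level grouping matters, here is a minimal sketch, not Waste Robotics' method, assuming a hypothetical per-pixel material map has already been produced from the hyperspectral data. It groups touching non-background pixels into objects with a flood fill and flags any object that contains more than one plastic.

```python
from collections import deque

BACKGROUND = "bg"  # hypothetical label for conveyor-belt pixels

def find_objects(material_map):
    """Group 4-connected non-background pixels into objects.

    Returns a list of objects, each a list of (row, col) coordinates.
    """
    rows, cols = len(material_map), len(material_map[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or material_map[r][c] == BACKGROUND:
                continue
            # Breadth-first flood fill starting from this seed pixel
            pixels, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and material_map[ny][nx] != BACKGROUND):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            objects.append(pixels)
    return objects

def flag_multi_material(material_map):
    """Return (materials, is_multi_material) for each detected object."""
    results = []
    for pixels in find_objects(material_map):
        materials = sorted({material_map[y][x] for y, x in pixels})
        results.append((materials, len(materials) > 1))
    return results

# Toy belt: a PET container with a PP part touching it, and a separate HDPE item
belt = [
    ["PET", "PET", "bg", "HDPE"],
    ["PET", "PP",  "bg", "HDPE"],
    ["bg",  "bg",  "bg", "bg"],
]
print(flag_multi_material(belt))
# [(['PET', 'PP'], True), (['HDPE'], False)]
```

A pixel-level sorter would see three materials here; grouping pixels into objects is what lets the mixed PET/PP item be recognised as one container and diverted.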

‘Sorting plastics is difficult,’ Camirand said. One problem is that packaging changes. ‘If I train an RGB-based AI model – based on colour or shape – I’m going to put all the containers in the HDPE bin. But in reality [packaging] switches back and forth between PET and HDPE.’ Camirand showed an example of two lids that are identical to look at, but made of different plastics. ‘We really need hyperspectral,’ he said. ‘They all look white, crushed containers, which humans or [RGB] AI cannot differentiate between. We really need to rely on additional signal, which is hyperspectral, to classify them.’
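One classical way to use that additional signal is the spectral angle mapper, which compares a measured spectrum with reference spectra by the angle between them, so overall brightness differences drop out. Waste Robotics uses trained AI models rather than this rule, so the sketch below is only an illustration of the principle, and the four-band reference values are invented toy numbers, not real PET or HDPE spectra.

```python
import math

def spectral_angle(a, b):
    """Angle in radians between two spectra treated as vectors.

    Scaling a spectrum (e.g. brighter illumination) does not change the angle.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to guard against rounding slightly outside [-1, 1]
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cos_theta)

def classify(spectrum, references):
    """Return the reference label whose spectrum has the smallest angle."""
    return min(references, key=lambda label: spectral_angle(spectrum, references[label]))

# Toy four-band reflectance values, invented for illustration only
REFERENCES = {
    "PET":  [0.80, 0.45, 0.70, 0.60],
    "HDPE": [0.80, 0.70, 0.40, 0.65],
}

# A dimmer measurement with roughly the same spectral shape as the PET reference
measured = [0.40, 0.23, 0.35, 0.30]
print(classify(measured, REFERENCES))  # PET
```

Two lids that look identical in RGB can still differ in spectral shape, which is the extra dimension this kind of comparison exploits.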

One of Waste Robotics’ projects, in Prévost, Quebec, Canada, sorts 30 pieces of polystyrene per minute. ‘Right now it’s not using hyperspectral, but the client has ordered a second line for differentiating the subtleties and different types of styrofoams. Hyperspectral is really key to deliver quality sorting,’ Camirand said.

Waste Robotics is also working on other functionality in its robot solutions, such as destacking items lying on top of each other on the conveyor. ‘We believe in multi-robots and the ability to scan once and dispatch pictures to robots,’ Camirand said. To do that, the firm uses 3D sensors to locate each piece in the stack as precisely as possible. The aim is that no material is displaced as the robots work on the stack, so that when items reach the third robot they are still where they were in the original scan. ‘There’s a lot of people who think having a fast robot is a solution, but having one fast robot is not as good as having two slower robots because you can distribute the workload,’ he added.
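The scan-once, dispatch-many idea can be sketched as a simple scheduler: if items stay where they were scanned, each item's arrival time at every robot can be predicted from the conveyor speed. This is a hypothetical sketch, not Waste Robotics' software; the belt speed, robot positions and fixed pick duration are assumed parameters, and each item is simply given to the first robot downstream that will be free when the item arrives.

```python
from dataclasses import dataclass

@dataclass
class Item:
    item_id: str
    x_at_scan: float   # position along the belt at scan time (m)
    scan_time: float   # time of the single scan (s)

def dispatch(items, robot_positions, belt_speed, pick_duration):
    """Assign each scanned item to a robot along the belt.

    Assumes items do not move relative to the belt after the scan, so an
    item reaches a robot at scan_time + distance / belt_speed.
    Returns {item_id: robot index, or None if no robot is free in time}.
    """
    busy_until = [0.0] * len(robot_positions)
    assignment = {}
    # Items keep their order on the belt, so sort by arrival at the first robot
    order = sorted(items, key=lambda it: it.scan_time
                   + (robot_positions[0] - it.x_at_scan) / belt_speed)
    for item in order:
        assignment[item.item_id] = None
        for idx, robot_x in enumerate(robot_positions):
            arrival = item.scan_time + (robot_x - item.x_at_scan) / belt_speed
            if arrival >= busy_until[idx]:
                busy_until[idx] = arrival + pick_duration
                assignment[item.item_id] = idx
                break
    return assignment

# Three items scanned at x = 0 m; robots at 2 m and 3 m; belt at 0.5 m/s
items = [Item("a", 0.0, 0.0), Item("b", 0.0, 0.4), Item("c", 0.0, 2.0)]
print(dispatch(items, [2.0, 3.0], belt_speed=0.5, pick_duration=1.0))
# {'a': 0, 'b': 1, 'c': 0}
print(dispatch(items, [2.0], belt_speed=0.5, pick_duration=1.0))
# {'a': 0, 'b': None, 'c': 0}
```

With a single robot, item "b" arrives while the robot is still busy and is missed; a second robot downstream picks it up from the same scan, which mirrors Camirand's point about distributing the workload.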

AI is useful for distinguishing between plastic bags on a conveyor, for instance, which is a complex computer vision challenge. The AI algorithm will learn to see features like the knot in a refuse sack, or the valleys in between two bags.

Waste Robotics uploads every image from every sensor to the cloud, building a huge database of hyperspectral, 3D and RGB images that allows the company to train its neural networks centrally. If a client takes part in collective robot learning by sharing its data, then whatever one robot is trained on benefits the other robots in the network. ‘If a new container comes on the market and appears in Singapore, and the robot is taught there and starts picking it, then if that container shows up in Montreal we’ll pick it because we’re sharing the same database,’ Camirand explained.

In addition, because all the images are saved, the client can replay the tape if needed. There’s not always time to analyse everything, Camirand said, but the images can be revisited, helping the client understand what’s happening on its sorting lines and analyse overall productivity or the quality of the material coming into and leaving the plant.

Waste Robotics uses Specim FX17 hyperspectral cameras with halogen bulbs for lighting. ‘We’ve tried other more concentrated [lighting] solutions,’ Camirand said, though with little success. He said that, ideally, he’d like to bring all the halogen light into a narrow line for line-scan imaging. ‘At the moment we use diffuse lighting, which is okay, but we’re using too much light and there’s too much heat to manage,’ he said.

Image: Jantsarik/shutterstock.com

Filament-based light sources, like halogen or tungsten, have a broad spectrum with a lot of shortwave infrared content and therefore radiate a lot of heat. Speaking during the webinar, Steve Kinney, director of engineering at Smart Vision Lights, explained that a broad-spectrum source can produce large differences between the strongest spectral peaks of the light and its weakest components in the wavelength range of interest, making it difficult for the camera to capture all the wavelengths within its dynamic range.

He said filters can help here; alternatively, LED illumination can be tuned to output only the wavelengths needed. LEDs produce less heat, and because they are narrowband, with each band tunable so that the peaks can be flattened, the camera has less variation in the illumination to absorb. The camera's dynamic range is therefore spent on the scene rather than on differences across the light source's spectrum.
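A rough way to see Kinney's point: if the illumination at its strongest wavelength is R times brighter than at the weakest wavelength of interest, then roughly log2(R) bits of the sensor's range are used up just accommodating the light source, before any scene contrast is recorded. The sketch assumes a linear sensor with a 12-bit ADC and ignores noise; the two ratios are illustrative assumptions, not measured values.

```python
import math

def bits_left_for_scene(adc_bits, illumination_ratio):
    """Bits of a linear sensor's range left for scene contrast.

    illumination_ratio: brightest / dimmest illumination level across the
    bands of interest. log2 of that ratio is the range consumed by the source.
    """
    consumed = math.log2(illumination_ratio)
    return max(0.0, adc_bits - consumed)

# Illustrative ratios (assumed): an uneven broadband source vs flattened LED bands
for label, ratio in (("uneven broadband", 64.0), ("flattened LED", 2.0)):
    print(f"{label}: {bits_left_for_scene(12, ratio):.1f} bits left")
# uneven broadband: 6.0 bits left
# flattened LED: 11.0 bits left
```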

Smart Vision Lights provides LED lighting for both multispectral and hyperspectral imaging, although Kinney said that, largely, LED illumination is more suited to multispectral imaging.

Camirand said that, at the moment, waste sorting lines are being automated alongside manual labour. However, the end game is for fully autonomous lines that feed themselves. ‘Maybe they’ll go a little slower, but for longer days,’ he said. ‘That’s what we’re aiming for.’

--

Steps for spectral imaging success

During our recent webinar, Steve Kinney, director of engineering at Smart Vision Lights, gave his advice on designing a spectral imaging solution for factories. First, he said that multispectral imaging is more practical for production environments, as it avoids much of the complexity of hyperspectral imaging.

To move from hyperspectral to multispectral, the first step is to capture a full hyperspectral image of the sample and the background. Compare the relative intensity of each sample, and compare the peaks on common axes over normalised ranges. Then overlay the data to identify the peaks and the contrast of distinctive areas.
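The normalise-and-compare step can be sketched in a few lines. In this hypothetical illustration, min-max scaling to 0 to 1 is one assumed choice of "normalised range", and the five-band spectra are invented toy values rather than real measurements.

```python
def normalise(spectrum):
    """Min-max normalise a spectrum to the range 0..1."""
    lo, hi = min(spectrum), max(spectrum)
    if hi == lo:
        return [0.0 for _ in spectrum]  # flat spectrum carries no shape
    return [(v - lo) / (hi - lo) for v in spectrum]

def band_contrast(sample, background):
    """Absolute difference between normalised sample and background per band."""
    s, b = normalise(sample), normalise(background)
    return [abs(x - y) for x, y in zip(s, b)]

wavelengths_nm = [900, 1000, 1100, 1200, 1300]
sample = [10, 20, 60, 20, 10]       # toy values, not real measurements
background = [10, 10, 20, 30, 50]   # toy values, not real measurements

for wl, c in zip(wavelengths_nm, band_contrast(sample, background)):
    print(wl, round(c, 2))
```

Normalising first means a sample that is simply brighter overall does not register as contrast; only differences in spectral shape do.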

Step two is to identify the spectral peaks of interest which, if you’re lucky, might only be one peak. Identifying bruising on an apple, for example, is relatively straightforward and might only require one wavelength band.

Sometimes one peak is not enough – for example, when imaging different species of tree in a forest canopy. Here, additional information might be needed to highlight the different plants.
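Step two, picking the peaks of interest, can be approximated by finding local maxima in a per-band contrast curve and keeping the strongest few. In this hypothetical sketch, `k` stands for the number of bands a multispectral system would use: k=1 corresponds to a case like apple bruising, while a harder problem such as tree species may need several. The contrast values are toy numbers.

```python
def top_peaks(wavelengths, contrast, k=1):
    """Return the wavelengths of the k strongest local maxima in `contrast`.

    A band counts as a local maximum if it is at least as high as each
    neighbour (end bands only have one neighbour), so flat plateaus may
    yield more than one candidate.
    """
    peaks = []
    n = len(contrast)
    for i in range(n):
        left = contrast[i - 1] if i > 0 else float("-inf")
        right = contrast[i + 1] if i < n - 1 else float("-inf")
        if contrast[i] >= left and contrast[i] >= right:
            peaks.append((contrast[i], wavelengths[i]))
    peaks.sort(reverse=True)  # strongest contrast first
    return [wl for _, wl in peaks[:k]]

wavelengths_nm = [700, 750, 800, 850, 900, 950]
contrast = [0.1, 0.6, 0.2, 0.3, 0.9, 0.4]  # toy per-band contrast values
print(top_peaks(wavelengths_nm, contrast, k=1))  # [900]
print(top_peaks(wavelengths_nm, contrast, k=2))  # [900, 750]
```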

Optical filters can isolate the bands of interest from the rest of the spectrum, and multiple band-pass wavelengths can be built into one filter. The aim is high in-band transmission with steep cut-off edges to isolate each narrow band. Kinney suggests an optical density of five (OD5, meaning out-of-band transmission of 10⁻⁵, or 0.001%) for band-pass filters.
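Optical density is a logarithmic measure of blocking, OD = -log10(T), where T is the fraction of light transmitted. A small conversion helper makes the numbers concrete; the list of OD values is just for illustration.

```python
import math

def od_to_transmission(od):
    """Fraction of light transmitted for a given optical density."""
    return 10.0 ** (-od)

def transmission_to_od(t):
    """Optical density for a transmitted fraction t (0 < t <= 1)."""
    return -math.log10(t)

for od in (3, 4, 5, 6):
    print(f"OD{od}: {od_to_transmission(od) * 100:.4f}% transmitted")
```

Each extra unit of OD cuts out-of-band leakage by another factor of ten, which is why a steep, deeply blocking filter matters when the unwanted light is much stronger than the band of interest.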

Broadband lighting, such as halogen or sunlight, is more flexible when combined with narrowband filters, but it must supply enough light energy across the target spectrum. It also introduces heat, and much of its energy falls outside the spectral peaks of interest, so the camera's dynamic range can end up dominated by light outside the wavelengths of interest.
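To put a rough number on how little of a filament's output lands in a narrow band, one can approximate a halogen lamp as a blackbody and integrate Planck's law. This is a simplification (real lamps are not perfect blackbodies, and the bulb envelope absorbs some wavelengths), and the 3,000K filament temperature and the 50nm band centred on 1,200nm are assumptions chosen for illustration.

```python
import math

H = 6.62607015e-34      # Planck constant (J s)
C = 2.99792458e8        # speed of light (m/s)
K_B = 1.380649e-23      # Boltzmann constant (J/K)
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant (W m^-2 K^-4)

def planck_radiance(wavelength_m, temp_k):
    """Blackbody spectral radiance B(lambda, T) in W sr^-1 m^-3."""
    prefactor = 2.0 * H * C ** 2 / wavelength_m ** 5
    return prefactor / math.expm1(H * C / (wavelength_m * K_B * temp_k))

def fraction_in_band(lo_m, hi_m, temp_k, steps=2000):
    """Fraction of total blackbody radiance between lo_m and hi_m.

    Integrates the band with the trapezoid rule and divides by the
    Stefan-Boltzmann total radiance, SIGMA * T^4 / pi.
    """
    step = (hi_m - lo_m) / steps
    band = 0.0
    for i in range(steps + 1):
        weight = 0.5 if i in (0, steps) else 1.0
        band += weight * planck_radiance(lo_m + i * step, temp_k)
    band *= step
    total = SIGMA * temp_k ** 4 / math.pi
    return band / total

# Assumed values: ~3,000K filament; a 50nm band at 1,200nm and the 400-700nm visible band
print(f"50nm band at 1,200nm: {fraction_in_band(1175e-9, 1225e-9, 3000):.3f}")
print(f"visible 400-700nm:    {fraction_in_band(400e-9, 700e-9, 3000):.3f}")
```

Everything outside the chosen band is either blocked by the filter or ends up as heat, which is the inefficiency that tuned LED illumination aims to avoid.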

LED lighting, such as that offered by Smart Vision Lights, is a more targeted alternative to a broadband illumination source. It has the advantage of higher intensity in the wavelength bands of interest, and might mean filters aren’t necessary.

--

Latest products

Among recently launched products suitable for spectral imaging are lenses from Kowa with a transmission range of 450 to 2,000nm. The lenses also have reduced focus shift across this range; without visible-to-shortwave-infrared optics, users might need to refocus when changing the illumination, or change the lens.

The one-inch format lenses are available with focal lengths of 12mm, 25mm and 50mm, with 8mm, 16mm and 50mm following in October.

Ximea has released a new generation of hyperspectral cameras in the XiSpec series. The new cameras, using sensors from Imec, include line scan and snapshot mosaic versions.

The XiSpec2 cameras measure 26.4 x 26.4 x 32mm and weigh 32g. The band-pass filters have been optimised to improve spectral performance. The cameras are aimed at applications such as precision agriculture, materials science and medical imaging. For projects where space is limited, models with a USB3 flat-ribbon connection or a PCIe interface are available.

Lighting provider Ushio has updated its Spectro LED series with an indium gallium nitride (InGaN) chip that achieves an output power of 180mW across 500 to 1,000nm. The 1mm² chip emits a continuous broadband visible-to-near-infrared spectrum, so multiple LEDs are not needed to cover this hyperspectral wavelength band. Its SMBB package means no separate heat-dissipation jig is needed.

ProPhotonix has added a hyperspectral LED line light to its Cobra MultiSpec platform. It covers a spectral range of 400 to 1,000nm with excellent spatial and spectral uniformity. The spectrum is well matched to the Specim FX10 camera, for example, or to machine vision cameras using the Sony IMX174 sensor. For applications covering an extended spectrum, the Cobra MultiSpec can be configured with up to 12 wavelengths, from 365 to 1,700nm.

LEDs offer advantages over traditional halogen light sources, including compactness, longer lifetimes and greater control of the emission spectrum. System designers benefit from smaller form factors, no need for additional heat-extraction equipment, and precise spectral control, which allows better system optimisation and enables new applications.

Finally, French fibre-optic provider Sedi-ATI suggests using custom fibre-optic bundles for hyperspectral imaging.

Fibre-optic bundles are a flexible option for spectral or hyperspectral imaging, the firm said, and broadband optical fibres are particularly well suited to this type of application.

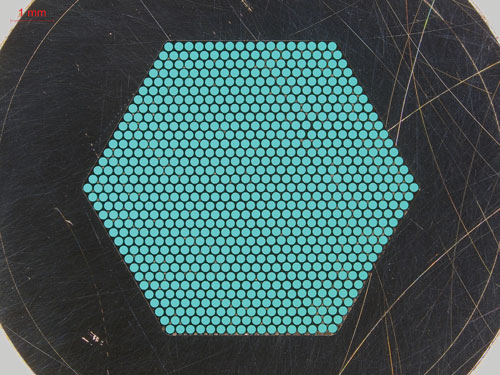

Optical fibre bundle from Sedi-ATI. Credit: Sedi-ATI

The image is reconstructed using masks that set the order of each optical fibre, the same imaging principle used in telescopes. A spectrometer would generally be used to take readings from the fibre-optic bundle.

Each optical fibre acts as one pixel of the transported image, so the number of fibres and their core diameters determine the image's pixel count and pixel size. Bundles of several hundred fibres can be assembled, transporting the image over several tens of metres.
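Because the fibres at the read-out end are not necessarily in the same arrangement as at the imaging end, the picture has to be rebuilt from a known fibre order. A minimal sketch, assuming a hypothetical calibration table (standing in for the ordering masks) that maps each fibre's read-out index to its (row, column) position at the imaging end, with one value per fibre:

```python
def reconstruct(readings, fibre_map, rows, cols, fill=0.0):
    """Rebuild a 2D image from per-fibre readings.

    readings: one value per fibre, in read-out order.
    fibre_map: hypothetical calibration table, fibre_map[i] = (row, col) of
        fibre i at the imaging end of the bundle.
    Grid positions with no fibre (gaps in the bundle) keep the `fill` value.
    """
    image = [[fill] * cols for _ in range(rows)]
    for value, (r, c) in zip(readings, fibre_map):
        image[r][c] = value
    return image

# Four fibres read out in a scrambled order, mapped back onto a 2 x 2 grid
fibre_map = [(1, 1), (0, 0), (1, 0), (0, 1)]
readings = [4.0, 1.0, 3.0, 2.0]
print(reconstruct(readings, fibre_map, rows=2, cols=2))
# [[1.0, 2.0], [3.0, 4.0]]
```

For hyperspectral use, each reading could be a whole spectrum from the spectrometer rather than a single value; the same mapping then places one spectrum per pixel.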

One example Sedi-ATI gave is a bundle of broadband optical fibres with 200µm core diameters, forming an image of about 800 pixels over a spectrum from 300nm to 2.1µm.