Highway authorities have long used vision to keep track of road users. The most recognisable application is automatic number plate recognition (ANPR), used for traffic enforcement, toll collection, parking and speed detection.

The cameras used in these scenarios can produce clear images of moving objects in widely varying light conditions. As the technology has developed, so too has the range of uses for vision in traffic and transport, and it is no longer associated only with speed cameras.

The speed camera may be much maligned, but it is hard to argue against its role in improving road safety. Now other vision-driven safety applications are making their way into the traffic sector.

Fusion Processing, which develops sensing systems for autonomous vehicles and driver assistance, has had a cyclist detection system for trucks and buses on the market for a few years. Last year the first Transport for London buses were equipped with the latest version of the system. It uses radar and cameras, with the software programmed to identify cyclists next to the vehicle and notify the driver. ‘We have a number of bus companies using it, and Transport for London has been very interested in cyclists’ protection,’ said Jim Fleming, marketing director at Fusion Processing.

One could argue that Fusion Processing’s CycleEye system arrived ahead of its time, particularly in light of the decision taken by the European Union to revise its general safety regulations for motor vehicles. Next year new safety technologies will be mandatory in European vehicles to reduce the number of fatalities and injuries on roads. Such features include systems that reduce dangerous blind spots on trucks and buses, and technology that warns the driver if it detects they are drowsy or distracted.

Elżbieta Bieńkowska, the EU Commissioner responsible for the internal market, industry, entrepreneurship and SMEs, noted that many advanced safety features already exist in high-end vehicles, and that the new regulations will raise the level of safety for vehicles across the board. For trucks and buses in particular, there will be specific requirements to improve drivers’ direct vision and remove blind spots, as well as systems at the front and side of the vehicle to warn of vulnerable road users, especially when the vehicle is turning.

Fusion Processing offers a mirror replacement version of CycleEye, which swaps the wing mirrors for a camera system that provides driver assistance; it is this version that has been installed on some Transport for London buses. The CycleEye camera mirror system (CMS) uses HD cameras covering the fields of view of class II mirrors (the main exterior mirrors) and class IV mirrors (wide-angle mirrors), plus an optional class V close-proximity field of view, and streams a live feed to displays mounted on the inside of the windscreen. Each side of the bus carries a single combined camera and radar unit, and the driver has two internal displays; Fusion Processing’s AI algorithms identify cyclists. Each unit can be fitted at the external mirror location of most buses, using the same or a similar mounting bolt pattern.

CycleEye CMS replaces wing mirrors on buses, combining HD cameras and radar to give the driver a view of what’s around the vehicle. Credit: Fusion Processing

The system achieves the low latency required by UN ECE Regulation No. 46, which covers devices for indirect vision, so the video stream is delivered in real time. It also superimposes graphics on the display screens to warn the driver if cyclists or pedestrians get too close to the vehicle.
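Fusion Processing has not published how CycleEye decides when to raise a warning. Purely as an illustration, assuming the camera classifier supplies a label and the radar supplies a range for each tracked object, a warning rule could be as simple as the sketch below. The `Detection` type, the `proximity_alerts` function and the 1.5m threshold are all hypothetical, not vendor or regulatory values.

```python
from dataclasses import dataclass

# Hypothetical fused detection: label from the camera classifier,
# range from the radar. Not Fusion Processing's actual data model.
@dataclass
class Detection:
    label: str      # e.g. "cyclist", "pedestrian", "car"
    range_m: float  # radar-measured distance from the vehicle side, metres
    side: str       # "left" or "right"

# Road users the sketch treats as vulnerable
VULNERABLE = {"cyclist", "pedestrian"}

def proximity_alerts(detections, threshold_m=1.5):
    """Return (side, label, range) for vulnerable road users inside threshold_m.

    threshold_m is an illustrative assumption, not a published figure.
    """
    alerts = [
        (d.side, d.label, d.range_m)
        for d in detections
        if d.label in VULNERABLE and d.range_m < threshold_m
    ]
    # Closest first, so a display could prioritise the most urgent warning
    return sorted(alerts, key=lambda a: a[2])
```

Fusing the two sensors this way lets the camera answer "what is it?" while the radar answers "how close is it?", which is why vehicles and distant cyclists are filtered out.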

Replacing the wing mirrors brings benefits in its own right, including eliminating the mirror head strikes that can occur when pedestrians stand too close to passing buses and trucks. ‘The head strikes are a real issue for the industry,’ Fleming said.

‘There are more and more standards out there, so safety engineering has become even more important. It’s not just about understanding sensors,’ he added.

Designers of a system such as this must also consider longevity, particularly given the conditions on London’s roads. The system has to withstand all weathers, as well as being put through a bus wash every day, Fleming explained.

In the future, Fleming foresees vision technology finding its way more often into the areas of driver assistance and autonomous driving. He said that providing technology for autonomous vehicles is a huge area for Fusion Processing, as is helping drivers identify vulnerable road users. ‘Look out for autonomous buses in the future,’ he said.

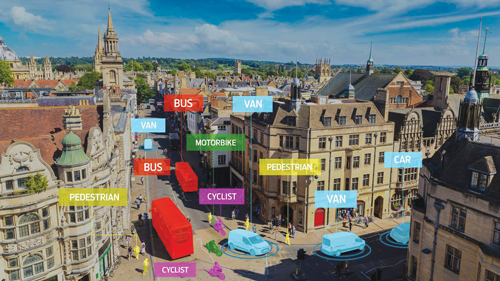

In a similar vein, London-based Vivacity Labs is providing its AI and Internet of Things technology to help Sutton and Kingston Councils in London improve their insight into active travel and, ultimately, the safety of pedestrians in high-footfall areas. Rather than preventing accidents, however, this project aims to keep people safe if they need to travel during lockdown.

The work falls under the InnOvaTe project, an initiative funded by the Strategic Investment Pot as part of the London Councils Business Rates Retention scheme. The funding will allow the boroughs involved to deliver a multi-purpose Internet of Things platform, connecting various sensors across borough boundaries. This is being used to gain new data insights to support and drive economic growth and create a safer environment across the sub-region.

A smart city pilot study in London is helping support crowd management when lockdown restrictions are eased. Credit: Vivacity Labs

The pilot study recognises the cultural shift brought about by the pandemic: a dramatic drop in traffic levels has left residents feeling safer on the roads when taking their daily exercise or making essential trips. As the world begins to return to some sort of normality, the study can support crowd management anonymously, monitor the impact of road and pavement changes, and reduce the infection risk for residents through better management of high-footfall areas.

Mark Nicholson, CEO at Vivacity Labs, explained: ‘The pandemic has seen a significant shift in travel trends, and it has brought about changes to road and pavement space in order to accommodate for active travel and help people to keep their distance.’

The project uses a sensor mounted on a lamppost. The sensor contains a local edge processor that takes video from the built-in 1080p board-level camera and runs machine learning algorithms to extract the location and classification of each road user. Under normal operation each video frame is deleted immediately after processing, so only anonymous data is extracted, stored and transmitted. The sensors have mobile connectivity, so the data can be sent to the cloud; this allows remote access at any time to check operating status, carry out debugging and maintenance remotely, and push updates as functionality improves.
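Vivacity Labs’ software is not public, but the privacy property described above (extract each road user’s position and class, then discard the frame) can be sketched in a few lines. In this minimal sketch the `detect` function is a hypothetical stand-in for the on-device ML model, and the JSON record format is an assumption; only the resulting anonymous records would leave the device.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class RoadUser:
    cls: str   # classification, e.g. "pedestrian", "cyclist", "car"
    x: float   # ground-plane position (hypothetical units: metres)
    y: float

def detect(frame):
    # Stand-in for the on-device ML model (hypothetical): each test "frame"
    # already lists its objects, so the sketch runs without a camera.
    return [RoadUser(o["cls"], o["x"], o["y"]) for o in frame["objects"]]

def process_stream(frames):
    """Yield anonymous JSON records, wiping each frame after processing."""
    for frame in frames:
        users = detect(frame)
        timestamp = frame["t"]
        frame.clear()  # discard pixels and all other frame data in place
        yield json.dumps({"t": timestamp, "users": [asdict(u) for u in users]})
```

Because the frame is cleared before anything is emitted, nothing image-derived beyond class and position can reach storage or the mobile uplink.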

Nicholson said: ‘Data insights provide the ability both to analyse where to implement changes and evaluate the effectiveness of these schemes. The next stages of regeneration are crucial, and we’re delighted to be working with Sutton and Kingston Councils to help improve their active travel insight, and assess and improve the safety of high footfall areas.’

If the pilot is successful, the scheme could be expanded across the boroughs and into the adjacent boroughs of Merton, Croydon and Richmond.

Chasing pavements

Vision is also being used in the US to help keep road users safe, through a pavement management exercise implemented by the American Association of State Highway and Transportation Officials (Aashto); in US usage, pavement refers to the road surface. The association has introduced provisional specifications for measuring pavement condition, detecting whether, and to what extent, the surface is damaged.

The association’s new specifications define measurement of ruts, cross slopes, deformations and lane-edge drop-off. However, it struggled to find a complete profiling system that complied with these specifications. Surface Systems and Instruments (SSI), together with LMI Technologies, built a 3D-profiling system able to meet these needs. The 3D sensor had to provide high-speed data acquisition with a large coverage area, large clearance distance, high measurement accuracy in an outdoor environment, and a robust industrial package with a small footprint.
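The Aashto specifications define their own measurement procedures, which are not detailed here. As a rough illustration of the kind of computation a profiler performs on each transverse profile, the sketch below derives a cross slope from a least-squares line fit and a simplified chord-based rut depth (the deepest point below a straight line joining the segment’s ends). Both functions are hypothetical and deliberately simpler than the specified procedures.

```python
def cross_slope_percent(x, z):
    """Least-squares cross slope of a transverse profile, in percent.

    x: transverse positions (m); z: surface heights (m).
    """
    n = len(x)
    mx, mz = sum(x) / n, sum(z) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxz = sum((xi - mx) * (zi - mz) for xi, zi in zip(x, z))
    return 100.0 * sxz / sxx

def chord_rut_depth(x, z):
    """Largest gap (m) below a straight chord joining the segment's end points.

    A simplified stand-in for a straightedge measurement; not the
    Aashto-specified rut depth algorithm.
    """
    x0, xn, z0, zn = x[0], x[-1], z[0], z[-1]
    chord = [z0 + (zn - z0) * (xi - x0) / (xn - x0) for xi in x]
    return max(c - zi for c, zi in zip(chord, z))
```

In practice a profile would be split into wheel-path segments before the rut calculation, and the cross slope computed across the full lane width.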

SSI had previously partnered with LMI Technologies, using sensors from its Gocator range. For this solution it selected the recently introduced Gocator 2375 sensors, which generate 3D data using laser line profiling.

American highway officials are using a rig made up of five LMI Gocator 2375 sensors mounted on a vehicle to measure the condition of pavements. Credit: LMI Technologies

Several profiling systems have been mounted on pick-up trucks and are currently in use with Aashto. To cover the specified transverse profile width of 4m or 4.25m, five Gocator 2375 sensors were mounted on each vehicle: three pointing straight down, and the outer two angled outward to keep the rig within the vehicle’s width while maintaining a wide scan.
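The mounting geometry is not given here, but the reason angled sensors can widen coverage is simple trigonometry: each sensor’s profile is rotated by its tilt and shifted by its mounting position into a common vehicle frame before the five profiles are merged. A minimal sketch, assuming a 2D transverse plane; the `Mount` offsets and angles are hypothetical, and no real Gocator values or SDK calls are used.

```python
import math
from dataclasses import dataclass

@dataclass
class Mount:
    offset_m: float  # lateral position of the sensor on the mounting bar
    tilt_deg: float  # roll about the driving direction; 0 = pointing straight down

def to_vehicle_frame(points, mount):
    """Rotate and shift one sensor's (x, z) profile points into the vehicle frame.

    x: position along the laser line; z: range along the optical axis.
    Returns (X, Z): lateral position and depth below the mounting bar.
    """
    t = math.radians(mount.tilt_deg)
    c, s = math.cos(t), math.sin(t)
    return [(mount.offset_m + x * c + z * s, -x * s + z * c) for x, z in points]

def stitch(profiles):
    """Merge per-sensor profiles [(points, mount), ...] into one profile sorted by X."""
    merged = []
    for points, mount in profiles:
        merged.extend(to_vehicle_frame(points, mount))
    return sorted(merged)
```

A sensor tilted outward by 30 degrees sees a point 2m along its axis about 1m further out laterally than a downward-pointing sensor would, which is how the outer units extend the scan without widening the rig.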

SSI also equipped the vehicles with GPS, an inertial measurement unit (IMU) for vehicle pitch and roll compensation, a camera for area imaging, two Gocator 2342 3D sensors in the vehicle frame for ride and roughness measurement, and a high-end, ruggedised notebook computer. SSI integrated all sensors with data synchronisation, and developed a software package for analysis, display and reporting in compliance with the Aashto specifications.
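SSI’s synchronisation scheme is not described. One common approach, sketched here under that assumption, is to timestamp every sensor, interpolate the IMU’s roll at each profile’s timestamp, and rotate the profile back to level. The function names and the roll sign convention are hypothetical, and pitch compensation is omitted for brevity.

```python
import bisect
import math

def interp(ts, values, t):
    """Linearly interpolate a sampled signal at time t (clamped at the ends).

    ts must be sorted in ascending order.
    """
    i = bisect.bisect_left(ts, t)
    if i == 0:
        return values[0]
    if i == len(ts):
        return values[-1]
    t0, t1 = ts[i - 1], ts[i]
    w = (t - t0) / (t1 - t0)
    return values[i - 1] + w * (values[i] - values[i - 1])

def level_profile(points, roll_deg):
    """Rotate vehicle-frame (X, h) points into a level frame.

    Assumed convention: X to the right, h upward, positive roll_deg
    means the body is rolled right-side-down.
    """
    r = math.radians(roll_deg)
    c, s = math.cos(r), math.sin(r)
    return [(x * c + h * s, -x * s + h * c) for x, h in points]

def synchronise(profile_t, points, imu_t, imu_roll_deg):
    """Compensate one profile using the IMU roll interpolated at its timestamp."""
    return level_profile(points, interp(imu_t, imu_roll_deg, profile_t))
```

Interpolating rather than taking the nearest IMU sample matters because the laser profilers and the IMU typically run at different rates, so their samples rarely coincide.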