February’s Geneva Auto Show had something a little special. The vehicle in question may not have looked like much – it was a big square box on wheels – but those who made their way to the Volkswagen stand will have seen a concept car called Sedric, the first vehicle to do away with conventional controls and, perhaps, a glimpse of the future.

The history of autonomous vehicles is longer than you might think. It is now 131 years since the first ever car, the Benz Patent-Motorwagen, went into production. It is 101 years since the launch of the Cadillac Type 53, the first car with a modern layout. And it is 91 years since the first driverless vehicle, the Achen Motor, took to the road, albeit managed via a remote control system.

And while the concept of the autonomous vehicle is now arguably most closely associated with the Google Car project, now rebranded Waymo, research has been going strong since the 1980s. Before that several attempts were made, notably General Motors’ stunning 1956 concept car, the Firebird II, which had an ‘electronic brain’ that allowed it to move into a lane with a metal conductor.

Recent years have seen an explosion in the number of companies developing technology for, or actively designing and testing, autonomous vehicles. Earlier this year, Intel spent $15.3 billion on Israeli firm Mobileye, which develops vision-based advanced driver assistance systems (ADAS). Mobileye’s EyeQ is used in more than 15 million vehicles sold as of 2017, with BMW and Intel announcing in January that they would be developing a test fleet of autonomous vehicles using the technology.

In May CB Insights published a list of 44 car and technology firms developing autonomous vehicles. Add to this the university-based projects like Bristol’s Venturer project, and Statista’s predicted market value of $42 billion for partially ($36 billion) and fully ($6 billion) autonomous vehicles seems conservative.

The Venturer autonomous vehicle crossing Bristol's Clifton suspension bridge

Why the surge now? Jim Hutchinson at Bristol-based Fusion Processing, which is involved in the Venturer project, commented: ‘It’s twofold: [we] think differently about cars today versus a generation ago… with people less bothered about driving and [instead] just wanting to get there. Connectivity is often viewed as more important than performance.’

The other reason, Hutchinson said, is technology. ‘We have reached a point where the technology is reaching sufficient maturity and the necessary components such as sensors and processors of a high enough performance can be implemented at a reasonable cost.’

As he also stated, sensor technology will be at the heart of this growth, with lidar, radar, ultrasound and, of course, camera technologies playing a major role.

A million reasons for autonomous vehicles

While it may be hard to predict whether cars of the future will look like the Sedric, like today’s cars, or something completely different, the need for these vehicles is pressing. WHO figures from 2017 state that 1.25 million people die in road traffic accidents each year and a further 20 to 50 million are injured or disabled. Nearly half of those killed are ‘vulnerable users’ (pedestrians, cyclists and motorcyclists), and road traffic injuries are the leading cause of death for those aged between 15 and 29.

The cause is overwhelmingly human error. US Department of Transportation figures from May 2016 state that driver error caused 94 per cent (2.05 million) of the country’s annual 2.19 million accidents.

Globally, the death toll equates to one death every 60 million miles travelled; the rate is lower in richer countries, at one per 94 million miles in the US.

The first death from a crash caused by an autonomous vehicle happened last year, in May 2016, when a Tesla Model S controlled by Tesla’s Autopilot crashed into a white tractor trailer that was crossing the road.

The Model S uses a combination of radar and vision systems, with Tesla’s statement on the crash saying the vision system missed the trailer as it saw ‘the white side of the tractor trailer against a brightly lit sky’. At the time 130 million miles had been driven by Tesla vehicles with Autopilot activated; in October Elon Musk tweeted the figure had risen to 222 million miles. Since this time not one further fatality has occurred. Tesla is upgrading its systems to prevent these sorts of crashes happening again.

Today, cars rolling off the production line will likely have autonomous emergency braking, lane departure warning systems and active cruise control as standard, as well as blind spot monitoring and automated parking. This is not limited to luxury brands: companies such as Kia, which traditionally competes in the lower-priced market, offer these too.

These systems represent level one (driver assistance warnings) and level two (partial automation of steering and/or speed) on the SAE automation scale. The scale runs from zero (no automation) to five (fully automated).

A good mix of sensors

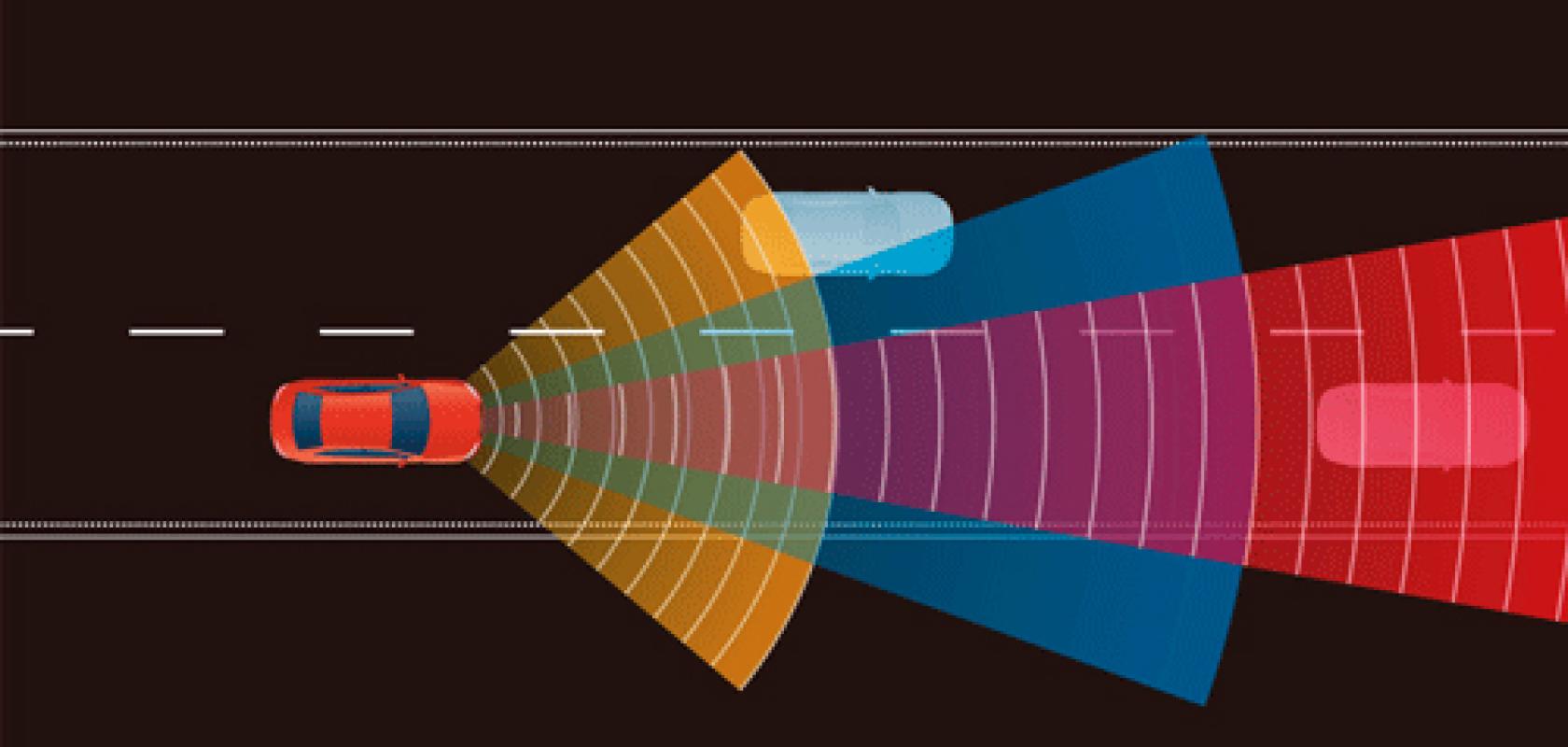

The experts interviewed for this feature all highlighted three technologies that will be used in autonomous vehicles: cameras, lidar and radar. Fusion’s Hutchinson also noted low-cost ultrasound technology, which could be used in parking applications, for example.

Narayan Purohit of On Semiconductor’s automotive image sensor division explained the three technologies: ‘Cameras are the master of classification; they are by far the cheapest and most available compared to lidar or radar. They can also see colour, which allows them to make the best of scenic information.

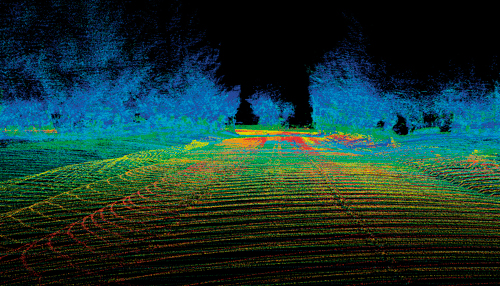

‘Lidar... is the master of 3D mapping. It can scan more than 100 metres in all directions to generate reasonably precise 3D maps of the car surroundings. Radar is the master of motion measurement.’

There are significant limitations in each of these technologies. A camera’s accuracy is severely affected by light conditions, by weather and by fast changes in light. Cameras also produce lots of data, which places demands on the car’s processing systems.

Lidar – albeit significantly less so than cameras – is also affected by weather. The flash lidar variant is too power intensive to scale, while scanning lidar, which uses mechanically steered beams, is slower to scan and prone to failure. Radar is both less granular and slightly less responsive on angular response compared to lidar, but it works in all weather conditions.

Bruce Welty of Locus Robotics, a developer of autonomous logistics factory robots, commented: ‘If you add one more sensor to the mix, even if that sensor is quite inaccurate, it can substantially improve accuracy.’ He added: ‘If I want to maximise server time and [each of four servers] has an 80 per cent uptime, then [as] all you need is one server to be up, you can get incredibly high run times. Adding another server, even with just a 50 per cent uptime will [halve your downtime].’
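
Welty’s server analogy can be checked with a few lines of arithmetic. The sketch below assumes the servers fail independently of one another, which the quote implies but does not state; the function name `combined_downtime` is made up for illustration.

```python
from math import prod

def combined_downtime(uptimes):
    """Probability that every unit is down at once, assuming independent failures."""
    return prod(1.0 - u for u in uptimes)

four = combined_downtime([0.8] * 4)          # four servers at 80 per cent uptime
five = combined_downtime([0.8] * 4 + [0.5])  # add one server at 50 per cent uptime

print(f"four servers: all down {four:.4%} of the time")
print(f"five servers: all down {five:.4%} of the time")
print(f"downtime ratio: {five / four:.2f}")
```

Under that independence assumption, four servers are all down about 0.16 per cent of the time, and the unreliable fifth server cuts that to about 0.08 per cent. The same reasoning applies to sensors only if their failures are unrelated; sensors that fail for the same reason (heavy rain, for instance) would gain less.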

Sensor fusion

Dr Sim Bamford, CTO at neuromorphic camera technology developer Inivation, commented: ‘The incident with Tesla made the point that no single system should be relied on. There should be [redundancy]. Vision can fail, radar can fail. If you have two or more systems – radar, lidar, visual – working together you’re going to get better results.’

Fusion Processing’s Hutchinson said: ‘For a simple operation, in a very benign environment, you may not need many sensors, possibly only cameras, but that would limit what you can do safely. A more common configuration would be several cameras – including stereo cameras – [and] several [long- and short-range] radar units. Sometimes it’s useful to include certain sensors, for example ultrasound, for close proximity sensors.’

Hutchinson confirmed Fusion Processing’s Cavstar autonomous vehicle also supports lidar, but the firm wasn’t using lidar in any live projects and the technology wasn’t its preferred solution.

‘We have algorithms specifically for the sensor data fusion,’ Hutchinson said. ‘You are looking at those different data streams and processing them together if possible, so you get an accurate picture – choosing complementary sensors gives you the most efficient way of doing that. Camera and radar are a complementary combination.’
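
Hutchinson does not describe Fusion Processing’s algorithms, so the following is not the firm’s method. It is a minimal sketch of one textbook approach, inverse-variance weighting, in which each sensor’s estimate of the same quantity is weighted by how precise it is assumed to be. The readings and variances are invented for illustration.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent estimates.

    estimates: list of (value, variance) pairs for the same quantity.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total

# Hypothetical readings of the distance to one obstacle, in metres.
camera = (41.0, 4.0)  # assumed noisier in range (variance 4 m^2)
radar = (40.0, 1.0)   # assumed more precise in range (variance 1 m^2)

distance, variance = fuse([camera, radar])
print(f"fused distance: {distance:.2f} m, variance: {variance:.2f} m^2")
```

The fused variance is lower than that of either sensor alone, which is the numerical version of the point that complementary sensors give a more accurate picture together.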

Some of these problems will be solved by recent advances in sensor technologies. Sensata’s Greg Noelte remarked: ‘Current lidar [does] not comply with the requirements of [SAE] level three, four, and five. Applications in level three automation really require an entirely different kind of lidar sensor.’

At this year’s CES, Sensata’s partner Quanergy demonstrated one of the first solid-state scanning lidar chips. This chip forms an optical phased array, steering its beam through the interference of light from many emitters. Noelte said that with ‘different patterns of light coming off the silicon in just the right way, you can electronically steer the beam with no moving parts. We can move, for example, 120 degrees. You can then have multiple lasers pointing in lots of different directions. This is a scanning technology, so long range, but also no moving parts. Unlike in mechanical, a truly solid-state approach has the ability to lower reaction times to microseconds, which is a very low latency [at a] 25Hz scan rate.’
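
The idea of steering a beam by interference alone can be illustrated with the textbook relation for a uniform line of emitters: a constant phase step between neighbours tilts the combined beam to an angle θ where sin θ = λ·Δφ / (2π·d). The sketch below is not a model of Quanergy’s chip. The wavelength and emitter spacing are assumed values, and reading ‘120 degrees’ as plus or minus 60 degrees is also an assumption.

```python
import math

def phase_step(theta_deg, wavelength_m, pitch_m):
    """Phase difference between adjacent emitters that steers the main lobe to theta.

    Textbook relation for a uniform linear array:
    sin(theta) = wavelength * dphi / (2 * pi * pitch).
    """
    return 2.0 * math.pi * pitch_m * math.sin(math.radians(theta_deg)) / wavelength_m

def steering_angle(dphi_rad, wavelength_m, pitch_m):
    """Inverse of phase_step: main-lobe angle in degrees for a given phase step."""
    return math.degrees(math.asin(wavelength_m * dphi_rad / (2.0 * math.pi * pitch_m)))

WAVELENGTH = 905e-9  # assumed near-infrared laser wavelength, metres
PITCH = 1.0e-6       # assumed emitter spacing, metres

for angle in (-60, -30, 0, 30, 60):
    dphi = phase_step(angle, WAVELENGTH, PITCH)
    print(f"{angle:+4d} deg -> phase step {dphi:+.3f} rad")
```

Changing the phase step is a purely electronic operation, which is why such a device needs no moving parts.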

Significant advances are also being made in vision sensors, with companies such as Chronocam and Inivation developing neuromorphic sensors designed to work more like the retina, highlighting what changes in the scene, rather than capturing the whole scene frame by frame. Inivation’s Bamford explained the technology: ‘Each pixel is an independent sensor of change in light. And if there’s a change in light, a pixel sends a signal – and it does that asynchronously so there are no clocks on the system. What you get is a flux of data about changes in the scene and you get it with extremely low latency – sometimes down to tens of microseconds latency.’
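
The per-pixel behaviour Bamford describes can be imitated in software. A real neuromorphic sensor does this asynchronously in hardware, so the frame-by-frame loop below is only an emulation of the principle. The threshold, the use of log intensity and the frame values are assumptions for illustration.

```python
import math

def events_between(reference, frame, threshold=0.2):
    """Return (row, col, polarity) for pixels whose log intensity moved by more than threshold.

    reference holds each pixel's log intensity at its last event and is updated in place.
    """
    events = []
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            log_value = math.log(value)
            delta = log_value - reference[r][c]
            if abs(delta) > threshold:
                events.append((r, c, 1 if delta > 0 else -1))
                reference[r][c] = log_value
    return events

# Two tiny hypothetical frames (arbitrary intensity units); only one pixel changes.
first = [[100.0, 100.0, 100.0],
         [100.0, 100.0, 100.0]]
second = [[100.0, 100.0, 100.0],
          [100.0, 180.0, 100.0]]

reference = [[math.log(v) for v in row] for row in first]
events = events_between(reference, second)
print(events)  # a single event, for the pixel that brightened
```

Six pixels produce one event here rather than six values, which is where the reduction in data comes from.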

Lidar is one sensor technology used to give information about a car's surroundings (Credit: Sensata)

Chronocam’s Luca Verre backed this up, highlighting that this type of sensor gives ‘very high temporal precision, reacting at microsecond precision. You never have motion blur with our sensors.’

Both companies have developed chips that give a dynamic range greater than 120dB and that drastically reduce the data being sent to the car’s processing unit. Bamford also said the camera could work in very low levels of light, approximately 0.1 lx.

While the technology is still in its relative infancy, neuromorphic sensors are already in the hands of car manufacturers: Bamford stated that Inivation is working with five of the top global car manufacturers, and the Renault group announced a partnership with Chronocam in November 2016.

Will the Sedric be the future of transport? If it is, drivers will have to get over a big trust issue first, according to a Deloitte survey.

Fusion Processing’s Hutchinson said: ‘As with anything new, there will be early adopters. That might be those that have had to give up driving but want to maintain a particular lifestyle. It might be people who are very pressed for time. As people try it, they’ll become more comfortable with it. I think it will become quite normal.’ He added: ‘At some point it will be seen as more dangerous to get into a human-driven car.’