Qualcomm Technologies' Snapdragon board is designed for mobile devices, but can be used to create other embedded vision systems. Credit: Qualcomm Technologies

Embedded computing promises to lower the cost of building vision solutions, making imaging ubiquitous across many areas of society. Whether this turns out to be the case or not remains to be seen, but in the industrial sector the G3 vision group, led by the European Machine Vision Association (EMVA), is preparing for an influx of embedded vision products with a new standard.

‘An embedded vision standard is important for the future of the machine vision market,’ commented Arnaud Darmont, the EMVA’s standards manager. ‘Right now, there still aren’t many embedded products on the market, but we see this as a trend for the future. We need to start working on the standard now, because when it becomes real, we need this standard to help embedded vision to penetrate the market.’

It’s still early days in the development of the standard, but at an initial meeting at the end of August in Hamburg, Germany, the group identified three aspects that the standard should try to address.

The first part is a software framework, so that code is compatible across different embedded platforms. The idea is that an embedded camera supplier will provide a software development kit (SDK) based on the standard that is compatible with any software package. In this way, any embedded machine vision software will run on any embedded camera.

The standard will specify software to run on top of Sony/JIIA SLVS-EC or MIPI CSI-2 D-PHY physical layers. If the board or sensor uses a different interface, it will not be covered by the framework, according to Darmont, but the group will provide examples and recommendations on how to build a similar framework for that interface.

The software framework will be based on GenICam: the GenICam SDK will be available for the user to program image processing code and connect to the sensor, and a GenTL (GenICam Transport Layer) interface will sit between the driver and the user.
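The value of this layering is that image processing code depends only on a common interface, while each camera supplier provides the platform-specific part underneath. The sketch below illustrates that idea in Python under stated assumptions: `TransportProducer`, `MipiCsi2Producer`, `SlvsEcProducer` and `mean_intensity` are hypothetical names, and the code does not reproduce the actual GenTL C API or the GenICam SDK. The fake producers return fixed test data instead of talking to a driver.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Frame:
    width: int
    height: int
    pixels: bytes  # assumption: 8-bit monochrome, row-major


class TransportProducer(ABC):
    """Hypothetical stand-in for the platform-specific layer a camera supplier ships."""

    @abstractmethod
    def physical_layer(self) -> str:
        """Name of the sensor-to-SoC link this producer drives."""

    @abstractmethod
    def grab(self) -> Frame:
        """Return the next acquired frame."""


class MipiCsi2Producer(TransportProducer):
    """Fake producer for a board whose sensor is wired over MIPI CSI-2 D-PHY."""

    def physical_layer(self) -> str:
        return "MIPI CSI-2 D-PHY"

    def grab(self) -> Frame:
        # A real producer would dequeue a buffer from the driver; this returns test data.
        return Frame(4, 2, bytes([10, 20, 30, 40, 50, 60, 70, 80]))


class SlvsEcProducer(TransportProducer):
    """Fake producer for a board whose sensor uses Sony SLVS-EC."""

    def physical_layer(self) -> str:
        return "SLVS-EC"

    def grab(self) -> Frame:
        return Frame(4, 2, bytes([10, 20, 30, 40, 50, 60, 70, 80]))


def mean_intensity(producer: TransportProducer) -> float:
    """Vendor-independent processing: relies only on the abstract interface."""
    frame = producer.grab()
    return sum(frame.pixels) / (frame.width * frame.height)


for producer in (MipiCsi2Producer(), SlvsEcProducer()):
    print(producer.physical_layer(), mean_intensity(producer))
```

The same `mean_intensity` function runs unchanged on both boards, which is the portability the framework is aiming for.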

This software framework is well defined, according to Darmont, and already has a working group. The other two parts, involving hardware, are less well defined and are still being discussed.

The second part concerns connectors and cables. Image sensors have different pin-outs, speeds and controls depending on their interface; Sony’s SLVS-EC, LVDS and MIPI CSI-2 are three examples. The proposal is to define compatible board-to-board connectors and flat ribbon cables, so that manufacturers of processing boards can include a connector that matches the sensor boards. The cables and connectors will support the voltage levels and speeds of the most common image sensor interfaces.

‘In the market now, vision systems are delivered as a camera, with the sensor, the sensor board, the processing board, all coming from the same supplier,’ Darmont explained. ‘This [part of the standard] is more for the future, if someone wants to replace the processor or the sensor board in the camera with something else compatible.’

Currently, the standards group has decided only to recommend interfaces between image sensors and system-on-chips (SoCs) for embedded vision, namely a Sony SLVS-EC or a MIPI CSI-2 interface. ‘This will be a recommendation from the standard,’ Darmont said; ‘we are not going to make a standard on top of these interfaces. It doesn’t mean that people can’t use something else, but we think the market and the processors will go in this direction [SLVS-EC and MIPI CSI-2], to offer the interface directly on the pins of the SoC. Currently this is the case with MIPI; not many processors have a dedicated interface for SLVS-EC yet. We think if we make this recommendation in the standard it might help to move [machine vision equipment suppliers] in a direction where we have it as a hardware block directly from the SoC.’

A third aspect that was discussed in Hamburg in August that might be useful for the future is to make hardware more plug-and-play. The idea behind this is to make the image sensors, image signal processors (ISPs) and the boards similar in terms of communication and the register list.

‘Not everyone agrees on this strategy, because the camera manufacturers want to keep this knowhow in-house, and the sensor guys don’t want to comply with the standards because of technical reasons. So this is more difficult,’ commented Darmont. ‘But this is also one of the identified challenges, in that every image sensor is so different, that there is a lot of work to move from one sensor to another. In an embedded world, where people would like to see individual building blocks that interconnect in terms of hardware and software, having some standardisation there would be an interesting step. But it is a challenge.’

Work on the software framework has begun, while the other two aspects are still in the discussion phase. The group plans to publish a white paper during the first half of 2019, stating what the embedded vision standard is trying to achieve and the progress that has been made.

High-end systems to benefit from lens standard

Greg Blackman talks to Arnaud Darmont at the EMVA on a new standard interface for lenses

Machine vision industry bodies have taken the first steps towards defining a standard interface for lenses. The initial meeting to form the working groups took place on 9 July; the groups will be chaired by Marcel Naggatz, from Baumer, and Erik Widding, from Birger Engineering.

Nothing has been specified yet, according to Arnaud Darmont, the EMVA’s standards manager, but he said that higher-end systems and new applications could benefit a great deal from a lens standard.

Writing on the subject for Imaging and Machine Vision Europe in December 2017, Professor Dr Bernd Jähne stated: ‘It is really surprising to see that such an essential part of a vision system as an open camera lens communication standard has been overlooked for such a long time by the industry. It is now essential to develop it, in order to make the next generation of vision systems possible.’

Darmont envisaged a potential standard as having two backwards-compatible communication protocols on the same bus: an extremely basic version, for lenses that don’t have a lot of electronics, and a more advanced protocol allowing communication in both directions at higher speed with more commands. He said that the basic version is ‘mandatory if we want to communicate with less expensive devices that might want to be compliant but don’t want to invest in the full standard.’
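Nothing has been specified yet, so the following is only a sketch of how a camera might negotiate between a basic and an advanced protocol sharing one bus. It assumes, hypothetically, that every compliant lens answers a basic `IDENTIFY` command with the highest protocol level it supports, and that only the advanced level lets the camera read a value back. All command names and classes are invented for illustration.

```python
from enum import IntEnum


class ProtocolLevel(IntEnum):
    BASIC = 1     # minimal one-way commands for lenses with little electronics
    ADVANCED = 2  # bidirectional, richer command set


class Lens:
    """Hypothetical lens; every compliant lens understands the basic commands."""

    def __init__(self, name, level):
        self.name = name
        self.level = level
        self.focus = 0

    def handle(self, command, value=None):
        if command == "IDENTIFY":  # basic protocol
            return int(self.level)
        if command == "SET_FOCUS":  # basic protocol
            self.focus = value
            return None
        if command == "GET_FOCUS" and self.level >= ProtocolLevel.ADVANCED:
            return self.focus  # read-back exists only in the advanced protocol
        raise ValueError(f"{self.name}: unsupported command {command}")


class CameraLensPort:
    """Camera side: uses the highest protocol level both ends support."""

    def __init__(self, lens, camera_level=ProtocolLevel.ADVANCED):
        self.lens = lens
        lens_level = ProtocolLevel(lens.handle("IDENTIFY"))
        self.level = min(camera_level, lens_level)

    def set_focus(self, value):
        self.lens.handle("SET_FOCUS", value)
        if self.level >= ProtocolLevel.ADVANCED:
            return self.lens.handle("GET_FOCUS")  # confirm the new position
        return None  # a basic lens cannot report back


cheap = CameraLensPort(Lens("basic lens", ProtocolLevel.BASIC))
smart = CameraLensPort(Lens("advanced lens", ProtocolLevel.ADVANCED))
print(cheap.level.name, cheap.set_focus(120))
print(smart.level.name, smart.set_focus(300))
```

Because the advanced camera falls back to the basic commands, an inexpensive lens remains usable, which is the backwards compatibility Darmont describes.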

Another aspect would be mapping over GenICam, so that the end user sees the lens as virtual parameters of the camera. However, this would come later in the implementation, because the basics have to be defined first.
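To show what ‘the lens as virtual parameters of the camera’ could mean in practice, here is a minimal Python sketch in which a camera's feature map forwards some feature names to a lens. The feature names `LensFocus` and `LensIris` are hypothetical placeholders, not names from any GenICam naming convention, and the dictionary stands in for a real GenICam node map.

```python
class LensProxy:
    """Hypothetical lens reachable over the camera's lens bus."""

    def __init__(self):
        self.settings = {"focus": 0, "iris": 0}


class CameraNodeMap:
    """Camera feature tree in which lens settings appear as ordinary camera features."""

    # Hypothetical feature-to-lens mapping; a real one would come from the standard.
    LENS_FEATURES = {"LensFocus": "focus", "LensIris": "iris"}

    def __init__(self, lens):
        self._lens = lens
        self._local = {"ExposureTime": 1000.0, "Gain": 0.0}

    def set(self, name, value):
        if name in self.LENS_FEATURES:
            self._lens.settings[self.LENS_FEATURES[name]] = value  # forwarded to the lens
        elif name in self._local:
            self._local[name] = value
        else:
            raise KeyError(name)

    def get(self, name):
        if name in self.LENS_FEATURES:
            return self._lens.settings[self.LENS_FEATURES[name]]
        return self._local[name]

    def features(self):
        return sorted([*self._local, *self.LENS_FEATURES])


lens = LensProxy()
node_map = CameraNodeMap(lens)
node_map.set("LensFocus", 250)
print(node_map.features())
print(node_map.get("LensFocus"), lens.settings["focus"])
```

The end user only ever talks to `node_map`; whether a feature lives in the camera or in the lens is hidden behind the mapping.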

The standard, according to Darmont, will not address the low-cost lens market, as these devices have to remain inexpensive. ‘We are addressing the market of higher-end systems,’ he said, giving the example of setups with two cameras and two lenses that could use just one camera and one programmable lens. The lens would toggle between two settings to grab two different views of a scene. Here, the customer would invest more in the lens, but would save money on the camera.
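The two-view example can be sketched as a simple acquisition loop that alternates a programmable lens between stored settings and grabs one frame after each change. This is an illustration only: `LensPreset`, `acquire_alternating` and the simulated `apply_preset`/`grab` functions are hypothetical, and a real system would also have to wait for the lens to settle before exposing.

```python
from dataclasses import dataclass
from itertools import cycle, islice


@dataclass(frozen=True)
class LensPreset:
    """Hypothetical stored lens setting; real parameters would depend on the lens."""
    name: str
    focus_mm: float


def acquire_alternating(presets, n_frames, apply_preset, grab):
    """Cycle the lens through the presets, grabbing one frame after each change."""
    frames = []
    for preset in islice(cycle(presets), n_frames):
        apply_preset(preset)  # reconfigure the lens before the exposure
        frames.append((preset.name, grab()))
    return frames


# Simulated hardware: each "frame" records which focus distance was active.
lens_state = {}


def apply_preset(preset):
    lens_state["focus_mm"] = preset.focus_mm


def grab():
    return f"frame@{lens_state['focus_mm']}mm"


views = acquire_alternating(
    [LensPreset("near", 150.0), LensPreset("far", 900.0)], 4, apply_preset, grab
)
print(views)
```

One camera and one lens thus deliver the interleaved near and far views that would otherwise need two cameras and two lenses.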

Applications that make use of lens control would also benefit from the standard. ‘Currently, these applications have custom solutions, custom electronic controls for the lenses with a second cable,’ Darmont explained. ‘For those, even if the protocol is more expensive, users will save on cables; they will save on the cost of the custom implementation.’

Applications currently based on GigE Vision with long cables often require a second cable to control the lens separately, which is expensive. ‘Going to a single-cable solution for those long-distance applications is probably something people will be looking for, because it’s not only the cost of the cable, it’s the cost of all the problems you have with cables,’ Darmont said. Cables, for example, have a limited lifetime when they are repeatedly bent. ‘If users go from two cables to one, they might increase the lifetime of the system,’ he continued. ‘There are some niche applications where it really makes sense.’

One question raised at the initial meeting is whether the interface can be made more generic to include devices such as encoders or light sources. In this way, these devices would be controlled directly by the camera, so that the light source no longer needs its own connection to the PC. The camera would be the bridge between the computer and the light source. ‘This type of basic interface is very similar to what we would do with lenses,’ Darmont said.
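The bridging idea can be sketched as follows: only the camera is connected to the PC, and it forwards requests to whichever device sits at a given address on its own peripheral bus. The addressing scheme, command strings and class names are all hypothetical, since the working group has not yet decided whether such a generic interface will exist.

```python
from typing import Dict, Protocol


class BusDevice(Protocol):
    """Anything on the camera's peripheral bus: lens, light source, encoder (hypothetical)."""

    def handle(self, command: str, value: float) -> None: ...


class LightSource:
    def __init__(self):
        self.intensity = 0.0

    def handle(self, command, value):
        if command != "SET_INTENSITY":
            raise ValueError(f"light: unsupported command {command}")
        self.intensity = value


class Lens:
    def __init__(self):
        self.focus = 0.0

    def handle(self, command, value):
        if command != "SET_FOCUS":
            raise ValueError(f"lens: unsupported command {command}")
        self.focus = value


class CameraBridge:
    """Only the camera is cabled to the PC; it relays requests to devices on its bus."""

    def __init__(self):
        self.bus: Dict[int, BusDevice] = {}

    def attach(self, address: int, device: BusDevice) -> None:
        self.bus[address] = device

    def request_from_pc(self, address: int, command: str, value: float) -> None:
        self.bus[address].handle(command, value)


camera = CameraBridge()
light, lens = LightSource(), Lens()
camera.attach(1, lens)
camera.attach(2, light)
camera.request_from_pc(2, "SET_INTENSITY", 0.8)
camera.request_from_pc(1, "SET_FOCUS", 420.0)
print(light.intensity, lens.focus)
```

Lens and light source share one basic interface here, which mirrors Darmont's remark that a lighting interface would look very similar to the lens one.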

‘This question hasn’t been answered, but if we find a match between what we could do for lenses and what lighting requires, then we will have something even more general,’ he concluded.

Since these articles were written, Arnaud tragically died in an accident during a trip in the United States. Our thoughts are with his family.

OPC UA Vision part 1

By Dr Reinhard Heister, VDMA Robotics and Automation

At Automatica 2018, VDMA Machine Vision published the release candidate of OPC UA Vision part 1, a 146-page document, jointly developed in only two years by 20 key players from the European machine vision industry, and backed by the international community through G3.

Accompanying the publication of the OPC UA companion specification at Automatica was an OPC UA demonstrator, developed by the VDMA Robotics and Automation association together with 26 partners from the European automation community. The demonstrator showed the potential of this new communication approach in a concrete and understandable way: interoperable, vendor-independent and skill-based.

The OPC UA companion specification for machine vision, OPC UA Vision for short, provides a generic model for all machine vision systems, from simple vision sensors to complex inspection systems. Put simply, it defines the essence of a machine vision system. The aim is not only to complement or replace existing interfaces between a machine vision system and its process environment using OPC UA, but also to create horizontal and vertical integration capabilities that do not yet exist, so that relevant data can be communicated to other authorised process participants, right up to the IT enterprise level.

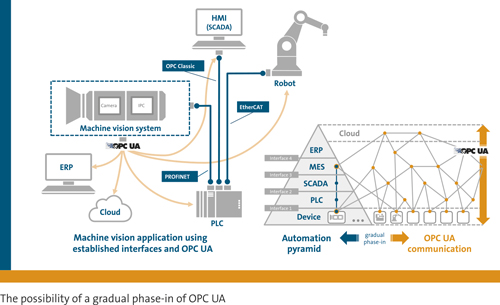

OPC UA Vision can be phased in gradually, coexisting with existing interfaces. The benefits are a shorter time to market through simplified integration, generic applicability and scalability, and improved customer perception thanks to defined and consistent semantics. OPC UA enables machine vision to speak to the whole factory.

Fundamentals

A machine vision system is any complex vision system, smart camera, vision sensor or even any other component that has the capability to record and process digital images or video streams for the shop floor or other industrial markets, typically with the aim of extracting information from this data. With respect to a specific machine vision task, the output of a machine vision system can be raw or pre-processed images, or any image-based measurements, inspection results, process control data, robot guidance data, or other information.

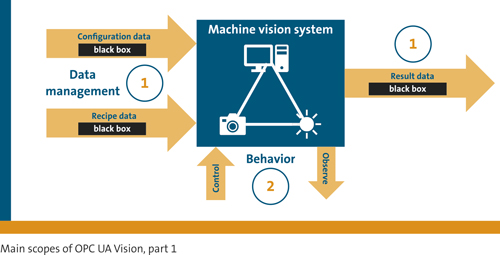

The basic concept of OPC UA Vision is a subdivision into several parts. Part 1 includes the basic specification and describes an infrastructure layer that provides basic services in a generic way. Part 2 addresses a machine vision skill layer, which provides more specific machine vision services.

Part 1, published as a release candidate in June 2018, describes the infrastructure layer, which is an abstraction of the generic machine vision system. It allows a machine vision system to be controlled in a generalised way, abstracting the necessary behaviour via a state machine concept. It handles the management of recipes, configurations and results in a standardised way, whereas the contents stay vendor-specific and are treated as black boxes.
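The combination of a state machine with opaque recipe and result contents can be illustrated with a small Python sketch. The states and transitions below are a simplified, hypothetical set chosen for illustration and should not be read as the state machine defined in the specification; recipes and results are kept as raw bytes to mirror the ‘black box’ treatment, so the interface manages them without ever parsing them.

```python
from enum import Enum, auto


class State(Enum):
    PREOPERATIONAL = auto()
    READY = auto()
    EXECUTING = auto()
    ERROR = auto()


# Simplified, hypothetical transition table: (current state, method) -> next state.
TRANSITIONS = {
    (State.PREOPERATIONAL, "activate_recipe"): State.READY,
    (State.READY, "activate_recipe"): State.READY,
    (State.READY, "start"): State.EXECUTING,
    (State.EXECUTING, "stop"): State.READY,
    (State.ERROR, "reset"): State.PREOPERATIONAL,
}


class VisionSystem:
    def __init__(self):
        self.state = State.PREOPERATIONAL
        self.recipes = {}  # recipe id -> vendor-specific bytes, never parsed here
        self.active_recipe = None
        self.results = []  # vendor-specific result blobs

    def _transition(self, method):
        key = (self.state, method)
        if key not in TRANSITIONS:
            raise RuntimeError(f"{method} not allowed in state {self.state.name}")
        self.state = TRANSITIONS[key]

    def add_recipe(self, recipe_id, blob):
        self.recipes[recipe_id] = bytes(blob)

    def activate_recipe(self, recipe_id):
        if recipe_id not in self.recipes:
            raise KeyError(recipe_id)
        self._transition("activate_recipe")
        self.active_recipe = recipe_id

    def start(self):
        self._transition("start")

    def stop(self):
        self._transition("stop")

    def reset(self):
        self._transition("reset")

    def fail(self):
        self.state = State.ERROR  # an error can occur from any state

    def publish_result(self, blob):
        if self.state is not State.EXECUTING:
            raise RuntimeError("results are only produced while executing")
        self.results.append(bytes(blob))


vs = VisionSystem()
vs.add_recipe("bottle-cap", b"\x01\x02vendor-data")
vs.activate_recipe("bottle-cap")
vs.start()
vs.publish_result(b"\x00OK")
vs.stop()
print(vs.state.name, vs.active_recipe, vs.results)
```

A client written against this generic behaviour can drive any vendor's system, even though it cannot interpret the recipe or result contents.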

In future parts, the generic basic information model will shift to a more specific skill-based information model. Machine vision skills could include presence detection, completeness inspection, and pose detection, to name a few. For this purpose, the proprietary input and output data black boxes will be broken down and substituted with standardised information structures and semantics.
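The shift from black boxes to standardised structures can be pictured as replacing an opaque byte string with typed results whose fields any consumer can read. The dataclasses below are hypothetical examples for two of the skills mentioned, presence detection and pose detection. JSON serves only as a readable stand-in here; OPC UA uses its own type system, and the actual structures will be defined in future parts of the specification.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PresenceResult:
    """Hypothetical standardised result for a presence-detection skill."""
    object_id: str
    present: bool
    confidence: float  # assumed range 0.0 to 1.0


@dataclass(frozen=True)
class PoseResult:
    """Hypothetical standardised result for a pose-detection skill."""
    object_id: str
    x_mm: float
    y_mm: float
    rotation_deg: float


def encode(result) -> str:
    """Serialise a typed result so a consumer can read it without vendor knowledge."""
    return json.dumps({"type": type(result).__name__, **asdict(result)}, sort_keys=True)


print(encode(PresenceResult("screw-3", True, 0.97)))
print(encode(PoseResult("housing-1", 12.5, -4.0, 90.0)))
```

Any process participant that knows the shared structure can interpret these results, whereas a vendor-specific blob would need vendor-specific code.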

Please contact opcua@vdma.org for a copy of the draft standard and let the VDMA working group know your opinion. Standardisation only works when the community joins forces. http://rua.vdma.org