Advances in specialised camera, imaging and microscopy technologies are paving the way for a wide range of novel applications in neuroscience and live cell imaging, with AI enhancing images further.

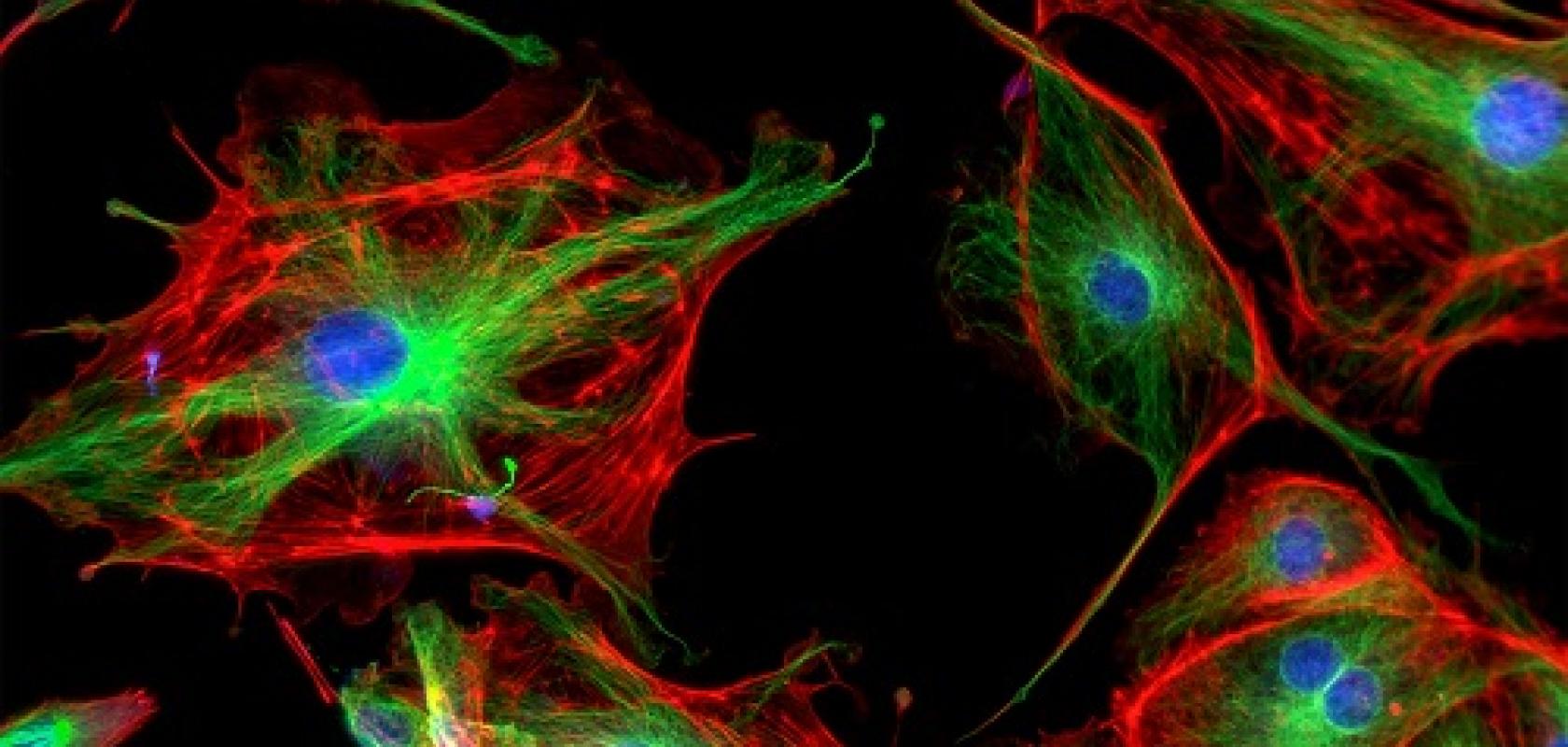

One type of imaging commonly used in live cell imaging is fluorescence microscopy, where genetically modified proteins fluoresce when excited with light. In neuroscience, for instance, in small animals such as mice and rats, fluorescence allows researchers to visualise the position and shape of nerve fibres, synapses, and cell bodies.

Naoya Matsumoto, Associate Senior Researcher at the Hamamatsu Photonics Central Research Laboratory, explains: “Creating a neural map of the whole brain is an important topic of research. At the same time, research is also being performed to manipulate neural activity by irradiating light onto visualised cells. These research activities are helping to unravel the relationship between the brain and its functions, for example, movement and memory.”

Hamamatsu Photonics has recently developed a two-photon microscope with integrated spatial light modulators (SLMs), making it suitable for observing thick biological samples, as well as for live imaging with small animals.

Spatial light modulators are pixelated liquid crystal devices with more than a million pixels, each of which can be digitally controlled to shape the wavefront of a probe laser beam. As researchers seek to capture images deeper in tissues, aberration effects – which essentially distort the laser wavefront and limit its ability to form a sharp focus deep inside the tissue – become more pronounced. One way to counter aberration is to use SLMs to shape the laser wavefront before it enters the tissue.

“Because fluorescence is emitted only at high energy densities, the locations where fluorescence occurs are localised to the focal region at the depth of interest. The use of near-infrared light, which has high biological transparency, aids in deep tissue imaging,” says Matsumoto.
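The localisation Matsumoto describes can be illustrated with a rough numerical sketch. It assumes an ideal Gaussian beam in a non-scattering, non-absorbing medium, which real tissue is not. The waist, wavelength and refractive index below are illustrative values, not figures from the Hamamatsu work. Under those assumptions, the fluorescence generated in each plane is constant for one-photon excitation but falls off with distance from the focus for two-photon excitation.

```python
import math


def rayleigh_range_um(waist_um, wavelength_um, refractive_index=1.33):
    """Rayleigh range of an ideal Gaussian beam inside a medium (micrometres)."""
    return math.pi * waist_um ** 2 * refractive_index / wavelength_um


def plane_signal(z_um, rayleigh_um, photons):
    """Relative fluorescence generated in the whole plane at distance z from focus.

    For n-photon excitation the signal scales with intensity**n. Integrated
    over a transverse plane of a Gaussian beam, that gives a factor of
    (w(z)/w0)**(-2*(n-1)), normalised to 1 at the focus.
    """
    spread = 1.0 + (z_um / rayleigh_um) ** 2  # (w(z)/w0)**2
    return spread ** (1 - photons)


if __name__ == "__main__":
    # Hypothetical values: 0.5 um waist, 0.92 um excitation, water-like medium.
    z_r = rayleigh_range_um(waist_um=0.5, wavelength_um=0.92)
    for z in (0.0, 2.0, 5.0, 10.0):
        one = plane_signal(z, z_r, photons=1)
        two = plane_signal(z, z_r, photons=2)
        print(f"z = {z:4.1f} um  one-photon = {one:.3f}  two-photon = {two:.3f}")
```

In this simplified model the one-photon column stays at 1 for every plane, while the two-photon column drops quickly away from the focus, which is why out-of-focus background is suppressed.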

Hamamatsu SLMs were recently used to counter aberration as part of a joint research effort between the company and Hamamatsu University School of Medicine. As well as helping to increase the depth of imaging, the wavefront shaping capabilities of the new microscope also improved the resolution of images.

“This research is another example of how Hamamatsu is helping researchers pursue challenging problems by developing photonic solutions to meet their needs. Here we show that by simple modifications to a standard two-photon fluorescence microscope via the inclusion of an SLM and the use of aberration correction steps, imaging depth in tissue as well as resolution can be improved,” says Matsumoto.

The research team estimated the amount of aberration by obtaining a ray trace, and modulated the wavefront of the excitation light with the SLM to perform pre-compensation – which allowed it to obtain clear images even at greater depths in scattering samples. According to Matsumoto, a further interesting illustration of the enhanced capabilities of the new device came when the team observed a transparency-enhanced sample. Although a special objective lens is normally required in such cases, correction with the SLM function of the new microscope enabled clear observation even with a water immersion objective lens.
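The idea of pre-compensation can be sketched in a few lines. This is not the team's method: they estimated the aberration from a ray trace, whereas the sketch below stands in a single primary spherical aberration term (a Zernike polynomial) with a made-up coefficient. It also assumes a phase-only SLM with 256 grey levels spanning one full 2π phase cycle, which is a hypothetical device model. The pattern simply applies the negative of the estimated aberration phase, wrapped into one cycle.

```python
import math


def spherical_aberration(rho, coeff_rad):
    """Primary spherical aberration (Zernike Z(4,0)) in radians.

    rho is the pupil radius normalised to 1; coeff_rad is a hypothetical
    coefficient standing in for an aberration estimate.
    """
    return coeff_rad * math.sqrt(5) * (6 * rho ** 4 - 6 * rho ** 2 + 1)


def precompensation_pattern(size, coeff_rad, levels=256):
    """Grey-level pattern applying the negated aberration, wrapped to one cycle."""
    if size < 2:
        raise ValueError("size must be at least 2 pixels")
    half = (size - 1) / 2
    pattern = []
    for row in range(size):
        line = []
        for col in range(size):
            rho = math.hypot(col - half, row - half) / half
            if rho > 1.0:
                line.append(0)  # outside the circular pupil: leave unmodulated
                continue
            phase = -spherical_aberration(rho, coeff_rad)  # conjugate phase
            wrapped = phase % (2 * math.pi)  # fold into one 2*pi cycle
            # The final modulo guards against rounding up to exactly `levels`.
            line.append(int(wrapped / (2 * math.pi) * levels) % levels)
        pattern.append(line)
    return pattern


if __name__ == "__main__":
    for line in precompensation_pattern(size=9, coeff_rad=1.5):
        print(" ".join(f"{value:3d}" for value in line))
```

A real system would combine many aberration terms, calibrate the SLM's phase response, and account for the SLM's position relative to the objective pupil. The sketch shows only the negate-and-wrap step.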

As Matsumoto explains, another key advantage of the new microscope is its ability to enhance resolution. Two-photon microscopy, which uses near-infrared light as the excitation light, typically has lower resolution than confocal microscopy, which uses visible light. To solve this problem, the Hamamatsu SLM works in combination with an azimuth polariser to enhance amplitude and phase modulation, as well as polarisation modulation. According to Matsumoto, these effects enable measurement at depths of less than 100μm inside a sample with lateral resolution 1.23 times higher than that of conventional two-photon microscopy.

“Compared to conventional two-photon microscopes, this microscope can observe deeper locations on a sample and has a resolution higher than the diffraction limit. This has the potential to observe cells in locations at previously unseen depths and to discriminate cells that could not be identified due to overlap,” says Matsumoto.

“Although we have demonstrated its potential with fixed tissue, our microscope, realised in a simple configuration, has the potential to demonstrate its properties in live imaging as well. It may make nerve activity clearer,” he adds.

Multiphoton microscope

Dr Stefanie Kiderlen, Application Scientist at microscope manufacturer Prospective Instruments, says that 3D imaging is also important in neuroscience research. Prospective Instruments’ MPX-1040 multiphoton microscope has an integrated air-cooled NIR femtosecond laser, resulting in a higher penetration depth, lower photodamage, and confocal resolution in 3D. The microscope also has a built-in LED source for widefield fluorescence imaging.

“In future directions, 3D live cell imaging will overtake the classical 2D cell culture in many research areas,” says Kiderlen. “3D live cell imaging includes 3D cell cultures like spheroids or organoids, but also in vivo imaging of cells in their native 3D environment. The MPX delivers microscopy techniques to image any 3D sample but still allows state-of-the-art imaging of 2D cells when needed. Key applications include 3D imaging, deep tissue imaging, confocal imaging, live animal imaging, whole organ and whole slide imaging.”

According to Kiderlen, the company’s main goal in this area is to provide the research and clinical community with a flexible, modular, and highly compact multimodal imaging platform – so that high-level microscopy “should not be limited to those with technical knowledge, indoor working space, or budget”.

“Every researcher, scientist, and clinician should have access to high-quality, affordable multimodal microscopes,” she says.

Machine learning

Ultimately, Kiderlen observes that 3D microscopy techniques are essential in life sciences, neuroscience and 3D cell culture research, for example for time-lapse imaging of organ development in zebrafish larvae, which she describes as “one of the gold-standards in animal models”.

“Long-term imaging with low-photo impact to avoid photodamage and phototoxicity is needed to image native animal development processes. Also, deep tissue penetration of the excitation wavelength is beneficial, to image structures inside an organ or tissue, or to compensate for small movements of the animal,” she says.

Looking ahead, Kiderlen predicts that several key ongoing innovations in multiphoton microscopy will result in life science researchers, scientists and clinicians soon being able to access a wide range of capabilities in a single microscope.

She adds that enhanced AI capabilities will also be included in future devices and enable virtual tissue staining, label-free virtual staining and virtual histology.

Meanwhile, Matsumoto believes that live imaging using two-photon microscopy will allow scientists to observe the progression of disease, or the process of treatment – and lead to the development of more effective therapeutics.

“The method we have developed further enhances the features of conventional two-photon microscopy. Since it is demonstrated with a simple technique, it can be applied to live imaging. We believe that it can improve the accuracy of the position and shape of observed nerve fibres, synapses, and cell bodies,” he says.

“Machine learning can [also] be used, for example, to extract images, or to infer corrected wavefronts. 4D – 3D space plus time – image measurement with a two-photon microscope produces an enormous number of images, [and] machine learning can be used to extract meaningful information from these huge datasets,” Matsumoto adds.

Optics for vision-guided cleaning robots

The future depends on vision-guided technology that can safely sanitise hospitals, warehouses and other locations to prevent the spread of pathogens and contagious diseases

Pathogens vs. Machine Vision

Frequent cleaning of high-traffic areas – for instance, hospitals, airports and public transit systems – is crucial to curtailing the spread of disease-causing pathogens. A wave of new vision-guided cleaning robots has been deployed to safely sanitise these locations without putting front-line workers, such as janitorial staff, in harm’s way, simultaneously killing germs and minimising human contact.

Lidar, a laser-based remote sensing method, or machine vision lenses and cameras allow these robots to navigate through their environment, dodge obstacles, and ensure that all required surfaces have been cleaned. Many such robots sanitise surfaces using ultraviolet (UV) radiation, which destroys the DNA or RNA of viruses when operated at a sufficient optical power. At the onset of the Covid-19 outbreak, China introduced thousands of UV-based cleaning robots, and the technology then made its way to other countries. In San Diego, CA, a hospital disinfected 30 Covid-19 patient rooms and break rooms every day using a fleet of robots able to completely sanitise a room in 12 minutes; this same task would ordinarily take 90 minutes for human employees to perform.

Does UV radiation really kill germs?

UV-C radiation, which covers wavelengths from 100-280nm, has been utilised to disinfect surfaces, air and water for decades. Research published through the American Chemical Society found that 99.9% of aerosolised coronaviruses similar to Covid-19 were killed when directly exposed to a UV-C lamp. The outer proteins of the virus are damaged by these lamps, rendering the virus inactive.
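A figure such as 99.9% corresponds to a "3-log" reduction, and exposure planning is often reasoned about in terms of dose (irradiance multiplied by time). The sketch below assumes a simple log-linear inactivation model, in which each additional dose of a fixed size (here called `d90_mj_cm2`) removes a further 90% of the remaining pathogens. Both the model and the numbers are illustrative assumptions, not values from the cited research. Real dose requirements depend on the pathogen, the surface and the lamp.

```python
import math


def log_reduction(inactivated_fraction):
    """Convert an inactivated fraction (e.g. 0.999) to a log10 reduction (e.g. 3)."""
    if not 0.0 <= inactivated_fraction < 1.0:
        raise ValueError("fraction must be in the range [0, 1)")
    return -math.log10(1.0 - inactivated_fraction)


def exposure_time_s(inactivated_fraction, d90_mj_cm2, irradiance_mw_cm2):
    """Exposure time needed under an assumed log-linear inactivation model.

    d90_mj_cm2 is the (hypothetical) dose giving a 90% reduction.
    Dose in mJ/cm^2 equals irradiance in mW/cm^2 multiplied by time in seconds.
    """
    if irradiance_mw_cm2 <= 0:
        raise ValueError("irradiance must be positive")
    required_dose = log_reduction(inactivated_fraction) * d90_mj_cm2
    return required_dose / irradiance_mw_cm2


if __name__ == "__main__":
    # Illustrative numbers only: D90 of 2 mJ/cm^2, 0.5 mW/cm^2 at the surface.
    seconds = exposure_time_s(0.999, d90_mj_cm2=2.0, irradiance_mw_cm2=0.5)
    print(f"Estimated exposure time: {seconds:.1f} s")
```

Because irradiance falls with distance from the lamp and shadowed surfaces receive far less, a robot would need to apply this kind of estimate per surface rather than per room.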

Vision-guided cleaning robots sanitise rooms without the need for any human operators. This is important because UV-C radiation can damage the skin and eyes of those exposed to it. Motion sensors can ensure that UV sources are turned off if a person comes too close to the robot.

How these robots ‘see’

Cleaning robots typically navigate through their environment using either lidar or 3D machine vision. Incorporating multiple lenses and cameras allows robots to generate 3D images of their environment and accurately gauge distances (Figure 1). The location, optical specifications and recorded images of each imaging assembly are used to determine depth through triangulation algorithms.

Figure 1: Just as our two eyes allow us to see in 3D, stereoscopic vision allows vision-guided robots to “see” their environment in 3D
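The triangulation step can be sketched for the simplest case. The sketch assumes two identical, rectified pinhole cameras mounted side by side with parallel optical axes. In that idealised setup, depth follows from the focal length (in pixels), the baseline between the cameras, and the disparity, which is the horizontal shift of a feature between the two images. The function name and numbers are illustrative and not taken from any particular robot.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in metres for a rectified stereo pair: Z = f * B / d.

    focal_px     -- focal length expressed in pixels
    baseline_m   -- distance between the two camera centres, in metres
    disparity_px -- horizontal shift of the feature between images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 800 px focal length, cameras 10 cm apart.
    for disparity in (80.0, 40.0, 10.0):
        depth = depth_from_disparity(800.0, 0.10, disparity)
        print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Since depth is inversely proportional to disparity, distant objects produce small disparities and their range estimates become sensitive to pixel-level matching errors. This is one reason lens quality and calibration matter in these systems.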


Figure 2: Edmund Optics TECHSPEC Rugged Blue Series M12 Lenses feature a ruggedised design and are ideal for weight-sensitive vision-guided robotics.

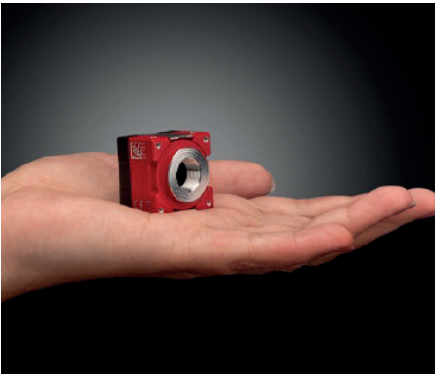

Using multiple lenses and cameras can quickly make a system very large and heavy, so compact solutions are critical for practical robots. Small M12 imaging lenses (Figure 2) and compact cameras (Figure 3) are ideal for reducing bulk while maintaining performance. Ruggedisation to protect lenses from shocks, vibrations or humidity can also be beneficial for long-term performance sustainability.

Figure 3: Allied Vision ALVIUM USB 3.1 Cameras are compact and ideal for weight-sensitive vision-guided robotics


Impact of vision-guided robotics

In addition to cleaning, vision-guided robotics are improving safety in other areas, including the restaurant industry. Robots can minimise human-to-human interaction in restaurant settings, particularly fast food, where they can perform repetitive tasks such as food preparation and serving. However, robots offer far fewer potential benefits for fine dining, where the subtle details that are critical to each dish, and the connection with customers, often require a human touch.

Vision-guided cleaning robots are not likely to disappear any time soon. Their added safety and efficiency will continue to be beneficial to hospitals and other high-traffic spaces, so do not be surprised if you spot more robotic co-workers in the future!

Created in partnership between Edmund Optics and Allied Vision.

References

Reed, N. (2010). The History of Ultraviolet Germicidal Irradiation for Air Disinfection. Public Health Reports, 125(1), 15-27. doi:10.1177/003335491012500105

Lerman, R. (2020, September 8). Robot cleaners are coming, this time to wipe up your coronavirus germs. The Washington Post.

Abajo, F. J., Hernández, R. J., Kaminer, I., Meyerhans, A., Rosell-Llompart, J., & Sanchez-Elsner, T. (2020). Back to Normal: An Old Physics Route to Reduce SARS-CoV-2 Transmission in Indoor Spaces. ACS Nano, 14(7), 7704-7713. doi:10.1021/acsnano.0c04596

Carroll, J. (2020, June 5). Could vision-guided robots be key to keeping the restaurant industry afloat? Vision Systems Design. Retrieved October 8, 2020, from https://www.vision-systems.com/home/article/14175802/could-visionguided…