The 2014 Nobel Prize in Chemistry was awarded to three scientists for the invention of super resolution microscopy, a family of techniques that overcome the diffraction limit of visible light to view cellular objects as small as tens of nanometres. Stefan Hell, of the Max Planck Institute in Göttingen, Eric Betzig, of the Howard Hughes Medical Institute in the USA, and W E Moerner, of Stanford University, USA, were recognised jointly for work stretching back through each of their careers. Betzig cited Moerner’s 1989 scientific paper on the optical detection of a single molecule inside a crystal as influential on his early work on super resolution imaging techniques.

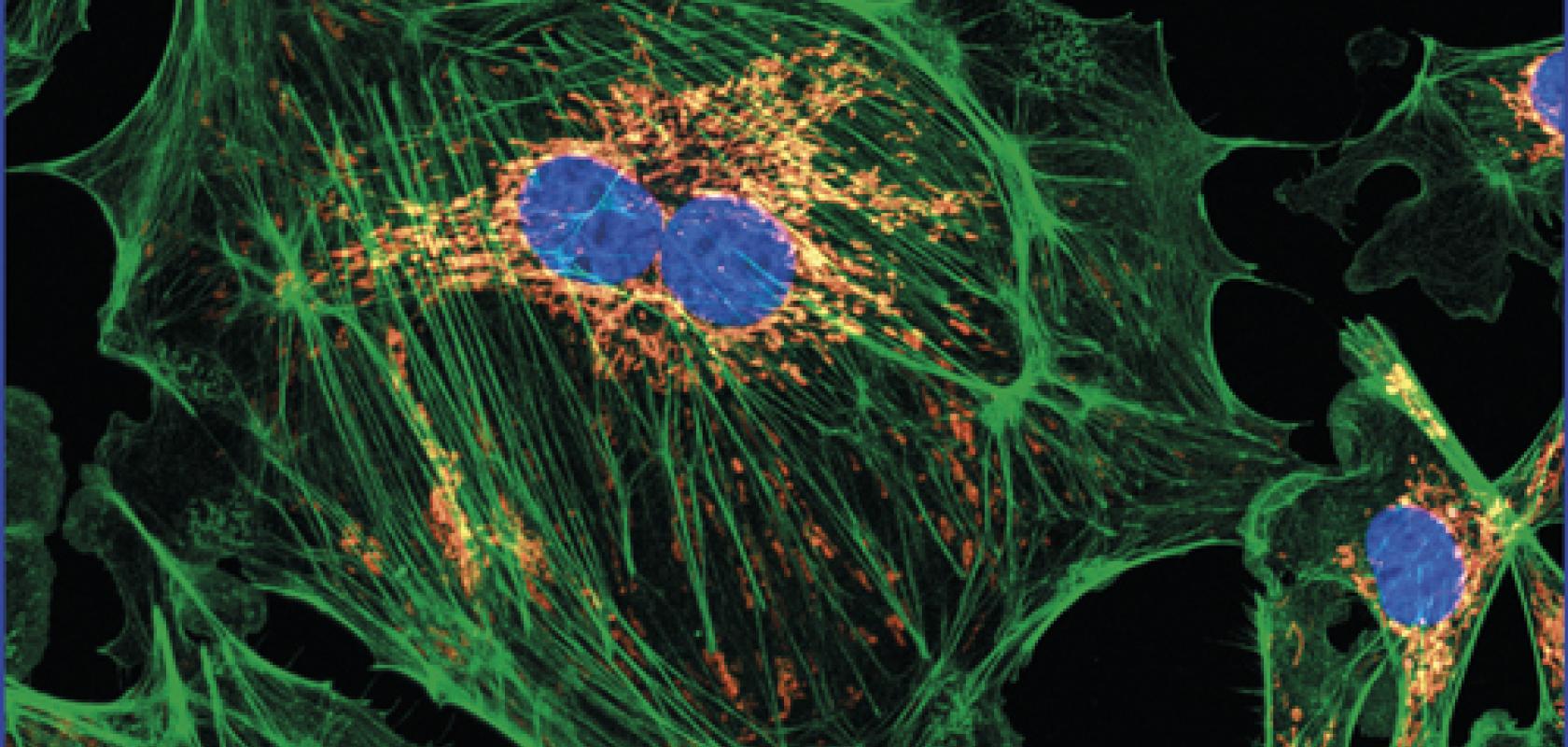

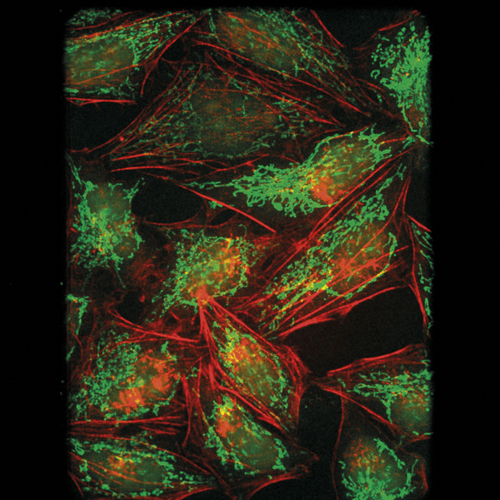

Now, there is a whole raft of super resolution microscopes commercially available, all using variations on the super resolution theme, which typically involves tagging cellular structures with fluorescent proteins to image them.

Photo-activated localisation microscopy (PALM), developed by Betzig – he built his first PALM system in his friend Harald Hess’s living room, so the story goes – and stimulated emission depletion (STED) microscopy, which Hell is credited with inventing, have been followed by lattice light-sheet microscopy, point accumulation for imaging in nanoscale topography (PAINT) and structured illumination microscopy (SIM), among others.

Leica Microsystems launched its first super resolution system in 2004, and followed this with a STED system in 2007. It’s an impressive rise of a branch of life sciences that was conceived in academia, but which has led to researchers being able to buy fully formed instruments for cellular imaging.

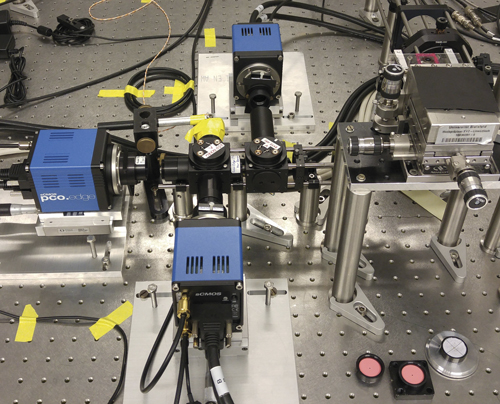

The image sensors that go into these super resolution microscopes have undergone their own gradual advance, the biggest change being the shift from CCD to CMOS. This is the case throughout life science imaging, not just for super resolution microscopy. ‘The newer microscopes of Leica, Nikon or Zeiss will only use scientific CMOS,’ commented Dr Gerhard Holst, head of science and research at camera maker PCO.

Sensitivity is particularly important when imaging live cells, as the signals returning from fluorescent molecules can be extremely faint. The disadvantage with early super resolution techniques like STED and PALM is that they use an intense laser to excite fluorescent molecules in the cell to get the signal, which kills the cells. A lot of the new super resolution techniques, however, like lattice light-sheet microscopy, can image live tissue over time without bleaching the cells.

‘While it’s important to see the cells clearly, there’s a definite trade-off in terms of cell longevity and being able to track processes inside cells over time. That’s where sensitivity becomes important, because the more sensitive the camera, the less laser illumination power is needed,’ explained Rachit Mohindra, product manager at Photometrics, which supplies cameras for life sciences.

Sensitivity is one critical characteristic of image sensors used in life science imaging, but low noise and speed matter too, according to Holst. ‘Many of the new microscopy methods that improve resolution or give detailed information about living organisms, they need speed,’ he said. ‘They need to record a number of images to reconstruct the image the researcher is interested in.’

Rise of CMOS

Life science imaging has traditionally been based on CCD image sensors because the image quality used to be better than the CMOS alternative. Now, however, CMOS, and particularly scientific CMOS (sCMOS), has reached a point where its performance equals or betters CCDs in the majority of cases.

‘A lot of [life science] investigations still use CCD cameras, but mainly for historical reasons,’ observed Holst. In January 2015, PCO were informed that Sony had decided to stop making CCDs. ‘That was another major push towards CMOS,’ Holst commented. He added: ‘I think in the future, in my opinion – and it might change – there will be only CMOS.’

Photometrics' Prime-BSI camera has a backside-illuminated sCMOS sensor

Electron multiplying CCDs (EMCCDs) are one option for imaging in low light applications. ‘EMCCDs are very sensitive, but complex and not easy to control – there have to be a lot of different voltage levels applied in a fast sequence to give the multiplication process,’ Holst explained. ‘Therefore, usually these cameras are cooled, bulky and quite expensive. Also, the application range for EMCCD cameras has diminished with the appearance of scientific CMOS.’

Scientific CMOS gives extremely low noise of around one electron, which isn’t possible with CCDs; quantum efficiency is up to 80 per cent, and can now surpass 90 per cent with backside illumination. The sensors also have high resolution and high frame rate. ‘This combination of parameters, in my opinion, means that EMCCDs are only second best for a lot of the newer microscopy methods,’ commented Holst.

He added: ‘There are some photon counting applications that might still use EMCCDs, but now that there are the new backside illuminated sCMOS sensors with quantum efficiency above 90 per cent, there is no advantage in using an EMCCD – it’s just more expensive.’

EMCCDs are backside illuminated and the multiplication process before the readout means the final readout noise is less than one electron. However, as with all multiplication processes, the noise is amplified along with the signal, which effectively reduces the speed. In addition, the amplification process, by its nature, kills the crystal lattice in the CCD semiconductor material in the long run. Holst commented: ‘Over time the gain phenomenon will degrade, and after five to ten years the multiplication gain becomes a lot lower. When this happens scientists will have to replace their EMCCD equipment.’

Photometrics introduced a backside illuminated scientific CMOS camera last year, the Prime 95B, and a second version this year, the Prime BSI with smaller pixels. ‘The Prime 95B improved upon EMCCD cameras by giving a much better spatial resolution pixel size, going from 16µm down to 11µm,’ explained Mohindra. ‘One of the benefits of this is that it allows you to use a 100x magnification objective and properly spatially sample, which was one of the limitations of using EMCCDs.’
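The sampling argument Mohindra makes can be checked with a short calculation. The sketch below is illustrative only: it assumes the Rayleigh criterion for optical resolution, a 520nm emission wavelength and a 1.4 numerical aperture objective, none of which are given in the article. Only the 16µm and 11µm pixel sizes and the 100x magnification come from the text, and the function names are hypothetical.

```python
def rayleigh_resolution_nm(wavelength_nm, numerical_aperture):
    """Smallest resolvable separation at the sample (Rayleigh criterion)."""
    return 0.61 * wavelength_nm / numerical_aperture


def sample_pixel_nm(sensor_pixel_um, magnification):
    """Size of one sensor pixel projected back onto the sample, in nm."""
    return sensor_pixel_um * 1000.0 / magnification


def meets_nyquist(sensor_pixel_um, magnification, wavelength_nm, numerical_aperture):
    """True if at least two pixels span the optical resolution limit."""
    limit_nm = rayleigh_resolution_nm(wavelength_nm, numerical_aperture) / 2.0
    return sample_pixel_nm(sensor_pixel_um, magnification) <= limit_nm


if __name__ == "__main__":
    # Assumed optics (not from the article): 520nm emission, NA 1.4 objective.
    for pixel_um in (16.0, 11.0):
        ok = meets_nyquist(pixel_um, 100, 520.0, 1.4)
        projected = sample_pixel_nm(pixel_um, 100)
        print(f"{pixel_um:.0f}um pixel at 100x: {projected:.0f}nm at the sample, "
              f"Nyquist met: {ok}")
```

Under these assumed optics the resolution limit is roughly 227nm, so pixels need to project to about 113nm or less. An 11µm pixel at 100x projects to 110nm and just satisfies this, while a 16µm pixel projects to 160nm and undersamples.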

Cell image taken by spinning disk confocal microscopy. Credit: University of Manchester and Photometrics

In addition, the Prime 95B sCMOS camera has a 1.4-megapixel resolution, the area of the sensor is larger, and it runs around 20 to 30 per cent faster than an EMCCD, according to Mohindra. ‘We envisioned the Prime 95B as a camera that replaces the EMCCD level of sensitivity, where sensitivity is the absolute key performance factor – microscopy techniques such as spinning disk, confocal, super resolution, and single molecule fluorescence all tend to be very low-light applications where the sensitivity really is crucial,’ he added.

The backside illuminated Prime BSI camera provides about a 30 per cent improvement on top of standard sCMOS in terms of sensitivity, according to Mohindra. It gives 100fps with effectively one electron noise.

Mohindra commented: ‘I don’t think CCDs will disappear entirely, but they are being pushed into niche applications.’ He said that CCDs are being relegated to basic microscopy cameras, where they are an inexpensive option. The other place where CCDs have a specific niche is luminescence imaging, where the camera monitors the natural glow from cells. Luminescence imaging needs acquisition times of minutes, potentially half an hour; here, CMOS technology performs less well because the background noise tends to be quite high, according to Mohindra. ‘This might not always be the case and CMOS technology could eventually catch up with CCDs, but at the moment CCDs are still better for long image acquisitions,’ he said.

‘There are CCD cameras that will do effectively one electron of background noise per hour,’ Mohindra continued. ‘One of our customers was looking at the circadian rhythm of plants, where every half an hour they would take a 10-minute image. So, in the future, while the majority of cameras will switch to CMOS, I think CCDs will still have a place in life science imaging.’

Scientific CMOS is now rivalling EMCCDs for life science imaging. Credit: PCO

Dark current on sCMOS is roughly 0.5 electrons per second, which is higher than a CCD camera, Mohindra stated. However, sCMOS can reach one electron of read noise – the minimum amount of signal the sensor can measure – while an optimised CCD will have three to four electrons read noise.
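A rough noise budget shows why these figures favour sCMOS for short exposures and CCDs for long ones. The sketch below is a simplification: it combines only dark-current shot noise and read noise in quadrature and ignores photon shot noise and every other noise source. The sCMOS values are those quoted above; the CCD read noise of 3.5 electrons is the midpoint of the quoted range, and reading ‘one electron of background noise per hour’ as a dark current of one electron per pixel per hour is an assumption.

```python
import math


def dark_plus_read_noise(dark_e_per_s, read_noise_e, exposure_s):
    """Camera noise floor in electrons: dark shot noise and read noise in quadrature."""
    return math.sqrt(dark_e_per_s * exposure_s + read_noise_e ** 2)


def crossover_exposure_s(dark_a, read_a, dark_b, read_b):
    """Exposure time at which camera a and camera b have the same noise floor."""
    return (read_b ** 2 - read_a ** 2) / (dark_a - dark_b)


# Figures from the article, with the CCD dark current an assumed interpretation.
SCMOS = {"dark_e_per_s": 0.5, "read_noise_e": 1.0}
CCD = {"dark_e_per_s": 1.0 / 3600.0, "read_noise_e": 3.5}

if __name__ == "__main__":
    for exposure_s in (0.01, 600.0):  # a 10ms frame and a 10-minute acquisition
        scmos = dark_plus_read_noise(exposure_s=exposure_s, **SCMOS)
        ccd = dark_plus_read_noise(exposure_s=exposure_s, **CCD)
        print(f"{exposure_s:>6}s exposure: sCMOS {scmos:.2f} e-, CCD {ccd:.2f} e-")
    t = crossover_exposure_s(SCMOS["dark_e_per_s"], SCMOS["read_noise_e"],
                             CCD["dark_e_per_s"], CCD["read_noise_e"])
    print(f"Noise floors cross at about {t:.1f}s")
```

With these numbers the sCMOS noise floor is lower for exposures up to a few tens of seconds, after which accumulated dark current dominates and the CCD pulls ahead, which is consistent with the 10-minute plant imaging example.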

Life in the future

Holst noted that CMOS can do things that are not easy to do with CCDs. PCO offers time-of-flight (ToF) CMOS sensors with two or four readout sites per pixel that can be modulated. With an external signal, the charges generated in each pixel can be directed to different readout positions, which means the incoming modulated light can be separated.

PCO’s customers use this sensor in the frequency domain for measuring fluorescence lifetimes. The sensor gives a double image; the modulation signal is then shifted and a second measurement is made. This gives four images corresponding to different time positions in the modulated signal.

‘If you assume a sinusoidal modulation, then you get the information of the phase angle at zero degrees, 90 degrees, 180 degrees, and 270 degrees, and with that you can reconstruct the sine wave and get out the information of the phase angle, as well as the modulation depth,’ explained Holst. ‘From there on you can back-calculate the fluorescence lifetime. That’s a relatively new application using these slightly older ToF sensor principles.’
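The reconstruction Holst describes can be sketched for a single pixel as follows. This is a textbook four-phase calculation, not PCO’s implementation. It assumes a purely sinusoidal signal, a single-exponential fluorescence decay, and an excitation reference with zero phase and full modulation depth; a real instrument would typically be calibrated against a sample of known lifetime. The 40MHz modulation frequency and all function names are hypothetical.

```python
import math


def phase_and_modulation(i0, i90, i180, i270):
    """Recover phase (radians) and modulation depth from four phase-stepped samples.

    Assumes each sample follows I(theta) = dc * (1 + m * cos(theta - phase)).
    """
    dc = (i0 + i90 + i180 + i270) / 4.0
    in_phase = i0 - i180        # equals 2 * dc * m * cos(phase)
    quadrature = i90 - i270     # equals 2 * dc * m * sin(phase)
    phase = math.atan2(quadrature, in_phase)
    modulation = math.hypot(in_phase, quadrature) / (2.0 * dc)
    return phase, modulation


def lifetimes_s(phase, modulation, mod_freq_hz):
    """Phase and modulation lifetimes for an assumed single-exponential decay."""
    omega = 2.0 * math.pi * mod_freq_hz
    tau_phase = math.tan(phase) / omega
    tau_mod = math.sqrt(1.0 / modulation ** 2 - 1.0) / omega
    return tau_phase, tau_mod


def simulate_samples(tau_s, mod_freq_hz, dc=1000.0):
    """Ideal, noise-free pixel values at 0, 90, 180 and 270 degrees."""
    omega_tau = 2.0 * math.pi * mod_freq_hz * tau_s
    phase = math.atan(omega_tau)
    m = 1.0 / math.sqrt(1.0 + omega_tau ** 2)
    return tuple(dc * (1.0 + m * math.cos(math.radians(theta) - phase))
                 for theta in (0, 90, 180, 270))


if __name__ == "__main__":
    freq_hz = 40e6  # hypothetical modulation frequency
    samples = simulate_samples(tau_s=2.5e-9, mod_freq_hz=freq_hz)
    phase, modulation = phase_and_modulation(*samples)
    tau_phase, tau_mod = lifetimes_s(phase, modulation, freq_hz)
    print(f"phase {math.degrees(phase):.1f} deg, modulation {modulation:.3f}")
    print(f"lifetime from phase {tau_phase * 1e9:.2f}ns, "
          f"from modulation {tau_mod * 1e9:.2f}ns")
```

With a two-tap pixel, the 0 and 180 degree samples would come from the first double image and the 90 and 270 degree samples from the shifted second measurement. For a single-exponential decay the two lifetime estimates agree; a difference between them is often taken as a sign of a more complex decay.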

Elsewhere in life sciences, digital pathology for automated tissue analysis is now more widely accepted (see ‘Automated pathology’), while, in fluorescence imaging, there has been a push to move further out into the infrared, according to Mohindra – in the infrared there is less phototoxicity and a microscope can image deeper into tissue. There is still a limit to what a silicon sensor can image in the infrared, but he noted that ‘with the newer sCMOS cameras we see 50 per cent efficiency at 900nm, which has been quite an improvement.’

Studying coral reefs

The Bay of Biscay’s cold water coral habitats have become important biodiversity hotspots and home to many species. Research has recently been published by the French Research Institute for Exploitation of the Sea (IFREMER) to investigate the health of these ecosystems, and assess the damage being done by human activity.

The IFREMER team used adapted vision systems to survey 24 of the Bay’s canyons plus three locations between adjacent canyons. The images enabled them to identify 11 coral habitats, formed of 62 coral species, at depths between 50 and 1,000 metres.

Two methods to capture images were used: from a towed stills camera moving at 0.9m/s, and from a remotely operated vehicle (ROV) running a Sony FCB-H11 colour block camera, which delivers up to full HD images.

‘Light deteriorates quickly underwater, and while cameras such as the FCB-H11 can capture down to 1lx, there is little significant light found below 200m, and no sunlight beyond the twilight zone (200m to 1,000m),’ said Sony Image Sensing Solutions’ Marco Boldrini.

‘Lighting also needs to be carefully controlled, however, as at depth the presence of particles such as silt causes an effect similar to high-beam headlights in heavy rain,’ he continued. The research team highlighted that several images were discarded because of sediment clouds obscuring them.

According to Boldrini, this makes it very difficult to automate lighting control, and even in a more controlled system such as the ROV, an operator would ideally have the ability to calibrate lighting and exposure times to maximise images.

‘Water also has a particularly interesting effect on light,’ said Boldrini. ‘With a refractive index of 1.333, it shrinks the field of view by 25 per cent, and any camera chosen needs to take this into consideration.’ The Sony FCB-H11 colour block camera has a 50 degree horizontal viewing angle, making it suitable for this application.
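The 25 per cent figure can be reproduced with Snell’s law. The sketch below assumes the camera looks through a flat viewing port and ignores the thickness of the port itself; the article does not describe the housing, so this is an illustration rather than a description of the IFREMER set-up. The function names are hypothetical.

```python
import math


def fov_in_water_deg(fov_air_deg, n_water=1.333):
    """Full viewing angle in water behind a flat port, from Snell's law."""
    half_air = math.radians(fov_air_deg / 2.0)
    half_water = math.asin(math.sin(half_air) / n_water)
    return 2.0 * math.degrees(half_water)


def small_angle_reduction(n_water=1.333):
    """Fractional loss of viewing angle in the small-angle limit."""
    return 1.0 - 1.0 / n_water


if __name__ == "__main__":
    fov_air = 50.0  # horizontal viewing angle quoted for the FCB-H11
    fov_water = fov_in_water_deg(fov_air)
    print(f"{fov_air:.0f} degrees in air becomes {fov_water:.1f} degrees in water")
    print(f"reduction: {100.0 * (1.0 - fov_water / fov_air):.0f} per cent "
          f"(small-angle limit {100.0 * small_angle_reduction():.0f} per cent)")
```

Under these assumptions a 50 degree lens sees about 37 degrees in water, a reduction of roughly a quarter, in line with the figure Boldrini quotes.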

--

Automated pathology

Section of a kidney stained to show the vascular network. Credit: 3Scan and Teledyne Dalsa

Digital pathology is becoming more widely accepted as an alternative to the often laborious task of sectioning and staining tissue samples for analysis. The Knife Edge Scanning Microscope developed by San Francisco-based 3Scan is a fast, automated sectioning and imaging system that gives detailed, 3D images of tissue. It is able to slice approximately 3,600 sections per hour, or more than 28,000 per day, compared to 350 sections per day for a pathologist.

‘The KESM is able to simultaneously light and scan tissue samples, and because it produces many scans of the tissue, we can apply algorithms to the images and spatially index each pixel to build 3D images of the model,’ said Todd Huffman, 3Scan CEO and co-founder. The system is able to render 3D imagery based on micron-level scans, which gives faster and more accurate diagnosis, and in some cases, earlier detection and management of disease.

KESM stains tissue before cutting it. Sections are then imaged with Piranha CMOS and Piranha4 4K colour line scan cameras from Teledyne Dalsa. By combining sectioning and imaging, a large part of the traditional pathology workflow is automated.

The machine generates more than a terabyte of data per cubic centimetre. It is able to run quantitative analysis on 3D image stacks of whole mouse organs, for instance, which are used in research and pre-clinical pharmaceutical trials. This is extremely difficult, expensive and time-consuming with traditional manual techniques.

‘3Scan’s automated histology platform fills the gap between radiology and pathology by allowing large-volume high-throughput imaging of tissue and tissue-scale diseases. This imaging technology is essential if ever we want to be able to use the power of modern computing to improve pathology outcomes,’ said Megan Klimen, 3Scan COO and co-founder.