Machine vision companies believe their market is at a turning point and is moving into 3D. Interestingly, this transition from 2D image processing to 3D is being driven by forces outside traditional machine vision itself. It derives, they believe, from the growth of 3D technology in consumer markets, which has led to lower prices and improved performance.

One example of this trend will be evident during Vision 2014, to be held in Stuttgart between 4 and 6 November. At the event, the 21st Vision award, which is sponsored by Imaging and Machine Vision Europe, will be presented by publishing director, Warren Clark. One of the shortlisted entries for the €5,000 award is from Odos Imaging, for its time-of-flight 3D imaging camera that creates ‘machine vision with depth’ images. Odos is up against five other companies, chosen from 44 initial entries (more details on page 4).

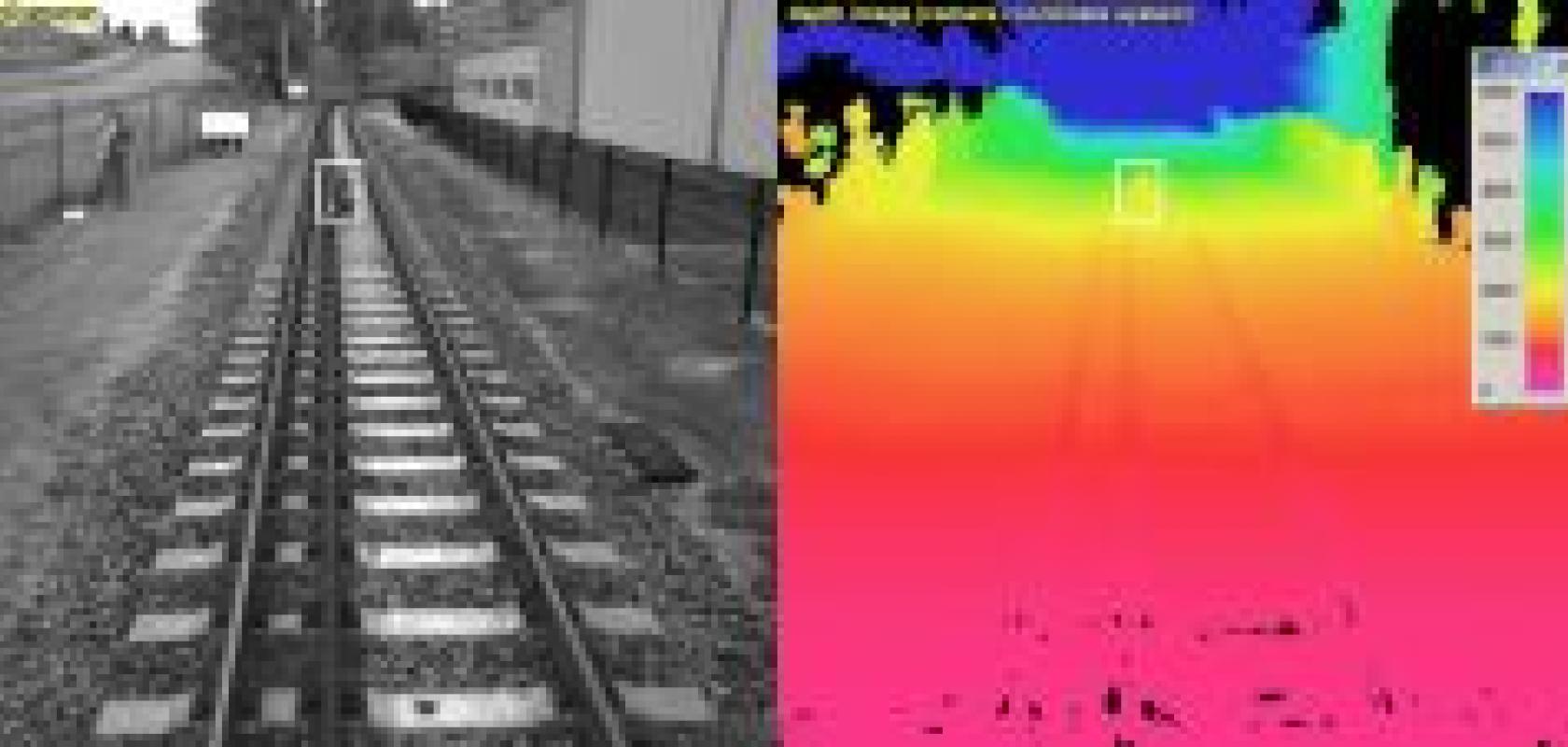

3D images offer a variety of benefits for industrial users when compared to 2D techniques. With the extra dimension, the volume of a package can be calculated, which is useful for logistics applications such as pallet or lorry loading. The distance between an item and the camera can also be measured, which helps with bin-picking operations, automated vehicles, and driver assistance systems.
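The volume calculation can be illustrated with a minimal Python sketch. It assumes an overhead camera, a flat floor at a known distance, and a constant floor area per pixel (an orthographic approximation); the function name `package_volume` and all values are hypothetical and are not taken from any vendor's software.

```python
def package_volume(depth_map, floor_distance, pixel_area):
    """Estimate the volume under an overhead depth map.

    depth_map: rows of camera-to-surface distances in metres.
    floor_distance: camera-to-floor distance in metres.
    pixel_area: floor area covered by one pixel in square metres
                (assumed constant across the image).
    """
    volume = 0.0
    for row in depth_map:
        for depth in row:
            # Height of the surface above the floor; clamp noise below the floor.
            height = max(floor_distance - depth, 0.0)
            volume += height * pixel_area
    return volume


# A box 0.5 m tall covering 2 x 2 pixels, surrounded by floor at 2.0 m.
depth_map = [
    [2.0, 2.0, 2.0, 2.0],
    [2.0, 1.5, 1.5, 2.0],
    [2.0, 1.5, 1.5, 2.0],
    [2.0, 2.0, 2.0, 2.0],
]
volume = package_volume(depth_map, floor_distance=2.0, pixel_area=0.01)
print(f"Estimated volume: {volume:.3f} cubic metres")
```

With these illustrative numbers the four raised pixels contribute roughly 0.02 cubic metres in total. A real system would also need to account for perspective and for sensor noise.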

To some extent, the increased interest in three dimensions is a development that the machine vision industry was not expecting, though it has welcomed the trend. Philip Dale, product manager of new business development at Basler, said: ‘We found that a lot of our existing “typical machine vision” customer base were very interested in adding depth to their current application. So for a camera which can provide both a 2D image for image processing in combination with depth (3D) data, the potential seemed limitless.’

Dale believes that 3D technology in consumer markets has driven advances in the technology: ‘This also has brought down overall costs of 3D imaging through economies of scale, as the technology reaches greater maturity. At the other end of the cycle, 2D imaging is almost at the end of its growth potential where greater cost savings are no longer possible and the performance potential is near its optimum.’

Dale pointed out that the extra dimension increases the amount of data that needs to be handled for a 3D image and, as a consequence, increases the importance of the software.

There are many different ways to obtain a 3D image; the main methods are laser profiling, fringe patterns, stereo vision and, as Odos has chosen, time of flight. According to Matrox Imaging’s processing software development manager, Arnaud Lina, it doesn’t matter which technique is being used from a software perspective. He said: ‘On one side, you have the technology to acquire the data; on the other side you have the software to analyse this data and extract the important information.’ Imaging hardware and processing software ‘are not necessarily coupled,’ he said. ‘They are two different subjects.’

Matrox Imaging Library (MIL) was originally developed for 2D image processing, but has been adapted to handle 3D images as well. To adapt the image handling software, the first step was to understand how the 3D data was acquired. Lina said the researchers had initially used data created by the laser profiling technique, but that it was relatively easy to make it suitable for the other methods. ‘The first step was to tackle one type of 3D data acquisition and going from this type to a software created 3D representation. We had to look at the different steps of extracting data from the grab of an image to the 3D data.’ The team set out to find a way of calibrating this information to translate the position of a point on the object to an x, y, z coordinate that could be used in the simulation.
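As a rough illustration of what translating an image point into an x, y, z coordinate can involve, the Python sketch below uses the generic pinhole camera model. It is not MIL's API or Matrox's calibration method; the function name and the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) are assumptions chosen for the example.

```python
def pixel_to_xyz(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with a known depth into camera coordinates.

    fx, fy: focal lengths in pixels (hypothetical calibration values).
    cx, cy: principal point in pixels (hypothetical calibration values).
    depth:  distance along the optical axis, in metres.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


# A pixel 100 columns right of the image centre, seen at 2 m.
point = pixel_to_xyz(u=420, v=240, depth=2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(point)
```

In practice the calibration step estimates these parameters (and lens distortion terms, omitted here) from images of a known target.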

He continued: ‘Today you can buy a camera that outputs the 3D data, but it is usually confined to a specific volume of measurement and accuracy. This means people often like to process the data away from the camera because the user needs a specific cost, volume, the bandwidth and speed, or resolution. This means you will rely on software such as MIL to extract the data, to calibrate, and to extract your 3D map; it gives you a lot more options for customisation.’

There are a variety of techniques used to obtain the three-dimensional data and, according to Dale, each has its merits. However, he observed that customers need to consider carefully which features they actually require of a system. He warned: ‘Often users end up with a system or camera that is wildly over spec for their application. This is a very common problem with vision systems and something we at Basler aim to eradicate with our line-up of products. At Basler we believe there is a place for all variants of 3D technology, as each has its own strengths and weaknesses which fit a variety of differing applications.’

Odos’ shortlisted system builds on the traditional time-of-flight method, which works by measuring the time taken for a pulse of light to travel from a light source to an object’s surface and back to a detector. This technique has been used extensively for non-contact distance measurement and can provide very quick, but typically single-point, results.

The camera works on the same principle, but captures a whole image in a single snapshot. Short and highly intense pulses of light illuminate the entire scene. The objects in the scene reflect the light, and these reflections are subsequently captured by a high-resolution image sensor. Each pixel within the image sensor array returns a value proportional to the round trip time-of-flight of the pulse of light and hence the distance to the particular imaged object. In this manner, the system returns a high-resolution range image of the scene. This, Odos said, is highly desirable for the machine vision industry and will help provide more useable results for users of 3D imaging.
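The relationship between round-trip time and distance can be sketched in a few lines of Python. This is the textbook formula (distance is half the round trip multiplied by the speed of light), not Odos' implementation; the function names and the sample timings are illustrative assumptions.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def round_trip_to_distance(round_trip_seconds):
    """Convert a round-trip time of flight into a one-way distance in metres."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def range_image(round_trip_times):
    """Convert a 2D grid of per-pixel round-trip times into a range image."""
    return [[round_trip_to_distance(t) for t in row] for row in round_trip_times]


# Hypothetical 2 x 2 sensor readout, round-trip times in seconds.
times = [
    [10e-9, 12e-9],
    [10e-9, 20e-9],
]
distances = range_image(times)
print(distances)
```

A 10 ns round trip corresponds to about 1.5 m, which shows why time-of-flight sensors need very fine timing resolution to resolve small depth differences.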

Matrox Imaging’s Lina said, from a data processing perspective: ‘Time of flight is one of the fastest of the comparable systems, because it outputs a depth map directly, but the drawback is spatial resolution. If you do not need high resolution and you can use time of flight, you are very close to a traditional camera in terms of acquisition, number of frame-grabs, and speeds.’ According to Lina, for ‘applications that do require more accurate readings, the laser-based scanning systems are hard to beat. You just keep grabbing profiles until you have the depth of detail you need.’

Passing a laser bar over the object and recording the reflected light allows a model to be built up. Every scan creates a new profile that can be combined with others to create a more accurate representation. This provides the highest accuracy of the methods discussed, but can prove time consuming for real-world applications.
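How individual profiles are combined into a model can be sketched as follows. The Python example assumes a simplified triangulation geometry: a camera looking straight down and a laser sheet inclined at a known angle to the vertical, so that a surface of height h shifts the laser line sideways by h multiplied by the tangent of that angle. The function names, the geometry, and the numbers are assumptions for illustration, not any vendor's algorithm.

```python
import math


def profile_heights(line_shift_px, mm_per_pixel, laser_angle_deg):
    """Convert the laser line's sideways shift (in pixels) into heights in mm.

    laser_angle_deg: angle between the laser sheet and the vertical camera axis.
    """
    tan_angle = math.tan(math.radians(laser_angle_deg))
    return [shift * mm_per_pixel / tan_angle for shift in line_shift_px]


def build_height_map(profiles_px, mm_per_pixel, laser_angle_deg):
    """Stack successive profiles (one per scan position) into a height map."""
    return [profile_heights(p, mm_per_pixel, laser_angle_deg) for p in profiles_px]


# Three hypothetical scans across a small raised step.
profiles = [
    [0, 0, 0, 0],
    [0, 10, 10, 0],
    [0, 0, 0, 0],
]
height_map = build_height_map(profiles, mm_per_pixel=0.1, laser_angle_deg=45.0)
print(height_map)
```

Each additional scan adds one row to the height map, which is why finer detail along the scan direction costs more acquisition time.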

The time taken to collect an image depends, Lina explained, on the size of the object and the resolution required. ‘Laser profiling requires potentially thousands of profiles that have to be combined. However, time of flight or projected pattern techniques require a lot less frame grabs, which are then put through an algorithm. The burden is put on this algorithm, not on the camera system, as there is a lot less information to handle.’

The speed at which the object is moving through the field of view is also part of the bandwidth equation. Lina said: ‘If you don’t want to slow down a conveyor belt on which an object is moving, you may need to use a very high speed acquisition. You can add multiple scanners, but then you have the challenge of stitching the data. This increases the complexity of the system as it requires multiple calibrations tied together to maintain accuracy.’
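The link between conveyor speed and acquisition rate comes down to simple arithmetic, sketched below in Python. The function names, the belt speed, the profile spacing, and the data sizes are hypothetical values chosen for illustration.

```python
def required_profile_rate(belt_speed_mm_s, profile_spacing_mm):
    """Profiles per second needed to sample the object at the given spacing."""
    return belt_speed_mm_s / profile_spacing_mm


def data_rate_bytes_s(profile_rate_hz, points_per_profile, bytes_per_point):
    """Raw data rate produced by the scanner, in bytes per second."""
    return profile_rate_hz * points_per_profile * bytes_per_point


# A belt moving at 1 m/s, sampled every 0.5 mm along the direction of travel.
rate = required_profile_rate(belt_speed_mm_s=1000.0, profile_spacing_mm=0.5)
bandwidth = data_rate_bytes_s(rate, points_per_profile=1280, bytes_per_point=2)
print(f"{rate:.0f} profiles/s, {bandwidth / 1e6:.2f} MB/s")
```

Halving the spacing or doubling the belt speed doubles both figures, which is the trade-off that pushes some users towards multiple scanners.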

He continued: ‘The main reason for using multiple scanners is not to create more accuracy or more data points, but instead to minimise the occlusion of the laser bar or camera – or if you need a top and bottom view, to measure an entire object.’

Lina’s colleague, and product line manager at Matrox, Pierantonio Boriero, said: ‘The use of multiple 3D profiling systems together is something we are going to address very shortly in an upcoming update of the MIL software. This will allow people to use multiple systems together in a seamless way.’

Multiple cameras are at the heart of IDS’ Ensenso range, according to Steve Hearn, the company’s UK sales director. He explained: ‘The benefit of stereo vision is that you can build a 3D image almost immediately. This is used more typically where you need a number of frames per second on a real-time production line. Calibration is also much less arduous than for other 3D techniques as the cameras and lenses in the system are pre-calibrated and external calibration is a simple process of moving a calibration plate. However, there are limitations to the accuracy that this method can achieve today but multiple stereo systems can be combined to increase the capability.’

He continued: ‘Bin picking and robotics are a real strength of stereo systems. The accuracy required can be fairly coarse initially, but the accuracy gets better as you get closer so these applications are a natural beneficiary of the method.’

Stereo vision operates using two cameras looking from different angles and analysing the difference in the scene before them. However, Hearn explained: ‘If you are looking at a plain object, you aren’t going to be able to get much information because there is no real detail to work on. This is why our cameras use a random projected pattern (RPP) to create a texture to provide the information. You look at the scene from the two angles, from the two cameras, and calculate disparity information from the same points in the scene.’
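The disparity calculation follows standard stereo geometry, which the Python sketch below illustrates. It assumes two rectified cameras with a known focal length (in pixels) and baseline; the function names and values are hypothetical and this is not IDS' implementation. The second function shows the approximate depth change corresponding to a one-pixel change in disparity, which grows with the square of the distance.

```python
def disparity_to_depth(disparity_px, focal_length_px, baseline_m):
    """Depth of a point from its disparity between two rectified cameras."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


def depth_step_per_pixel(depth_m, focal_length_px, baseline_m):
    """Approximate depth change caused by a one-pixel change in disparity."""
    return depth_m ** 2 / (focal_length_px * baseline_m)


# Hypothetical rig: 1000 px focal length, 10 cm baseline.
depth = disparity_to_depth(disparity_px=50.0, focal_length_px=1000.0, baseline_m=0.1)
step_far = depth_step_per_pixel(2.0, focal_length_px=1000.0, baseline_m=0.1)
step_near = depth_step_per_pixel(1.0, focal_length_px=1000.0, baseline_m=0.1)
print(depth, step_far, step_near)
```

Under these assumptions the depth step at 1 m is a quarter of that at 2 m, consistent with the observation that stereo accuracy improves as the camera gets closer to the object.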

Tordivel offers a similar system, the Scorpion 3D Stinger camera, which also relies on two cameras and an RPP. The company specialises in applying the algorithms that make sense of the visual data and generate a 3D model from the two or more cameras. Thor Vollset, founder and CEO of Tordivel, said the whole system is dependent on reliable, high-quality hardware. Vollset explained: ‘To be able to locate precisely in 3D, you need to find the sub-pixel resolution. If you don’t have sub-pixel resolution, you have to place the centre. We can locate the centre within one tenth of a pixel – so we are splitting pixels into 10 parts. To do this, you need good calibration software plus special image processing to take advantage of the calibration.’
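One common way to locate a feature to a fraction of a pixel is an intensity-weighted centroid, sketched below in Python. This is a generic technique shown for illustration only; the article does not say which method Tordivel uses, and the function name and sample intensities are assumptions.

```python
def subpixel_centre(intensities):
    """Intensity-weighted centroid of a 1D intensity profile, in pixel units."""
    total = sum(intensities)
    if total == 0:
        raise ValueError("profile has no signal")
    return sum(index * value for index, value in enumerate(intensities)) / total


# A symmetric spot is centred exactly on pixel 2.
symmetric = subpixel_centre([0, 10, 30, 10, 0])

# A slightly lopsided spot pulls the estimate a fraction of a pixel to the right.
lopsided = subpixel_centre([0, 10, 30, 20, 0])
print(symmetric, lopsided)
```

Because the estimate is a weighted average over several pixels, it can resolve positions between pixel centres, provided the image is well exposed and the calibration is good.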

He said the Scorpion system uses multiple cameras from Sony to increase the area and the depth of view, and allows for dense stereo vision. The company said that this enables the camera to cover the whole of a typical warehouse bin, something that was not possible with previous, lower-resolution cameras.

When asked what the future for 3D imaging could hold, Basler’s Dale summarised: ‘We do not expect 3D imaging to eradicate 2D imaging; there will always be a need for both methods depending on the application. But we certainly believe 3D imaging will take a firm foothold in the machine vision world and we hope to be able to play an important part of it.’