The online shopping market continues to grow rapidly, which in turn has led to a rise in automated warehouses. To use the obvious example, Amazon now has nearly 200 operating fulfilment centres globally, spanning more than 150 million square feet, where employees work alongside the retail giant’s robots to pick, pack and ship customer orders to the tune of millions of items per year.

This would not be possible without machine vision, and it is not just the hyperscale organisations that benefit from this kind of technology in their warehouses. German electrical supply wholesaler Obeta is now running a robotic station to pick and sort components at its facility in Berlin. The wholesaler partnered with AI robotics company Covariant and logistics technology firm Knapp to bring the system into production.

Managing expectations

Michael Pultke, head of logistics at Obeta, explained: ‘Customer expectations for fast, affordable package delivery have never been higher. To stay competitive, we need to modernise our operations and keep order processing and delivery running quickly and smoothly. The Covariant-powered robot is an integral part of our live operations, exceeding our performance requirements and adapting quickly to change. AI robotics is a foundational part of our future strategy.’

Covariant was founded in 2017 by Rocky Duan, Tianhao Zhang, Pieter Abbeel and Peter Chen. Abbeel is a professor at the Department of Electrical Engineering and Computer Sciences at Berkeley Engineering, California, the engineering school from which the other three founders all graduated.

Abbeel’s lab at UC Berkeley has enjoyed a number of recent breakthroughs in robot learning, including a robot that organises laundry, robots that learn (simulated) locomotion, and robots that learn vision-based manipulation from trial and error and human VR teleoperation.

The firm is backed by lead investor Amplify Partners, plus some big names in AI, such as Jeff Dean, Geoffrey Hinton, Yann LeCun and Raquel Urtasun. To date, it has raised $27m in funds, and the last two-and-a-half years have been spent researching, developing, testing and deploying its AI technology at Obeta, as well as facilities across North America and Europe.

Brainwave

Based on the team’s background and research in deep imitation learning, deep reinforcement learning and meta-learning, Covariant’s vision is the Covariant Brain – a universal AI system for robots designed to be applied to any use case or customer environment. The robots learn general abilities, such as robust 3D vision, physical properties of objects, few-shot learning and real-time motion planning. This enables them to learn to manipulate objects without being told what to do.

As with all new technology, it is reaching the commercial stage of continuous production that often proves to be the challenge. This is because there is an almost unlimited number of scenarios that a robot could encounter, so it is impossible to program for each one. To operate and add value in customer environments, a robot must be able to see, understand, make decisions, learn from mistakes and adapt to change on its own.

The Covariant Brain is designed to enable the robots to do all of these things continuously in real-world environments.

Photoneo's MotionCam-3D camera can inspect objects moving at 40m/s

Abbeel said: ‘Even though we are just getting started, the systems we have deployed in Europe and North America are already learning from one another and improving every day.’

Raquel Urtasun, chief scientist at Uber Advanced Technologies Group, and a Covariant investor, added: ‘Developing safe and reliable robotic technologies to address challenges in the real world is extremely hard. Whether you’re talking about self-driving cars or warehouse operations, robots encounter an endless number of unexpected scenarios. Covariant has demonstrated exceptional progress on enabling robots to fill orders in warehouses, which could unlock many other robotic manipulation tasks in other industries.’

Take your pick

One of the biggest challenges when it comes to automation in warehouses, said Mark Williamson, group marketing director at Stemmer Imaging, is bin picking. Programming a robot to pick up random objects from boxes relieves people of this task. The issue in logistics, said Williamson, is that robots are asked to handle a far wider range of items than is the case in a factory, which makes the imaging and gripping aspects a lot more complicated.

While a number of companies produce general bin picking tools, Williamson feels they all typically target one kind of imaging technique. ‘They might use a low-cost stereo camera; they might use a laser profiling system, or a fringe projection kind of system,’ he explained. Each technique has advantages and disadvantages, but while one 3D method might work well in a certain scenario and with certain equipment, it might not work for a different application.

Stemmer Imaging’s solution to this dilemma came following the acquisition of Infaimon, a provider of software and hardware for machine vision and robotics. The Infaimon InPicker 3D bin picking system uses a variety of 3D imaging techniques, such as passive stereo, active stereo or laser triangulation, to recognise and determine the position of objects randomly piled up in a container. It also interfaces directly with a variety of robots.

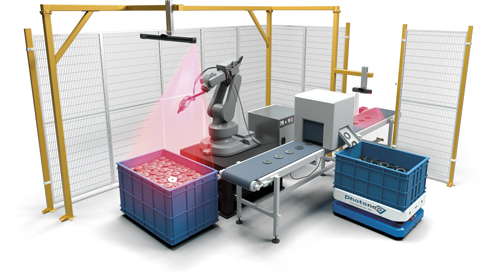

Robots learning general abilities such as 3D vision at AI robotics firm Covariant

‘The unique thing about the InPicker,’ said Williamson, ‘is that it is robot agnostic, so it doesn’t matter what make of robot it is. It is also camera agnostic.’ An application using InPicker, he explained, becomes bespoke without the customer having to develop everything from scratch, which would traditionally be the case when building a bin picking system.

Get a grip

InPicker requires a CAD model of the gripper and a CAD model of the item that the robot will handle. Users can also upload a CAD model of the box that holds the objects.

This is important, said Williamson, because if the robot does not know the shape of the box, it will not know how to approach the box to pick up products from it – if the box has a high sidewall, for example, the arm could try to pick an object from the wrong side.

‘That’s a key thing,’ said Williamson, ‘when you start to do proper bin picking, and certainly for things like warehousing, where they have these plastic boxes with a pile of bits in them.’

Warehouse robots might also use multiple grippers, and potentially different vision techniques. In this scenario, said Williamson, the first step is to calibrate the robot’s co-ordinate space to that of the InPicker. The InPicker then sends the robot the pick co-ordinates and identifies which gripper is needed to pick up each object.
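The calibration step Williamson describes can be pictured as finding a fixed transform between the vision system's co-ordinate frame and the robot's. The following is a minimal sketch of that idea, not InPicker's actual interface: the function names, the single rotation about the vertical axis and the numeric values are all assumptions made for illustration.

```python
import math


def make_transform(yaw_deg, translation):
    """Build a 4x4 homogeneous transform (hypothetical calibration result):
    a rotation about the Z axis by yaw_deg, followed by a translation in metres."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def camera_to_robot(transform, point_cam):
    """Map a 3D point from the camera frame into the robot frame."""
    x, y, z = point_cam
    p = (x, y, z, 1.0)  # homogeneous co-ordinates
    # Only the first three rows are needed to recover x, y and z.
    return tuple(sum(row[i] * p[i] for i in range(4)) for row in transform[:3])


# Assumed values: camera rotated 90 degrees about Z and mounted
# 0.5m along X and 1.2m above the robot base.
T = make_transform(90.0, (0.5, 0.0, 1.2))

# A pick point reported by the vision system, in camera co-ordinates.
pick_robot = camera_to_robot(T, (0.1, 0.2, -0.8))
print(pick_robot)
```

In a real cell the transform would come from a calibration routine rather than being typed in, and it would usually include a full 3D rotation; the point here is only that every position the vision system reports passes through the same mapping before the robot moves.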

Williamson explained: ‘Using the 3D matching technology, it looks at all the shapes of the products in the box and says: “I can pick up 30 of these and I see this particular one has the minimum inclusion of other products on top of it”.’
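The selection logic Williamson outlines, choosing the item least covered by other products, might look something like the sketch below. It is an illustration only: the `Candidate` fields, the `covered_fraction` score and the 0.5 threshold are hypothetical and are not taken from InPicker.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    item_id: str
    covered_fraction: float  # assumed score: share of the item hidden by others, 0.0 to 1.0
    gripper: str             # gripper assumed to suit this item


def choose_pick(candidates, max_covered=0.5):
    """Return the pickable candidate with the least covering, or None if none qualify."""
    pickable = [c for c in candidates if c.covered_fraction <= max_covered]
    if not pickable:
        return None
    return min(pickable, key=lambda c: c.covered_fraction)


# Hypothetical matches found in one box.
candidates = [
    Candidate("relay-A", 0.40, "suction"),
    Candidate("relay-B", 0.05, "suction"),
    Candidate("cable-C", 0.75, "two-finger"),
]

best = choose_pick(candidates)
if best is not None:
    print(f"Pick {best.item_id} with the {best.gripper} gripper")
```

Returning `None` when nothing falls under the threshold gives the surrounding system a clear signal to rescan the box or shake it up, rather than attempting a pick that is likely to fail.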

Picking at speed

3D imaging firm Photoneo is close to launching its MotionCam-3D camera, which won the 2018 Vision Award at the Vision trade fair in Stuttgart, and which can be used for bin picking.

MotionCam-3D is based on the company’s parallel structured light technology, implemented on a custom CMOS image sensor. It can inspect objects moving as fast as 40m/s, and can be used for warehouse applications such as autonomous delivery systems, object sorting and bin picking. The precision of the camera is designed to allow robots to handle smaller and more sensitive objects in palletising, de-palletising and machine tending applications, as well as in quality control and metrology.

Bin picking applications require robust 3D scanning methods. Credit: Photoneo

Photoneo has been selling engineering samples of the camera, but plans to release an official version before the summer, according to the firm’s CTO, Tomas Kovacovsky.

Last summer, Photoneo developed an autonomous mobile robot designed for transporting materials in factories and other industrial facilities. It relies on two 270° laser scanners and two ultrasonic sensors, while its vision is courtesy of two 3D cameras. It can carry up to 100kg direct weight, pull up to 300kg, supports dual-way operation with an interchangeable back and front, and has a maximum speed of 1.125m/s in both directions.

This year, a new version of the robot will be launched. Kovacovsky explained: ‘The new one we are going to release this year will be called Phollower 200. It will have new features and is designed for transport of materials in larger factory environments.’

Looking to the future, Williamson believes that the market for imaging technology to support automated warehousing will only continue to grow, particularly when it comes to bin picking. ‘Obviously that is very much an exploding industry,’ he explained. ‘InPicker started around three to four years ago, and we are working on second generation products, so it’s evolving all the time.’