Recent estimates from the United Nations predict the world will need around 60 per cent more food by 2050. Precision farming will be vital in meeting this demand, with machine vision key to its success.

This was a talking point at last year’s Knowledge Transfer Network (KTN) workshop, which gathered representatives from the agri-food and imaging communities to look at opportunities for innovation using emerging imaging technologies. According to Professor Simon Pearson, from the University of Lincoln, who chaired the workshop, ‘imaging technology is the backbone of precision agriculture, as DNA is to bioscience’.

David Telford, knowledge transfer manager for agri-food at KTN, said: ‘Precision agriculture is a real growth area. It does so much good; it helps farmers make money, and helps improve the environmental impact of agriculture – it’s good in general.’

Cattle welfare

To meet this demand in the UK, the government has committed £90 million in new funding to help transform food production. The programme is led by Innovate UK and the Biotechnology and Biological Sciences Research Council, under the government’s Industrial Strategy Challenge Fund. Telford said that the project aims to broker collaborations, bringing together people from different sectors who might not usually meet.

Telford explained that the technologies that can be applied for agriculture can be split into crop-based production systems and livestock-based production systems. ‘Machine vision has a good application in both, so there’s been quite a bit of interest in recent years,’ he said.

For the livestock systems, the Centre for Machine Vision at the Bristol Robotics Laboratory – under the University of the West of England (UWE Bristol) and the University of Bristol – has been working with dairy consultancy, Kingshay, to develop imaging technology to monitor dairy cows when they leave the milking parlour, in order to improve their welfare and productivity. ‘The Centre for Machine Vision works in lots of different areas,’ stated Telford, ‘but what they have learned in some of these other areas, they are applying in agriculture.’

The first stage of the cattle project, a two-year feasibility study funded by an Innovate UK grant, is complete. Melvyn Smith, director at the centre, said: ‘The system on the farm replicates what herdsmen would do, which is regularly monitor the cows to see whether they are gaining or losing weight, and if they are in calf. It also looks for lameness. We think this is the only system that can do these things for a moving animal.’

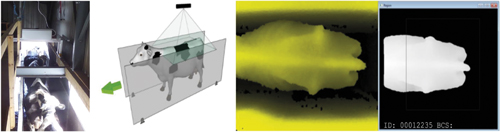

The system uses a 3D camera, which looks down at the animal as it walks underneath, so the cow doesn’t need to be stationary or held in a pen. The camera measures the animal’s volume and, by assuming a density, the system gives the farmer an estimate of its weight. It then scores the animal’s body condition on a scale spanning ‘very thin and feeble’ to ‘very fat’. The system is currently in situ on three farms.

Some of the 3D techniques were originally developed in manufacturing applications; the Centre for Machine Vision first developed one imaging method to characterise the size and shape of aggregate particles in tarmac or cement. Working in 3D means the system can isolate the animal easily from background objects in the image, which can be difficult on a busy farm.
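As a rough illustration of the volume-and-density idea, and of how depth data separates the animal from the floor, the sketch below sums the height of every pixel that stands well above the ground. It is not the centre's method: the function name, the height threshold and the density value are all assumptions, and because an overhead camera only sees the animal's back, the density would have to be an effective figure calibrated against animals of known weight.

```python
def estimate_weight_kg(depth_m, floor_depth_m, pixel_area_m2,
                       density_kg_m3, min_height_m=0.5):
    """Estimate weight from an overhead depth image.

    depth_m is a list of rows, each a list of camera-to-surface
    distances in metres. All parameter names are hypothetical.
    """
    volume_m3 = 0.0
    for row in depth_m:
        for distance in row:
            # Height of the visible surface above the floor.
            height = floor_depth_m - distance
            # Pixels near floor level are treated as background.
            if height >= min_height_m:
                volume_m3 += height * pixel_area_m2
    # Assumed (effective) density converts volume to weight.
    return volume_m3 * density_kg_m3


# Tiny made-up depth image: two 'animal' pixels, two background pixels.
depth = [[1.5, 1.5],
         [3.0, 2.9]]
weight = estimate_weight_kg(depth, floor_depth_m=3.0,
                            pixel_area_m2=0.01, density_kg_m3=300.0)
```

Thresholding on height rather than colour is what makes the background easy to discard: a muddy floor and a muddy cow may look alike in a 2D image, but they sit at very different distances from the camera.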

Next steps

The project is entering a new phase, which aims to improve on the original prototype technology. ‘Lameness is the most challenging criteria, because animals tend to hide it,’ explained Smith. ‘The beauty of the camera is there’s nobody there and it’s just looking down. We want to know [signs of lameness] in order to call the vet in a timely way. As we develop the system further, we want to improve the lameness side of it.’

Another objective under the next phase, explained Smith, is to make better use of the data. ‘What you really want to do is link with milk yield, calving and feed consumption. This is where precision comes in, because you have a closed loop system where you can, for instance, control the feed to get the animal to the optimum condition for calving. That’s where we are now.’ Once fully developed, Kingshay anticipates the potential for multi-million-pound sales for the technology.

The camera is positioned above the cows, providing a raw depth image of the moving animal. Credit: Bristol Robotics Laboratory

Identifying weeds

The centre has also been applying machine vision techniques to farming crops, looking specifically at improving the efficiency of weeding. Another feasibility study funded by Innovate UK, the GrassVision project, is run in partnership with agriculture specialist, Soil Essentials. ‘The argument,’ explained Smith, ‘is that pasture is a crop. You use it to create feed for animals, for example, and weeds like dock leaf, ragwort and nettles are a huge problem. But some, like clover, are good. We want to be able to detect these.’

The motivation behind the project is to prevent over-spraying of herbicide. A camera is positioned on the back of a tractor to identify any weeds as it drives over the land. The tractor also has a device similar to an inkjet printer, with nozzles that fire weed killer just on the weed itself – ideally, on the growing part of the plant – rather than the grass around it.

Researchers considered a number of conventional machine vision techniques that isolate objects, but the problem was replicating the results from the lab – or the centre’s garden – on the farm. Smith explained: ‘The solution – and this has just got funding after the two-year feasibility study – is to use deep learning. We trained the system on [images of] what the weeds looked like in different conditions, times of year, times of day, and growth stages of both weed and grass. [The algorithm] can then work quickly and accurately to spot a weed, and the difference between good and bad plants. We’ve been field testing in Scotland and it seems to be working robustly. The next stage is taking that from a working demonstrator to something we can sell.’

Tom, Dick and Harry

Another recipient of funding through Innovate UK is start-up The Small Robot Company, which was founded by a group of farmers, engineers, scientists and service designers in 2017 to build technology to make farming profitable, more efficient and more environmentally friendly. This technology builds on 15 years of research by Professor Simon Blackmore, a precision farming expert at Harper Adams University, and has been developed alongside partner company, artificial intelligence specialist, Cosmonio. The three small robots – Tom, Dick and Harry – are designed to ‘seed, feed and weed’ arable crops autonomously.

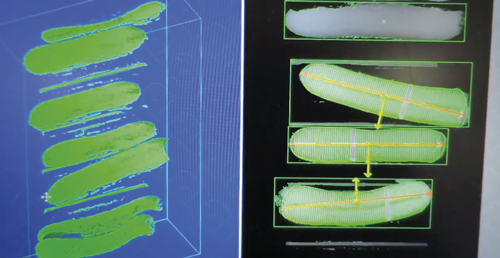

Sortiflex provides 3D information about each cucumber and inspects, grades, sorts, and packs them automatically. Credit: Sortiflex

Monitoring robot, Tom, is already developed and currently in field trials on 20 farms across the UK. Sarra Mander, CMO at the Small Robot Company, said: ‘We developed [Tom] in-house and are currently using conventional cameras, but we have trialled hyperspectral infrared cameras. You can see things like phosphate and nitrate levels, and plant health with those, so there are some interesting potential opportunities. The first phase of the field trials focuses on building up a database to train the AI.’

This involves Tom scanning an emerging wheat crop, from which the data will be used to train an AI system called Wilma. Following intensive training using last year’s data, Wilma can already distinguish wheat from anything classed as ‘not wheat’.

The next step will enhance the system to recognise weeds and deliver a weed map, at which point Wilma can start to recommend remedial action.

The company is currently developing digital weeding and spraying robot, Dick, which will use machine vision to differentiate between weeds and crops, and then kill the weeds using chemicals, and apply fertiliser precisely to the roots of the crops. An early prototype of this is expected towards the end of the year. Harry, a precision drilling and planting robot, will follow later, by early 2020.

Small Robot recently secured £1.2 million in additional funding via the Crowdcube platform, and The John Lewis Partnership is undertaking a three-year trial with the robots at a farm in Leckford, Hampshire.

Picking cucumbers

The end of a crop’s lifecycle can also benefit from a technological boost, as demonstrated by Crux Agribotics, which was founded as a sister company of Beltech, under the One of a Kind Technologies group. The company has been developing a cucumber harvesting robot, which is at the proof-of-concept stage, and the group is now rolling out a robotic solution for grading, sorting and packaging bulk-harvested products, in partnership with Christiaens.

The harvesting robot works by scanning cucumber plants in the greenhouse using 3D laser triangulation, which projects a straight laser line and sweeps it in a linear direction across the field of view of a camera placed at an angle to the laser. The camera picks up any deformations in the line, from which the system recognises the top and the bottom of the fruit. The algorithms then determine whether the fruit meets the harvesting criteria, depending on size and shape.
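The geometry behind laser triangulation can be sketched in a few lines. This is a simplified textbook model rather than Crux Agribotics' implementation: it assumes the camera looks straight at a flat reference plane, the laser sheet is tilted by a known angle from the camera's viewing direction, and a raised surface shifts the line towards smaller row numbers in the image. Under those assumptions a surface at height h shifts the line by h·tan(angle), so the height is the shift divided by tan(angle). All names and values are hypothetical.

```python
import math


def heights_mm(line_rows_px, baseline_row_px, mm_per_px, laser_angle_deg):
    """Convert the laser line's shift in each image column into a height."""
    tan_angle = math.tan(math.radians(laser_angle_deg))
    return [(baseline_row_px - row) * mm_per_px / tan_angle
            for row in line_rows_px]


def object_extent(heights, min_height_mm):
    """Return (first, last) column where the surface rises above a threshold."""
    raised = [i for i, h in enumerate(heights) if h >= min_height_mm]
    return (raised[0], raised[-1]) if raised else None


# Row position of the laser line in six image columns (made-up values).
profile = heights_mm([100, 100, 90, 90, 90, 100],
                     baseline_row_px=100, mm_per_px=0.5, laser_angle_deg=45.0)
ends = object_extent(profile, min_height_mm=2.0)
```

Here `object_extent` stands in for finding the two ends of a fruit along one laser line; sweeping the line across the scene and stacking the profiles would build up the 3D point cloud.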

Ron van Dooren, group marketing at One of a Kind Technologies, explained: ‘Cucumbers don’t grow according to rules. Leaves and branches are often in the way and we don’t want to damage them, as that would mean the end of the plant harvesting cycle. From the raw image’s 3D point cloud, we determine the shapes of cucumbers that meet harvesting criteria. Then we tell the robot to fetch what is on the cart, without causing damage. We calculate the best possible approach paths and take the best one given the circumstances.

‘Length is one of the main drivers because if the length is OK, the product is OK,’ van Dooren continued. ‘We know where the top is; it’s always hanging down, it never hangs up. We can manoeuvre the robot and it also has a fine-tuning camera because the plant moves.’

A concept image of weeding robot, Dick, which will use machine vision to differentiate between weeds and crops. Credit: Small Robot Company

One of the benefits for growers, he said, is that the robot can also predict what will be harvested in two to three days. With that information the grower can sell produce more effectively. ‘Price is always related to demand,’ he said. ‘If you can provide now for demand in two days, you can get a better price.’ Van Dooren expects the robot to be commercially available in the next two years.

Already available, in partnership with Christiaens, is Sortiflex, which inspects, grades, sorts and packs automatically. It uses the Beltech vision system to provide 3D information about each cucumber, such as length, position and orientation. Each product is then graded and assigned a destination which is digitally addressed to it. Further on in the line, each fruit is picked up by one of six delta-picking robots, oriented in the right way, and placed in the right destination and position. ‘It’s a logical step,’ said van Dooren. ‘People are still required … but it can make the work more interesting for humans, and the rest can be automated.’
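The grade-then-route step could look something like the sketch below, which takes the three measurements mentioned (length, position and orientation), assigns a grade by length and attaches a destination for the picking robot. The length bands, destination names and data structure are invented for illustration and are not taken from Sortiflex.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    length_mm: float   # measured length of the cucumber
    x_mm: float        # position across the belt
    angle_deg: float   # orientation on the belt

# Hypothetical length bands: (minimum length, grade, destination).
GRADE_BANDS = [
    (350.0, "long", "crate_A"),
    (300.0, "medium", "crate_B"),
    (250.0, "short", "crate_C"),
]


def grade(m):
    """Return (grade, destination) for the first band the length satisfies."""
    for min_length, name, destination in GRADE_BANDS:
        if m.length_mm >= min_length:
            return name, destination
    return "reject", "reject_bin"


def pick_instruction(m):
    """Bundle what a picking robot would need for one fruit."""
    name, destination = grade(m)
    return {
        "grade": name,
        "destination": destination,
        "pick_x_mm": m.x_mm,
        # Rotate by the opposite angle so the fruit ends up aligned.
        "rotate_deg": -m.angle_deg,
    }


instruction = pick_instruction(Measurement(length_mm=320.0, x_mm=120.0,
                                           angle_deg=30.0))
```

Attaching the destination to each fruit's record, rather than to a position on the belt, mirrors the idea of a destination being ‘digitally addressed’ to the product.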

Top image: monitoring robot, Tom, is already developed and currently in field trials on 20 farms across the UK. Credit: Small Robot Company