Standing for Generic Data Container, GenDC defines a data description that is independent of the transport medium and allows devices to send or receive almost any form of imaging data to and from a host system, using a uniform and standard format. GenDC completes the GenICam (Generic Interface for Cameras) family of modules that control imaging devices and exchange data between them.

GenDC defines a generic, self-described imaging data container that is independent of the way it is transported or stored. It also defines standard mechanisms, shared by the transport layer (TL) protocols of the various camera interface standards – such as GigE Vision, USB3 Vision, CoaXPress, and others – for transporting it. As a result, a TL needs to define only how to transport the data container, without needing to know its content or format. This separation allows data types to be added to the GenDC standard without modifying the individual TL specifications. All TL protocols implemented by common machine vision camera interface standards will support GenDC, and each of them already has a proposal for how to handle it. A quick and general adoption of GenDC is therefore expected, thanks in good part to the common standardisation efforts of the machine vision industry around the globe.
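The separation between the container and its transport can be illustrated with a minimal Python sketch. The two transport classes below are hypothetical stand-ins, not implementations of GigE Vision, USB3 Vision or CoaXPress: one fragments the payload into sequence-numbered packets, the other sends a single length-prefixed block. Neither inspects the bytes it carries, so the same container arrives unchanged by either route.

```python
from typing import Iterable, List


class PacketTransport:
    """Hypothetical packet-based TL: fixed-size chunks, each prefixed with
    a 4-byte big-endian sequence number."""

    def __init__(self, packet_size: int) -> None:
        if packet_size <= 0:
            raise ValueError("packet_size must be positive")
        self.packet_size = packet_size

    def send(self, payload: bytes) -> List[bytes]:
        return [
            seq.to_bytes(4, "big") + payload[start:start + self.packet_size]
            for seq, start in enumerate(range(0, len(payload), self.packet_size))
        ]

    def receive(self, packets: Iterable[bytes]) -> bytes:
        # Packets may arrive out of order: sort by sequence number, strip headers.
        ordered = sorted(packets, key=lambda p: int.from_bytes(p[:4], "big"))
        return b"".join(p[4:] for p in ordered)


class StreamTransport:
    """Hypothetical stream-based TL: one block prefixed with an 8-byte length."""

    def send(self, payload: bytes) -> List[bytes]:
        return [len(payload).to_bytes(8, "big") + payload]

    def receive(self, packets: Iterable[bytes]) -> bytes:
        block = b"".join(packets)
        length = int.from_bytes(block[:8], "big")
        return block[8:8 + length]


# Usage: both TLs deliver the same opaque container, byte for byte.
container = bytes(range(100))  # stand-in for an encoded data container
for tl in (PacketTransport(packet_size=16), StreamTransport()):
    packets = tl.send(container)
    assert tl.receive(reversed(packets)) == container
```

Because the transports only move bytes, adding a new payload type changes nothing in either class.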

Why GenDC?

Similar to other machine vision standardisation projects, the main objectives of GenDC are to avoid duplicated work, simplify common tasks, and establish a common definition across manufacturers. All types of cameras and devices can use GenDC as an opaque and portable data blob – a chunk of binary data – that describes the images or data to be transported or processed. This makes porting an imaging device from one transport medium to another a simple task, because the data description and encoding are always the same and can be handled by a shared module, separate from the transmission protocol implementation. Likewise, on the reception side, the common GenDC data description format decouples data reception from data interpretation, making the design of a receiver modular, simple and uniform.

Today, most TLs, which are part of the existing camera interface standards, support only basic 2D images, such as monochrome and packed colour. However, these TLs are all looking to support new, more complex payload types such as planar colour images, compressed data, 3D data, multi-spectral images, metadata and processing results. Until now, for every new payload type, each TL technical committee had to work out independently how those new payloads would be formatted and transmitted, and then release a new version of its TL specification to accommodate them. It could take several years for a particular new payload type to be supported across all the TLs. Manufacturers of transmitter and receiver devices supporting multiple TLs would also need to develop and maintain a different solution for each TL. GenDC eliminates this duplication of work, and the possible discrepancies between TLs, by defining a standard, general and shared data-description format for these new use cases. Because GenDC is developed and maintained in a common workgroup, standardisation is greatly simplified, and the time and effort needed to support a new imaging payload type are reduced.

GenDC in detail

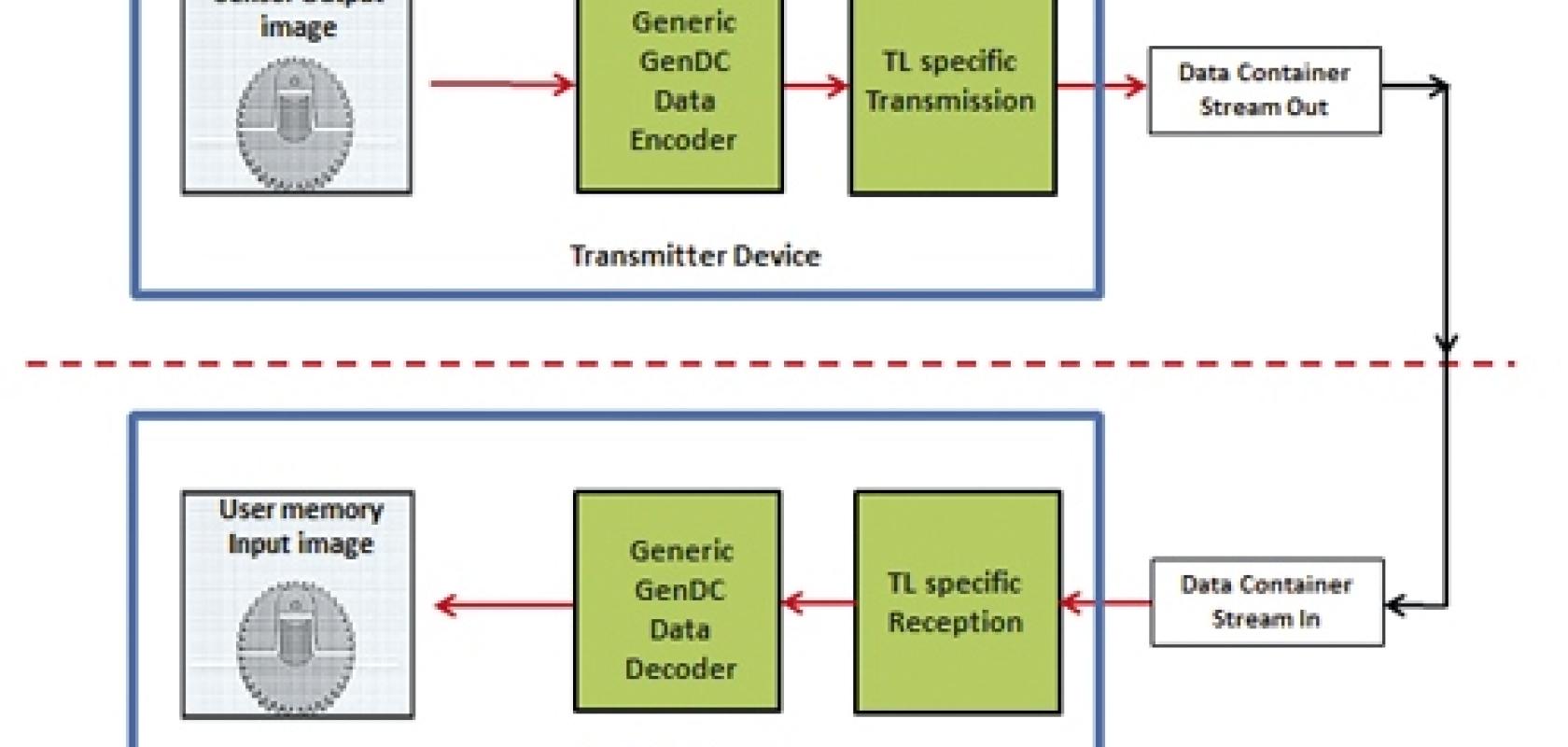

GenDC is an imaging data-container representation that supports the description of almost any type of imaging data. It decouples the data container format – the ‘what’ to transmit – from the TL data transmission – the ‘how’ to transmit. The TL is thus only responsible for the transmission and reception of an opaque GenDC payload.

Transmitter and receiver devices for different TLs can share the same GenDC data encoder and decoder, so a new camera or frame grabber for a different TL can be supported simply by changing the physical transmission or reception front end. Furthermore, the same GenDC data-descriptor format is used for all payload types, from camera to computer, as well as within and outside application software, i.e. in memory or in a file.
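A self-described container lets a receiver rebuild the payload from the bytes alone. The sketch below assumes a deliberately simplified, hypothetical layout: a signature and part count, then one fixed-size descriptor per part (type code, width, height, data offset, data size), then the raw data. It is not the actual GenDC binary format, which is defined in the specification. The point is that `encode` and `decode` know nothing about the transport, so any TL front end could reuse them unchanged.

```python
import struct
from dataclasses import dataclass
from typing import List

# Hypothetical, simplified layout (little-endian); not the GenDC binary format.
MAGIC = b"DEMO"                    # illustrative signature
HEADER = struct.Struct("<4sH")     # signature, number of parts
PART = struct.Struct("<HIIQQ")     # type code, width, height, data offset, data size

# Illustrative type codes, not GenDC identifiers.
TYPE_IMAGE_2D = 1
TYPE_METADATA = 2


@dataclass
class Part:
    type_id: int
    width: int
    height: int
    data: bytes


def encode(parts: List[Part]) -> bytes:
    """Write the descriptor block, then the raw data of every part."""
    offset = HEADER.size + PART.size * len(parts)  # data starts after descriptors
    descriptor = HEADER.pack(MAGIC, len(parts))
    for p in parts:
        descriptor += PART.pack(p.type_id, p.width, p.height, offset, len(p.data))
        offset += len(p.data)
    return descriptor + b"".join(p.data for p in parts)


def decode(container: bytes) -> List[Part]:
    """Rebuild every part using only information stored in the container."""
    magic, count = HEADER.unpack_from(container, 0)
    if magic != MAGIC:
        raise ValueError("unknown container signature")
    parts = []
    for i in range(count):
        type_id, width, height, offset, size = PART.unpack_from(
            container, HEADER.size + i * PART.size)
        parts.append(Part(type_id, width, height, container[offset:offset + size]))
    return parts


# Usage: a 4x2 8-bit image plus a metadata part in one container.
image = Part(TYPE_IMAGE_2D, 4, 2, bytes(range(8)))
meta = Part(TYPE_METADATA, 0, 0, b'{"exposure_us": 500}')
blob = encode([image, meta])
assert decode(blob) == [image, meta]
```

Storing explicit offsets and sizes in the descriptor means the same blob can be written to a file or kept in memory and decoded later without any extra context.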

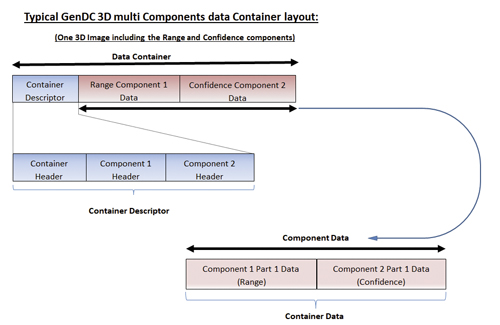

Typical GenDC data container layout for a 3D image

GenDC can describe simple or complex payloads with a unified data-descriptor structure, including: 1D, 2D or 3D images (for example monochrome, packed or planar colour, or 3D range); compressed buffers (such as JPEG, JPEG 2000 and H.264); multi-view or multi-spectral data (such as stereo, visible and infrared, or hyperspectral); image sequences, including multi-frame bursts; processing results, such as histogram or blob analysis; metadata, such as general data information, GenICam chunk data or XML; and mixed content, for example a 2D image plus metadata plus a processing result. Existing and future vision TLs can support GenDC either as a new, opaque generic payload type or as raw data. The respective TL committees can define specific mechanisms and rules to guarantee minimal data-transport overhead and easy interpretation of the data container on reception.
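Mixed content is straightforward to handle once the receiver dispatches on the type recorded for each part. In this hypothetical sketch, parts have already been extracted as `(type, width, data)` tuples; the type codes, the 8-bit image layout, the 32-bit histogram bins and the JSON metadata are illustrative assumptions, not GenDC definitions.

```python
import json
import struct
from typing import Any, Callable, Dict, List, Tuple

# Illustrative type codes, not GenDC identifiers.
IMAGE_2D, HISTOGRAM, METADATA = 1, 2, 3


def read_image(data: bytes, width: int) -> List[bytes]:
    """Split an 8-bit-per-pixel image into rows of `width` pixels."""
    return [data[i:i + width] for i in range(0, len(data), width)]


def read_histogram(data: bytes, _width: int) -> List[int]:
    """Interpret the data as little-endian unsigned 32-bit bin counts."""
    return list(struct.unpack(f"<{len(data) // 4}I", data))


def read_metadata(data: bytes, _width: int) -> Dict[str, Any]:
    """Interpret the data as UTF-8 JSON."""
    return json.loads(data.decode("utf-8"))


INTERPRETERS: Dict[int, Callable[[bytes, int], Any]] = {
    IMAGE_2D: read_image,
    HISTOGRAM: read_histogram,
    METADATA: read_metadata,
}


def interpret(parts: List[Tuple[int, int, bytes]]) -> List[Any]:
    """Interpret each (type, width, data) part, skipping unknown types."""
    results = []
    for type_id, width, data in parts:
        reader = INTERPRETERS.get(type_id)
        if reader is not None:
            results.append(reader(data, width))
    return results


# Usage: image + processing result + metadata + an unknown future type.
parts = [
    (IMAGE_2D, 3, bytes([0, 10, 20, 30, 40, 50])),
    (HISTOGRAM, 0, struct.pack("<3I", 5, 0, 1)),
    (METADATA, 0, b'{"frame": 7}'),
    (99, 0, b"payload type this receiver does not know"),
]
results = interpret(parts)
```

Skipping unknown types is a design choice of this sketch: because each part carries its own type and size, a receiver can ignore parts it does not understand and still use the rest.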

GenDC history

First presented at the International Vision Standards Meeting (IVSM) in Kyoto in spring 2016, the GenDC concept was received with enthusiasm and obtained the backing of the G3 group, its Future Standard Forum steering committee, and all the TL committees. These TL committees agreed to work on supporting a common, TL-agnostic, future-proof data format that would be shared and maintained in a common GenICam forum. Extensive work was done by a workgroup composed of the GenICam chairs, TL chairs and other interested parties to make GenDC a reality. The GenDC specification was finalised and released in December last year.

Summary

The GenDC module defines a generic and flexible imaging data-container representation. Its basic principle is to create a layer that decouples what to transport (the data container description) from how to transport it over a particular medium (the data transmission method). Being TL neutral, GenDC standardises the format of the data buffers transmitted and received by various present and future TL standards. And since it is defined and maintained by a single workgroup composed of representatives from GenICam and each TL, the TL committees can now focus solely on providing efficient and reliable real-time data transmission over a particular physical medium. TL specifications are thus no longer affected by changes to the data representation or by new payload types, which can now be added without requiring updates to those specifications. Moreover, because the GenDC specification defines a self-described data format, it also provides a standard way to represent almost any imaging-related information in contexts beyond the data exchange between a transmitter and a receiver, for example data containers stored in host memory or in a file.

Finally, with all the above features, GenDC completes the GenICam offering of TL-independent modules to control and exchange data between imaging devices, including GenAPI, SFNC, GenCP, GenTL, and now GenDC.

The GenDC 1.0.0 specification can be found on the EMVA website at: www.emva.org/wp-content/uploads/GenICam_GenDC_1.0.pdf

Suprateek Banerjee, VDMA’s robotics and automation standards manager, updates on the OPC Machine Vision companion specification, designed to make vision interoperable with factory machines

‘Wouldn’t it be great, if machines could communicate in a direct way with each other? This idea is at the core of the Industry 4.0 movement to create the smart factory of the future,’ said Dr Horst Heinol-Heikkinen, chairman of VDMA’s OPC Machine Vision initiative and its working group.

‘The goal of reaching interoperability is the new core competence that must distinguish our future products in a connected world of Industrial IoT,’ continued Heinol-Heikkinen, who is also managing director of Asentics. ‘I am proud that machine vision plays a pioneering role and, as one of the first VDMA divisions, is presenting the release of an OPC UA companion specification to the public, thanks to extraordinary commitment and co-operation by core working group members who worked very hard and made it possible.’

The VDMA, Europe’s biggest industrial association, with more than 3,200 firms from mechanical engineering and machine building, is driving the development of interoperability. It has teamed up with the OPC Foundation and its OPC UA technology to develop market- and industry-specific standards for the many domains in the sector, such as robotics, machine vision and machine tools. These domain-specific standards are called companion specifications. Part one of the machine vision companion specification has been published and can be downloaded from the OPC Foundation and VDMA Machine Vision websites.

Open platforms

The acronym OPC UA stands for Open Platform Communications Unified Architecture. OPC UA is a vendor- and platform-independent machine-to-machine communication technology, recommended by the German Industry 4.0 initiative and other international consortia, such as the Industrial Internet Consortium (IIC), for implementing Industry 4.0. The OPC UA specification can be divided into two parts: the base specification and the companion specifications. The base specification describes how data is transferred in an OPC UA manner, while the companion specifications describe what information and data are transferred. The OPC Foundation is responsible for developing the base specification. Sector-specific companion specifications are developed in working groups, usually organised by trade associations such as the VDMA, a key player in the Industry 4.0 initiative.

In early 2016, the VDMA Machine Vision board decided to develop an OPC UA companion specification for machine vision. The work has been carried out in a joint working group made up of members of the OPC Foundation and VDMA Machine Vision. A core working group of 17 experts from leading European machine vision firms drew up proposals for the approach and contents, and monitored feedback from the wider machine vision sector. To reach a broader audience, VDMA Machine Vision decided to bring this work into the G3 standardisation co-operation for machine vision.

OPC Machine Vision part one

Part one describes an abstraction of a generic vision system: a representation of a digital twin of the system. It handles the management of recipes, configurations and results in a standard way, while their contents remain vendor-specific and are treated as black boxes. It allows a vision system to be controlled in a general way, abstracting the necessary behaviour through a state machine concept.
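The state machine concept can be sketched in a few lines of Python. The states, commands and transition table below are hypothetical and much simpler than those defined in OPC Machine Vision part one; the sketch only shows the principle that a client controls the system through generic commands, while recipes and results are stored and passed along as opaque, vendor-specific bytes.

```python
from enum import Enum, auto
from typing import Dict, List, Optional, Tuple


class State(Enum):
    IDLE = auto()
    READY = auto()
    EXECUTING = auto()
    ERROR = auto()


# Hypothetical (state, command) -> next-state table; not the spec's.
TRANSITIONS: Dict[Tuple[State, str], State] = {
    (State.IDLE, "load_recipe"): State.READY,
    (State.READY, "load_recipe"): State.READY,
    (State.READY, "start"): State.EXECUTING,
    (State.EXECUTING, "stop"): State.READY,
    (State.EXECUTING, "fault"): State.ERROR,
    (State.ERROR, "reset"): State.IDLE,
}


class VisionSystem:
    """Generic vision-system abstraction: the client sees only states and
    opaque recipe/result blobs, never their vendor-specific contents."""

    def __init__(self) -> None:
        self.state = State.IDLE
        self.recipe: Optional[bytes] = None
        self.results: List[bytes] = []

    def command(self, name: str, payload: bytes = b"") -> State:
        next_state = TRANSITIONS.get((self.state, name))
        if next_state is None:
            raise ValueError(f"command {name!r} not allowed in state {self.state.name}")
        if name == "load_recipe":
            self.recipe = payload  # stored as a black box, never parsed
        self.state = next_state
        return self.state

    def publish_result(self, result: bytes) -> None:
        """Record an opaque result; only accepted while executing."""
        if self.state is not State.EXECUTING:
            raise ValueError("results are only accepted while executing")
        self.results.append(result)


# Usage: load a recipe, run, publish a result, stop.
vs = VisionSystem()
vs.command("load_recipe", b"vendor-specific recipe")
vs.command("start")
vs.publish_result(b"vendor-specific result")
vs.command("stop")
```

Rejecting commands that are not valid in the current state keeps the client-side control logic identical regardless of which vendor's system sits behind it.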

A trial implementation was completed and presented to an audience of automotive industry experts in May at an OPC UA automotive day in Wolfsburg. A hardware demonstrator is being developed, to be showcased at trade shows in Germany soon.

In future parts of the standard, the generic basic information model will shift to a more specific, skill-based information model. For this purpose, the proprietary input and output data black boxes will be broken down and replaced with standardised information structures and semantics. This follows the approach of deriving information model structures from OPC UA DI (Device Integration, part 100), as OPC Robotics has already done in part one of its companion specification. This supports cross-domain interoperability, so that machine vision systems can talk to robots and vice versa and, at a later stage, to all kinds of devices.

Part two of the OPC Machine Vision companion specification officially kicked off on 18 September, when all the members of the working group met at the VDMA in Frankfurt to brainstorm the important topics to be covered in part two and to lay out a well-defined roadmap for the standard.

Stefan Hoppe, president and executive director of the OPC Foundation, said: ‘Machine vision has taken a decisive step into the Industry 4.0 era. Beyond the work done to adopt OPC UA as the interoperability platform for machine vision, we applaud the joint working group for embracing the spirit of inter-organisational collaboration on a global scale with G3. This big thinking aligns well with a key OPC Foundation focus on encouraging organisations to work together to reduce the vast number of overlapping custom information models into a harmonised set of OPC UA companion specifications which will benefit end-users and vendors by lowering barriers to true interoperability.’