The cameras and optics within the latest consumer headsets are not only changing how users interact with AR/VR devices but also helping to improve their performance.

Set for a February 2024 release, Apple’s Vision Pro mixed reality headset has been described as the company’s biggest gamble in a decade.

That’s because, despite the hype, virtual and augmented reality devices often fall short of user expectations and have so far struggled to penetrate the consumer market on a mass scale.

But could Apple, a brand whose products have often led the widespread adoption of new technology, signal a new era for VR/AR headsets in the consumer space by throwing its weight behind this trend?

Eye tracking allows users to interact with AR/VR devices in a new way

Apple’s Vision Pro incorporates 23 million pixels across two displays and a dual-chip design so that ‘every experience feels like it’s taking place in front of the user’s eyes in real time’, the company says.

The device’s eye-tracking technology has been hailed as one of the key features that set it apart from competitors. Together with hand tracking, it lets users control the device with eye movements and hand gestures: unlike headsets such as the Meta Quest, which are typically operated with handheld controllers, the Vision Pro needs no controller, keyboard, mouse or touch screen.

Apple’s eye-tracking system projects invisible light patterns onto each eye, and the reflections are picked up by sensors. Multiple LEDs surrounding each eye illuminate it with invisible near-infrared (NIR) light, and four NIR cameras, one below and one at the inner corner of each eye, capture the reflected light.

The images captured by the NIR cameras are then analysed using image-processing algorithms and Apple’s R1 chip, which was designed specifically for the Vision Pro. Apple says the R1 processes input from the headset’s 12 cameras, five sensors and six microphones in real time, streaming new images to the displays within 12 milliseconds, so the device can respond almost instantly to where the user is looking.
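Apple has not published the details of its eye-tracking pipeline. A widely used approach for camera-based trackers of this kind is pupil-centre corneal-reflection (PCCR): find the dark pupil and the bright LED reflection (the ‘glint’) in each NIR image, then map the pupil-to-glint vector to a gaze direction using a per-user calibration. The minimal sketch below illustrates that idea on a tiny synthetic frame; the function names, thresholds, image and linear calibration are all hypothetical and are not Vision Pro internals.

```python
from typing import Callable, List, Tuple

Image = List[List[int]]  # grayscale NIR frame: 0 = black, 255 = white

def centroid(img: Image, predicate: Callable[[int], bool]) -> Tuple[float, float]:
    """Mean (x, y) position of all pixels whose value satisfies `predicate`."""
    xs, ys = [], []
    for y, row in enumerate(img):
        for x, v in enumerate(row):
            if predicate(v):
                xs.append(x)
                ys.append(y)
    if not xs:
        raise ValueError("no pixels matched")
    return sum(xs) / len(xs), sum(ys) / len(ys)

def pupil_glint_vector(img: Image, dark_thr: int = 40, bright_thr: int = 220) -> Tuple[float, float]:
    # Assumption: with off-axis NIR illumination the pupil appears dark...
    px, py = centroid(img, lambda v: v < dark_thr)
    # ...and the corneal reflection of an LED (the glint) appears very bright.
    gx, gy = centroid(img, lambda v: v > bright_thr)
    return px - gx, py - gy

def gaze_from_vector(dx: float, dy: float, calib) -> Tuple[float, float]:
    # Hypothetical linear calibration fitted per user: gaze = a + b*dx + c*dy
    (ax, bx, cx), (ay, by, cy) = calib
    return ax + bx * dx + cx * dy, ay + by * dx + cy * dy

# Synthetic 8x8 frame: grey background, 2x2 dark pupil, single bright glint
frame = [[128] * 8 for _ in range(8)]
for y in (3, 4):
    for x in (3, 4):
        frame[y][x] = 10
frame[2][5] = 255

dx, dy = pupil_glint_vector(frame)
gaze = gaze_from_vector(dx, dy, ((0.0, 10.0, 0.0), (0.0, 0.0, 10.0)))
print(dx, dy)  # -1.5 1.5
print(gaze)    # (-15.0, 15.0)
```

Using the vector between pupil and glint, rather than the pupil position alone, makes the estimate less sensitive to small shifts of the camera relative to the head.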

Foveated rendering significantly improves power efficiency

Eye tracking not only improves how users interact with AR/VR equipment but also allows device makers and developers to improve performance.

“We've seen for some time the use of eye tracking to understand human intention, but I think one of the big things that we see now is that… the device is getting enhanced by eye tracking,” said Johan Hellqvist, Head of XR at Swedish firm Tobii, during a Moor Insights & Strategy XR podcast. Tobii is a major developer of eye-tracking technology, which features in devices such as the PlayStation VR2 headset launched in 2023.

One example is foveated rendering, in which eye tracking is used to cut the rendering workload. Visual acuity is highest in the fovea, at the centre of our gaze, and falls off steeply towards the periphery. Foveated rendering mimics this by rendering in high definition only the small portion of the image the user is looking at, and at lower resolution elsewhere. The user still perceives a sharp, immersive image, while processing and bandwidth requirements fall.
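To see why foveation saves so much work, consider a simple model in which the image is split into tiles and each tile is shaded at a rate that depends on its angular distance (eccentricity) from the gaze point. In the sketch below the pixels-per-degree figure, tile size, eccentricity zones and shading fractions are illustrative assumptions, not Tobii’s or any headset’s parameters, and eccentricity is approximated as pixel distance divided by pixels per degree.

```python
import math

# Illustrative assumptions only
PIXELS_PER_DEGREE = 20.0
TILE = 16  # tile edge in pixels
# (maximum eccentricity in degrees, fraction of pixels actually shaded)
ZONES = [(5.0, 1.0), (15.0, 0.5), (math.inf, 0.25)]

def shading_fraction(ecc_deg: float) -> float:
    """Full rate near the gaze point, progressively coarser further out."""
    for limit, fraction in ZONES:
        if ecc_deg <= limit:
            return fraction
    return ZONES[-1][1]

def relative_cost(width: int, height: int, gaze_x: float, gaze_y: float) -> float:
    """Shading work relative to rendering every pixel at full rate."""
    total, tiles = 0.0, 0
    for ty in range(0, height, TILE):
        for tx in range(0, width, TILE):
            cx, cy = tx + TILE / 2, ty + TILE / 2  # tile centre in pixels
            ecc = math.hypot(cx - gaze_x, cy - gaze_y) / PIXELS_PER_DEGREE
            total += shading_fraction(ecc)
            tiles += 1
    return total / tiles

cost = relative_cost(2000, 2000, gaze_x=1000, gaze_y=1000)
print(f"shading work: {cost:.0%} of full-resolution rendering")
```

Almost all of the saving comes from the periphery, which is why low-latency gaze data matters: if the full-rate zone lags behind the eye, the user briefly looks at low-resolution content.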

On a standalone headset, Tobii says this can result in frame rate increases of up to 78% with a 10% reduction in power consumption.

Conserving bandwidth while improving performance is particularly important if AR/VR devices are to cope with ever-increasing data demands at speeds that keep up with the extraordinarily fast movements of our eyes.

“The reason why we need fast eye tracking is because a lot of the more interesting things actually happen in less than 10 milliseconds,” said Gordon Wetzstein, Associate Professor at Stanford University. “The eye has some of the fastest-moving muscles in the human body; they're contracting in less than 10 milliseconds, so the speed is really crucial for these eye trackers to properly measure the fastest and most subtle movements of the eye.”

“To properly measure the fastest and most subtle movements of the eye, we basically have to enable temporal sampling rates of a thousand times per second, or more, ideally,” Wetzstein added.

It is not clear how fast the eye-tracking cameras in Apple’s Vision Pro operate, but Tobii’s most advanced system, the Tobii Pro Spectrum, captures up to 1,200 images per second. However, it is a screen-based research system, considerably larger than the company’s solutions for the AR/VR market, and is not intended for use in a wearable device.
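Wetzstein’s point about sampling rate comes down to simple arithmetic: the number of samples captured during an event is the sampling rate multiplied by the event’s duration. Only the 1,000 Hz target and the Spectrum’s 1,200 Hz come from this article; the 120 Hz entry is an arbitrary comparison point, not a figure for any particular headset.

```python
def samples_in_event(rate_hz: float, duration_ms: float) -> float:
    """Expected number of eye-tracker samples captured during an event."""
    return rate_hz * duration_ms / 1000.0

for rate in (120, 1000, 1200):
    interval_ms = 1000.0 / rate
    print(f"{rate:>5} Hz: one sample every {interval_ms:.2f} ms, "
          f"{samples_in_event(rate, 10):.1f} samples per 10 ms event")
```

At 120 Hz a 10 ms eye movement yields barely one sample, too few to resolve its shape; at 1,000 Hz it yields ten.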

How companies are addressing distortion

Eye tracking can also be used to correct optical shortcomings in the headset, Hellqvist added. One example is distortion, which varies when the headset moves or when the pupil position changes as the user’s gaze shifts, because either causes the optical path through the lens to change.

“When you look in different directions… the image warps slightly, and when you’re moving around it's like the world is warping a little bit all of the time,” he said.

And the problem becomes more pronounced as display resolution increases, Hellqvist added. “The distortion becomes more and more visible and this can cause nausea because the real world and the virtual world are not really correlating. You can get used to it after a while, but as soon as you take off the headset your brain takes a while to adapt back to the real-world scene.”

Current distortion-correction techniques can straighten lines that would otherwise appear curved, but this only works well if the entrance pupil of the eye is aligned with the ‘optical sweet spot’ in the middle of the lens. If the rendered correction does not adapt as the eye moves, residual distortion remains and shifts with the user’s gaze, an effect known as ‘pupil swim’.
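Pupil swim can be illustrated with a toy model. Assume the lens maps a point at normalised radius r on the display to r(1 + k r²), and that the coefficient k drifts as the pupil moves away from the sweet spot. The linear drift and all coefficient values below are hypothetical. A correction computed once for a centred pupil is exact there, but leaves a growing error as the pupil moves.

```python
def lens(r: float, k: float) -> float:
    """Toy radial lens model: display radius r appears at r * (1 + k * r^2)."""
    return r * (1.0 + k * r * r)

def predistort(r_target: float, k: float, iters: int = 20) -> float:
    """Newton's method: find the display radius that the lens maps onto r_target."""
    r = r_target
    for _ in range(iters):
        r -= (lens(r, k) - r_target) / (1.0 + 3.0 * k * r * r)
    return r

def k_for_pupil(offset_mm: float, k0: float = 0.20, k1: float = 0.03) -> float:
    # Hypothetical: distortion coefficient drifts linearly with pupil offset
    return k0 + k1 * offset_mm

R_TARGET = 0.8  # a point near the edge of the view
# Static correction, calibrated once for a centred pupil
r_display = predistort(R_TARGET, k_for_pupil(0.0))

errors = {}
for offset in (0.0, 2.0, 4.0):
    seen = lens(r_display, k_for_pupil(offset))
    errors[offset] = seen - R_TARGET
    print(f"pupil offset {offset:.0f} mm -> radial error {errors[offset]:+.4f}")
```

Real headsets use two-dimensional warp meshes rather than a single radial coefficient, but the behaviour is the same in kind: a fixed correction is only right for one pupil position.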

“You want to dynamically change the distortion correction so that the warping or the distortion is always correct,” Hellqvist added.

Pancake lenses are increasingly used in VR headsets to reduce distortion variation. They use polarised light to fold the optical path within the lens, combining refractive elements (such as glass) with polarising films. Folding the light back on itself lets the lens sit much closer to the display than a Fresnel lens while preserving a long optical path, and it flattens the Petzval field (the curved surface on which a lens naturally brings an image into focus), which reduces distortion variation and increases the proportion of the image that is in focus. This reduces pupil swim but does not completely eliminate it.
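The polarisation trick behind the folded path can be checked with Jones calculus. Linearly polarised light that passes through a quarter-wave plate with its fast axis at 45 degrees, is reflected, and passes back through the same plate behaves as if it had crossed a half-wave plate: its polarisation is rotated by 90 degrees. This is what lets a reflective polariser first reflect the light (folding the path) and then transmit it on a later pass. The sketch below uses the common unfolded-path simplification, treating the reflection as ideal and ignoring losses and the exact element order of any commercial design.

```python
import math

def matmul(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def apply(m, v):
    """Apply a 2x2 Jones matrix to a Jones vector [Ex, Ey]."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]

def rotation(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def quarter_wave_plate(theta):
    """Quarter-wave plate with fast axis at angle theta (global phase ignored)."""
    retarder = [[1, 0], [0, 1j]]  # pi/2 phase delay on the slow axis
    return matmul(matmul(rotation(theta), retarder), rotation(-theta))

qwp = quarter_wave_plate(math.pi / 4)
horizontal = [1, 0]
after_one_pass = apply(qwp, horizontal)        # circularly polarised
after_two_passes = apply(qwp, after_one_pass)  # unfolded double pass

intensity = [abs(e) ** 2 for e in after_two_passes]
print([round(x, 6) for x in intensity])  # expect approximately [0, 1]: now vertical
```

After one pass the light is split equally between the two axes with a 90-degree phase difference (circular polarisation); after the second pass all of the energy sits in the orthogonal linear state.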

An example of the Tobii Pro Glasses 3 in use (Image: Tobii AB)

Dynamic distortion compensation tracks the pupil’s location to maintain a corrected image across the entire field of view. To achieve this, Tobii has added a new signal to its eye-tracking systems, the entrance pupil position, which gives the location of the user’s pupil in 3D and provides developers with the input data needed to correct distortion in real time.
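In code, dynamic compensation amounts to choosing the correction for the pupil position reported by the eye tracker on every frame, instead of using one fixed set of coefficients. The sketch below assumes a hypothetical per-lens calibration table of radial distortion coefficients measured at a few horizontal pupil offsets and interpolates linearly between them. The table values, the one-dimensional pupil position and the single-coefficient lens model are illustrative assumptions, not Tobii’s API or any headset’s calibration data.

```python
from bisect import bisect_right

# Hypothetical calibration: pupil x-offset from lens centre (mm) -> radial coefficient k
CALIBRATION = [(-4.0, 0.08), (-2.0, 0.14), (0.0, 0.20), (2.0, 0.26), (4.0, 0.32)]

def coefficient_for_pupil(offset_mm: float) -> float:
    """Linearly interpolate k for the current pupil position, clamped at the table ends."""
    xs = [x for x, _ in CALIBRATION]
    if offset_mm <= xs[0]:
        return CALIBRATION[0][1]
    if offset_mm >= xs[-1]:
        return CALIBRATION[-1][1]
    i = bisect_right(xs, offset_mm)
    (x0, k0), (x1, k1) = CALIBRATION[i - 1], CALIBRATION[i]
    t = (offset_mm - x0) / (x1 - x0)
    return k0 + t * (k1 - k0)

def lens(r: float, k: float) -> float:
    """Toy radial model: display radius r appears at r * (1 + k * r^2)."""
    return r * (1.0 + k * r * r)

def predistort(r_target: float, k: float, iters: int = 20) -> float:
    """Newton's method: find the display radius the lens maps onto r_target."""
    r = r_target
    for _ in range(iters):
        r -= (lens(r, k) - r_target) / (1.0 + 3.0 * k * r * r)
    return r

def render_radius(r_target: float, pupil_offset_mm: float) -> float:
    # Called every frame with the latest pupil position from the eye tracker
    return predistort(r_target, coefficient_for_pupil(pupil_offset_mm))

for offset in (0.0, 1.0, 3.5):
    k = coefficient_for_pupil(offset)
    r = render_radius(0.8, offset)
    print(offset, round(lens(r, k) - 0.8, 9))  # residual stays ~0 as the eye moves
```

Because the correction follows the measured pupil, the residual error stays near zero across positions, which is why a lens with considerable distortion variation can still be usable.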

This gives headset manufacturers more flexibility in hardware design because the technique can be used to correct lenses with considerable distortion variation.

Tobii’s Pro Glasses 3 take a similar approach to the Vision Pro’s hardware, using LED infrared illuminators and four eye cameras, two for each eye, to capture eye movement.

Unlike the Vision Pro, however, Tobii’s device looks, as its name suggests, like a pair of glasses, and it integrates the illuminators into the lenses rather than into hardware below or beside the eye. This is made possible by an in-house lens-casting technology that uses UV light.

“This is enabling smart eyewear to look more like traditional eyewear,” said Lutz Körner, head of Tobii Switzerland. “Compared to the traditional way of casting blanks and grinding, Tobii lens technology is able to embed sensitive and curved films into prescription lenses.”

The manufacturing process consists of six steps. First, automated machines select the right combination of moulds and align them with respect to one another and to the element to be embedded. This forms a sealed mould cavity, which is filled with resin that is then cured with UV light.

“Within a few seconds, the raw lens is basically finished and the moulds can be removed and reused using standard timing processes to get a hard coating and an anti-reflective coating on the final product,” Körner said.

“It's amazing to see the great interests of the AR and VR industry on our lens casting technology combining different technologies, bringing up completely new devices,” Körner added.

Tobii will demonstrate this manufacturing technology alongside its eye-tracking solution for XR applications at CES 2024.

Eye tracking is advancing through transparent displays and sensors

While most eye-tracking systems place illuminators and IR cameras outside the eye’s line of sight so as not to obstruct the user’s view, transparent display technology is allowing device manufacturers to place cameras directly in front of the eye. This could not only improve the accuracy of gaze detection but also allow users to maintain eye contact while teleconferencing, driving, or presenting in front of a screen.

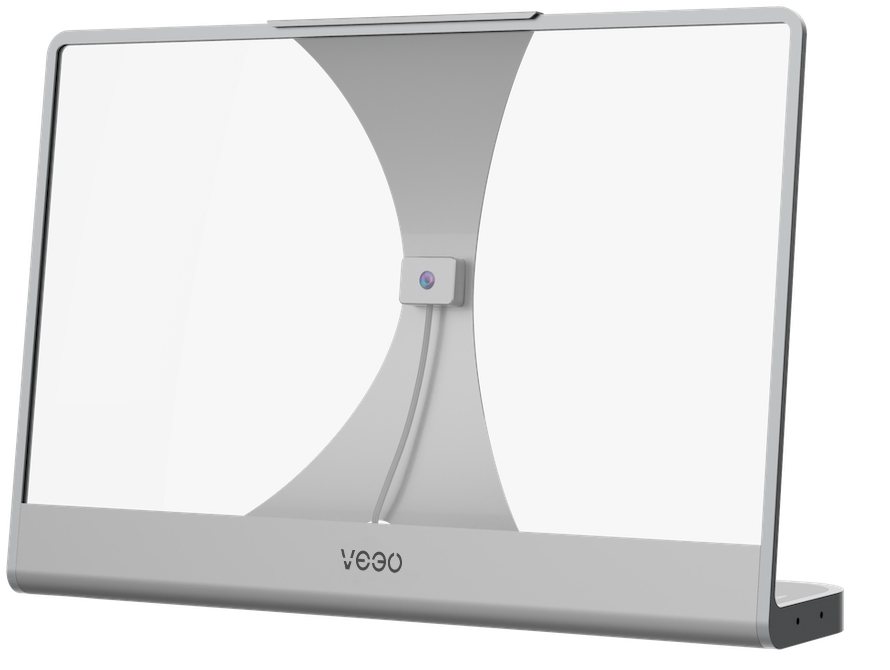

At CES 2024, Las Vegas-based Veeo will demonstrate a transparent OLED monitor it developed alongside LG Display, which incorporates an eye-level camera behind the display to enable more natural virtual conversations.

“Until somebody invents a camera with full-colour high-def x-ray vision, getting direct eye contact from somebody looking at a screen is close to impossible,” said Ji Shen, CEO at Veeo. “The camera needs a vantage point behind the centre of the screen, but the screen is always in between and in the way.”

In addition to transparent displays, see-through sensors could soon allow eye-tracking hardware to be integrated directly in front of the user’s eyes, such as in a windshield or a pair of glasses.

Also at CES 2024, Zeiss will present a ‘transparent camera’ that could improve eye tracking in autonomous vehicles, for example to monitor driver awareness. The company’s Multifunctional Smart Glass technology permits the integration of a see-through camera, a ‘holocam’, that uses coupling, decoupling and light-guiding elements to divert incident light to a concealed sensor.

Veeo's T-30 display monitor with behind-display camera technology set to be shown at CES 2024 (Image: Veeo)

A separate group, from the Barcelona Institute of Science and Technology and Barcelona-based startup Qurv Technologies, is developing see-through sensors that combine the properties of graphene, an excellent conductor but poor photon absorber, with quantum dots, which are excellent absorbers of light.

To create the sensors, the scientists first deposited graphene on a clear quartz substrate and then coated it with an ultrathin layer of quantum dots just a few tens of nanometres thick. The quantum dots absorb photons and transfer the resulting charge carriers to the graphene, changing its conductivity and producing an electrical signal.

The graphene–quantum dot photodetectors are about 90% transparent. They therefore absorb less light than conventional silicon photodetectors, which reduces their overall performance, but they convert the light they do absorb into an electrical signal with an efficiency of around 60%, comparable to conventional silicon photodetectors and sufficient for eye tracking.
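A rough way to see what these figures imply is to multiply the fraction of light absorbed by the conversion efficiency. The sketch below rests on assumptions that do not come from the paper: that about 90% transparency means at most about 10% of incident light is absorbed (no reflection losses), that the quoted ~60% is the efficiency of converting absorbed photons to collected charge, that there is no photoconductive gain, and a hypothetical 940 nm near-infrared wavelength. The result is a back-of-envelope upper bound, not a measured value.

```python
HC_EV_NM = 1239.84  # h*c in eV*nm, so responsivity (A/W) = EQE * wavelength_nm / 1239.84

def external_efficiency(transparency: float, internal_efficiency: float) -> float:
    """Upper bound: assume all light that is not transmitted is absorbed."""
    absorbed = 1.0 - transparency
    return absorbed * internal_efficiency

def responsivity_a_per_w(eqe: float, wavelength_nm: float) -> float:
    """Photocurrent per watt of incident light for a given external efficiency, no gain."""
    return eqe * wavelength_nm / HC_EV_NM

eqe = external_efficiency(0.90, 0.60)
print(f"external efficiency <= {eqe:.0%}")                        # 6%
print(f"responsivity <= {responsivity_a_per_w(eqe, 940):.3f} A/W")  # 0.045 A/W
```

The trade-off is explicit here: transparency limits how much light the detector collects in the first place, while the conversion efficiency determines how well that small share is used.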

The team made the photodetectors using graphene grown by chemical vapour deposition on 300 mm wafers, and connected the pixels to the signal-processing electronics through transparent indium tin oxide (ITO) wires. The researchers then projected pixelated black-and-white images onto the array and, by interpreting the signal from each photodetector, were able to reproduce them.
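The readout step can be pictured as follows: each transparent pixel reports a signal, and a black-and-white image is recovered by deciding whether each signal is closer to the ‘dark’ or the ‘bright’ level. The sketch below is a hypothetical illustration with made-up readings and a simple midpoint threshold; it is not the reconstruction method used in the paper.

```python
from typing import List

def reconstruct(signals: List[List[float]]) -> List[List[int]]:
    """Map each photodetector's signal to 1 (bright) or 0 (dark) using a midpoint threshold."""
    flat = [s for row in signals for s in row]
    threshold = (min(flat) + max(flat)) / 2.0
    return [[1 if s > threshold else 0 for s in row] for row in signals]

# Hypothetical 3x3 array readings (arbitrary units) for a projected cross pattern
readings = [
    [0.11, 0.93, 0.08],
    [0.88, 0.97, 0.91],
    [0.12, 0.90, 0.10],
]
for row in reconstruct(readings):
    print("".join("#" if px else "." for px in row))
```

A single global threshold assumes every pixel responds equally; with real devices, per-pixel dark and bright reference readings would likely be needed to compensate for variation across the array.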

While the array could reconstruct most of the patterns, more work is needed to improve performance, the researchers reported in a paper published in ACS Photonics. They now plan to improve the resolution and speed of the image sensors further and to develop methods for making them reliably on a larger scale.

Author: Jessica Rowbury | Lead image: Tobii AB