After speaking at Embedded Vision Europe, Pierre Gutierrez, lead machine learning researcher at Scortex, writes about the challenges of deploying deep learning on the factory floor

The efficacy of deep learning for computer vision is now well proven. As a result, many manufacturing companies are trying to deploy or at least run a first project using deep learning.

Take the example of industrial inspection, our task at Scortex. If a company were to start from scratch, a typical machine learning or deep learning proof-of-concept would look like this. First, the data scientist is given a dataset of, say, around 10,000 annotated images. The dataset is then split into a training set, on which the model parameters are learned, and an evaluation set, used to assess the model’s performance. A convolutional neural network pre-trained on ImageNet is used and may give good results, for example 99 per cent recall and 99 per cent precision.
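The split and the two metrics mentioned above can be sketched with Python's standard library. This is a minimal illustration rather than Scortex's pipeline. It assumes binary labels (1 = defective, 0 = good) and a plain list of image IDs, and the helper names `split_dataset` and `precision_recall` are hypothetical.

```python
import random

def split_dataset(items, eval_fraction=0.2, seed=0):
    """Shuffle items and split them into (training, evaluation) lists."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_eval = round(len(shuffled) * eval_fraction)
    return shuffled[n_eval:], shuffled[:n_eval]

def precision_recall(y_true, y_pred):
    """Precision and recall for the 'defective' class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of flagged parts that are defective
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of defective parts that are flagged
    return precision, recall

# Hypothetical example: 10,000 image IDs, 20 per cent held out for evaluation
train, evaluation = split_dataset(range(10_000))
print(len(train), len(evaluation))  # 8000 2000

y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1]
print(precision_recall(y_true, y_pred))  # (0.6666666666666666, 0.6666666666666666)
```

Precision answers "of the parts flagged as defective, how many really are?", while recall answers "of the defective parts, how many were caught?". In inspection, both matter: a false reject and a missed defect each carry a cost.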

The proof-of-concept project is then considered a success, so most of the work is done, right? Wrong! Two challenges remain. First, there is a huge gap between an approximately working model and a solution running in production. Second, in factories, processes and manufactured parts evolve, and the deep learning solution must be able to cope with these unavoidable changes.

Deploying deep learning

A deep learning approach is a data-based solution. This means, firstly, that the acquisition system is paramount; the phrase ‘garbage in, garbage out’ is apt here. In computer vision, the easier it is for the human eye to distinguish defective from non-defective regions in an image, the easier it will be for a computer model. While deep learning is more robust to changes in the acquisition system than earlier computer vision solutions, do not expect the model to detect things a trained human would not be able to distinguish. After all, the dataset the model is trained on is itself annotated by humans, who must be able to see such defects.

A second consideration is that vertical, domain-specific understanding of the data is key. Deep learning algorithms and the ways of training them are becoming a commodity; deep vertical understanding of the business and its data is not. Changing the model architecture can give a few more points of accuracy, but the real game changer is the quality of the data. Here is an unfortunate example. After a few weeks of collecting and annotating data, you train a model that seems to perform well. You test it in production, but realise that the quality specifications were misunderstood, leading to a defect tolerance that is far too high. To remedy the problem, all the data has to be re-annotated, which costs tens of thousands of euros and delays the project by weeks.

Storage and data governance are also necessary. Sure, at first, a folder of images and a large JavaScript Object Notation (JSON) file for labels seems okay. But pretty soon, a more robust database will be required. First, acquiring data on the factory floor is error prone: some unnecessary data will be collected, and some will be wrongly labelled, so the user needs to be able to curate data from a specific date and time. In addition, a proper database is necessary to create clear training and evaluation sets, to perform class-stratified sampling for training, and to balance the dataset per part or per day.
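Here is a minimal sketch of the database point using Python's built-in `sqlite3` module. The `images` table and its columns (`part`, `defect_class`, `captured_at`, and an `excluded` curation flag) are a hypothetical schema, not Scortex's data model, and the rows are synthetic. The sketch shows two operations: curating out a bad day, and drawing a class-stratified sample from a date range.

```python
import random
import sqlite3
from collections import defaultdict

# Hypothetical schema: one row per captured image. captured_at holds ISO 8601
# strings, so string comparison orders rows by time; excluded = 1 marks curated-out rows.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE images (
        id INTEGER PRIMARY KEY,
        path TEXT NOT NULL,
        part TEXT NOT NULL,
        defect_class TEXT NOT NULL,
        captured_at TEXT NOT NULL,
        excluded INTEGER NOT NULL DEFAULT 0
    )
""")

# Synthetic data: 300 images over ten days, two part types, three classes
rng = random.Random(0)
for i in range(300):
    conn.execute(
        "INSERT INTO images (path, part, defect_class, captured_at) VALUES (?, ?, ?, ?)",
        (f"img_{i:04d}.png",
         rng.choice(["bracket", "housing"]),
         rng.choice(["ok", "ok", "ok", "scratch", "crack"]),
         f"2019-10-{1 + i % 10:02d}T08:00:00"),
    )

# Curation: exclude a day on which, say, the camera was known to be misaligned
conn.execute("UPDATE images SET excluded = 1 WHERE captured_at LIKE '2019-10-03%'")

def stratified_sample(conn, per_class, start, end, seed=0):
    """Draw up to `per_class` non-excluded image ids per defect class,
    restricted to captures in the half-open interval [start, end)."""
    by_class = defaultdict(list)
    query = ("SELECT id, defect_class FROM images "
             "WHERE excluded = 0 AND captured_at >= ? AND captured_at < ?")
    for img_id, cls in conn.execute(query, (start, end)):
        by_class[cls].append(img_id)
    sampler = random.Random(seed)
    return {cls: sampler.sample(ids, min(per_class, len(ids)))
            for cls, ids in sorted(by_class.items())}

sample = stratified_sample(conn, per_class=20, start="2019-10-01", end="2019-10-08")
print({cls: len(ids) for cls, ids in sample.items()})
```

Because the curation flag and timestamps live in the database rather than in file names, a training or evaluation set can be rebuilt exactly later, which also supports the traceability questions raised next.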

A proper database also enables performance breakdowns: for example, on which parts or defects does the model perform well? Finally, a database is needed for traceability: what happened on a given day? Why did the model infer that this part was defective when the human did not see a defect?
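A performance breakdown is essentially a group-by over prediction records; against a real database it would be a `GROUP BY` query. The plain-Python sketch below assumes that each record holds a part name, a defect type, a ground-truth label and a prediction. These field names are hypothetical. The function reports recall on defective images for each group.

```python
from collections import defaultdict

def recall_by_group(records, key):
    """Recall on defective images (label 1), grouped by the records' `key` field."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for r in records:
        if r["label"] == 1:
            positives[r[key]] += 1
            hits[r[key]] += r["prediction"] == 1  # True counts as 1
    return {group: hits[group] / positives[group] for group in sorted(positives)}

# Hypothetical prediction log
records = [
    {"part": "bracket", "defect": "scratch", "label": 1, "prediction": 1},
    {"part": "bracket", "defect": "crack", "label": 1, "prediction": 0},
    {"part": "housing", "defect": "scratch", "label": 1, "prediction": 1},
    {"part": "housing", "defect": None, "label": 0, "prediction": 0},
]
print(recall_by_group(records, "part"))    # {'bracket': 0.5, 'housing': 1.0}
print(recall_by_group(records, "defect"))  # {'crack': 0.0, 'scratch': 1.0}
```

A single global recall figure would hide the fact that, in this toy log, every crack was missed.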

In addition, a deep learning approach needs a fast and robust inference pipeline. Scortex deals with real-time constraints while working with high-resolution images at 10 to 20 frames per second. This means that an overly large off-the-shelf network architecture may not be a good fit for the application. It also means that a company may not be able to deploy a trained model at all unless a team of software engineers turns prediction delivery into a commodity.
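At 10 to 20 frames per second, each image must be fully processed within 1000/20 = 50 ms to 1000/10 = 100 ms, including pre- and post-processing. One quick way to see whether a candidate model fits is to time it on representative inputs and look at a high percentile rather than the average. The sketch below does this with the standard library. The `fake_predict` function is a hypothetical placeholder for a real forward pass.

```python
import statistics
import time

def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

def latency_percentile_ms(predict, frames, pct=99):
    """Time `predict` on each frame and return the given latency percentile in ms."""
    timings = []
    for frame in frames:
        start = time.perf_counter()
        predict(frame)
        timings.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=100) returns the 1st to 99th percentile cut points;
    # the inclusive method keeps them within the observed range
    return statistics.quantiles(timings, n=100, method="inclusive")[pct - 1]

def fake_predict(frame):
    # Hypothetical placeholder standing in for a real network forward pass
    time.sleep(0.005)

budget = frame_budget_ms(20)  # 50.0 ms per frame at 20 fps
p99 = latency_percentile_ms(fake_predict, range(50))
print(f"p99 = {p99:.1f} ms, budget = {budget:.1f} ms, fits: {p99 <= budget}")
```

Checking a tail percentile rather than the mean matters on a production line, because occasional slow frames are what cause missed parts.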

Once the user has a solution to all these issues, they have a decent industrial prototype, because the inputs and outputs of the system are reasonably well handled. This is where it gets complex, from the machine learning point of view.

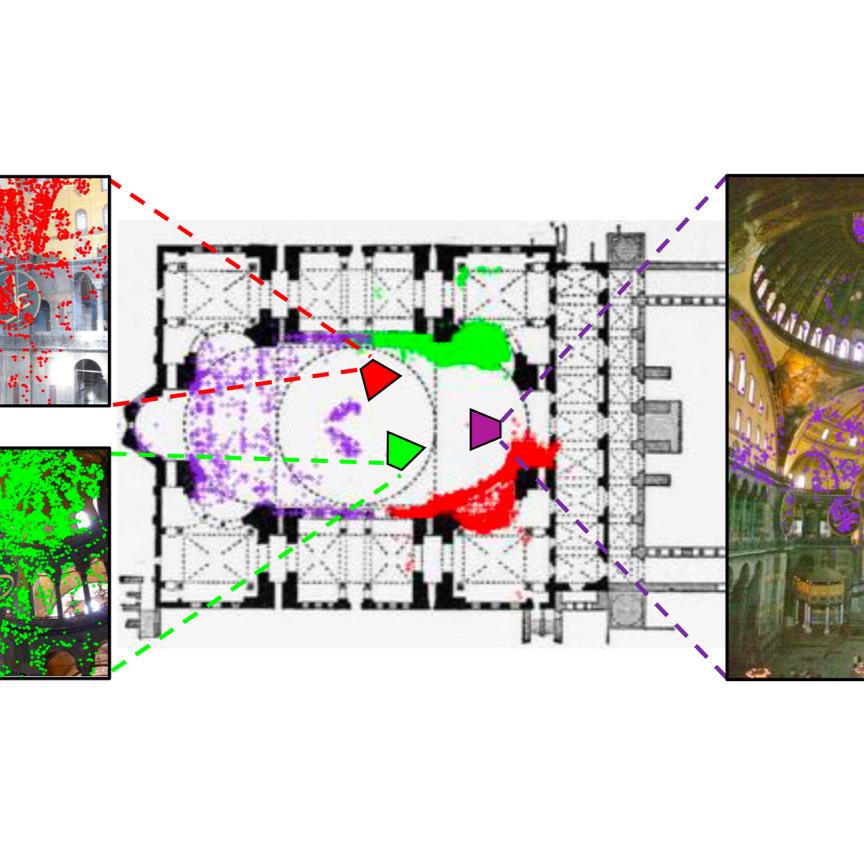

Scortex’s Quality Intelligence Solution can detect defects and decide part quality in real time, matching the manufacturing line speed. Automatic quality reports are generated, the status of production quality is shown on a dedicated dashboard, and results can be integrated into ERP or EMS systems.

The open world challenge

In a factory, things tend to change and unpredictable cases will always appear. As a result, the user cannot expect a model trained on a few images from a specific day on a particular setup to generalise to all possible cases forever. This means that deep learning models need to be maintained over time and have the ability to adapt quickly. In the deep learning community this is called the open world challenge.

Here are a few factors the user may want the deep learning solution to be robust to, or at least be able to adapt to:

- Continuous distribution drift: The environment will change, whether you want it to or not. For example, some camera pixels may die, the intensity of an old LED may vary, or the amount of dust in the factory or on the camera lens may increase.

- Unpredictable false positives: A standard deep learning model often does not know what it does not know; that is, it is often overconfident when inferring on something it has never seen before. As a result, a change of process or background may affect the system: a new pen mark on each part may appear as a defect, or a newly visible screw may look like a crack.

- New parts and new assembly lines: A manufacturer generally produces different products on several production lines. When doing a first project, the user might want to identify one line and a few products to run the experiment on. One would then expect the model to adapt quickly to new parts with similar defects but different geometries or textures. One would also expect the model to be easily deployable on new production lines, where conditions may vary because of real-life constraints such as camera position, lighting, the conveyor belt, or a different integration on the production line.

- New kinds of defect: Because processes change, new kinds of defect can appear. The user might also want to change the specification and declare defective some characteristics previously considered benign. A proper deep learning solution should be able to adapt quickly to such changes in specification.
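Slow drift, such as a dimming LED or dust building up on the lens, can sometimes be spotted before it hurts accuracy by tracking simple image statistics. The sketch below is a minimal example and a crude heuristic. It assumes grey-level images stored as lists of pixel rows, plus a reference window of images recorded when the system was commissioned. It flags drift when the mean brightness of recent images moves more than a chosen number of standard deviations from the reference. The class name and the three-sigma threshold are illustrative assumptions.

```python
import statistics

def mean_brightness(image):
    """Mean grey level of an image given as a list of pixel rows."""
    return statistics.fmean(p for row in image for p in row)

class BrightnessDriftMonitor:
    """Crude drift check: compares recent mean brightness with a reference window."""

    def __init__(self, reference_images, threshold_sigmas=3.0):
        values = [mean_brightness(img) for img in reference_images]
        self.ref_mean = statistics.fmean(values)
        self.ref_std = statistics.stdev(values)  # needs >= 2 reference images that differ
        self.threshold_sigmas = threshold_sigmas

    def z_score(self, recent_images):
        recent = statistics.fmean(mean_brightness(img) for img in recent_images)
        return (recent - self.ref_mean) / self.ref_std

    def drifted(self, recent_images):
        return abs(self.z_score(recent_images)) > self.threshold_sigmas

def flat_image(value, size=4):
    """Tiny synthetic image with uniform grey level `value`."""
    return [[value] * size for _ in range(size)]

reference = [flat_image(100 + i % 5) for i in range(20)]  # commissioning-time images
dimmed = [flat_image(80) for _ in range(5)]               # e.g. an ageing LED
monitor = BrightnessDriftMonitor(reference)
print(monitor.drifted(reference[:5]), monitor.drifted(dimmed))  # False True
```

Such a monitor only detects one kind of change. New backgrounds, pen marks or new defect types generally need model-level checks as well.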

At Scortex we have been studying a few solutions to the open world challenge. The first is data augmentation. The user can make a model more robust by randomly transforming the images it sees during training, pushing it outside its comfort zone. For example, to make the model robust to a change of camera position, the user can apply random flips, translations, rotations or projections to images during training. Similar transformations can make it robust against external lighting (random additions or multiplications) or camera parameters (random contrast or gain).
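The sketch below applies transformations of this kind to a small grey-level image stored as a list of rows, using only the standard library. The ranges, such as a gain between 0.8 and 1.2, are illustrative assumptions to be tuned per application. Only 90-degree rotations are shown, because arbitrary-angle rotations and projective warps would normally come from an image-processing library.

```python
import random

def translate(img, dx, dy, fill=0):
    """Shift an image by (dx, dy) pixels, filling uncovered pixels with `fill`."""
    h, w = len(img), len(img[0])
    return [[img[y - dy][x - dx] if 0 <= y - dy < h and 0 <= x - dx < w else fill
             for x in range(w)]
            for y in range(h)]

def clip(value):
    """Round and clamp to the 8-bit range 0-255."""
    return max(0, min(255, round(value)))

def augment(image, rng, max_shift=2):
    """Return a randomly transformed copy of a grey-level image (list of pixel rows)."""
    img = [row[:] for row in image]
    # Geometric transforms, mimicking small changes in camera position
    if rng.random() < 0.5:
        img = [row[::-1] for row in img]              # horizontal flip
    if rng.random() < 0.5:
        img = img[::-1]                               # vertical flip
    if rng.random() < 0.5:
        img = [list(col) for col in zip(*img[::-1])]  # 90-degree clockwise rotation
    img = translate(img, rng.randint(-max_shift, max_shift),
                    rng.randint(-max_shift, max_shift))
    # Photometric transforms, mimicking lighting and camera-parameter changes
    contrast = rng.uniform(0.8, 1.2)   # stretch or squash around the mean
    gain = rng.uniform(0.8, 1.2)       # random multiplication
    offset = rng.uniform(-20.0, 20.0)  # random addition
    mean = sum(map(sum, img)) / (len(img) * len(img[0]))
    return [[clip(((p - mean) * contrast + mean) * gain + offset) for p in row]
            for row in img]

rng = random.Random(42)
image = [[(x * 16 + y * 8) % 256 for x in range(8)] for y in range(8)]
variants = [augment(image, rng) for _ in range(4)]  # a fresh variant each time
```

The function is meant to be called each time an image is fed to the model, so the network rarely sees the exact same pixels twice. Whether a given transform preserves the label (a flipped part may correspond to a different, valid part, for instance) has to be checked for each application.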

Secondly, add more data. This is the best solution in terms of model performance, but not always in terms of cost: it requires the ability to acquire and annotate more data, which takes time and effort. One way to keep costs down is to label only the data that will lead to the best performance. This is known as active learning in the literature.
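One common way to choose the data worth labelling is uncertainty sampling, which sends annotators the images on which the current model is least confident. The minimal sketch below covers a binary defective/good classifier and assumes the model outputs a probability of "defective" for each unlabelled image. The function names are hypothetical. Other selection criteria, such as diversity or expected model change, exist and may work better in practice.

```python
def uncertainty(p_defect):
    """Closeness to the decision boundary: 1.0 at p = 0.5, 0.0 at p = 0 or p = 1."""
    return 1.0 - abs(2.0 * p_defect - 1.0)

def select_for_annotation(predictions, budget):
    """Pick the `budget` unlabelled images the model is least sure about.

    predictions: dict mapping image id -> predicted probability of 'defective'.
    """
    ranked = sorted(predictions, key=lambda img: uncertainty(predictions[img]),
                    reverse=True)
    return ranked[:budget]

unlabelled = {"img_a": 0.02, "img_b": 0.55, "img_c": 0.97, "img_d": 0.40, "img_e": 0.88}
print(select_for_annotation(unlabelled, budget=2))  # ['img_b', 'img_d']
```

Confidently classified images such as `img_a` and `img_c` would teach the model little, so the annotation budget goes to the borderline cases.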

Thirdly, use domain adaptation. This relatively new set of techniques allows a model to generalise better to new environments, such as a new acquisition system. It has been widely studied in the deep learning literature on driverless cars, where researchers use it to generalise from simulated environments, such as a Grand Theft Auto game engine, to real life.
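A very simple illustration of domain adaptation is to re-estimate normalisation statistics on unlabelled images from the new environment, in the spirit of adaptive batch normalisation (AdaBN). The sketch below is an assumption-laden toy. It assumes feature vectors (lists of floats) produced by some earlier network layer, and it standardises each feature with the statistics of the domain the sample comes from, so that a uniform shift, such as a brighter light source, is removed. The adversarial and simulation-to-real methods used in the driverless-car literature are considerably more involved.

```python
import statistics

def fit_normaliser(features):
    """Per-dimension mean and standard deviation of a list of feature vectors."""
    columns = list(zip(*features))
    means = [statistics.fmean(c) for c in columns]
    stds = [statistics.pstdev(c) or 1.0 for c in columns]  # avoid division by zero
    return means, stds

def normalise(features, stats):
    """Standardise each feature vector with the given (means, stds)."""
    means, stds = stats
    return [[(v - m) / s for v, m, s in zip(vec, means, stds)] for vec in features]

# Source domain: the set-up the model was trained on (toy features)
source = [[1.0, 10.0], [2.0, 12.0], [3.0, 14.0]]
# Target domain: the same content seen under brighter light (offset on every feature)
target = [[v + 5.0 for v in vec] for vec in source]

source_stats = fit_normaliser(source)
target_stats = fit_normaliser(target)  # estimated from unlabelled target data only

print(normalise(target, source_stats)[0])  # shifted: out of the range seen in training
print(normalise(target, target_stats)[0])  # matches normalise(source, source_stats)[0]
```

No target labels are needed here, which is what makes this family of techniques attractive when a new line or camera set-up comes online.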

Finally, Scortex has been studying incremental learning. This new field concerns updating a model to support a new class under a set of constraints, typically limited or no access to the data previously used for training. This can be the case when the user wants to retrain locally or at the edge. By extension, the technique can be used to train a new class faster while maintaining the old classes’ performance and calibration.
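One well-known incremental learning approach is Learning without Forgetting (LwF). It adds an output for the new class and trains on new data only. A distillation term keeps the updated model's outputs for the old classes close to what the original model produced on the same images. The sketch below computes that combined loss for a single image in plain Python. It is not necessarily the technique Scortex uses. The temperature `T` and weight `lam` are illustrative assumptions, and the logits are made-up numbers.

```python
import math

def softmax(logits, T=1.0):
    """Softmax with temperature T (a larger T gives a softer distribution)."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def lwf_loss(new_logits, label, old_logits, T=2.0, lam=1.0):
    """Learning-without-Forgetting-style loss for one training image.

    new_logits: updated model's outputs (old classes first, then new class(es)).
    label:      index of the true class in new_logits.
    old_logits: frozen original model's outputs for the same image (old classes only).
    """
    # Standard cross-entropy on the true label, over all classes
    ce = -math.log(softmax(new_logits)[label])
    # Distillation: keep old-class outputs close to the original model's,
    # with both distributions softened by temperature T
    k = len(old_logits)
    target = softmax(old_logits, T)
    pred = softmax(new_logits[:k], T)
    distill = -sum(t * math.log(p) for t, p in zip(target, pred))
    return ce + lam * distill

old = [2.0, 0.5, -1.0]          # original model, e.g. 'ok', 'scratch', 'crack'
kept = [2.0, 0.5, -1.0, 0.0]    # updated model with a new fourth class, old outputs unchanged
forgot = [-1.0, 0.5, 2.0, 0.0]  # updated model whose old-class outputs have drifted
print(lwf_loss(kept, 3, old) < lwf_loss(forgot, 3, old))  # True
```

Both updated models assign the same probability to the new class, so the difference in loss comes entirely from the distillation term. That term is what penalises forgetting the old classes without needing their original training images.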

None of these techniques alone solves the open world problem, but combined, they make it possible to create a robust model at the beginning of a project and to maintain it over time.

To sum up, building a deep learning vision solution is still difficult. The reason is not the algorithms used but data curation and traceability, prediction-serving performance, and maintaining the model over time.

Based in Paris, Scortex was founded in 2016 to automate quality control in manufacturing using artificial intelligence. The company has 30 employees and provides complex visual inspection services for manufacturing. The technology can recognise and track defects, such as scratches, cracks and blemishes on surfaces ranging from smooth (such as painted plastic) to highly textured (such as forged metal).
