The Austrian Institute of Technology, HD Vision Systems, Prophesee and Zeiss have been shortlisted for the Vision Award. The four entries, selected by the jury from a total of 44 submissions, cover event-based vision, inline metrology and two 3D imaging products.

The prize for technological excellence in the field of machine vision will be awarded during the Vision show, which will take place in Stuttgart from 5 to 7 October.

Each company will present its technology during the Industrial Vision Days on 6 October from 10.20am to 11.20am. Warren Clark, publishing director of Imaging and Machine Vision Europe, which sponsors the €3,000 award, will moderate the session, with a member of the judging panel crowning the overall winner at the end.

Although the trade fair will be smaller than usual, more than 250 companies will exhibit products there, making it one of the first major in-person shows for the machine vision industry since the beginning of the pandemic.

The four shortlisted award entries are:

Xposure:Photometry – fast inline 3D surface scanner

By Ernst Bodenstorfer, Markus Clabian, Christian Kapeller, Philipp Schneider, Petra Thanner, AIT Austrian Institute of Technology

Xposure:Photometry, developed by the Austrian Institute of Technology, is a fast inline 3D surface scanner built around a high-speed smart camera. It is designed for the optical inline surface inspection tasks common in many industrial manufacturing processes.

Conventional 2D scanning methods cannot distinguish between pseudo defects – dirt on the surface of the manufactured part, for instance – and actual 3D defects such as scratches, ridges, spikes, pinholes or wrinkles. At the same time, existing 3D inspection methods cannot cope with high speeds, whether inspecting single objects or endless material moving at high transport speeds.

Xposure:Photometry addresses this by combining very fast photometric stereo (PS) imaging with smart camera technology to highlight small, genuine 3D defects on the object's surface inline, while distinguishing them from pseudo defects.

Photometric stereo is a shape-from-shading method that reconstructs an object's 3D shape from 2D images taken under multiple illumination directions. It is sensitive to small deviations in the surface structure of the object, which it derives as local changes in the surface normal vector. Photometric stereo processing combines the images taken under the different illumination directions into images of the object's surface structure, which highlight small 3D surface defects.
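As background, photometric stereo is usually formulated under a Lambertian (matte) reflectance model, as below. This textbook formulation is included as an assumption for illustration; the article does not say which reflectance model or solver AIT's FPGA implements. Each pixel intensity I_k under light direction l_k depends on the albedo ρ and the unit surface normal n, and stacking the K measurements gives a linear system for g = ρn:

```latex
\begin{aligned}
I_k &= \rho\,\mathbf{l}_k^{\top}\mathbf{n}, \qquad k = 1,\dots,K \\
\mathbf{I} &= L\,\mathbf{g}, \qquad \mathbf{g} = \rho\,\mathbf{n}, \qquad L = [\mathbf{l}_1,\dots,\mathbf{l}_K]^{\top} \in \mathbb{R}^{K\times 3} \\
\hat{\mathbf{g}} &= (L^{\top}L)^{-1}L^{\top}\mathbf{I}, \qquad \rho = \lVert\hat{\mathbf{g}}\rVert, \qquad \mathbf{n} = \hat{\mathbf{g}}/\lVert\hat{\mathbf{g}}\rVert \\
p &= \frac{\partial z}{\partial x} = -\frac{n_x}{n_z}, \qquad q = \frac{\partial z}{\partial y} = -\frac{n_y}{n_z} \\
\frac{I_1 - I_2}{I_1 + I_2} &= \tan\theta\,\frac{n_x}{n_z} = -p\,\tan\theta \qquad \text{(two lights at } \pm\theta \text{ in the } xz\text{-plane)}
\end{aligned}
```

With three non-coplanar light directions, L is square and invertible, so g is determined exactly; a fourth direction makes the system overdetermined, and the least-squares estimate can reduce the effect of noise. The last line shows why two opposing lights are enough for one gradient component: the albedo cancels in the ratio, but the gradient along the other axis is not recovered.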

To illuminate the object, AIT typically uses a set of four fast-strobed line light sources. Xposure:Photometry can be combined with AIT’s Xposure:Flash line light sources, but is also compatible with a large variety of fast-strobed off-the-shelf line lights.

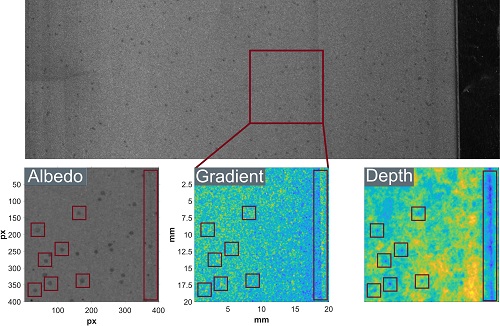

AIT’s multi-line-scan camera, Xposure:Camera, is mounted above the inspected object's surface. The camera acquires every point of the object's surface four times, for the four different illumination directions. An FPGA in the camera calculates an albedo image (conventional 2D image of the surface) and a gradient image representing the surface normal vector at each acquired surface point.
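The per-pixel computation described above can be sketched for one hypothetical geometry: four lights spaced 90 degrees apart around the camera axis, all at the same tilt. Under the Lambertian model this arrangement makes the least-squares photometric stereo solution collapse to sums and differences of the four intensities. The light geometry, the reflectance model and the function names are assumptions for illustration; the article does not describe AIT's on-camera algorithm.

```python
import math
from typing import List, Sequence, Tuple


def four_light_albedo_and_gradients(i0: float, i90: float, i180: float, i270: float,
                                    zenith_deg: float) -> Tuple[float, float, float]:
    """Albedo and surface gradients (dz/dx, dz/dy) for one pixel.

    Hypothetical set-up: Lambertian surface, no shadows or highlights, four
    lights at azimuths 0, 90, 180 and 270 degrees, each tilted zenith_deg
    away from the camera's optical axis (z). For this geometry L^T L is
    diagonal, so the least-squares estimate of g = rho * n reduces to
    sums and differences of the four intensities.
    """
    theta = math.radians(zenith_deg)
    gx = (i0 - i180) / (2.0 * math.sin(theta))
    gy = (i90 - i270) / (2.0 * math.sin(theta))
    gz = (i0 + i90 + i180 + i270) / (4.0 * math.cos(theta))
    albedo = math.sqrt(gx * gx + gy * gy + gz * gz)  # rho = |g|
    if gz <= 0.0:
        # No usable signal, e.g. a completely dark pixel.
        return albedo, math.nan, math.nan
    return albedo, -gx / gz, -gy / gz  # p = -n_x/n_z, q = -n_y/n_z


def process_line(line0: Sequence[float], line90: Sequence[float],
                 line180: Sequence[float], line270: Sequence[float],
                 zenith_deg: float = 45.0) -> Tuple[List[float], List[float], List[float]]:
    """Apply the per-pixel computation to one acquired line (e.g. 2,048 pixels)."""
    albedo_line: List[float] = []
    p_line: List[float] = []
    q_line: List[float] = []
    for a, b, c, d in zip(line0, line90, line180, line270):
        rho, p, q = four_light_albedo_and_gradients(a, b, c, d, zenith_deg)
        albedo_line.append(rho)
        p_line.append(p)
        q_line.append(q)
    return albedo_line, p_line, q_line
```

Once the geometry is fixed, each pixel needs only a handful of additions, subtractions and divisions, which is the kind of arithmetic that maps well onto streaming hardware. A real system would also have to deal with shadows, specular reflections and calibration of the individual light sources.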

The output of the FPGA can be used directly for inline 3D surface inspection. It can also serve as a pre-processing step that supplies classical or AI-based object classifiers with rich photometric stereo data on the object's surface, making the classifiers more discriminative.

Xposure:Photometry applied to battery foil inspection. The depth image (right) clearly shows the defects, while the 2D images (left) also show pseudo defects. The images were taken with the battery foil moving at 10m/s. Credit AIT

Xposure:Photometry combines high-speed 2D capture with on-camera 3D surface capture, a combination that, to AIT’s knowledge, is not currently available on the market. When 1D surface gradients are sufficient, the system reaches an acquisition speed of 300kHz using two light directions. When full 2D surface gradients are required, it reaches 200kHz using three light directions. Finally, for the best 3D information on the surface structure, the system runs at 150kHz using four light directions.

In its high-precision configuration, Xposure:Photometry delivers lines 2,048 pixels wide at a line rate of 150kHz; a lower-precision configuration reaches a line rate of 300kHz.
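To put the quoted line rates into perspective, the snippet below works out how far the material moves between consecutive lines and how many pixels per second a 2,048-pixel line implies. It is simple arithmetic under stated assumptions: constant transport speed, and each quoted line rate taken to mean complete output lines per second (the article does not say whether the rate is counted per illumination direction). The function names are illustrative.

```python
def line_pitch_um(speed_m_per_s: float, line_rate_hz: float) -> float:
    """Distance the material travels between two consecutive lines, in micrometres."""
    return speed_m_per_s / line_rate_hz * 1e6


def pixel_rate(pixels_per_line: int, line_rate_hz: int) -> int:
    """Pixels per second for one output image (albedo or a gradient image)."""
    return pixels_per_line * line_rate_hz


if __name__ == "__main__":
    # Battery foil at 10 m/s, evaluated at the two quoted line rates.
    for rate in (150_000, 300_000):
        print(f"{rate / 1000:.0f} kHz: {line_pitch_um(10.0, rate):.1f} um per line")
    print(pixel_rate(2048, 150_000))
```

At the 10m/s battery-foil speed quoted in the caption above, this gives roughly 66.7µm of foil per line at 150kHz and 33.3µm at 300kHz, and 307.2 million pixels per second for each output image at 150kHz.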

Potential applications include: wire inspection, where surface defects can be identified while wires are drawn at speeds of 100m/s; print inspection of passports, banknotes or other high-quality printed material; traffic infrastructure monitoring; and inspection of battery electrodes, which are made of a very dark material. Because Xposure:Photometry is sensitive to differences in greyscale value, it can quickly detect defects such as scratches and pinholes on dark surfaces.

Easy pick-and-place for complex objects combining light field technology with artificial intelligence

Dr Christoph Garbe, HD Vision Systems

LumiScan Object Handling version two and its 3D sensor, LumiScanX, provide an industrial approach to light field 3D imaging. The system can image glossy or shiny parts while also reducing occlusions on objects, which makes the light field sensor well suited to inspecting complex objects such as forged parts, semi-transparent pieces or matt plastic. At the same time, the compact multi-camera array, consisting of 13 lenses, provides more precise information than conventional 3D imaging methods.
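Light field sensors recover depth from the small shifts (disparities) of a point between the views of the camera array. The sketch below uses the textbook pinhole relation d = f·b/Z and combines several views by least squares in inverse depth. It is a generic illustration under simplifying assumptions (all baselines along one axis, disparities already matched), not HD Vision Systems' algorithm; the focal length, baselines, disparities and function names are hypothetical.

```python
from typing import Sequence


def inverse_depth_ls(focal_px: float,
                     baselines_m: Sequence[float],
                     disparities_px: Sequence[float]) -> float:
    """Least-squares estimate of w = 1/Z from several views.

    Pinhole model along one axis (hypothetical simplification): a camera
    offset by b_i from the reference view sees the point shifted by
    d_i = f * b_i / Z = f * b_i * w. Minimising sum (d_i - f b_i w)^2 gives
    w = sum(d_i * b_i) / (f * sum(b_i^2)).
    """
    if len(baselines_m) != len(disparities_px) or not baselines_m:
        raise ValueError("need one disparity per baseline")
    num = sum(d * b for d, b in zip(disparities_px, baselines_m))
    den = focal_px * sum(b * b for b in baselines_m)
    return num / den


def depth_m(focal_px: float, baselines_m: Sequence[float],
            disparities_px: Sequence[float]) -> float:
    """Depth Z in metres from the inverse-depth estimate."""
    return 1.0 / inverse_depth_ls(focal_px, baselines_m, disparities_px)


if __name__ == "__main__":
    # Hypothetical numbers: f = 1,000 px, four views, point at 0.5 m.
    baselines = [0.01, 0.02, -0.01, 0.03]
    disparities = [1000 * b / 0.5 for b in baselines]
    print(depth_m(1000.0, baselines, disparities))
```

Working in inverse depth keeps the problem linear, and every additional view adds another equation. In principle, views in which a point is occluded or hidden by a specular highlight can be dropped while the remaining views still constrain the depth.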

On the software side, pre-configured and pre-trained neural networks handle the vision task. Users can fine-tune the algorithm on labelled images of their parts with the help of intuitive software. If a workpiece has to be picked up in a particular way and put down upright, for example, staff can simply mark the relevant areas using a drag-and-drop tool in the software. Once trained, the software locates the objects in the image and determines possible grip points. This information is then used to calculate passive waypoints and send them to a connected PLC or robot controller.

In addition, with LumiScan Object Handling the sensor can be installed and calibrated in less than two minutes.

With LumiScan Object Handling, robotic pick-and-place of complex objects can now be fully automated. The solution can be used for a variety of object detection and object handling tasks in manufacturing.

Alongside numerous applications for forged parts in the automotive sector, LumiScan Object Handling version two can also automate the picking and clearing of unsorted bagged goods. Here, the system not only detects the shape and orientation of unstable bags, but also passes the corresponding information to the robot controller. The bags can then be placed into the machine accordingly and cut open.

Prophesee Metavision technology – a comprehensive event-based sensing and software solution

Christoph Posch, Prophesee

The Metavision platform gives developers of machine vision applications a complete solution for implementing event-based vision in their systems. It is particularly well suited to high-speed quality control, inspection and analytics, but has also shown unique capabilities in other areas, including helping to restore or enhance vision in people with sight-impairing conditions.

Event-based vision is a paradigm shift in imaging that addresses the limitations of traditional frame-based cameras. Inspired by how the human eye records and interprets visual input, event-based sensors record changes in the scene rather than capturing the entire scene at regular intervals.

Specific advantages over frame-based approaches include higher dynamic range (>120dB); reduced data generation (10 to 1,000 times less than conventional approaches), which lowers transfer and processing requirements; and higher temporal resolution (microsecond resolution, equivalent to more than 10,000 images per second). Further advantages are low-light imaging down to 0.08 lx and power efficiency, with just 3nW per event and 26mW at sensor level.

At the core of the innovation is the Metavision sensor, a third-generation 640 x 480 (VGA) event-based sensor. Inspired by the human retina, each pixel of the Metavision sensor embeds its own intelligence and activates independently, triggering events.
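The behaviour of a single event pixel can be illustrated with the idealised model often used to describe event cameras: the pixel fires an ON or OFF event whenever the logarithm of its intensity has changed by a fixed contrast threshold since its last event. The sketch below is that simplified model, not Prophesee's pixel circuit; the threshold value and function name are hypothetical, and a real sensor also tags each event with the pixel's x and y coordinates.

```python
import math
from typing import List, Sequence, Tuple

Event = Tuple[int, int]  # (timestamp in microseconds, polarity +1 or -1)


def pixel_events(samples: Sequence[Tuple[int, float]],
                 contrast_threshold: float = 0.25) -> List[Event]:
    """Idealised event-pixel model (a simplification, not Prophesee's circuit).

    The pixel remembers the log intensity at its last event. Whenever the
    current log intensity differs from that reference by at least the
    contrast threshold, it emits an event and moves the reference by one
    threshold step, so large changes emit several events.
    """
    if not samples:
        return []
    _, first_intensity = samples[0]
    reference = math.log(first_intensity)
    events: List[Event] = []
    for t_us, intensity in samples[1:]:
        level = math.log(intensity)
        while level - reference >= contrast_threshold:
            reference += contrast_threshold
            events.append((t_us, +1))  # ON event: pixel got brighter
        while reference - level >= contrast_threshold:
            reference -= contrast_threshold
            events.append((t_us, -1))  # OFF event: pixel got darker
    return events
```

A constant intensity produces no events at all, which is where the data reduction comes from, while a sudden change yields a burst of events stamped with the time at which it occurred.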

Prophesee has also partnered with Sony Semiconductor Solutions to bring its technology to a new-generation stacked event-based vision sensor, co-developed by the two companies. Prophesee has released two evaluation kits specifically for this architecture, giving developers early access to the sensor, which features a 4.86µm pixel pitch and 1,280 x 720 pixel resolution.

Prophesee's evaluation kit

Key to implementing the Prophesee solution is the Metavision Intelligence Software Suite. It can be used to perform a variety of design exploration steps and incorporate customised software applications to meet specific market requirements.

Use cases for the two sensors include high-speed counting, vibration monitoring, object tracking, particle size monitoring and other critical vision-enabled processes in manufacturing, assembly, logistics, quality control, and inspection.

As one example, the platform can be used to improve predictive maintenance by measuring and monitoring equipment vibrations from 1Hz to 10kHz remotely, continuously, and in real time under normal lighting conditions.
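One simple way to turn events into a vibration frequency is to look at a single pixel watching a vibrating edge: each time the edge passes, the pixel brightens and emits a short burst of ON events, so the spacing between bursts gives the period. This is a hypothetical illustration of the principle, not the method used in Prophesee's software; the function name and the burst-gap parameter are assumptions.

```python
from typing import Iterable, List, Optional


def vibration_frequency_hz(on_event_times_us: Iterable[int],
                           burst_gap_us: int) -> Optional[float]:
    """Estimate a vibration frequency from one pixel's ON events.

    Hypothetical approach: an edge oscillating across the pixel brightens it
    once per period, producing a short burst of ON events. Events no more
    than burst_gap_us after the previous event belong to the same burst;
    the median spacing between burst starts is taken as the period.
    """
    burst_starts: List[int] = []
    previous: Optional[int] = None
    for t in sorted(on_event_times_us):
        if previous is None or t - previous > burst_gap_us:
            burst_starts.append(t)  # first event of a new burst
        previous = t
    if len(burst_starts) < 2:
        return None  # fewer than one full period observed
    periods = sorted(b - a for a, b in zip(burst_starts, burst_starts[1:]))
    median_period_us = periods[len(periods) // 2]
    return 1e6 / median_period_us
```

With microsecond timestamps, even a 10kHz vibration (100µs period) spans 100 timestamp ticks per period. The burst gap has to be chosen well below the vibration period, otherwise consecutive bursts merge.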

In automotive and mobility, Xperi's DTS has used the sensor to develop a neuromorphic driver monitoring solution that registers saccadic eye movements and micro-expressions. In addition, VoxelFlow, developed by Terranet in conjunction with Mercedes-Benz, uses Metavision to enhance the radar, lidar and camera systems used in autonomous driving.

Another outstanding application of neuromorphic event-based vision can be seen in a study conducted by GenSight Biologics. The study, published in Nature Medicine, reports the first case of partial recovery of visual function in a blind patient with late-stage retinitis pigmentosa. The patient is taking part in the ongoing trial of GenSight Biologics' GS030 optogenetic therapy, which combines gene therapy with a light-stimulating medical device in the form of goggles that use Prophesee’s Metavision sensing technologies.

Zeiss AICell Trace – a revolutionary generation of inline measurement technology

Manuel Schmid, Carl Zeiss Automated Inspection

Zeiss AICell Trace, the new generation of inline measuring technology, forms the basis of the Zeiss strategy ‘Metrology goes inline’. It improves quality assurance in the automotive sector in terms of productivity, efficiency and economic sustainability.

Previously, mainly process control was carried out on the production line. Zeiss’s inline measuring system now brings genuinely traceable metrology tasks, in accordance with DIN standards, directly onto the production line; in the past, these were only possible with coordinate measuring machines (CMMs) in dedicated measurement rooms.

By bundling process control and inline metrology in a single cell, Zeiss AICell Trace opens up new possibilities for customers when defining quality assurance strategies. The system provides high-precision digital quality information through point cloud-based feature detection in real time.
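The article does not detail how AICell Trace extracts features from its point clouds. As a generic illustration of point cloud-based feature detection, the sketch below estimates the centre and radius of a hole from edge points that are assumed to have already been extracted and projected into the plane of the sheet metal. It uses the algebraic (Kåsa) least-squares circle fit, a standard technique swapped in for illustration; the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def _solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system with Cramer's rule."""
    def det(m: List[List[float]]) -> float:
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
    d = det(a)
    if d == 0.0:
        raise ValueError("singular system, e.g. identical or collinear points")
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]  # replace one column with the right-hand side
        solution.append(det(m) / d)
    return solution


def fit_circle(points: Sequence[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Algebraic (Kasa) least-squares circle fit: returns (cx, cy, radius).

    Fits x^2 + y^2 + D x + E y + F = 0 by linear least squares on centred
    coordinates, then centre = (-D/2, -E/2), radius = sqrt(cx^2 + cy^2 - F).
    """
    n = len(points)
    if n < 3:
        raise ValueError("need at least three points")
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    # Centre the data first for better numerical conditioning.
    rows = [(x - mx, y - my, 1.0) for x, y in points]
    rhs = [-((x - mx) ** 2 + (y - my) ** 2) for x, y in points]
    # Normal equations A^T A [D, E, F]^T = A^T rhs.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(3)]
    d_coef, e_coef, f_coef = _solve3(ata, atb)
    ux, uy = -d_coef / 2.0, -e_coef / 2.0
    radius = math.sqrt(ux * ux + uy * uy - f_coef)
    return ux + mx, uy + my, radius
```

Metrology software typically evaluates features with geometric fits that minimise orthogonal distances; the algebraic fit shown here is a cheaper approximation that is often used as a starting value for such fits.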

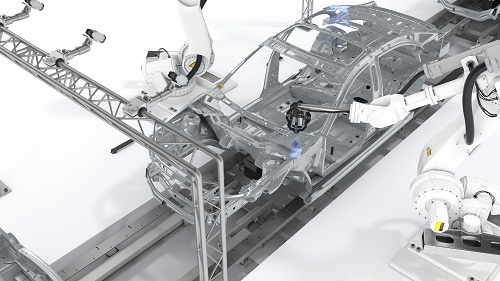

Zeiss's AICell Trace measurement system. Credit Zeiss

The system contains the following components: fixed floor markers with integrated LEDs, which act as a temperature-independent reference for the coordinate system; tracking cameras, which capture both the exact position of the sensors at each measurement position and their own position; and the Carbon Fiber Navigation Tool with integrated active LEDs, which forms a fixed unit with the 3D point cloud sensor, AIMax Cloud.
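Conceptually, these components form a chain of coordinate transforms: the tracking cameras, referenced to the floor markers, measure the pose of the navigation tool, and the point cloud sensor is rigidly fixed to the tool. A minimal sketch of mapping a measured point into the fixed reference frame with 4x4 homogeneous transforms is shown below; the frame names and functions are hypothetical, and the article does not describe Zeiss's actual processing.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def make_pose(rotation: Sequence[Sequence[float]], translation: Sequence[float]) -> Matrix:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    return [list(rotation[0]) + [translation[0]],
            list(rotation[1]) + [translation[1]],
            list(rotation[2]) + [translation[2]],
            [0.0, 0.0, 0.0, 1.0]]


def compose(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product a @ b for 4x4 transforms (apply b first, then a)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(t: Matrix, p: Sequence[float]) -> List[float]:
    """Apply a 4x4 transform to a 3D point."""
    x, y, z = p
    return [t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3] for i in range(3)]


def sensor_point_to_world(world_from_tool: Matrix, tool_from_sensor: Matrix,
                          point_in_sensor: Sequence[float]) -> List[float]:
    """Hypothetical frame chain:
    world_from_tool  - tool pose measured by the tracking cameras (floor-marker frame)
    tool_from_sensor - fixed calibration between navigation tool and 3D sensor
    point_in_sensor  - a 3D point measured by the sensor
    """
    return transform_point(compose(world_from_tool, tool_from_sensor), point_in_sensor)
```

In this chain the robot that moves the sensor does not appear: only the tracked tool pose and the fixed tool-to-sensor calibration determine where a point lands in the reference frame.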

By using this new optical tracking technology in combination with the AIMax Cloud sensor, absolute inline measurement results can be achieved that were previously not possible on car body construction lines.

The ability to carry out traceable inline measurements in the production environment opens up potential for cost savings and process optimisation.

The automotive industry is currently undergoing radical change: the shift from the combustion engine to e-mobility, the drive for greater efficiency in factories and the digital transformation of production plants.

To implement these changes as quickly as possible, OEMs have set up various strategy programmes, some of which incorporate the new Zeiss inline metrology solution. Zeiss has already deployed this inline measurement technology several times for body-shop customers, at stations handling individual components and sub-assemblies as well as complete car bodies.

In addition to the further rollout of Zeiss AICell Trace, Zeiss is working with Gom to integrate digital inline metrology products into the production lines of car body shops.