Edge devices make our lives easier and smarter and enable more sustainable solutions: from precision farming that reduces fertilizer usage, to smart traffic applications that help avoid congestion, to AI-powered medical devices that detect diseases based on pattern recognition.

Embedded vision is a key technology for these devices.

Detailed data analysis directly on the edge is enabled by ultra-compact cameras, powerful and energy-efficient embedded processors and the latest AI technologies.

With these developments, the integration of embedded vision has become easier than ever.

From PC-tower-sized vision systems to smart edge devices

Until the 1990s, the standard for vision applications was cameras connected to external processing units. This setup provided the necessary computing power to handle image data. However, designing and implementing these systems, usually for industrial quality control, was time-consuming and expensive, and far removed from today’s definition of embedded. In 1995, Michael Engel presented the VC11, the world’s first industrial smart camera based on a digital signal processor (DSP). His goal was to create a compact system that allowed image capture and processing within the same device. The camera was offered at a significantly lower cost than PC-based systems and paved the way for today’s smart cameras and embedded vision systems. This invention also laid the foundation for Vision Components (VC).

A definition of embedded vision systems

The VC11 smart camera was the first to apply what are still the hallmarks of embedded systems today: Systems that are perfectly adapted to their applications, with no overhead or unnecessary components, and optimised for industrial use, mass production and low per-unit cost. In general, these solutions are as small as possible and are ideally suited for edge devices and mobile applications. Low power consumption is therefore also an important aspect of embedded vision systems. In addition, robust operating ranges, industrial-grade quality and long-term availability are firmly linked to the term embedded electronics.

From DSPs to heterogeneous architectures

Today, the technology is found in applications ranging from consumer end products to highly specialised industrial uses. This is the result of a vast technological evolution over the last 30 years: In the years following Michael Engel’s invention, DSPs were the standard for image processing in smart cameras. The technology evolved from the first chips by companies such as Analog Devices to gigahertz-class models by Texas Instruments in the early 2000s, but the main principle remained the same: An image sensor controlled by the DSP captured the image data, which a bus controller then transferred to internal memory. All processing took place in a single processing unit. Advanced DSPs even enabled applications such as 3D profile sensors with onboard data processing.

A milestone in the evolution of embedded vision systems was Xilinx’s introduction of processors that combine dual-core ARM CPUs with an FPGA. In 2014, Vision Components developed its first embedded vision systems based on this heterogeneous architecture. These systems combined the parallel real-time processing capabilities of the FPGA with freely programmable ARM cores running a Linux operating system. This made the development of embedded vision systems more versatile and flexible and opened up new possibilities for developers to write and implement software for their specific applications.

Consumer and automotive industry drive technological advances

Computing power increased as new processors were developed, driven by high demand for small and powerful electronics for consumer products, smart homes and industry, as well as by new applications in the automotive industry. As a result, embedded vision systems have been designed to fit any market requirement, from board-level cameras with multi-core processing power to extremely small embedded vision systems that combine image acquisition and processing with ARM and FPGA cores on a single board the size of a postage stamp.

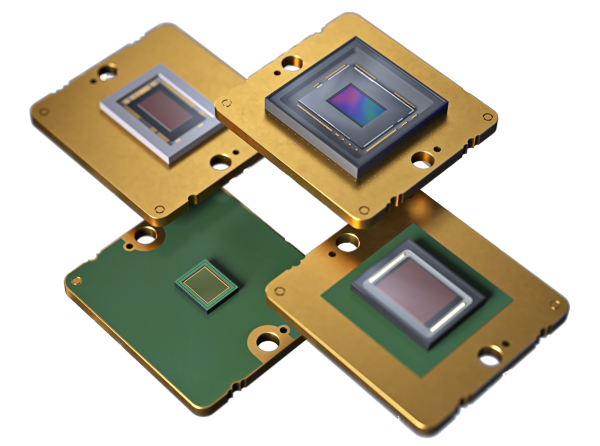

MIPI camera modules are available with various image sensors; they are compact and can be flexibly deployed with all common processor boards (Image: Vision Components)

Today’s state-of-the-art technologies are heterogeneous systems with multiple processing units: ARM CPUs and high-end FPGAs, as well as specialised units such as DSPs, graphics processing units (GPUs), tensor processing units (TPUs) developed especially for machine learning, and AI-optimised neural processing units (NPUs). When designing such systems, developers can deploy the processing units via system-on-modules (SoMs) that already contain all processors. Alternatively, they can combine different modules, which gives them greater freedom to select the best processor for each task.

Three ways to implement embedded vision

When designing a solution with embedded vision, developers can choose the level of integration that best fits their project: from MIPI camera modules, to complete embedded vision systems that combine image acquisition and processing in an ultra-compact form factor, to ready-to-use OEM solutions that can be flexibly adapted to the end application.

MIPI cameras: flexible, cost-effective and easy to integrate

MIPI camera modules have become a standard for innumerable industrial applications, driven by their widespread use in the consumer market and the broad support for the MIPI CSI-2 interface in modern embedded processors. MIPI camera modules are flexible, cost-effective and easy to integrate. They are available with sensors ranging from low-cost models to high-end sensors with 20 MP resolution and more, with global or global reset shutters. High-end sensors provide best-in-class image quality combined with high frame rates and the advantages of the MIPI interface. Developers choose their desired image sensor and, ideally, a supplier that also provides drivers as source code and accessories such as cables, lens holders, optics, lighting and adapter boards. With these, the camera modules can be deployed easily with all common processor boards that have a MIPI CSI-2 interface and integrated into the end product.
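Whether a given sensor, resolution and frame rate fit through a processor’s MIPI CSI-2 link is a quick back-of-the-envelope calculation: the raw pixel payload is width × height × bits per pixel × frame rate, and it must be covered by the number of data lanes times the per-lane rate. The Python sketch below is a lower-bound estimate under stated assumptions: it ignores blanking and CSI-2 packet overhead, and the sensor format (5000 x 4000 pixels, RAW10, 20 fps) and the 1.5 Gbit/s per-lane rate are hypothetical example values, not figures for any specific product. The function names are illustrative.

```python
import math


def csi2_payload_gbps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Raw pixel payload in Gbit/s.

    Lower-bound estimate: ignores line/frame blanking and CSI-2 packet overhead.
    """
    return width * height * bits_per_pixel * fps / 1e9


def min_lanes(payload_gbps: float, lane_rate_gbps: float) -> int:
    """Smallest number of data lanes whose combined rate covers the payload."""
    return math.ceil(payload_gbps / lane_rate_gbps)


if __name__ == "__main__":
    # Hypothetical example: 20 MP sensor (5000 x 4000 pixels), RAW10, 20 fps.
    payload = csi2_payload_gbps(5000, 4000, 10, 20)
    print(f"payload: {payload:.1f} Gbit/s")  # payload: 4.0 Gbit/s
    # Hypothetical per-lane rate of 1.5 Gbit/s; check the processor's datasheet.
    print("lanes needed:", min_lanes(payload, 1.5))  # lanes needed: 3
```

In practice, the supported lane count and maximum per-lane rate come from the processor and sensor datasheets, and some headroom should be left for the protocol overhead this sketch ignores.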

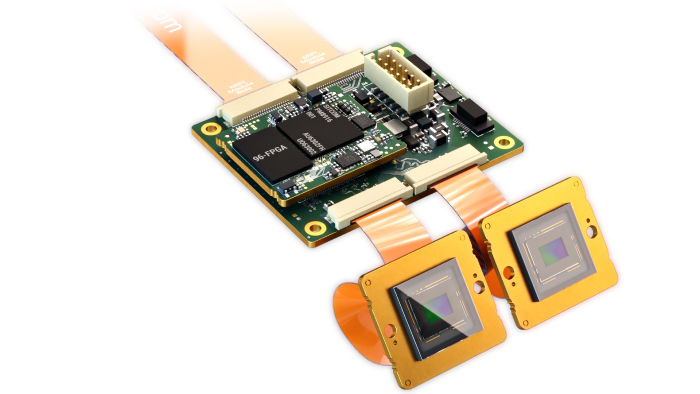

VC Power SoM is a small FPGA-based hardware accelerator for complex image processing, measuring only 2.8 x 2.4 cm. It can be integrated easily, quickly and cost-effectively into embedded vision electronics, either as a component directly in the mainboard design or, as shown in the picture, with an interface board (Image: Vision Components)

For even faster designs, VC recently presented the VC Power SoM, an FPGA-based hardware accelerator module that can be inserted into MIPI data streams. The tiny module, measuring only 28 mm x 24 mm, performs complex image processing calculations and transfers the results directly to a main processor board. It can be integrated directly into embedded vision mainboard designs as a module or combined with an I/O board with multiple MIPI interfaces. OEMs benefit from the VC Power SoM’s mature FPGA technology and comprehensive image processing functionality, while remaining free to choose the embedded processor board and use its full computing power for the main application.

Board cameras including CPUs: optimally matched components

The next higher level of integration is board cameras, which combine camera modules and multi-core processing units. Either all components, including one or more image sensors, are integrated on a single board, or the camera modules are connected to the processor board via FPC cables. Both setups are ultra-compact, with perfectly matched components adapted to their respective applications, and can be designed into larger systems. Often, the processor of these systems can also run customer-specific applications based on the image data. For mass production or industrial applications, individual adaptations can be made, and custom developments for special requirements are also possible, including housing and software. An advantage of board cameras is their proven design, which shortens development cycles and helps keep projects within budget.

Ready-to-use embedded vision systems: ideal choice for standard applications

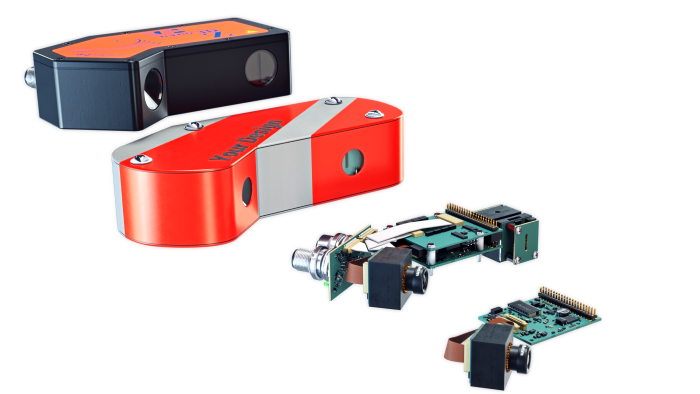

The same holds true for turnkey OEM solutions that can be utilised for many standard applications. A perfect example are profile sensors that combine a laser triangulation module and a smart embedded vision system and that can take over tasks such as 3D-scans and volume measurement, optical quality inspection and high-precision positioning of automatic guided robotics etc.

Embedded vision systems can be ultra-compact, dispense with all unnecessary components and, thanks to onboard data processing, do not require an external computing unit. They are available in numerous variants, from electronics kits to ready-to-use complete systems for numerous industrial applications. (Image: Vision Components)
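To illustrate the laser triangulation principle behind such profile sensors, here is a minimal Python sketch; it is not Vision Components’ implementation. It assumes a simplified geometry: the camera looks straight down, the laser sheet is inclined by a known angle θ, and a calibrated, distortion-free image scale (`mm_per_px`) is available. Under these assumptions, a height change Δz shifts the laser line sideways by Δz · tan θ, so Δz = Δrows · mm_per_px / tan θ. The helper names `laser_peak_rows` and `heights_mm` and all parameter values are hypothetical.

```python
import math
from typing import List, Optional

Image = List[List[int]]  # grayscale image indexed as image[row][col]


def laser_peak_rows(image: Image, threshold: int = 50) -> List[Optional[float]]:
    """Return, per column, the sub-pixel row of the laser line.

    The peak is the intensity-weighted centroid of the brightest pixel and its
    two vertical neighbours; columns whose peak is below `threshold` give None.
    """
    rows, cols = len(image), len(image[0])
    peaks: List[Optional[float]] = []
    for c in range(cols):
        column = [image[r][c] for r in range(rows)]
        r_max = max(range(rows), key=lambda r: column[r])
        if column[r_max] < max(threshold, 1):
            peaks.append(None)  # no laser line visible in this column
            continue
        lo, hi = max(r_max - 1, 0), min(r_max + 1, rows - 1)
        window = range(lo, hi + 1)
        total = sum(column[r] for r in window)
        peaks.append(sum(r * column[r] for r in window) / total)
    return peaks


def heights_mm(peaks: List[Optional[float]], ref_row: float,
               mm_per_px: float, laser_angle_deg: float) -> List[Optional[float]]:
    """Convert line displacement to height with the simplified model
    dz = (row - ref_row) * mm_per_px / tan(theta).

    The sign convention depends on how camera and laser are mounted.
    """
    tan_theta = math.tan(math.radians(laser_angle_deg))
    return [None if p is None else (p - ref_row) * mm_per_px / tan_theta
            for p in peaks]


if __name__ == "__main__":
    # Synthetic 10 x 3 image: line at row 4 (col 0), centred on row 6 (col 1),
    # and no line in col 2.
    img = [[0] * 3 for _ in range(10)]
    img[4][0] = 200
    img[5][1], img[6][1], img[7][1] = 100, 200, 100
    peaks = laser_peak_rows(img)
    print(peaks)  # [4.0, 6.0, None]
    print(heights_mm(peaks, ref_row=4.0, mm_per_px=0.1, laser_angle_deg=45.0))
```

Real profile sensors typically replace this linear model with a full calibration that accounts for perspective and lens distortion; the centroid peak finder is just one common, simple choice for locating the line.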

These systems are often customisable in terms of housing and labelling and can be adapted to specific project requirements with customer software. Given their high level of prefabrication, these systems enable the shortest time to market.

The future is embedded!

Be it MIPI cameras or turnkey solutions, there is growing demand for embedded vision in all markets, for both consumer products and industrial applications. Smaller and more powerful embedded vision systems are being used in more and more applications, from smart agriculture and intelligent traffic systems to smart consumer appliances, factory automation and logistics. Health, medical and life sciences also make use of the data depth provided by advanced embedded vision systems. The range of components tailored to their respective fields and applications is growing accordingly. Another trend is towards mobile applications that do not require a connection to an external computing unit and can therefore be used extremely flexibly.

With the high computing power of modern embedded processors, embedded vision systems are now suitable for all applications that previously relied on PC-based systems. They also offer decisive advantages: Embedded vision systems are ultra-compact and can be seamlessly integrated into devices. They are self-sufficient, require little energy and dispense with all components that are not needed. This makes them ideal for edge, handheld and mobile devices.

Jan-Erik Schmitt is Vice President of Sales at Vision Components. He was promoted to this position in 2008 and is responsible for the company’s strategic development worldwide. Schmitt has continuously expanded the global sales network and, as key account manager, led numerous embedded vision projects to success.