Greg Blackman reports on CSEM's Witness IOT camera, an ultra-low power imager that can be deployed as a sticker. Dr Andrea Dunbar presented the technology at the Image Sensors Europe conference in London in March.

As demonstrations of Internet-of-Things devices go, it was a neat one: Dr Andrea Dunbar had stuck an unobtrusive camera to the plinth from which she gave her presentation at Image Sensors Europe in London on 13 March, a fitting finishing touch to her description of technology designed to be positioned anywhere and everywhere.

Dunbar, who works for Swiss research organisation CSEM, pointed out the tiny camera at the end of her talk: ‘I put one here,’ she said, ‘I don’t know whether you noticed it. So you’re currently being videoed.’

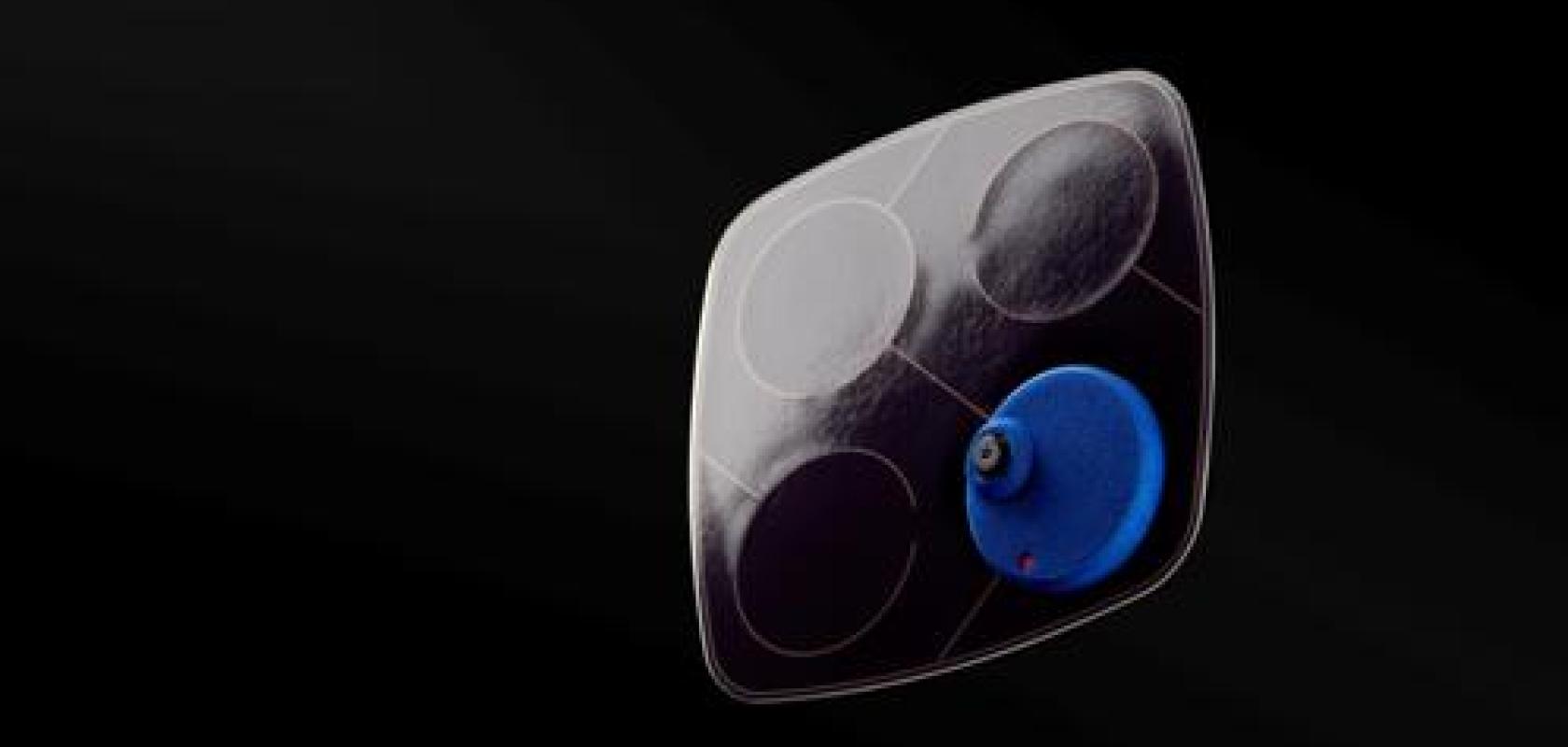

CSEM has developed the Witness IOT camera for security and other Internet-of-Things (IoT) applications. Its unique selling point is that it can be deployed as a sticker, and it shows how an imaging device can be optimised to keep power consumption and cost low enough to act as an autonomous, smart, and useful data logger.

Witness combines an ultra-low power imager, photovoltaic power supply, and wireless reporting. The electronics are thin (200µm) and flexible, and the camera is built using low-cost components, although the imager was developed specifically for the project. The camera operates based on motion detection, only recording when activity is happening in the scene.

Security tasks, applications in healthcare – to trigger alerts if elderly people fall, for instance – and people counting in buildings, are all potential uses of this technology.

Dunbar explained the requirements of the Witness project: ‘We need a low-power imager that will work in general lighting conditions, which are quite extreme, so it needs to have a high dynamic range. It also needs embedded intelligence, and to do some wireless reporting. From the data, you will get a feedback loop into actuation or reporting. All of this needs to be under a certain power [consumption].’

The camera, built around an imager called Ergo, is QVGA resolution (320 x 320 pixels); it has 120dB dynamic range and a 107° field of view using a special lens, and it detects motion on sub-sampled images.

‘We first tried to buy an imager,’ recalled Dunbar, ‘because we believe in buying low-cost hardware, but we couldn’t find an imager with sufficient dynamic range coupled with resolution and power budget, so we decided to make our own called Ergo.’

The 10-bit Ergo imager has logarithmic encoding; it has an on-chip frame memory and time-to-voltage integration, and consumes 700µW of power at 10fps. The imager is able to read out regions of interest and uses a supply voltage of 1.8V.
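To put those figures in perspective, the short sketch below converts them into energy per frame and average supply current. It assumes, purely for illustration, that the quoted 700µW is an average drawn entirely from the 1.8V rail; the function names are hypothetical and not part of any CSEM software.

```python
# Figures quoted in the article for the Ergo imager.
POWER_W = 700e-6       # 700 µW at 10 fps
FRAME_RATE_HZ = 10.0   # frames per second
SUPPLY_V = 1.8         # supply voltage

def energy_per_frame_j(power_w, frame_rate_hz):
    """Average energy spent on each frame, in joules."""
    return power_w / frame_rate_hz

def supply_current_a(power_w, supply_v):
    """Average current, assuming all power comes from one rail (an assumption)."""
    return power_w / supply_v

energy_uj = energy_per_frame_j(POWER_W, FRAME_RATE_HZ) * 1e6
current_ua = supply_current_a(POWER_W, SUPPLY_V) * 1e6
print(f"{energy_uj:.0f} µJ per frame, {current_ua:.0f} µA average supply current")
```

Under these assumptions the imager spends roughly 70µJ on each frame and draws a little under 0.4mA on average.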

Ergo acquires an image and then performs background subtraction to determine whether there’s movement in the scene. If there’s no motion, the imager returns to sleep mode to save power until it is time to acquire the next frame. If there is motion, only the change in the scene is compressed and stored in the onboard flash memory. It runs at 1fps. ‘Only a change [in the scene] requires a response, and this is absolutely key to the low-power part [of the device],’ Dunbar said.
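The acquire, subtract, and sleep-or-store loop described here can be sketched in a few lines. This is a minimal illustration, not CSEM's implementation: the threshold, sub-sampling step, and minimum pixel count are hypothetical, frames are plain lists of 10-bit integer values, and a list of changed pixels stands in for the compression step, which the article does not describe.

```python
def subsample(frame, step):
    """Keep every `step`-th pixel in both directions."""
    return [row[::step] for row in frame[::step]]

def changed_pixels(frame, background, threshold):
    """Return (row, col, value) for pixels differing from the background."""
    return [
        (r, c, value)
        for r, (row, bg_row) in enumerate(zip(frame, background))
        for c, (value, bg) in enumerate(zip(row, bg_row))
        if abs(value - bg) > threshold
    ]

def process_frame(frame, background, step=2, threshold=16, min_pixels=2):
    """Detect motion on a sub-sampled image; return only the change, or None."""
    small = subsample(frame, step)
    small_bg = subsample(background, step)
    if len(changed_pixels(small, small_bg, threshold)) < min_pixels:
        return None  # no motion: the caller would go back to sleep
    # Motion found: keep only what changed at full resolution.
    return changed_pixels(frame, background, threshold)

# Hypothetical 8 x 8 scene with a bright 4 x 4 object entering the corner.
background = [[100] * 8 for _ in range(8)]
frame = [row[:] for row in background]
for r in range(4):
    for c in range(4):
        frame[r][c] = 200

static_result = process_frame(background, background)
motion_result = process_frame(frame, background)
```

Running the cheap check on the sub-sampled image first means the full-resolution comparison is only paid for when something in the scene has actually moved.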

‘This is all achieved using rather poorly optimised COTS components at the moment,’ she added. ‘We believe we can still drastically reduce the processing consumption of the hardware.’

The team at CSEM also wants to implement machine learning on the device to optimise processing. ‘We need embedded intelligence on our [IoT] devices, because we’re not going to be able to send videos or photos with the power budget we have,’ Dunbar explained. A 1 megapixel image holds about 1 million bytes of information at one byte per pixel, which is a huge amount to transmit. The idea is that a neural network would reduce the image data to just a few bytes containing only the information needed to make a decision.
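The scale of that reduction is easy to estimate for the Witness camera's own frames. The sketch below assumes a 320 x 320 frame with 10-bit pixels packed without padding, and a hypothetical four-byte result from the neural network; neither the packing nor the result size is stated in the talk.

```python
def raw_frame_bytes(width, height, bits_per_pixel):
    """Size of an uncompressed frame, rounding up to whole bytes."""
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8

# Resolution and bit depth quoted in the article for the Ergo imager.
frame_bytes = raw_frame_bytes(320, 320, 10)

# Hypothetical size of a decision-only message (e.g. a count plus flags).
RESULT_BYTES = 4

reduction = frame_bytes / RESULT_BYTES
print(f"{frame_bytes} bytes per raw frame, {reduction:.0f}x smaller as a result")
```

On these assumptions a raw frame is 128,000 bytes, so sending a four-byte result instead cuts the transmitted data by a factor of about 32,000.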

‘Intelligence at the edge … minimises the quantity of data stored and exchanged – we get more accurate information,’ Dunbar said. ‘It also makes it more secure and more private – we’re not transmitting images of the scene. Embedded intelligence is essential to have an autonomous IoT design.’

However, a key issue is that the software has to be able to perform image capture, analysis, and compression with very scarce resources.

Dunbar summed up: ‘The main achievement [of Witness] was to balance the whole system; we had to take into account that we had no motion, normal motion, or intensive motion, which would require different quantities of energy compared to what is gathered by the photovoltaic cell.’

The solar cell is not a standard photovoltaic device, Dunbar noted. CSEM has knowhow in photovoltaics and the module used in Witness is optimised for low-light conditions. However, the cost is still comparable to what’s available on the market, she said. To make an IoT device truly autonomous using solar power means having to live with a power budget of between 10µW and 100mW, she added.
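The balancing act Dunbar describes amounts to checking that average consumption across the different activity levels stays below what the solar cell harvests. The sketch below illustrates that check; the time fractions and power figures for each regime are invented for illustration and are not CSEM measurements.

```python
def average_power_w(mix):
    """Time-weighted average power for a list of (fraction_of_time, power_w)."""
    total_fraction = sum(fraction for fraction, _ in mix)
    if abs(total_fraction - 1.0) > 1e-9:
        raise ValueError("time fractions must sum to 1")
    return sum(fraction * power for fraction, power in mix)

def is_sustainable(mix, harvested_w):
    """True if the harvested power covers the average consumption."""
    return average_power_w(mix) <= harvested_w

# Hypothetical activity mix: (fraction of time, power drawn in watts).
activity_mix = [
    (0.90, 20e-6),   # no motion: mostly asleep
    (0.08, 300e-6),  # normal motion: some frames stored
    (0.02, 1e-3),    # intensive motion: continuous storing and reporting
]

avg_w = average_power_w(activity_mix)
print(f"average consumption: {avg_w * 1e6:.0f} µW")
print("sustainable at 100 µW harvested:", is_sustainable(activity_mix, 100e-6))
print("sustainable at 50 µW harvested:", is_sustainable(activity_mix, 50e-6))
```

With these made-up numbers the device averages about 62µW, which a 100µW harvest would sustain but a 50µW harvest would not, showing how sensitive the design is to both scene activity and available light.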

CSEM’s roadmap for Witness is to increase the resolution to VGA. ‘We’ve done some trials where we’re still going to be in the 1mW range for 10fps,’ Dunbar said. ‘This is an optimised system, and IoT systems are going to remain optimised if we want them to work. So we will be optimising either for people counting or detection, and we will be using machine learning hardware to extract information from the scenes.’
