Michał Czardybon, CEO of Adaptive Vision, on how to handle data and image annotation when working with deep learning

This article is not about deep learning frameworks, architectures, or even about applications. It is about common problems that appear all the time for teams developing deep learning solutions. Let’s start with an illustrative example of the kind of things discussed when working on such a project:

‘Hey John, where is the most up-to-date dataset for training our solution for our current project?’

‘I have a copy from site A, but I think that Alice has some new images from site B.’

‘Ok, I will ask her to upload it to our shared disk tomorrow. Today she is working remotely. By the way, the model currently in production was trained only with images from site A, right?’

‘I think so. But I know that Peter was adding some additional test cases from the lab. These were some important cases, because we had been getting some false positives earlier.’

‘Ok, but are defect annotations for these cases already verified by the customer?’

‘I think the customer had some remarks, but they still haven’t finished annotating yet.’

And so on.

Does this sound familiar? Every company working on computer vision projects nowadays has to decide how to share and manage image datasets, and how to use them to deliver products with consistent quality. Data itself is becoming at least as important as the code. It may be highly valuable, it may be confidential, it may need versioning. However, while we have clear industrial practices for managing code, a lot of work has yet to be done for managing image datasets and experiments.

There are a couple of ad hoc options to start with for the basic issue of storage and transfer. One of them is to create a shared disk space on a local server, where everyone can upload or download files. We call this local storage and it is actually quite common. Another possibility is to use an online storage service like Google Drive. For transferring images between customer and supplier, people may also use services like WeTransfer. Things get more complicated when the number of datasets grows or when permissions need to be controlled.

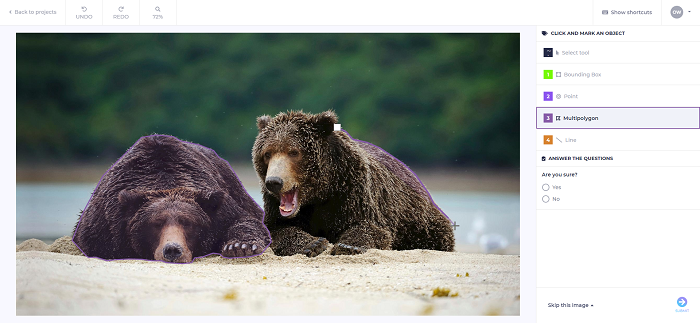

Annotations created within Zillin can be used to train deep learning models

When considering projects based on deep learning, there is the issue of working with data, but also of working with experiments.

First of all, data needs to be annotated for training. Individual companies have developed tools for annotating images, and then optimised them to make them effective when the amount of data becomes too large to be handled by one person. Creating, sharing and versioning annotations becomes another major issue.

Once datasets are available and images annotated, one can proceed with training the model. This is a very important step, but out of the scope of this article. After training, the next step is managing experiments, i.e. reproducible runs of training and inference on a given input, using a specific set of parameters.

As companies invested in developing deep learning models, new products have started to appear that solve the issues mentioned above. Some of the first products are dedicated services for dataset storage and annotation specialised for machine learning projects. One such a service is Zillin. It focuses on machine vision applications, where the team gets a common disk space for storing images and an online tool for annotating them.

The team starts by creating a workspace and adding people to it. Quite often people need to have different permissions and the customer wants to have a clear view of who is accessing what. This is done with role-based access levels.

The next step is to add datasets. These are like folders with images, with the small difference that when a dataset is published and used in a project, its content is tracked and cannot be changed. A project, then, is a set of object marking tools and the annotations assigned to individual images. Annotators and reviewers work within a project to finish preparing the data for training. Final results can be downloaded in the form of JSON files.

After training, the next step is managing experiments

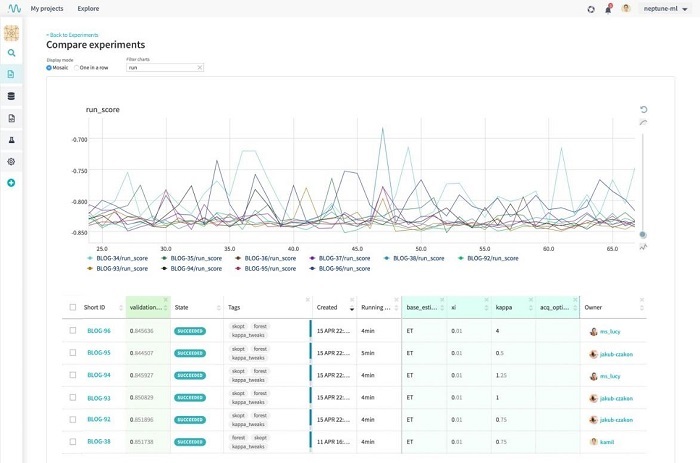

Annotations created with Zillin are later used to train deep learning models. Again, there are a multitude of frameworks for doing that, but one thing is common: when experiments are done in an ad hoc manner, they become difficult to track and oversee. What’s more, it may be difficult to track progress and reproduce results if there are many people working in the team with different dataset versions, project configurations and different sets of parameters. These problems are solved with experiment management tools such as Neptune.ai or its open-source alternatives, Sacred and MLflow.

A common approach when working with experiments involving large image datasets is to create metadata files that are lighter and easier to manage. These metadata files may contain the location of image files, annotation files (usually JSON or XML) and additional information like size, quality and other user-defined tags. Typically, one can store this information in a data frame, such as a CSV file, and identify it with an md5 digital signature. When working with one’s favorite deep learning framework, be it TensorFlow, PyTorch or any other, Neptune keeps track of these metadata, parameters and experiment results, making it fully repeatable and easy to browse.

--

Adaptive Vision recently acquired the online dataset annotation platform, Zillin.io. The product was developed by QZ Solutions, based in Opole, Poland. Zillin provides an easy way to mark objects or defects on images used for training deep learning models. It will complement Adaptive Vision’s existing portfolio of deep learning products, which includes software tools for industrial image analysis, and the Weaver inference engine for processing images at high speed.

Write for us

How do you manage data for deep learning projects for industrial inspection? Email: greg.blackman@europascience.com.

Further reading by the author

AI for industry - Michał Czardybon, general manager at Adaptive Vision, gives his view on how to make the most of deep learning in industrial vision