Gemma Church looks at how finding the right AI-assisted software can benefit specific vision tasks

The advent of artificial intelligence (AI) technology has helped multiple industries, and the imaging and machine vision market is no exception.

AI-assisted imaging is currently used in areas such as machine vision, manufacturing, agriculture and smart cities. Brandon Hunt, product manager at Teledyne Dalsa, explained: ‘Smart cities are an emerging application area as Industry 4.0 and 5G take charge. The resulting IoT infrastructure starts to enable more data and connectivity than ever before. All this extra data is rocket fuel for AI models to perform well.’

From food packages to flat panel displays, automotive parts and medical x-rays, AI-assisted inspection tools are now entering markets where standard algorithms struggle, whether because of high variation rates or changes in shape or lighting levels. Hunt defines these cases as ‘any area where the logic is fuzzy and requires a human’s judgement’.

However, AI is still fairly new to the imaging industry. Hunt explained: ‘There are still many companies out there who rely on humans for applications like defect inspection and they often are not even aware of what AI can do for them.’

As such, AI-assisted imaging still faces challenges in managing user expectations. ‘One of the biggest challenges is communicating what AI can or cannot do and explaining the process,’ Hunt continued. ‘There is often a gap between the technology and the customer’s expectations.’

For example, AI models follow a different workflow from traditional image processing, one that is iterative in nature. As a result, users must repeatedly run the workflow and analyse the results that are returned. ‘Some customers want specific levels of accuracy, but it’s hard to get that kind of information without trying it out first,’ Hunt added.

‘Then, if something goes wrong in the AI model, it’s not a straightforward fix. It usually requires experimentation and trying out different parameters, datasets – and requires an engineer to look into those areas.’

This is where the right platform can help, providing users with an intuitive interface and the tools to understand both how AI works and how it can benefit specific imaging applications.

Working together

The user experience is a key factor in increasing adoption rates and helping everyone understand the benefits of AI-assisted imaging tools.

To achieve this, these tools must integrate with existing, traditional image processing software. This provides users with the best of both worlds, allowing them to cater to their needs while also lowering the barrier to entry, streamlining the user experience and learning process.

The latest developments in tools such as Teledyne Dalsa’s Sapera Vision Software can provide field-proven image acquisition, control, image processing and artificial intelligence functions to help users design, develop and deploy high-performance machine vision applications. Sapera Vision Software includes Astrocyte, a GUI-based tool for training AI models.

Users can, for example, use the GUI-based Sherlock machine vision software in conjunction with Astrocyte. This provides them with a no-code environment, democratising these AI models for everyone.

Visualisation is another important means of helping users understand the AI-assisted tools with which they are working. In anomaly detection, such visualisations help users understand any detected defects by presenting their size and location in an intuitive manner.

An anomaly detection algorithm can robustly locate defects while generating output heatmaps. The provision of heatmaps at runtime is also a useful feature, helping users obtain the location and shape of defects without the need for graphical annotations at training.
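As a rough illustration of how a heatmap yields a defect's location and size, the minimal Python sketch below thresholds a heatmap and reports the bounding box and pixel area of the flagged region. The function name `defect_bbox`, the 0.5 threshold and the toy 8×8 heatmap are assumptions made for illustration only; they are not part of Sapera Vision Software or Astrocyte.

```python
import numpy as np

def defect_bbox(heatmap, threshold=0.5):
    """Return (row_min, col_min, row_max, col_max, area) for pixels whose
    anomaly score is at or above the threshold, or None if there are none.
    Hypothetical helper for illustration, not a vendor API."""
    mask = heatmap >= threshold
    if not mask.any():
        return None
    rows, cols = np.nonzero(mask)
    return (int(rows.min()), int(cols.min()),
            int(rows.max()), int(cols.max()),
            int(mask.sum()))

# Toy heatmap: background scores of 0 with one high-scoring patch.
heatmap = np.zeros((8, 8))
heatmap[2:4, 5:8] = 0.9

print(defect_bbox(heatmap))  # (2, 5, 3, 7, 6)
```

A real heatmap would typically contain several regions and some noise, so a production pipeline would usually label connected regions separately rather than report one box for the whole image.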

Teledyne Dalsa is also developing a tiling feature to allow users to work with larger image sizes and identify smaller defects. Previously, these smaller defects were often shrunk and lost when the image was resized for input to the neural network.
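The general idea behind tiling can be sketched in a few lines of Python: the large image is cut into overlapping, fixed-size tiles at full resolution, so a small defect keeps its original pixel size in whichever tile contains it. This is a generic sketch, not Teledyne Dalsa's implementation; the function names, the 256-pixel tile size and the 32-pixel overlap are all assumptions.

```python
import numpy as np

def tile_origins(length, tile, overlap):
    """Start offsets of tiles covering [0, length) along one axis.
    Neighbouring tiles share at least `overlap` pixels."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    if length <= tile:
        return [0]
    stride = tile - overlap
    origins = list(range(0, length - tile, stride))
    origins.append(length - tile)  # final tile sits flush with the edge
    return origins

def split_into_tiles(image, tile=256, overlap=32):
    """Return a list of ((row, col), tile_array) pairs at full resolution."""
    height, width = image.shape[:2]
    return [((y, x), image[y:y + tile, x:x + tile])
            for y in tile_origins(height, tile, overlap)
            for x in tile_origins(width, tile, overlap)]

image = np.zeros((600, 1000), dtype=np.uint8)
tiles = split_into_tiles(image)
print(len(tiles))  # 15
```

The overlap is there so that a defect lying on a tile boundary still appears whole in at least one tile, provided it is smaller than the overlap.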

Time savings

By their very nature, AI-assisted image processing tools perform many of the repetitive, low-skill activities within image processing, providing users with more time to focus on value-added or more complex tasks. The resulting time savings are a key benefit for many users and businesses.

In terms of how AI-assisted imaging tools actually work, a system such as Sapera Vision Software offers continual learning, where the deployed AI model learns in the field. In other words, the user does not need to retrain and redeploy the model if any new cases occur after initial training is complete. The model can continuously adapt, even after it has already been deployed to runtime.

As a result, the AI models are able to train themselves quickly. With a sufficient amount of data, users can get a model up and running in as little as a few minutes.

‘This is a huge time saver for customers,’ Hunt explained. ‘One of the biggest issues in AI is that when a model is ineffective or new images are presented, then you must retrain the model. This replaces any previous work done and does not guarantee an improvement in the results. With continual learning, users can optimise the existing model with new information. This both saves a lot of time and keeps any previously saved efforts.’
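To make the distinction between retraining and continual learning more concrete, here is a deliberately simple Python sketch of an incrementally updated classifier. It keeps a running mean feature vector per class and adjusts it one sample at a time, so new examples (or entirely new classes) are absorbed without discarding earlier work. This is a textbook nearest-class-mean scheme chosen for illustration; it is an assumption and not a description of how Sapera Vision Software implements continual learning, and the class name and feature vectors are hypothetical.

```python
import numpy as np

class RunningClassMeans:
    """Nearest-class-mean classifier that is updated one sample at a time."""

    def __init__(self):
        self.means = {}   # label -> running mean feature vector
        self.counts = {}  # label -> number of samples seen

    def update(self, label, feature):
        """Fold one new labelled sample into the model without retraining."""
        feature = np.asarray(feature, dtype=float)
        if label not in self.means:
            self.means[label] = feature.copy()
            self.counts[label] = 1
        else:
            self.counts[label] += 1
            # Incremental mean: shift the old mean towards the new sample.
            self.means[label] += (feature - self.means[label]) / self.counts[label]

    def predict(self, feature):
        """Return the label whose running mean is closest to the feature."""
        feature = np.asarray(feature, dtype=float)
        return min(self.means,
                   key=lambda c: np.linalg.norm(self.means[c] - feature))

model = RunningClassMeans()
model.update("good", [0.0, 0.0])
model.update("good", [2.0, 0.0])
model.update("defect", [10.0, 10.0])

# A new case appears in the field: add it without starting over.
model.update("scratch", [20.0, 0.0])
print(model.predict([19.0, 1.0]))  # scratch
print(model.predict([1.0, 1.0]))   # good
```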

Time is a critical consideration, not just when running these AI models but also when labelling the images.

Using this type of software, for example, users can import a folder of images and group them all with a single label. The semi-supervised object detection (SSOD) functionality allows users to start with a certain number of images, label a few and then let the software automatically label the rest.
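The 'label a few, let the software label the rest' idea can be illustrated with a much simpler stand-in than object detection. The Python sketch below applies pseudo-labelling to feature vectors: each unlabelled item takes the label of the nearest class centroid, but only when that choice is clearly better than the runner-up. It is an assumption-laden toy, not the SSOD algorithm used in the software; the function name, the `min_margin` parameter and the sample data are all hypothetical.

```python
import numpy as np

def pseudo_label(features, labels, min_margin=0.5):
    """Fill in confident labels for unlabelled items.

    labels uses -1 for 'unlabelled'. An item is labelled only if its
    distance to the nearest class centroid beats the second nearest by
    at least min_margin; otherwise it stays -1 for a human to review.
    """
    labels = labels.copy()
    classes = np.unique(labels[labels >= 0])
    centroids = np.stack([features[labels == c].mean(axis=0) for c in classes])
    for i in np.nonzero(labels < 0)[0]:
        dists = np.linalg.norm(centroids - features[i], axis=1)
        order = np.argsort(dists)
        if len(classes) == 1 or dists[order[1]] - dists[order[0]] >= min_margin:
            labels[i] = classes[order[0]]
    return labels

features = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0],
                     [0.2, 0.1], [4.9, 5.2], [2.5, 2.5]])
labels = np.array([0, -1, 1, -1, -1, -1, -1])  # only two items hand-labelled

print(pseudo_label(features, labels).tolist())  # [0, 0, 1, 1, 0, 1, -1]
```

The last item sits midway between the two classes, so it is left unlabelled rather than guessed. That is the usual safeguard in semi-supervised schemes.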

Data quality

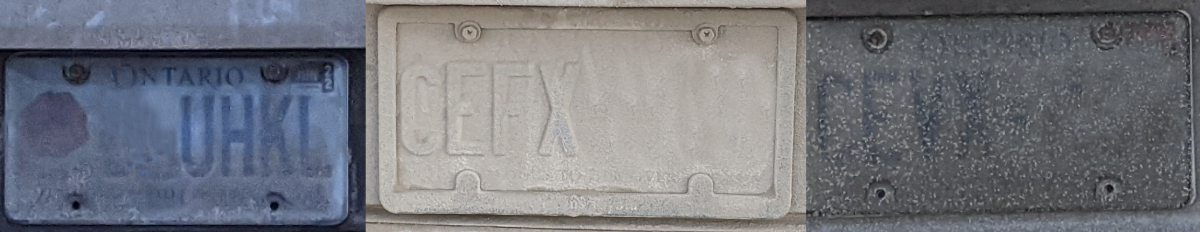

A licence plate obscured by dirt

When training any AI system, the quality of the data used is another crucial factor. For example, you may want to train an automatic number plate recognition (ANPR) imaging system to read the plates passing by on a motorway.

If the licence plate images are clear and can be easily identified by a human, then the AI system can also be easily trained. But if there is ambiguity in this data from, for example, faded number plates or those partially obscured by dirt, then there needs to be an agreement between the humans classifying those images. ‘If the humans can’t agree, then the AI model won’t do well,’ Hunt added.
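One common way to check whether human labellers agree before training is Cohen's kappa, which measures agreement between two annotators beyond what chance alone would produce. The Python sketch below is a minimal, generic implementation; the two annotators and their 'readable'/'unreadable' judgements are invented for illustration and do not come from the white paper.

```python
from collections import Counter

def cohen_kappa(a, b):
    """Cohen's kappa for two equal-length lists of labels."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Agreement expected if both annotators labelled at random
    # while keeping their own label frequencies.
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical judgements on eight dirty plates: r = readable, u = unreadable.
annotator_1 = ["r", "r", "r", "u", "u", "r", "u", "r"]
annotator_2 = ["r", "r", "u", "u", "u", "r", "r", "r"]

print(round(cohen_kappa(annotator_1, annotator_2), 3))  # 0.467
```

A value of 1 means perfect agreement and 0 means agreement no better than chance. A low score on ambiguous images suggests the labelling guidelines need tightening before the data is used for training.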

In its latest white paper, The Importance of Data Quality When Training AI, Teledyne Dalsa looks at this issue in more detail, examining the impact that data quality has on the training of an AI-assisted imaging tool.