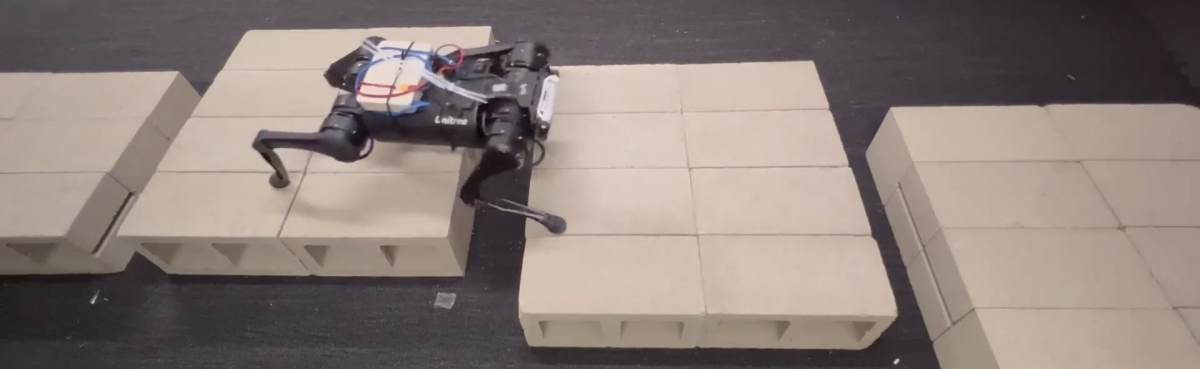

Researchers led by the University of California San Diego have developed a training model that enables four-legged robots to see more clearly in 3D and navigate challenging terrain such as stairs, rocky ground and gap-filled paths more easily.

The work will be presented next week at the 2023 Conference on Computer Vision and Pattern Recognition (CVPR), which takes place from 18-22 June in Vancouver, Canada.

The training model is designed for robots equipped with a forward-facing depth camera that is tilted downwards at an angle, giving it a good view of the terrain in front.

The model captures and examines a short 2D video sequence consisting of the current frame and a few previous frames. It then extracts 3D information from each 2D frame, including information about the robot’s leg movements such as joint angle, joint velocity and distance from the ground. The model compares the information from the previous frames with information from the current frame to estimate the 3D transformation between the past and the present. It then fuses all the information together.
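The alignment-and-fusion step described above can be illustrated with a minimal Python sketch. This is not the researchers' implementation. It assumes that each frame's 3D information is a small set of points and that the rigid transformation (rotation and translation) from a past frame to the current one is already known, whereas the actual model estimates both with learned networks. The names `rotation_z`, `to_current_frame` and `fuse_frames` are hypothetical.

```python
import numpy as np

def rotation_z(yaw):
    """Rotation matrix for a turn of `yaw` radians about the vertical (z) axis."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, 0.0],
                     [s,  c, 0.0],
                     [0.0, 0.0, 1.0]])

def to_current_frame(points, rotation, translation):
    """Map (N, 3) points from a past camera frame into the current frame.

    Assumption: the transform is rigid, i.e. p_current = R @ p_past + t.
    """
    return points @ rotation.T + translation

def fuse_frames(current_points, past_points_list, transforms):
    """Align every past frame to the current one, then stack all points.

    Assumption: fusion is plain concatenation; a learned model would
    combine feature volumes instead.
    """
    aligned = [current_points]
    for points, (rotation, translation) in zip(past_points_list, transforms):
        aligned.append(to_current_frame(points, rotation, translation))
    return np.concatenate(aligned, axis=0)
```

In this toy version, a point seen one metre ahead in a past frame ends up at the correct place in the current frame once the robot's turn and displacement between the two frames are applied, which is the sense in which information from several frames is brought into a single 3D representation.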

The model then uses the current frame to synthesise the previous frames. Following this, as the robot moves, the model checks the synthesised frames against the frames that the camera has already captured. If they are a good match, then the model knows it has learned the correct representation of the 3D scene. Otherwise, it makes corrections until it succeeds.
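The check-and-correct loop can be sketched as a toy example in Python. It rests on strong assumptions that are not from the paper: "synthesising" a previous frame is reduced to shifting the current image sideways by a whole number of pixels, and "making corrections" is reduced to trying a handful of candidate shifts and keeping the one that best matches the frame the camera actually captured. The real model is trained with learned networks rather than a brute-force search, and the names `synthesise_previous`, `reconstruction_error` and `best_shift` are hypothetical.

```python
import numpy as np

def synthesise_previous(current_frame, shift):
    """Toy view synthesis: predict a past frame by shifting columns by `shift` pixels."""
    return np.roll(current_frame, shift, axis=1)

def reconstruction_error(synthesised, captured):
    """Mean absolute difference between a synthesised frame and a captured one."""
    return float(np.mean(np.abs(synthesised - captured)))

def best_shift(current_frame, captured_previous, candidates):
    """Return the candidate shift whose synthesised frame best matches the captured frame."""
    candidates = list(candidates)
    errors = [
        reconstruction_error(synthesise_previous(current_frame, s), captured_previous)
        for s in candidates
    ]
    best = int(np.argmin(errors))
    return candidates[best], errors[best]
```

A low reconstruction error plays the role of the "good match" in the description above: it signals that the guess about how the scene moved between frames is consistent with what the camera recorded.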

The 3D representation is then used to control the robot’s movement. By synthesising visual information from the past, the robot is able to remember what it has seen, as well as the actions its legs have taken before, and use that memory to inform its next moves.

“Our approach allows the robot to build a short-term memory of its 3D surroundings so that it can act better,” said Xiaolong Wang, a professor of electrical and computer engineering at the UC San Diego Jacobs School of Engineering. “By providing the robot with a better understanding of its surroundings in 3D, it can be deployed in more complex environments in the real world.”

Robots trained using the model are able to remember what they have seen and the actions their legs have taken before, and use that memory to inform their next moves.

In previous work, the researchers developed algorithms that combine computer vision with proprioception – which involves the sense of movement, direction, speed, location and touch – to enable a four-legged robot to walk and run on uneven ground while avoiding obstacles. The advance here is that by improving the robot’s 3D perception (and combining it with proprioception), the researchers show that the robot can traverse more challenging terrain than before.

“What’s exciting is that we have developed a single model that can handle different kinds of challenging environments,” said Wang. “That’s because we have created a better understanding of the 3D surroundings that makes the robot more versatile across different scenarios.”

The new approach does have its limitations, however. Wang notes that the team's current model does not guide the robot to a specific goal or destination. When deployed, the robot simply takes a straight path and, if it sees an obstacle, avoids it by walking away along another straight path. “The robot does not control exactly where it goes,” he said. “In future work, we would like to include more planning techniques and complete the navigation pipeline.”

This work, described in a paper titled “Neural Volumetric Memory for Visual Locomotion Control”, was supported in part by the National Science Foundation, an Amazon Research Award and Qualcomm.

Images: Ruihan Yang / UC San Diego Jacobs School of Engineering