With January around the corner, much of the technology world’s focus will be on CES, the consumer electronics show in Las Vegas, where almost every major brand will exhibit its latest wares.

Imaging technologies have traditionally played a key role at CES, particularly of late, with virtual and augmented reality among the most talked-about technologies at the event in both 2016 and 2017. And, of course, the consumer sector, notably the smartphone market, has made imaging technologies ubiquitous, which in turn has led industry, agriculture, healthcare and research to exploit them.

Artificial reality

According to ABI Research, augmented reality (AR) systems – in which data is overlaid on clear screens or live video – are set to play a significant role in several industrial sectors, with considerable growth predicted by 2022. The research house expects shipments of AR smart glasses to generate around $220 million this year, with the logistics sector alone accounting for roughly a quarter ($52.9 million) of this. By 2022, shipments to the logistics sector are forecast to reach $4.4 billion.
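The ‘quarter’ figure and the scale of ABI’s logistics forecast can be checked with a few lines of arithmetic. This is a back-of-envelope sketch, not ABI’s model, and it assumes that ‘this year’ means 2017, so the 2022 figure lies five years out.

```python
# Back-of-envelope check of the ABI Research figures quoted above.
# Assumption: "this year" is 2017, so 2022 is five years away.
total_2017 = 220.0       # $ million, all AR smart-glass shipments
logistics_2017 = 52.9    # $ million, logistics share
logistics_2022 = 4400.0  # $ million ($4.4 billion)

share = logistics_2017 / total_2017
years = 2022 - 2017
# Compound annual growth rate implied by the start and end values.
cagr = (logistics_2022 / logistics_2017) ** (1 / years) - 1

print(f"Logistics share in 2017: {share:.1%}")
print(f"Implied compound annual growth rate: {cagr:.0%}")
```

That works out at about 24 per cent of the 2017 total, and an implied growth rate of roughly 140 per cent a year for logistics.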

According to Marina Lu, senior analyst at ABI, AR systems let logistics companies streamline their work processes and reduce errors. One benefit Lu cited is pick-by-vision, which ‘frees workers’ hands of traditional paper lists and picking instructions [while in the warehouse].’ AR can also provide remote expertise: according to Lu, real-time remote support can ‘dramatically reduce travel costs and optimise resources’.

DHL is among the first companies to implement the technology widely, and last year began the second phase of trials for its AR programme, using Google and Vuzix smart glasses. DHL hasn’t specified which Vuzix system it uses, but Vuzix’s clear-lens, Android-based M300 smart glasses show data on an HD display and combine input from a 1080p camera, three-axis gyros, an accelerometer and a compass to work out what the wearer is looking at.
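The Vuzix description mentions combining gyros, an accelerometer and a compass to work out where the wearer is looking. Neither DHL nor Vuzix describes the fusion algorithm, so the following is only a minimal sketch of one common approach, a complementary filter, reduced to heading (yaw) alone: the gyro rate is integrated for a fast response, and the estimate is nudged towards the compass heading to cancel gyro drift. The helper names and the weighting `alpha = 0.98` are illustrative assumptions.

```python
def wrap_angle(deg):
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


def complementary_yaw(yaw, gyro_rate_dps, compass_deg, dt, alpha=0.98):
    """One update of a yaw-only complementary filter (hypothetical helper).

    yaw           -- previous heading estimate, degrees
    gyro_rate_dps -- gyroscope yaw rate, degrees per second
    compass_deg   -- magnetometer heading, degrees
    dt            -- time step, seconds
    alpha         -- weight given to the integrated gyro (assumed value)
    """
    predicted = yaw + gyro_rate_dps * dt         # responsive, but drifts
    error = wrap_angle(compass_deg - predicted)  # noisy, but drift-free
    return wrap_angle(predicted + (1.0 - alpha) * error)


# Simulate a stationary head facing 30 degrees, with a gyro that has a
# constant bias of 1 degree per second, sampled at 100 Hz for 10 seconds.
yaw = 30.0
for _ in range(1000):
    yaw = complementary_yaw(yaw, gyro_rate_dps=1.0, compass_deg=30.0, dt=0.01)
print(round(yaw, 2))
```

Under these assumptions the estimate settles about half a degree from the true 30° heading, whereas integrating the gyro alone would have drifted 10° over the same 10 seconds.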

In its announcement, DHL said: ‘Pickers are equipped with advanced smart glasses, which visually display where each picked item needs to be placed on the trolley. Vision picking enables hands-free order picking at a faster pace, along with reduced error rates.’

Outside logistics, Eric Abbruzzese, a principal analyst at ABI Research, identified design and manufacturing as a promising sector for AR, ‘but [this] hasn’t taken off as quickly as logistics’. He added that AR will eventually take hold in healthcare, but that regulations and tight budgets are delaying adoption in that sector.

In late September, Ford announced what Abbruzzese calls ‘a key moment for the automotive [and] manufacturing industry’. The company has partnered with Microsoft to use the Hololens AR platform in its design process. According to Ford, this allows the company’s designers to ‘see several digital designs and parts as if these were already incorporated into a physical vehicle.’ The company says this helps the designers understand how a new design will work, such as how the design of a wing mirror affects visibility, and see multiple examples in minutes, rather than weeks.

Abbruzzese said: ‘Ford is the first company [using AR] at this scale.’ He added that while Audi and a few others have piloted AR, none has deployed it at a global scale.

The Hololens combines an inertial measurement unit (accelerometer, gyroscope and magnetometer), microphones and ambient light sensors with six cameras: four environment-understanding cameras, a depth-sensing camera with a 120 x 120° field of view, and a 2.4 megapixel photographic video camera that records 1080p HD video. Imagery is shown on see-through holographic lenses, driven by two HD 16:9 light engines.

‘In terms of their choice of device, I think Hololens remains the most prominent thanks to the Microsoft tie-in. We’ve seen hardware competitors on a similar level to Hololens – Daqri and ODG’s latest devices come to mind – but Windows is a well understood and ubiquitous platform, which can’t be ignored,’ Abbruzzese commented.

Tracing ancestry

Of course, no examination of consumer technology and its evolving use would be complete without a reference to the iPhone. Apple recently unveiled its latest model, the iPhone X, complete with deep-learning face-recognition technology, used to unlock the handset. This both highlights the advancing computational power and imaging capabilities available in smartphones, and opens the door to medical companies seeking to offer either ancestral history services or genetic diagnoses through dysmorphology analysis.

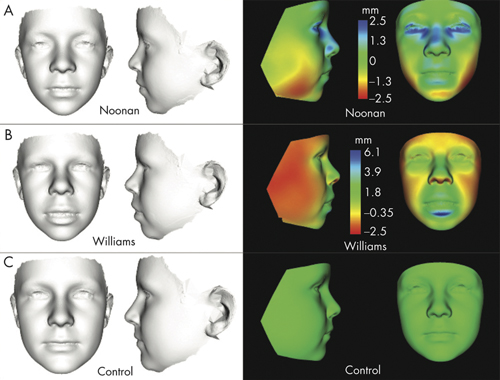

Professor Peter Hammond, of UCL’s Institute of Child Health, is a pioneer in computational dysmorphology. In his 2007 paper in the BMJ journal Archives of Disease in Childhood, ‘The use of 3D face shape modelling in dysmorphology’, Hammond set out how computers could analyse 2D photos or 3D scans more effectively, quantifying deviations from the mean face to identify conditions that present with dysmorphic facial features. The paper compared laser-scanned 3D faces of people with Noonan, Williams, fragile X and velocardiofacial syndromes.
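The idea of quantifying deviations from the mean face can be illustrated with a much-simplified sketch. It assumes the faces have already been aligned and reduced to a handful of matching 3D landmarks; real systems model whole face surfaces statistically, which this does not attempt, and the function names are hypothetical. The sketch averages a set of control faces, then expresses how far each landmark on a new face sits from that average in standard deviations of the control group.

```python
import math
from statistics import mean, stdev


def mean_face(faces):
    """Average each 3D landmark across a set of pre-aligned faces.

    faces -- list of faces; each face is a list of (x, y, z) landmarks
    in the same order and the same coordinate frame.
    """
    n_landmarks = len(faces[0])
    return [tuple(mean(face[i][k] for face in faces) for k in range(3))
            for i in range(n_landmarks)]


def landmark_z_scores(face, controls):
    """How unusual each landmark's distance from the mean face is,
    in standard deviations of the control group (hypothetical helper)."""
    avg = mean_face(controls)
    scores = []
    for i, point in enumerate(face):
        # Distances of every control's landmark i from the mean landmark i.
        spread = [math.dist(c[i], avg[i]) for c in controls]
        deviation = math.dist(point, avg[i])
        scores.append((deviation - mean(spread)) / stdev(spread))
    return scores


# Toy data: five control faces with two landmarks each (units: mm).
offsets = [(0.1, 0, 0), (-0.1, 0, 0), (0, 0.1, 0), (0, -0.1, 0), (0, 0, 0.2)]
controls = [[(dx, dy, dz), (10 + dx, dy, dz)] for dx, dy, dz in offsets]
patient = [(0.0, 0.0, 0.04), (12.0, 0.0, 0.0)]  # second landmark displaced
print([round(z, 1) for z in landmark_z_scores(patient, controls)])
```

The displaced second landmark scores far above the control spread, while the first, sitting on the mean, scores below it. A distance-based score like this ignores the direction of a deviation, one of several reasons practical systems use full statistical shape models instead.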

Research has continued since, and commercial services such as Face2Gene have launched to offer computational dysmorphology diagnoses of genetic conditions from a photo. The service’s website states that it uses ‘deep learning algorithms [to] build syndrome-specific computational-based classifiers’, along with ‘real world phenotype data crowd-sourced by expert genetics professionals’. The company also offers a smartphone app, albeit one ‘designated to be used solely by healthcare professionals’.
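Face2Gene does not publish its classifiers, so the following only illustrates the general shape of a syndrome-specific classifier, using a deliberately simple swapped-in technique: nearest-centroid ranking. It assumes each face has already been turned into a numeric feature vector (in a deep-learning system, by a trained network this sketch does not include); the labels, vectors and function names are all hypothetical.

```python
import math


def centroid(vectors):
    """Element-wise mean of a list of equal-length feature vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def rank_syndromes(query, training):
    """Rank labels by distance from the query vector to each label's centroid.

    training -- dict mapping a label (e.g. a syndrome name) to a list of
    feature vectors from labelled examples. Closest label comes first.
    """
    centroids = {label: centroid(vecs) for label, vecs in training.items()}
    return sorted(centroids, key=lambda label: math.dist(query, centroids[label]))


# Toy two-dimensional "features"; real embeddings have many more dimensions.
training = {
    "syndrome A": [[0.0, 0.0], [0.2, 0.1]],
    "syndrome B": [[5.0, 5.0], [5.2, 4.9]],
    "unaffected": [[2.0, -3.0], [2.1, -2.8]],
}
print(rank_syndromes([4.8, 5.1], training))
```

Returning a ranked list rather than a single label reflects the point made later in the article: a computed match is a suggestion for a clinician to weigh, not a diagnosis.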

Researchers at UCL's Institute of Child Health are using 2D photos or 3D scans to make genetic syndrome diagnoses from dysmorphic facial features. (Image: UCL)

Professor Ruth Newbury-Ecob, consultant in clinical genetics at the University Hospitals Bristol NHS Foundation Trust and a dysmorphology expert, said that 2D photos can indeed be used to identify several conditions, from chromosomal conditions such as Down’s syndrome to metabolic disorders and cancer predisposition syndromes, such as those caused by mutations in PTEN (Cowden syndrome), which increase the risk of breast and thyroid cancer. She added that computers will be able to recognise more conditions, and with greater subtlety, but that artificial intelligence will be needed to do so accurately, taking into account everything a clinician would.

Would the iPhone’s camera be good enough for accurate analysis? The marketing photos on Face2Gene’s website show an iPhone 4 or 4S, which capture 5 and 8 megapixel images respectively. Newbury-Ecob said that some genetic conditions are prominent enough to have been identified even in North American totem-pole and whale-bone carvings, but that it depends on the condition. ‘Some facial dysmorphisms are very subtle … and overlap with the range of normal. For those, you’d need better quality imaging,’ she said.

But Newbury-Ecob was also keen to point out the risks that come with services such as Face2Gene and the ability to use consumer technology to deliver them.

Genetic testing services such as 23andMe and Ancestry.co.uk exist to allow consumers to find out more about their heritage and health risks. So, would a consumer use a service like Face2Gene? ‘[I’m] absolutely certain,’ Newbury-Ecob said. ‘People use Google [to diagnose own conditions]. So someone on [a] neonatal unit [whose baby was] a little bit floppy or not feeding as well, and the paediatrician then comes round and says “I wonder if there’s an underlying condition”, parents can go on to Google, and could go on to [such a service].’

But this also raises the question of whether people really want to know. ‘You’re not just diagnosing a facial appearance, [but] a genetic disorder. And that genetic disorder has all sorts of information,’ Newbury-Ecob continued. ‘So you may be diagnosing a risk of associated malformations. You may be inferring an associated risk for one or another parent. Or their children. Or the extended wider family. And that might change the way they manage during their pregnancy, or their reproductive options.’

Here in the UK, as in many countries, genetic counselling is provided through the health service before testing takes place. This helps patients understand the consequences of knowing or not knowing the information, not just for managing the condition but also in terms of the psychological effects of a positive diagnosis. Services like 23andMe, however, simply state: ‘If you are concerned about your data, or want to explore a connection between your genetics and your medical history, you should contact your physician or other healthcare provider.’ Those psychological effects could be compounded by the lower accuracy of a diagnosis based on computational dysmorphology alone.

‘There are great concerns of what happens when you make the wrong diagnosis,’ Newbury-Ecob said. ‘The very best dysmorphologists only make a diagnosis in 30 per cent of cases,’ and ethnicity adds to the complexity. ‘Most of the data we have on facial measurements are biased towards Caucasians, because of the greater amount of published data.

‘There are datasets of the normal facial measurements and normal facial patterns for a number of ethnic groups,’ she added. ‘[But] what we see in the UK, though, is a huge amount of mixing, and so … [the background population] is harder to know. And this would worry me … if they’re offering it in Brazil versus Holland, because the background characteristics will be very different.’
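Newbury-Ecob’s concern about wrong diagnoses is sharpened by base rates: when a condition is rare, even an accurate screening tool produces mostly false positives. The sketch below applies Bayes’ rule to compute the positive predictive value, the chance that a positive result is correct. The prevalence, sensitivity and specificity are purely hypothetical and are not figures for Face2Gene or any other service.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """Probability that a positive result is a true positive (Bayes' rule)."""
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * (1 - specificity)
    return true_pos / (true_pos + false_pos)


# Hypothetical: a condition affecting 1 in 10,000 people, screened with a
# tool that is 95% sensitive and 99% specific.
ppv = positive_predictive_value(1 / 10_000, 0.95, 0.99)
print(f"{ppv:.1%} of positive results would be correct")
```

Under these hypothetical figures, fewer than 1 in 100 positive results would be correct, because false positives from the large unaffected population swamp the few true cases.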

Is consumer tech good enough?

Will we see greater adoption of VR, AR and smartphone-based systems for industrial applications and research? ABI has forecast significant growth of AR in the logistics market, and according to ABI’s Abbruzzese: ‘successful reports from a Ford-scale company [following the Hololens trial] will be a boon for AR as a whole, and especially for those looking to invest for design and manufacturing implementations.’

Elsewhere, at Vision 2016, Sony Europe’s Image Sensing Solutions division demonstrated applications running its UMC cameras, which are based on consumer-grade technology but adapted for the B2B space. Sony stated that a common, though not the only, use would be in drones; the modules feature an APS-C size Exmor CMOS sensor and a Bionz X processor to deliver 20 megapixel images. Sony’s website also shows a 4K module in the works.

Sony's UMC camera is ideal for installation onboard drones.

Matt Swinney, a senior manager at Sony Europe’s Image Sensing Solutions division, said the camera was ideal for AR systems – overlaying mapping data onto images captured by drones in real time – but also for applications such as agriculture. He said that by flying drones equipped with lightweight cameras and different sensors (thermal, visual, hyperspectral), users can identify where vegetation is and which areas of a field may need extra fertiliser, water or pesticide, relaying that information to the farmer or an automated sprayer.
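Swinney does not say how vegetation is picked out, but one widely used index for multispectral or hyperspectral drone imagery is the normalised difference vegetation index, NDVI = (NIR − Red) / (NIR + Red): healthy leaves reflect strongly in the near infrared and absorb red light. The sketch below computes NDVI per pixel and flags low-scoring pixels; the 0.3 threshold, the toy reflectance values and the function names are illustrative assumptions.

```python
def ndvi(nir, red):
    """Normalised difference vegetation index for one pixel.

    nir, red -- reflectance in the near-infrared and red bands.
    Returns a value in [-1, 1]; dense, healthy vegetation typically
    scores well above bare soil.
    """
    total = nir + red
    if total == 0:
        return 0.0  # no signal in either band; treat as neutral
    return (nir - red) / total


def flag_stressed(nir_band, red_band, threshold=0.3):
    """Return (row, col) of pixels whose NDVI falls below the threshold.

    nir_band, red_band -- equally sized 2D lists of reflectance values.
    """
    return [(r, c)
            for r, (nir_row, red_row) in enumerate(zip(nir_band, red_band))
            for c, (n, rd) in enumerate(zip(nir_row, red_row))
            if ndvi(n, rd) < threshold]


# A toy 2 x 2 field: one pixel (row 1, column 0) has weak NIR reflectance.
nir_band = [[0.50, 0.45], [0.20, 0.50]]
red_band = [[0.08, 0.10], [0.15, 0.07]]
print(flag_stressed(nir_band, red_band))
```

The flagged coordinates are the kind of output that could be passed on to a farmer or an automated sprayer, as Swinney describes.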

In such applications, run time is short, so mean time to failure is a low priority. Instead, Swinney says, the priorities are weight and resolution: ‘The greater the resolution, the more you can do on the camera and the easier it is to use the data.’
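Swinney’s point about resolution can be made concrete with the ground sample distance (GSD), the patch of ground covered by one pixel: GSD = pixel pitch × altitude ÷ focal length. The sketch below assumes an APS-C sensor of roughly 23.5 × 15.6 mm, square pixels covering the whole sensor, a 16 mm lens and a 100 m flight altitude. None of these lens or altitude figures comes from Sony, and the function names are hypothetical.

```python
import math


def pixel_pitch_mm(sensor_w_mm, sensor_h_mm, megapixels):
    """Approximate pixel pitch, assuming square pixels tiling the sensor."""
    return math.sqrt(sensor_w_mm * sensor_h_mm / (megapixels * 1e6))


def ground_sample_distance_cm(pitch_mm, altitude_m, focal_mm):
    """Ground footprint of one pixel, in cm, for a camera pointing straight down.

    pitch_mm / focal_mm is dimensionless, so multiplying by the altitude
    in metres gives metres; the factor of 100 converts to centimetres.
    """
    return pitch_mm * altitude_m / focal_mm * 100


# Assumed: APS-C sensor ~23.5 x 15.6 mm, 16 mm lens, 100 m altitude.
for mp in (10, 20):
    gsd = ground_sample_distance_cm(pixel_pitch_mm(23.5, 15.6, mp), 100, 16)
    print(f"{mp} MP: {gsd:.1f} cm per pixel at 100 m")
```

Under these assumptions the 20 megapixel sensor resolves roughly 2.7 cm per pixel, against about 3.8 cm for a hypothetical 10 megapixel sensor of the same size, so each pixel covers about half the ground area.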

According to Youngjun Cho, a PhD student at the UCL Interaction Centre, consumer-grade technologies are also likely to see growing use in healthcare and research. Cho was lead author of a Biomedical Optics Express paper for which his team developed algorithms that run on Android smartphones connected to low-cost ($200) thermal imaging sensors, from companies such as Flir and Stemmer Imaging, to monitor breathing patterns.

The portable software-camera system analyses body movement and temperature changes to monitor respiration, enabling healthcare professionals, carers or even consumers to watch for breathing problems in elderly people living alone, potential sleep apnoea sufferers or newborn babies. Cho believes it is becoming more common for him and his colleagues to use consumer-priced products in their research. He cited several reasons, chiefly cost and size, which make such systems affordable and manageable to roll out on a larger scale.
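The article does not describe the team’s algorithms, so the following is only a minimal illustration of the underlying principle: exhaled air warms the skin around the nostrils and inhaled air cools it, so the average temperature of that region oscillates at the breathing rate. The sketch assumes the nostril region has already been located in every thermal frame, and it picks the strongest frequency in an assumed breathing band of 0.1 to 0.85 Hz (6 to 51 breaths per minute) using a direct Fourier sum. The function name, band limits and synthetic signal are hypothetical.

```python
import math


def breaths_per_minute(temps, fs, f_lo=0.1, f_hi=0.85, step=0.005):
    """Estimate respiration rate from a nostril-region temperature trace.

    temps -- mean region temperature per frame, degrees C
    fs    -- frame rate, Hz
    Scans candidate frequencies in the breathing band and returns the one
    with the most spectral power, converted to breaths per minute.
    """
    avg = sum(temps) / len(temps)
    x = [t - avg for t in temps]  # remove the steady (average) temperature
    best_f, best_power = f_lo, -1.0
    f = f_lo
    while f <= f_hi:
        # Power of the signal at frequency f, from a direct Fourier sum.
        re = sum(v * math.cos(2 * math.pi * f * n / fs) for n, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * f * n / fs) for n, v in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
        f += step
    return best_f * 60


# Synthetic 60-second trace at 8 frames per second, breathing at 0.25 Hz.
fs = 8.0
temps = [34.0 + 0.3 * math.sin(2 * math.pi * 0.25 * n / fs)
         for n in range(int(60 * fs))]
print(round(breaths_per_minute(temps, fs)))
```

For this clean synthetic trace the function returns 15 breaths per minute. Real recordings are far noisier, and the nostril region moves between frames, so a practical system needs robust tracking and filtering on top of this.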