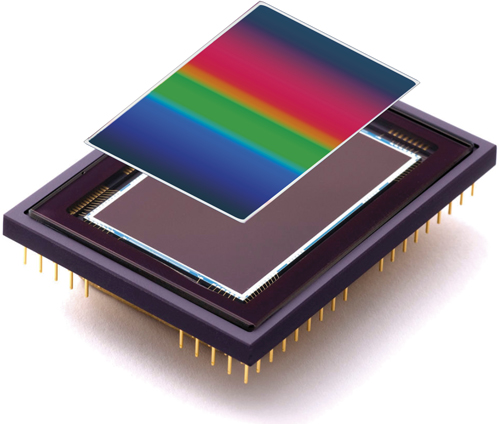

Caption: Hyperspectral imaging can reveal defects in pharmaceuticals

The acquisition of Perception Park by Stemmer Imaging is a long-term investment in hyperspectral imaging, Mark Williamson, Stemmer Imaging’s managing director, told Imaging and Machine Vision Europe at the Vision 2018 trade fair in Stuttgart in November. Stemmer Imaging bought a stake in the Austrian hyperspectral software provider in October; for two years prior to the acquisition Stemmer had acted as distributor for Perception Park’s technology. Perception Park’s processing platform renders complex hyperspectral data usable for machine vision, which is where Stemmer Imaging’s interest in the technology lies. ‘Hyperspectral imaging has become accessible to the traditional system integrator,’ Williamson said during a VDMA-organised panel discussion at Vision 2018. ‘Five years ago that wasn’t possible.’

The accessibility of hyperspectral imaging is now apparent in not only software but also hardware, with cameras from companies such as Finnish spectral imaging firm Specim now designed for industrial use, in the case of Specim through its FX series. Spectroscopy and spectral imaging used to be the domain of scientists, but today’s spectral imaging equipment, while still reasonably complicated and costly, can theoretically be set up on the factory floor by non-experts. And its benefits are being seen in industrial areas such as recycling, food and pharmaceutical production. Williamson told Imaging and Machine Vision Europe during Vision 2018 that a hyperspectral imaging system could cost around €30,000, but he added that this would be the cost of just the feasibility study not so long ago.

The cameras

Materials can be identified by how light interacts with them; compounds will have unique spectral signatures that act like a fingerprint. In this way, spectroscopy can distinguish between different plastics, identify a pharmaceutical product, or grade the purity of a whisky. A spectrometer gives a point reading, whereas a hyperspectral camera builds on this, so that each pixel in the sensor collects a spectrum over tens or hundreds of wavelength bands. The resulting information is known as a data cube: two spatial dimensions plus a spectral dimension, made up of measurements at each wavelength over the range.
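As a rough illustration of the fingerprint idea, the sketch below stores a tiny data cube as nested lists and labels each pixel with the closest reference signature. The material names, the four-band reflectance values and the nearest-match rule are assumptions made for the example; they are not taken from any of the systems described here.

```python
import math

# Hypothetical reference signatures: reflectance in four wavelength bands.
# The values are invented for illustration, not measured data.
REFERENCE_SPECTRA = {
    "PET": [0.80, 0.60, 0.30, 0.70],
    "HDPE": [0.75, 0.20, 0.65, 0.40],
}


def spectrum_at(cube, row, col):
    """Return the full spectrum stored at one spatial pixel of the cube."""
    return cube[row][col]


def closest_material(spectrum, references=REFERENCE_SPECTRA):
    """Name the reference whose signature has the smallest Euclidean distance."""
    return min(references, key=lambda name: math.dist(spectrum, references[name]))


# A tiny 1 x 2 pixel data cube, indexed as cube[row][column][band].
cube = [[[0.78, 0.58, 0.33, 0.69], [0.74, 0.22, 0.60, 0.41]]]

labels = [
    [closest_material(spectrum_at(cube, r, c)) for c in range(len(cube[r]))]
    for r in range(len(cube))
]
print(labels)
```

Real classifiers are considerably more involved, but the structure is the same: every pixel carries a spectrum, and that spectrum is compared against known signatures.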

The hyperspectral cameras that have been around the longest are those based on prisms or gratings, which use a dispersive element and image a narrow line of the object through a slit. These are line scan cameras or push broom scanners, scanning line by line to build a 2D image. Specim’s FX cameras use push broom scanning. Snapshot hyperspectral cameras are also now available, such as Ximea’s XiSpec, based on Imec’s mosaic hyperspectral image sensors. These cameras capture the entire data cube in one go, rather than scanning across an object line by line.
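The line-by-line build-up of a push broom data cube can be sketched as follows. This is a simplified model that assumes each scan delivers one spatial line in which every pixel already holds its spectrum; the function names are hypothetical and do not correspond to any camera vendor's API.

```python
def assemble_cube(scan_lines):
    """Stack successive line scans into a cube indexed cube[row][col][band].

    Each scan line is a list of pixels, and each pixel is a list of band values.
    """
    if not scan_lines:
        raise ValueError("at least one scan line is required")
    width = len(scan_lines[0])
    for line in scan_lines:
        if len(line) != width:
            raise ValueError("every scan line must have the same number of pixels")
    return [list(line) for line in scan_lines]


def band_image(cube, band):
    """Slice a single wavelength band out of the cube as a 2D image."""
    return [[pixel[band] for pixel in row] for row in cube]


# Three scan lines, two pixels per line, two bands per pixel (synthetic values).
lines = [[[r * 10 + c, r * 10 + c + 100] for c in range(2)] for r in range(3)]
cube = assemble_cube(lines)
print(band_image(cube, 1))
```

A snapshot camera would deliver the whole cube in a single exposure instead of accumulating it one row at a time.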

The optics

New optical components are improving the accuracy and reliability of hyperspectral cameras. Delta Optical Thin Film has developed continuously variable bandpass filters (CVBF) specifically for hyperspectral imaging. The filters have been used in a snapshot camera from Fraunhofer IOF and in cameras from Glana Sensors. The coating thickness on these filters – and therefore the spectral characteristic – changes from one end to the other along one direction of the filter. The result is a bandpass filter in which the centre wavelength changes continuously along its length.

Delta’s filters for hyperspectral imaging transmit only a narrow band of light at any given position within the working wavelength range of the filter. All other wavelengths above or below this band are suppressed to at least OD4 across the range in which silicon detectors are sensitive, from 200nm to 1,200nm. ‘You get a very clean signal with a very high signal-to-background ratio,’ Dr Oliver Pust, Delta’s director of sales and marketing, said.
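For readers unfamiliar with the notation, optical density (OD) is a logarithmic measure of blocking: a filter with optical density OD transmits a fraction 10^-OD of the incident light. The short sketch below applies that standard definition; the function names are illustrative only.

```python
import math


def transmission_from_od(optical_density):
    """Fraction of light transmitted by a filter with the given optical density."""
    return 10.0 ** (-optical_density)


def od_from_transmission(transmission):
    """Optical density corresponding to a transmitted fraction between 0 and 1."""
    if not 0.0 < transmission <= 1.0:
        raise ValueError("transmission must be in the interval (0, 1]")
    return -math.log10(transmission)


blocked = transmission_from_od(4)
print(f"OD4 passes {blocked:.4%} of out-of-band light")
```

Blocking to OD4 therefore means that no more than one part in 10,000 of the unwanted light gets through.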

‘The gradual change in coating thickness gives you a real hyperspectral filter,’ he continued. ‘Other filter approaches have a number of discrete bands, making them inherently multispectral. But Delta’s filter is truly continuous, so each pixel will see a slightly different centre wavelength.’

Delta has filter versions covering 450nm to 950nm and 800nm to 1,100nm. There is also a version from 450nm to 850nm that can be used for slightly smaller sensors.
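To get a feel for what a continuously varying centre wavelength means at the sensor, the sketch below maps a sensor column to a centre wavelength for the 450nm to 950nm version. It assumes the gradient is linear along the filter and that the filter spans the full sensor width; neither assumption is stated by Delta, and the function name and the column count are hypothetical.

```python
def centre_wavelength_nm(column, n_columns, start_nm=450.0, end_nm=950.0):
    """Centre wavelength seen by one sensor column, assuming a linear gradient."""
    if n_columns < 2:
        raise ValueError("need at least two columns to define a gradient")
    if not 0 <= column < n_columns:
        raise ValueError("column index is outside the sensor")
    return start_nm + (end_nm - start_nm) * column / (n_columns - 1)


n_columns = 1001  # assumed sensor width, chosen to give a round step size
step_nm = centre_wavelength_nm(1, n_columns) - centre_wavelength_nm(0, n_columns)
print(centre_wavelength_nm(500, n_columns), step_nm)
```

Under these assumptions, neighbouring columns differ by about half a nanometre in centre wavelength, which is the sense in which each pixel sees a slightly different band.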

Fraunhofer IOF’s snapshot camera uses a micro-lens array. Mounting a continuously variable bandpass filter directly in front of the image sensor gives a hyperspectral detector, but it still needs some kind of scanning between camera and object. Fraunhofer’s camera works in such a way that the micro-lens array axis is tilted with respect to the filter axis, Pust explained. The setup can be described as a multi-aperture camera, where each of the micro-lenses looks through a different region of the filter, with a different centre wavelength onto the same object in space.

Push broom scanners require careful synchronisation to get an accurate data cube, according to Pust. Attaching a hyperspectral camera to a drone, for example, is much easier with a filter-based approach, compared to a push broom sensor, because every image acquired has the complete 2D object. Pattern recognition can therefore be used to align the image spatially on an unstable platform.

Filter-based cameras can also reduce the data load, Pust observed. He gave the example of obtaining a data cube of 1,000 x 1,000 pixels, which would require 1,000 images using a classical push broom scanner. With a filter-based approach, the user would only need to acquire as many images as the number of spectral channels required in post-processing, which could be 50 or 100. This therefore reduces the amount of data by at least an order of magnitude.
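Pust's arithmetic can be checked in a few lines. The sketch compares the number of frames acquired in each case and assumes, for simplicity, that every frame is the same size, so that the frame count stands in for the data volume.

```python
def frame_reduction(push_broom_frames, filter_frames):
    """Factor by which the number of acquired frames falls with a filter-based camera."""
    if push_broom_frames <= 0 or filter_frames <= 0:
        raise ValueError("frame counts must be positive")
    return push_broom_frames / filter_frames


PUSH_BROOM_FRAMES = 1000  # images needed for the 1,000 x 1,000 pixel cube in the example

for channels in (50, 100):
    factor = frame_reduction(PUSH_BROOM_FRAMES, channels)
    print(f"{channels} spectral channels: {factor:.0f}x fewer frames")
```

With 100 channels the reduction is tenfold and with 50 channels it is twentyfold, which matches the claim of at least an order of magnitude.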

‘The filter-based cameras are more compact and more robust, because they don’t need a certain distance between the sensor, the slit, and the dispersive element, as is required with a prism or grating,’ Pust said. ‘They can be more mechanically stable and they’re also more compact. They’re also typically cheaper than a classical hyperspectral imager.’

Caption: The coating thickness on Delta Optical Thin Film’s continuously variable bandpass filters changes along one direction of the filter

A nice side benefit from a filter-based camera, Pust noted, which is especially useful for drone applications in forestry or agriculture, is that 3D height information can easily be reconstructed from the image sequence when viewing the object at different angles. ‘That comes basically for free; it’s just a matter of software,’ he said. An additional lidar system has to be attached to the drone to capture 3D information when imaging with a classical hyperspectral camera.

Glana Sensors has also incorporated Delta Optical Thin Film’s hyperspectral filters in its cameras. Glana worked with a modified DSLR camera at first, and has now mounted the filter inside a machine vision camera from Lumenera. This camera doesn’t use a micro-lens array because Glana wanted to have 3D capability, which is not possible with a snapshot camera. Glana also focuses more on high spatial resolution. ‘With every snapshot camera, there is a compromise to be made between spatial and spectral resolution,’ Pust observed.

The lights

Illumination is another component where dedicated hyperspectral solutions are springing up. Typically, hyperspectral systems will use halogen bulbs because they give broadband illumination, as well as being relatively inexpensive. Broadband light is important for hyperspectral systems, since they image over a large spectral range. Now, however, broadband LED lights are being designed with industrial hyperspectral systems in mind.

‘Halogen has quite a lot of drawbacks, especially for any company trying to integrate hyperspectral technology into an automated system,’ commented James Gardiner at US lighting firm Metaphase Technologies. The company offers dedicated broadband hyperspectral LED illumination, in both the visible to near-infrared, 400nm to 1,000nm, and the shortwave infrared (SWIR), 1,000nm to 1,700nm.

The first disadvantage with halogen bulbs is that they run at more than 100°C and, unlike LEDs, they radiate a lot of their heat in the same direction as their light output. Radiating heat onto food or pharmaceuticals, for example, isn’t ideal.

One of Metaphase’s biggest application areas at the moment is inspecting gel caps commonly used for ibuprofen and fish oil capsules. The hyperspectral system uses SWIR illumination to see if any gels leaked liquid onto the outside of the cap.

Another issue with halogen bulbs is lifetime; a halogen bulb lasts only about 1,000 hours, whereas LED light sources have a lifetime of 50,000 hours or more. Halogen bulbs also quickly lose intensity.

In addition, there is now a lot more pressure to come up with an alternative to halogen, Gardiner noted, because, as of September 2018, the sale of halogen bulbs in the EU is banned as part of the final phase of new EU energy regulations.

There is also no flexibility in the spectral profile of a halogen light source; it is broad and there are no gaps in it, but it’s not particularly flat, according to Simon Stanley, director of technology at LED light manufacturer ProPhotonix. ‘Output [of halogen] is high in the IR region but almost non-existent in the UV and blue,’ he explained. ‘With an LED light, by contrast, we can replicate and adjust certain portions of the halogen spectrum, so we can make it flat, for example, or make it biased to the UV or IR side, or whatever the application might require.’

ProPhotonix offers LED products for both multispectral – a set number of distinct wavelength bands, rather than a continuous range – and hyperspectral applications. Its Cobra MultiSpec line light has 12 separate colour channels, which can also be configured to provide a complete spectrum over a wavelength range.

Stanley said that one of the things the company is sometimes asked to do is bolster the LED spectrum in particular areas, such as when a camera sensor doesn’t have equal sensitivity across the spectral range. ‘For example, if the camera isn’t particularly sensitive in the infrared, we might bolster the infrared part of the spectrum, while still providing a full spectrum into the visible,’ he explained.

A further downside to halogen bulbs is that they are typically not optics-friendly, Gardiner noted. Halogen spreads its light out over a large field of view, making it difficult to focus that light into a line, which would be beneficial for line scan cameras, the most common type of hyperspectral camera. One of the advantages that Specim cites for its FX cameras is that they only require narrow line illumination, whereas a much larger area needs to be illuminated for filter-based cameras. ‘Halogen lights have a lot of wasted energy and photons that could have been directed at the object,’ Gardiner continued. LEDs, however, can use focusing rods or diffusers to give uniform focused light.

‘Hyperspectral imaging requires a lot of light,’ Gardiner stated. ‘A spectrometer using a combination of optics to split the light into 400 or more different spectral bands needs quite a lot of light to image all those different bands. The light needs to be high power and also emit a broad spectral range, which isn’t necessarily natural for LED technology.’

Metaphase worked with its LED partners to create custom LEDs for certain hyperspectral projects. ‘A natural LED, in terms of spectral band, tends to be very narrow, which means you need a lot of LEDs to cover a broad spectrum,’ Gardiner explained. ‘We take these narrowband LEDs and use custom combinations of phosphors to convert some of the photons emitted to a different wavelength. This phosphor correction expands the spectrum produced.’

This is done in combination with custom chips to make a single point source LED much broader in spectral coverage. Metaphase’s visible-to-NIR light, for example, uses five LEDs: a broadband blue LED emitting from 400nm to 500nm; a broad white LED for up to around 700nm; and then three different LEDs to cover the 700nm to 1,000nm range. A typical blue LED might only cover around 15nm of the spectrum, so the firm uses phosphor corrections to expand this to a half width at half maximum of 100nm – the phosphors shift the photons from the LED to cover a broader spectrum.

Photons are lost in phosphor correction, but Metaphase is using custom high-intensity chips that produce a lot of photons, so there are more photons to convert in the first place.

Metaphase designs optics to take the wavelengths of those different LEDs and blend them together into homogeneous illumination. It then uses a focusing rod to focus the light into a tight, narrow, high-intensity beam.

The company continues to improve on its phosphor and chip combination to give a broader spectral range and produce a flatter spectral curve. ‘A flat spectral curve generally does help, because the user has to do less pre-calibration of the camera, such as spectral balancing or white balance correction,’ Gardiner said.

Because hyperspectral LEDs tend to be custom-made, they are more expensive than standard white LEDs for machine vision, Gardiner said. The firm’s SWIR hyperspectral light – which is its most popular hyperspectral light – is made from InGaAs chips, which are more expensive to manufacture. ‘For some applications, that pricing will be a hurdle, but usually the other components, such as the camera and the software, will be expensive in itself, so it tends to be an expensive system no matter what,’ Gardiner added. He said hyperspectral camera systems can range from $20,000 to $100,000.

SWIR and sensors

Liquids and materials with a high water content appear black in the shortwave infrared at 1,500nm and stand out to the camera. Other materials, such as plastics, show up differently under SWIR light. Pust, at Delta Optical Thin Film, said the company is looking at making filters for the SWIR range, because ‘we think the market is almost as big as for the visible and NIR range’, but he said the firm doesn’t have any concrete plans in that direction at the moment.

Emberion, which has sites in Espoo, Finland, and Cambridge, UK, has developed an image sensor based on graphene and other nanomaterials, which offers a spectral response range from 400nm in the visible to 1,800nm in the shortwave infrared. Silicon detectors are sensitive in the visible range extending into the near-infrared, up to 1,100nm. InGaAs detectors, on the other hand, are used for SWIR imaging, but suffer from a lower performance in the visible region and, if they combine both SWIR and visible sensitivity, are expensive. Princeton Infrared Technologies is one company offering cameras with both SWIR and visible response.

‘Our detector can offer similar performance to InGaAs in the near and shortwave infrared region, and we outperform InGaAs in the visible region,’ Dr Vuokko Lantz, product manager at Emberion, said.

Emberion’s photodetector arrays have tailored readout integrated circuits (ROICs). The ROIC is based on a conventional CMOS IC technology, with the photodetectors monolithically integrated on top of it. ‘The photodetectors consist of graphene and light absorbing nanomaterial layers, which provide an exceptionally broad spectral response range and excellent sensitivity. The manufacturing approach is very cost efficient,’ Lantz explained.

Lantz said that the sensor has a lower performance than silicon-based CMOS sensors in the visible range. ‘If you are just interested in the visible range, it’s best to stick with silicon-based devices,’ she said, ‘but if you want to extend into the infrared region, into the shortwave infrared in particular, we become a very interesting alternative.

‘Our monolithically integrated graphene-on-CMOS technology gives us a price advantage over InGaAs,’ Lantz continued. ‘We can offer our sensors, similar in performance to InGaAs, at a price that is 20 to 30 per cent less than an InGaAs detector.’ She added the company is optimistic the price will drop further as the production line matures and volumes increase.

Emberion was established in 2016 and is still in a development phase. Its first product is a conventional spectrometry sensor design, with pixels that have a 25µm pitch and are 500µm tall. The company offers few-pixel detectors as a prototype device for evaluation purposes.

Early in the summer of 2019 Emberion aims to have product prototypes for a linear array device and, towards the end of 2019, a VGA imager, which will have a 20µm pixel pitch. Lantz noted that this is a starting point, not a limiting design parameter, and that the firm made its pixel pitch size comparable with commercially available InGaAs detectors. Emberion has the capabilities to make smaller or larger pixels, depending on customer need.

Cameras with a broad response range will open up hyperspectral imaging to even more applications. The next challenge then becomes to tailor the optics to focus such a broad wavelength range onto the sensor.