Though the pandemic undoubtedly accelerated warehouse automation – with staff absences and huge demand forcing companies to explore more efficient and less labour-intensive ways of working – automation solution providers were doing good business long before the term Covid-19 had crossed anyone’s lips.

The first automated storage and retrieval systems – computer-controlled systems akin to huge vending machines that store and retrieve items in warehouses and distribution centres – were introduced as early as the 1960s. And as the world embraced consumerism, automation steadily seeped deeper into warehouse operation in the following decades to help in as many steps in the order and fulfilment process as possible.

But it wasn’t until the 2000s that investment in automation exploded: e-commerce had arrived. This disruption to shopping patterns, customer expectations and the industry’s competitive landscape became known as ‘the Amazon effect,’ so named after the company whose success pushed established retail giants to transform their business models and invest heavily in their own e-commerce operations and warehouse networks.

The result has been an automation revolution. Wherever there is a dull, dirty or dangerous (or possibly dear) task for a human in the warehouse, there is now a robot for that. Yet you will still see humans milling about. Why? ‘Humans are extremely good at adapting,’ explained Cognex’s Ben Carey, senior manager for logistics vision products. ‘For example, if they go to pick something up and they drop it, they know how they’re going to pick it up a second time. That innate intelligence is extremely difficult to build into machinery.’

Robots equipped with vision systems still struggle with various basic warehouse tasks that their human counterparts find a breeze. A large part of the reason is the sheer inconsistency of the work and environment. A warehouse or distribution centre is a fast-paced, variably lit workplace, handling thousands of items every day, packaged in thousands of different ways and going to countless different destinations.

Given most machine vision systems have traditionally been developed for factories, where they repeatedly identify and process the same objects in the same conditions indefinitely, it is little surprise that when faced with the messy, dynamic world of logistics they get confused. But, more recently, advances in camera technology, machine vision and deep learning have come together to make inroads into many higher-level, traditionally human duties.

Smarter sorters

Cognex, based in Natick, Massachusetts, USA, manufactures a raft of machine vision systems, software and sensors for automated gauging, inspection, guidance and identification in factories and warehouses.

One example is its 3D-A1000 compact snapshot smart camera, which combines 2D and 3D vision and requires no difficult integration. ‘This is running rules-based software today, using both 3D and 2D data and locking them together with a lot of logic in between,’ Carey said. It can be used for identifying the presence or absence of objects on sorters, while collecting additional useful information at the same time. For example, deciphering the 3D properties of the object – size, shape, volume and so on – improves 2D inspections, such as figuring out where a package’s label is.

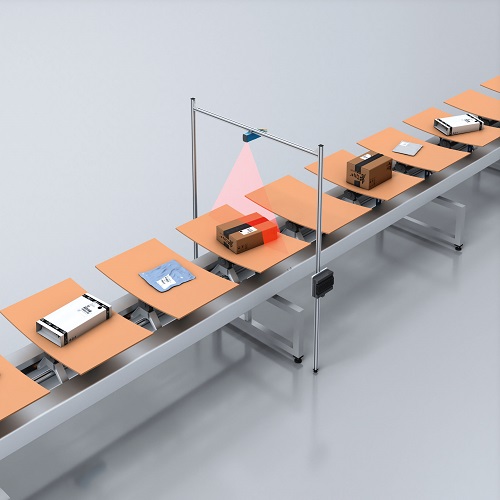

Sick, based near Freiburg, Germany, is a close competitor of Cognex, with a similarly broad product range to cater for innumerable different warehouse tasks. For example, its TriSpector 1000 is a 3D triangulation camera that projects a laser line onto objects passing by on a conveyor and records it, broadly comparable to Cognex’s In-Sight 3D-L4000. Ryan Morris, Sick market product manager for machine vision, explained some of the benefits of the TriSpector 1000 and 3D sensing more generally. ‘In the 2D world, a stuck label on a conveyor looks like an item, but with 3D, we get height information, so we can basically ignore that stuck label,’ he said. ‘Moreover, 3D sensing doesn’t care about low contrast between different objects or challenging lighting scenarios.’

Scanning parcels in 3D. Credit: Sick
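
Morris’s point about height information can be illustrated with a minimal Python sketch. It is not Sick’s or Cognex’s software: the function name `item_present`, the height-map layout and both thresholds are assumptions made purely for illustration. The idea is simply that a label stuck to the belt has almost no height, so a height threshold filters it out even though it would stand out in a 2D image.

```python
from typing import List


def item_present(height_map_mm: List[List[float]],
                 min_height_mm: float = 5.0,
                 min_pixels: int = 4) -> bool:
    """Return True if enough pixels rise above the belt to count as an item.

    height_map_mm holds one height value (in mm above the belt) per pixel.
    Both thresholds are illustrative assumptions, not vendor defaults.
    """
    raised = sum(1 for row in height_map_mm for h in row if h >= min_height_mm)
    return raised >= min_pixels


# A stuck label: visible in a 2D image, but almost flat in 3D.
label = [[0.0, 0.2, 0.2, 0.0],
         [0.0, 0.2, 0.2, 0.0]]

# A small parcel: a block of pixels roughly 40 mm tall.
parcel = [[0.0, 40.0, 41.0, 0.0],
          [0.0, 39.5, 40.5, 0.0]]

label_detected = item_present(label)
parcel_detected = item_present(parcel)
print(label_detected, parcel_detected)
```

A real system would work on calibrated point clouds and far larger images, but the decision rule, height rather than contrast, is the same in spirit.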

Cognex and Sick also offer a raft of sensor solutions for a growing trend in logistics: mobile robots. Automated guided vehicles, automated guided carts and, increasingly, autonomous mobile robots (AMRs) are often seen trundling along the warehouse floor. They might be moving through the aisles to pinpoint an item or detect hazardous spills, or doing any other task that involves loading, sorting, delivering or fetching.

Autonomous mobile robots first came to prominence in 2012 when Amazon acquired Kiva Systems’ pod-transporting robots. Since then, the sector has been flooded with both established and new companies offering a host of different AMR solutions, from AutoGuide Robots’ driverless forklifts to Boston Dynamics’ dog-like, four-legged Spot product. Visual information is crucial to any AMR’s navigation and function, which is why many developers have turned to Cognex and Sick. For instance, Morris said that Sick’s time-of-flight and stereoscopic 3D cameras, Visionary-T and Visionary-S, respectively, are increasingly being used for mobile robot vision in warehouse applications to offer more flexibility and information than traditional automated systems.

A more recent trend in warehouse vision systems is the launch of products with more sophisticated onboard processing. With similar capabilities to Sick’s InspectorP611 2D sensor that can conduct inline machine vision inspections, the new In-Sight 2800 from Cognex is a prime example. ‘For logistics, it’s a deep-learning-lite technology for complex classification,’ explained Carey. ‘Unlike traditional rules-based tools, it’s able to cope with large amounts of variation.’ So, for example, from seeing five to 10 images with its 2D camera, the In-Sight 2800 can classify mixed, skewed objects, and provide outputs to sort them appropriately.

One of Cognex’s most advanced products for logistics is the In-Sight D900. ‘This is one of the world’s first smart cameras that can run full neural network deep learning algorithms,’ Carey said. With the deep learning software deployed on the smart camera itself and accessible to non-programmers, in logistics the In-Sight D900 is particularly well-suited to optical character recognition for label identification. Because packages come from multiple countries and vendors, and their labels are produced using multiple printing processes, labels can be badly deformed, skewed or poorly etched, making identification challenging. ‘You can just imagine, there’s a lot of variance,’ he said. ‘This is where deep learning really comes into its own in logistics.’

Tricky tasks

Other companies are expanding the reach of computer vision in the warehouse too, both in terms of capabilities and scope. Fizyr, based in Delft, The Netherlands, focuses exclusively on developing advanced computer vision software for pick-and-place tasks traditionally performed by humans. The company began as TU Delft spin-off Delft Robotics in 2014. Soon after, the team won the 2016 Amazon Picking Challenge – where the aim is to build an autonomous robot to pick items from a warehouse shelf as well as, or better than, a human. ‘But then we realised how unique our deep learning capabilities were, so we decided to pivot to a software-only company,’ said Shubham Singh, Fizyr technical sales consultant.

Fizyr’s deep learning algorithms enable automated handling of unknown objects being picked from bulk. ‘If you look at parcels or groceries, they always vary in shape, size, colour, how they’re stacked, and robots are not good in these situations where they need to understand what items to detect, segment, pick and place,’ explained Singh. ‘That’s where Fizyr comes in.’

Difficult segmentation of parcels by Fizyr software. Credit: Fizyr

A key advantage of Fizyr’s technology over competitor offerings, said Singh, is its high accuracy even in situations where other algorithms – and even humans – struggle, such as picking an item from a bin of overlapping and similarly coloured items. ‘Our software is trained using supervised deep learning, with hundreds of thousands of images from real-life logistics environments, collected in collaboration with our industry partners,’ he explained. ‘Unlike other approaches, where the robot learns on the go, we ensure the end user is in control and that the robot learns all the things we want it to learn.’

Fizyr’s software is used in production worldwide by more than 20 leading system integrators. Singh said the technology is hardware-agnostic, though it requires an industrial-grade 2D and 3D stereo camera for triangulation. As a result, robots equipped with Fizyr software can handle extreme object variation in e-commerce, parcel services and logistics in general. They perform a range of traditionally human tasks, not only picking diverse SKUs in warehouses, but also parcel handling, depalletising, truck unloading, mixed placement and even baggage handling at airports.
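
The triangulation Singh mentions can be sketched with the textbook relation for a rectified stereo pair: depth equals focal length times baseline divided by disparity. The Python below is a generic illustration under that assumption, not Fizyr’s algorithm; the function name `depth_from_disparity` and the example camera parameters are hypothetical.

```python
def depth_from_disparity(focal_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in metres for one matched point in a rectified stereo pair.

    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centres, in metres
    disparity_px: horizontal shift of the point between left and right images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 1,000 px focal length, 12 cm baseline.
near_item = depth_from_disparity(1000.0, 0.12, 120.0)  # large shift, close item
far_item = depth_from_disparity(1000.0, 0.12, 60.0)    # small shift, distant item
print(near_item, far_item)
```

The larger the shift between the two views, the closer the item, which is how a stereo camera can tell a robot how far to reach for a pick.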

Rabot, located in San Francisco, California, USA, is another company carving out a niche in the warehouse vision space, focusing on improving worker efficiency. ‘Our sole goal is really to augment existing warehouse operations today, rather than implement a solution that could be disruptive,’ explained Sandeep Suresh, Rabot co-founder and chief product officer. Rabot Pack uses off-the-shelf, industrial-grade cameras set up over packing stations, and then applies machine learning to identify errors and inefficiencies, and to verify that orders have been packed correctly. ‘It really serves as a virtual assistant to the packer,’ he continued. ‘It visually verifies all the different objects in the packing station, performing simple checks to ensure any labels, packing material or bubble wrap that need to be applied, have been applied, and checking if there is any damage.’

Rabot CEO, Channa Ranatunga, watching Rabot Pack in action. Credit: Rabot

Results from installing Rabot Pack have been impressive, with customers reporting 25 to 50 per cent productivity increases within months, faster training of new employees and significant reductions in troubleshooting or resolution time for customer complaints.

Like Rabot, Vimaan – a computer vision company based in Santa Clara, California, USA – is looking at the big picture in terms of improving warehouse efficiency. The company has a suite of inventory tracking and verification technologies that permit wall-to-wall and door-to-door visibility from when items arrive to when they leave the warehouse. These products scan and image goods and packages on pallets and conveyors, on forklift trucks and even from the air, with autonomous drones performing flybys of warehouse racks. Vimaan’s solutions completely remove human error in inventory management, and can save a single warehouse millions of dollars in write-offs.

With these and many more vision technologies reducing costs and errors, and improving efficiency throughout the warehouse, the question arises: are we near the point where humans no longer need to set foot in the warehouse?

‘I think we’re getting there,’ said Morris. ‘I don’t think there’s any limiting technology out there right now that we’re waiting on to be able to do that – it’s now more about willpower and integration to make it all come to reality.’