The move to more automated systems brings many benefits: improved safety, higher quality as human error is reduced, and economic gains from the ability to continue manufacturing around the clock.

While the US election cycle has led to suggestions that automation is reducing jobs in industries like the automotive sector, there is evidence to suggest otherwise. According to Joe Gemma, president of the International Federation of Robotics (IFR), ‘more productivity is … driving down prices, creating more demand for products, and creating more jobs.’ Gemma pointed to the automotive industry, which has ‘over the last several years installed 80,000 more robots in the US and at the same time increased 230,000 jobs’. He also said that in the rust-belt states of Ohio and Michigan, automation has directly led to an increase of 75,000 jobs.

Figures from the IFR show that in 2015 – the last year for which figures are available – 27,500 new industrial robots were installed in the US, with more than 18,000 being used in the automotive parts, electronics and motor-vehicle manufacturing sectors. Indeed, 135,000 were added between 2010 and 2015. The IFR data shows the US automotive industry currently has 1,218 robots per 10,000 employees, roughly on par with Japan, Korea and Germany.

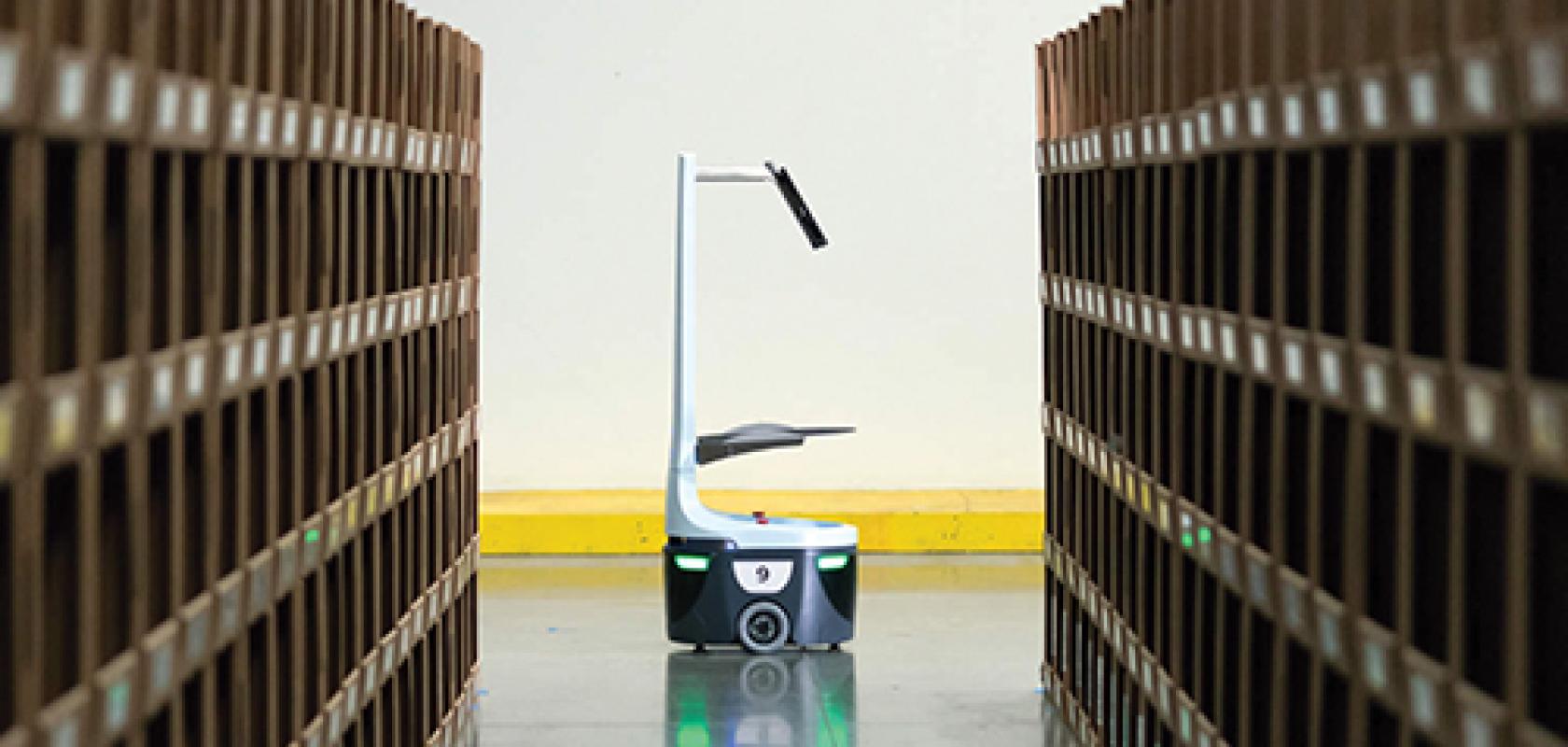

The Locus warehouse robot uses a sensor fusion of lidar and vision

The rise is also happening in service (non-manufacturing) robots, with the IFR forecasting that the industry will grow from a worldwide turnover of $4.6 billion in 2015 to $23 billion between now and 2019. It found that the majority of units shipped (53 per cent) will be in the logistics sector. Consequently, significant technological advances are being made here.

In 2012, logistics robot company Kiva Systems was bought for $775 million and rebranded as Amazon Robotics. The acquisition left a large technology gap, which several start-ups are now trying to fill. Among the leaders are Canvas Technology and Locus Robotics.

Bruce Welty, CEO of Locus, described the original Kiva setup as an air traffic control system, where once clearance was given to a robot, no other robot was allowed on the route.

This is no longer the case, with integrated vision and lidar enabling the detection of humans, other robots and obstacles, as well as mapping capabilities to locate specific positions, be it a loading bay or a charging point.

Welty said the Locus robot uses a system based on sensor fusion of lidar and two monocular cameras to create 3D time-of-flight data. This is coupled with wheel encoders and inertial dampers to calculate position, since GPS isn’t available indoors.
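To illustrate how wheel encoders alone can contribute a position estimate when GPS is unavailable, here is a minimal dead-reckoning sketch. It assumes a two-wheeled differential-drive base; the function name, encoder resolution and wheel dimensions are hypothetical values chosen for the example and are not taken from the Locus robot.

```python
import math


def update_pose(x, y, heading, left_ticks, right_ticks,
                ticks_per_rev=1024, wheel_radius_m=0.05, wheel_base_m=0.40):
    """Advance an (x, y, heading) estimate using one pair of encoder readings.

    All geometry defaults are illustrative assumptions, not real robot specs.
    """
    # Distance rolled by each wheel since the previous reading.
    metres_per_tick = 2 * math.pi * wheel_radius_m / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick

    # Travel of the robot centre and change in heading (radians).
    d_centre = (d_left + d_right) / 2
    d_heading = (d_right - d_left) / wheel_base_m

    # Integrate along the average heading over the step.
    mid_heading = heading + d_heading / 2
    x += d_centre * math.cos(mid_heading)
    y += d_centre * math.sin(mid_heading)
    heading = (heading + d_heading) % (2 * math.pi)
    return x, y, heading


# One full revolution of both wheels moves the robot straight ahead.
pose = update_pose(0.0, 0.0, 0.0, left_ticks=1024, right_ticks=1024)
```

Estimates of this kind drift as wheel slip accumulates, which is one reason such a system would be fused with lidar and camera data rather than used on its own.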

In comparison, the Canvas system places a bigger emphasis on vision sensors, with the company citing the cost of 3D lidar.

Currently, however, a fully autonomous service robot for logistics isn’t possible, with Welty saying humans are still needed for picking. ‘If [picking arm vendors] can meet our requirements then we’ll place some big orders. [But] we need a picking arm that can be reliable, that works all the time, that is robust, that can pick any item, can see any item. It needs to be fast, it needs to be portable and it needs to [score an eight or nine out of ten] on all of those.’

Welty said no company is there yet: ‘I think we’re years away from [solving this problem], at least five, maybe longer. My business partner ... argues it’s a three to five year solution. Vendors tell me they’re 18 months away. When it’s ready it’s ready.’

Flexing robot arms

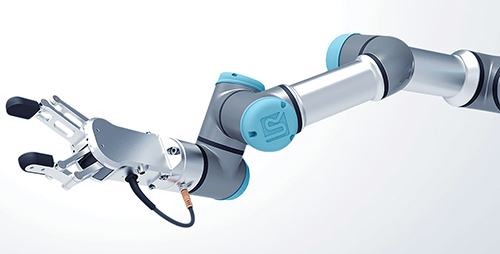

Danish firm Universal Robots has designed a robot arm capable of doing a huge range of tasks – its website says: ‘The UR robot can automate virtually anything … from assembly to painting, from screw driving to labelling, from injection moulding to welding and from packaging to polishing.’

The Universal Robot arm can be taught movements by physically manipulating it

The Universal Robots’ imaging system is based on the Sick Inspector PIM60 2D vision sensor. Sick’s David Bäck told Imaging and Machine Vision Europe: ‘The [robot is] a bit different compared to standard industrial robots.’ He said that the robot can be taught movements by physically grabbing the robot arm and moving it to each required position, allowing it to be reconfigured by someone on the factory floor.

‘Our Inspector was a good match,’ Bäck said. ‘An operator can just take the robot and controller and move it to a workstation, and set it up in just an hour. Then, when they are done, they can take it to the next station and set it up differently there. So, they need a flexible vision sensor to work with their setup.’

Some of the more interesting developments in robot guidance, however, concern what happens once images are acquired: deep-learning image processing techniques are being applied to systems like the UR robot.

In October 2016, Google published a paper on its research into robot skill acquisition with shared experience – the authors were Timothy Lillicrap of DeepMind, Sergey Levine of the Google Brain team, and Mrinal Kalakrishnan of X.

The paper pointed out that while ‘machine learning algorithms have made great strides in natural language understanding and speech recognition, the kind of symbolic high-level reasoning that allows people to communicate complex concepts in words remains out of reach for machines. However, robots can instantaneously transmit their experience to other robots over the network – sometimes known as “cloud robotics” – and it is this ability that can let them learn from each other.’

The team’s research showed how deep-learning techniques could be used to learn new skills – in this case turning a door handle – not only via trial and error, but also by robots communicating their attempts to one another, and by a human demonstrating the task by moving the arm.

‘Of course, the kinds of behaviours that robots today can learn are still quite limited. Even basic motion skills, such as picking up objects and opening doors, remain in the realm of cutting edge research. [But] in all three of the experiments [reported], the ability to communicate and exchange their experiences allows the robots to learn more quickly and effectively. This becomes particularly important when we combine robotic learning with deep learning,’ the team wrote in the paper.

Agile lighting

Acquiring good images starts with good illumination, which is no less true for robot vision. There is a difference between the lighting used for robot guidance and that installed on a production line, according to Paul Downey from Gardasoft, a specialist in lighting control for vision systems. ‘For a production line, you have set up the system in a way that you know what’s coming through. For robotics, you need the agility. If I only had one light configuration it’s unlikely to suit all inspection requirements,’ he said.

‘Lighting is the fundamental starting point of any vision system. If you don’t get that right then everything else is going to be out,’ he continued. ‘By the nature of the robotics interface, what we have is the robotic arm coming in and doing maybe four or five inspections on an object, with multiple objects coming through, and inspecting these from different angles and for different purposes.’

Downey highlighted that for a robot operation the ambient light might not be controlled. Gardasoft uses a closed loop ‘test card area’ for calibration and analysis. This allows the system to know if the camera is reporting back the optimal light conditions and adjust accordingly.
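The closed-loop idea can be sketched as a simple feedback step: measure the brightness of a reference area, compare it with a target, and nudge the light output accordingly. The example below is an illustration only and not Gardasoft's method. The proportional control law, the function names, the target grey level and the toy scene model (brightness equals ambient light plus a term proportional to drive level) are all assumptions made for the sketch.

```python
def adjust_light(current_drive, measured_grey, target_grey=128.0,
                 gain=0.5, min_drive=0.0, max_drive=1.0):
    """Return a new light drive level (0..1) nudged toward the target brightness.

    measured_grey is the mean 8-bit grey level read from the reference area.
    """
    error = (target_grey - measured_grey) / 255.0   # normalised brightness error
    new_drive = current_drive + gain * error        # proportional correction
    return max(min_drive, min(max_drive, new_drive))  # clamp to valid range


def simulate(ambient_grey, steps=50):
    """Toy scene (assumed): reference brightness = ambient + 200 * drive."""
    drive = 0.5
    for _ in range(steps):
        measured = min(255.0, ambient_grey + 200.0 * drive)
        drive = adjust_light(drive, measured)
    return drive, min(255.0, ambient_grey + 200.0 * drive)


# With brighter ambient light the loop settles on a lower drive level.
final_drive, final_grey = simulate(ambient_grey=68.0)
```

In this toy model the loop settles at the target grey level whatever the ambient contribution, provided the required drive stays within the lamp's range.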

Remote monitoring

As Downey pointed out, with robotic automation systems now installed around the world, ‘when you introduce functionality like robotic control you need to be able to support it. Nobody wants a system that is great from day one but costs a lot to support. The last thing you’d want if someone reports a reject rate out of the norm, is to have to get on a plane to sort it out.’

Downey said a lot of Gardasoft’s customers are setting up systems specifically for remote diagnosis and even for making adjustments, enabling 24/7 operation with staff monitoring the equipment based in various time zones.

‘With a modern system with controllers, you now have access to the camera and the light [remotely],’ he added. ‘So now you have access to all the variables. It gives the end user peace of mind, and it gives added confidence to the integrator – [with] service contracts [not being] onerous.’ Integrators could also work in collaboration with the end user, with the remotely located engineer able to ask about robot operation on the production floor.

Lighting and camera control aren’t the only aspects vital for a robotic system; the lens focal length may also need to change dramatically.

Gardasoft has recently partnered with Optotune, which manufactures liquid lenses. Optotune’s lenses can not only be adjusted remotely, but can shift focus within milliseconds, with focal distances from infinity to 50mm.

‘So, you can not only change settings on the lighting and the camera, you can also remotely control the focal length of the camera,’ Downey remarked. ‘If you’re doing things in a warehouse, that can be really important.’

He went on to give the example of a robot in a warehouse being really close to an object to make one inspection, but then moving to a package higher up – at which moment the focal point will change.
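As a rough guide to how much refocusing such a move demands, the thin-lens approximation says that a lens focused at infinity needs about 1/d dioptres of extra optical power to focus at a distance of d metres. The sketch below applies that rule; it is a simplification that ignores the real lens design, and the function name is hypothetical.

```python
import math


def focus_power_dioptres(working_distance_m):
    """Extra optical power (dioptres) to refocus from infinity to this distance.

    Thin-lens approximation: power = 1 / distance in metres.
    """
    if working_distance_m <= 0:
        raise ValueError("working distance must be positive")
    return 1.0 / working_distance_m


far = focus_power_dioptres(math.inf)   # no extra power needed at infinity
shelf = focus_power_dioptres(2.0)      # package 2 m away: 0.5 dioptres
close = focus_power_dioptres(0.05)     # object 50 mm away: about 20 dioptres
```

The required power rises steeply at short range, which is why close-up inspection followed by a look at a distant shelf is a demanding case for focus control.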

Timing, control and error prevention

Downey also highlighted the need for synchronisation between the lighting, the lens, the camera and the robot itself: ‘How do you make those changes fast and accurate? One of the crucial things [about inspecting] high-speed objects is that to reduce blur you need to get the [light] pulses and the camera synchronised.’
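The link between pulse length and blur is simple arithmetic: an object moving at a given speed smears across the image by the distance it travels while the light is on. The sketch below estimates the longest strobe pulse that keeps blur within a pixel budget. The function name and the example figures are illustrative assumptions and do not come from the interviewees.

```python
def max_pulse_width_us(speed_mm_s, mm_per_pixel, max_blur_px=1.0):
    """Longest light pulse (microseconds) that keeps motion blur within budget.

    speed_mm_s   -- object speed across the field of view
    mm_per_pixel -- size of one pixel projected onto the object
    max_blur_px  -- acceptable smear in pixels
    """
    if speed_mm_s <= 0 or mm_per_pixel <= 0:
        raise ValueError("speed and pixel size must be positive")
    allowed_travel_mm = max_blur_px * mm_per_pixel
    return allowed_travel_mm / speed_mm_s * 1e6


# Object moving at 2 m/s, imaged at 0.1 mm per pixel, one pixel of blur allowed.
pulse_us = max_pulse_width_us(speed_mm_s=2000.0, mm_per_pixel=0.1)
```

With these example figures the pulse can last roughly 50 microseconds, so the pulse and the exposure window need to be aligned to a small fraction of that.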

This need was also highlighted by Sony Image Sensing Solutions’ Alexis Teissie when describing a fruit inspection robot picking out bad fruit from a conveyor. In this situation, fruit arrives continuously and multiple cameras – colour, near infrared, and even hyperspectral – are needed to distinguish between a bruise and an infection, or even to spot objects hidden under the skin, with few false positives and even fewer false negatives let through.

‘Robots need to be able to identify damaged stock from the image and this means accurate timing is essential [to ensure the cameras fire at precisely the same time],’ he said. ‘Today they do it with hardware triggering,’ which Teissie pointed out adds cost and creates a single point of failure in the system.

Both Teissie and Downey highlighted the role the software-based IEEE 1588 Precision Time Protocol (PTP) can play in creating more accurate information for robots: it precisely controls camera firing times so that the colour and near-infrared cameras capture the same scene at the same instant, or so that exposures coincide with light pulses.

Teissie explained: ‘With a standard GigE camera, there is a clock inside the camera. The clock is [based on] a number of ticks, which is arbitrary, and there is no link between devices. So each device on a network has its own clock; you cannot tell a camera to action at a specific time. By enabling PTP 1588, all the devices will share the same [master] clock. And the benefit with that is we can use it to synchronise the camera operations – acquisition, GPIO interchange, etc.

‘This is a software protocol, so there are no specific hardware requirements … and using it we can get the precision down to microsecond accuracy,’ he continued. ‘While it’s still less precise than hardware synchronisation, this [hardware] has drawbacks – cost, complexity, points of failure.’
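The core of how devices come to share one clock can be shown with the delay request-response calculation used by IEEE 1588. The master timestamps a Sync message when it is sent (t1), the device timestamps its arrival (t2), the device then timestamps a Delay_Req message when it is sent (t3), and the master timestamps its arrival (t4). Assuming the network delay is the same in both directions, the device can work out how far its clock is from the master's. The sketch below shows only that arithmetic, with made-up timestamps; it is not a PTP implementation and the function name is hypothetical.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Estimate device clock offset and one-way path delay.

    t1 -- master clock when Sync was sent
    t2 -- device clock when Sync arrived
    t3 -- device clock when Delay_Req was sent
    t4 -- master clock when Delay_Req arrived
    Assumes a symmetric network path. Units are whatever the timestamps use.
    """
    master_to_device = t2 - t1   # path delay + offset
    device_to_master = t4 - t3   # path delay - offset
    offset = (master_to_device - device_to_master) / 2
    delay = (master_to_device + device_to_master) / 2
    return offset, delay


# Made-up timestamps in microseconds: the device clock runs 150 us ahead
# of the master and the one-way network delay is 20 us.
offset_us, delay_us = ptp_offset_and_delay(t1=1000, t2=1170, t3=1200, t4=1070)

# The device subtracts the offset so a shared fire time means the same instant.
corrected_device_time = 1200 - offset_us
```

Once every camera and light controller has applied its own correction, an instruction such as "fire at time T" refers to the same moment on every device.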

Downey continued: ‘[IEEE1588] means you can do it over Ethernet [via GigE Vision 2.0]. One of the big issues is ease of use and 1588 makes it very easy. You’re exchanging parameters via Ethernet and you’re triggering over Ethernet.

‘It builds into, not necessarily plug-and-play, but ease of use, particularly if you have a complex system. We have customers using 30 or 40 lights and 30 or 40 cameras on one machine. This is very involved inspection. When you’re getting to this level, or even more than one camera and more than one light, you really do need this ease of synchronisation and ease of seeing what it looks like and how to connect it easily to these devices.’

Teissie agreed: ‘More and more we’re seeing systems adopting IEEE1588. Lighting and robots and the cameras can all use the same connector … [which] makes it much easier to synchronise them over a cheap GigE connection.’