Modern agriculture relies heavily on mechanisation to obtain the kind of crop yields needed to feed the world’s population. Mechanical systems for harvesting and sorting crop plants have developed alongside farming practices such as the use of fertiliser and pesticides and the selective breeding of high-yield varieties. In the western world, cotton is now harvested with a cotton picker, a machine that removes the cotton from the boll – or, in places where picker varieties can’t grow, a cotton stripper that strips the entire boll from the plant. The combine harvester, a classic example of mechanisation in agriculture, is used for cereals and combines reaping, binding and threshing in a single operation.

There are instances where machine vision is used out in the field, but this is a very unforgiving environment for a vision system to operate in – largely because of the changing lighting conditions. ‘One of the major problems with machine vision out in the field is that it doesn’t provide the flexibility and dynamics of the human eye – the lighting has to be carefully controlled, for instance,’ comments Ben Dawson, director of strategic development at Dalsa. Dalsa has been supplying vision hardware for various agricultural applications for a number of years – the company supplied hardware to researchers at the University of California 15 years ago for the development of an automated weeding system.

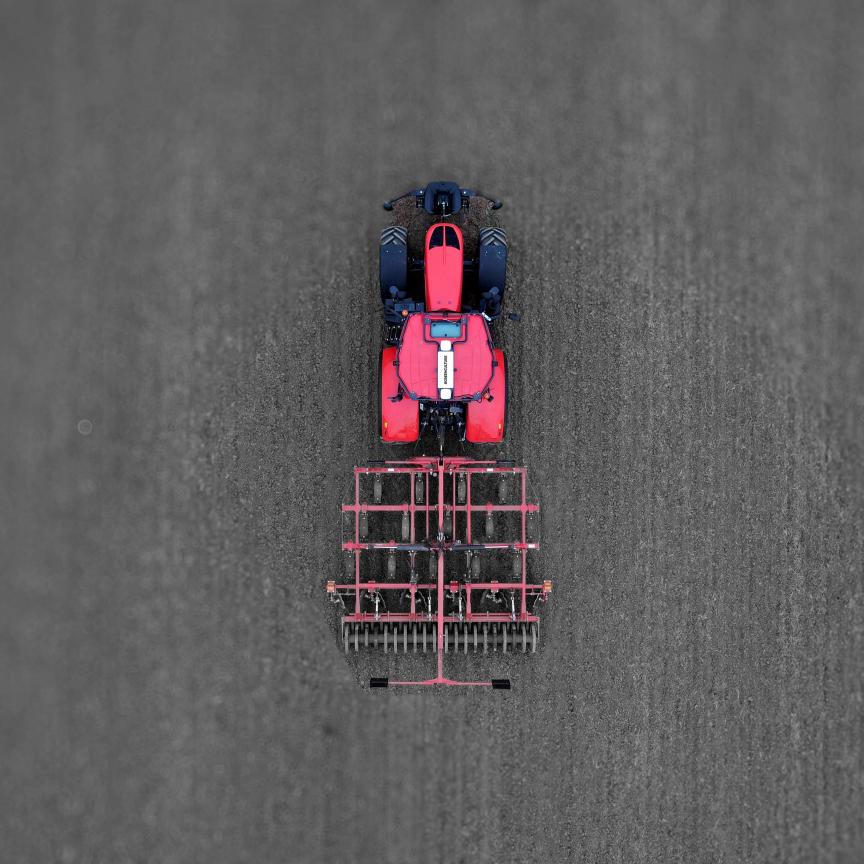

More recently, researchers at Wageningen University and Research Centre in The Netherlands have developed an automated weeding machine for controlling volunteer potato plants – potato plants that survive the winter and emerge in spring as weeds in a different crop rotation, such as sugar beet. The machine, engineered as part of a five-year PhD project culminating last year, consists of two Marlin cameras from Allied Vision Technologies in a covered and artificially lit enclosure that analyse images of the field as the system is dragged behind a tractor.

Volunteer potato plants were used as a model weed. They are a source of nematodes and diseases such as Phytophthora infestans (potato blight), which aren’t controlled by pesticides in the standard manner because the plants are not the crop. ‘The automated system is of interest to farmers as there are currently no selective herbicides available to kill these plants,’ explains Ard Nieuwenhuizen, a researcher at Wageningen University working on the project. Currently, the weeds have to be removed plant by plant and by hand, either by applying a non-selective herbicide like glyphosate or by uprooting them.

The system was programmed to recognise weeds based on colour and the crop row pattern. Crops are seeded in rows a known distance apart and therefore everything between those lines must be a weed. This information is used to train the system, after which the weed and the crops are distinguished based on colour. The system conducts a background subtraction to remove all the soil in the image and identify only the green vegetation. About five to 10 weed potato plants and sugar beet plants have to be detected for the system to be trained. During field trials 84 per cent of the weed potato plants were controlled or killed, while 1.4 per cent of sugar beet plants were unintentionally killed. ‘It is difficult to design a vision system for use in agriculture, because of the environment,’ states Nieuwenhuizen. ‘Every field and every crop is different and, what’s more, the conditions from day to day and even within a day will differ. Light levels can vary enormously, which is why the cameras were covered and the scene artificially lit. This meant that the cameras only had to cope with the variable conditions caused by the crop itself and not changes in light.’
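The article does not say how the background subtraction or the row-based training was implemented. As a rough illustration only, the sketch below assumes an excess-green index (2G − R − B on normalised colour) to separate vegetation from soil, and then labels vegetation pixels as crop or weed by their distance from known crop-row positions. The function names, the threshold and the row geometry are all hypothetical.

```python
def excess_green(r, g, b):
    """Excess-green index on normalised RGB (an assumed choice of index)."""
    total = r + g + b
    if total == 0:
        return 0.0
    return (2 * g - r - b) / total


def vegetation_mask(image, threshold=0.1):
    """Return a 2D list of booleans: True where a pixel looks like vegetation.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    `threshold` is a hypothetical value that would need tuning per camera.
    """
    return [[excess_green(*px) > threshold for px in row] for row in image]


def label_training_pixels(mask, row_centres, half_width):
    """Split vegetation pixels into crop and weed training sets.

    Vegetation within `half_width` columns of a known crop-row centre is
    treated as crop; vegetation anywhere else is treated as weed.
    """
    crop, weed = [], []
    for y, row in enumerate(mask):
        for x, is_veg in enumerate(row):
            if not is_veg:
                continue
            in_row = any(abs(x - c) <= half_width for c in row_centres)
            (crop if in_row else weed).append((y, x))
    return crop, weed
```

The pixels labelled this way would only serve as training examples; the colour classifier that follows is what decides on plants growing within the crop row itself.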

The researchers used algorithms that were adaptive to changes in colour, in that the algorithm only takes into account weeds from the past 10m in the field. Changing soil conditions within a field, such as water and nitrogen content, can cause colour changes in the plants. Adaptive algorithms meant that only the local differences between crops and weeds were used in classification.
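One way to picture this kind of local adaptation is a classifier that forgets any training sample more than 10m behind the machine. The sketch below is an assumption-laden illustration, not the Wageningen algorithm: it uses a single hypothetical colour value per plant, keeps both crop and weed samples in the window, and classifies by whichever local mean is closer.

```python
from collections import deque


class LocalColourModel:
    """Nearest-mean colour classifier trained only on recent field samples."""

    def __init__(self, window_m=10.0):
        self.window_m = window_m
        self.samples = deque()  # entries are (position_m, label, colour_value)

    def add(self, position_m, label, value):
        """Record a labelled sample and drop any that fall outside the window.

        Assumes samples arrive in order of increasing position.
        """
        self.samples.append((position_m, label, value))
        while position_m - self.samples[0][0] > self.window_m:
            self.samples.popleft()

    def _mean(self, label):
        values = [v for _, lab, v in self.samples if lab == label]
        return sum(values) / len(values) if values else None

    def classify(self, value):
        """Return 'crop' or 'weed' by closest local mean, or None if untrained."""
        crop_mean = self._mean("crop")
        weed_mean = self._mean("weed")
        if crop_mean is None or weed_mean is None:
            return None
        if abs(value - crop_mean) <= abs(value - weed_mean):
            return "crop"
        return "weed"
```

With a model like this, the same colour value can be classed as weed in one part of the field and as crop in another, which is the behaviour the adaptive approach is after when soil conditions shift plant colour.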

There is a window of around four weeks between when weed plants start to emerge and when they start to spread disease, and the plants have to be removed within that time. This time constraint, according to Nieuwenhuizen, is the main reason for developing the automated system. In addition, removing weed plants requires a lot of manual labour, which is expensive. The team at Wageningen University has shown that the machine is more economical than manual weeding.

Allied Vision Technologies’ colour and near-infrared (NIR) Marlin cameras are used to distinguish between crops and weeds. Combining images captured in the NIR and red spectrums gives a good indication of how healthy a plant is and how fast it’s growing, an evaluation termed the Normalised Difference Vegetation Index (NDVI). Using NIR in combination with colour images gives extra features to better distinguish between the crops, which would have a higher NDVI associated with healthier growth, and the weeds, which would typically exhibit a lower NDVI.
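NDVI is conventionally calculated per pixel as (NIR − Red) / (NIR + Red), giving a value between −1 and 1, with healthy vegetation towards the upper end. A minimal sketch of the calculation follows; the function names and the list-of-rows image layout are assumptions made for illustration, and the handling of a zero denominator is a convenience rather than part of the definition.

```python
def ndvi(nir, red):
    """Normalised Difference Vegetation Index for one pixel.

    `nir` and `red` are non-negative reflectance or intensity values.
    Returns 0.0 when both are zero to avoid dividing by zero.
    """
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


def ndvi_image(nir_img, red_img):
    """Apply ndvi() pixel by pixel to two aligned images (lists of rows)."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_img, red_img)
    ]
```

For example, a pixel with an NIR value of 200 and a red value of 50 gives an NDVI of 0.6, while equal NIR and red values give 0.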

Camera resolution was important (1mm resolution per pixel was required) to locate the plants precisely. The Marlin’s FireWire interface made it easy to connect the cameras with National Instruments’ LabView real-time operating system. ‘The machine is running behind a tractor and so it’s important to have a real-time system with an actuator continuously carrying out tasks,’ Nieuwenhuizen says.

The system is currently a proof-of-principle machine and has been demonstrated to researchers and farmers. Nieuwenhuizen is now extending the technology to identify other weeds to make it more commercially attractive for farmers. ‘Most other weeds are smaller than potato plants,’ he says. ‘There are also further colour differences from weed to weed and from crop to crop, and tests need to be carried out to see if the adaptive algorithm will work well in these situations. The cameras give good quality images, but the intelligence in the system needs to be extended to be able to detect other weeds as well.’

Sorting fruit

Fruit picking is one task that is still largely done by hand. ‘There has been a lot of work carried out developing automated fruit picking, because of the increasing cost of manual labour and the value of the crop,’ comments Dawson of Dalsa. The Automation Centre for Research and Education (ACRO), based in Belgium, has developed a mechanised fruit picker that uses a large blue tent to surround the fruit trees as the tractor moves through the orchard. The tent provides a diffuse blue background, which creates high contrast between the red apples and green leaves, making the vision job a lot easier. ‘Designing a robotic system that will probe in and around the branches harvesting fruit without the tent is much harder to achieve because the background cannot be controlled,’ says Dawson. In some circumstances, high contrast can also be achieved with infrared or UV imaging – a system has even been developed for harvesting tomatoes using low-intensity X-rays.

A machine vision system can be used to distinguish between cherries and the background by the fruit’s colour and shape. Image courtesy of Dalsa.

There are some crops that generally wouldn’t lend themselves to using vision for harvesting – nuts, for instance, are harvested by vigorous mechanical shaking, causing them to fall onto a plastic sheet. ‘You can’t really beat that in terms of economy,’ says Dawson.

‘Replacing human vision (and muscle) in the field is perhaps the most difficult vision (and robotics) task in the food production continuum,’ comments Dawson. The next step is using vision to sort and grade foodstuffs.

As in any other manufacturing process, depending on the value of the parts, it’s cheaper to find a defect early in the production chain rather than after time and money have been spent to transport it and wash it, and so forth. ‘Here, the machine vision aspect is still challenging, but easier because there is more control of the environment – the lighting can be controlled and the stream of material to sort or inspect is constrained,’ Dawson says.

Food sorting also used to be carried out mechanically – almonds can be sorted by using air jets to blow any debris away from the nuts; potatoes can be sorted by placing them in a saline solution, whereby the potatoes float and any stones sink. By moving to machine vision, sorting can be faster and grading can be automated. Using optical sorting, the potatoes can be graded in terms of size and whether the produce is damaged in any way, as well as sorting out any debris. Dawson notes: ‘It’s a matter of value-adding, because now the higher-value components can be sorted out and preferentially treated, which justifies the cost of the machine vision system.’

MAF Roda Agrobotic, based in Montauban, France, manufactures grading and packing systems for fresh fruit and vegetable packing houses. Its GlobalScan 5 vision system is designed to sort fruit as it moves along a sizer (a conveyor made up of lanes that presents the fruit to the vision system). The sizer transports the fruit while rotating it in order to image the entire surface area. The vision system is just one aspect and there are numerous other sensors involved, including spectrometers to measure the sugar content, a sensor for the firmness, and strain gauge sensors to determine the weight.

The various measurements are made and then the fruit is diverted down one of 10 to 60 outlets, depending on the sizer. ‘The system is set up so that each outlet collects fruit with the same characteristics – the same weight, the same colour, the same quality, the same firmness, etc,’ explains Michel Rodière, electronic department manager at MAF Roda Agrobotic. The decision to send a piece of fruit to one outlet is made through Orphea, MAF Roda’s software. ‘The user might decide to divert small fruit at the first outlet, red fruit at outlet 15, and so on until the end of the sizer,’ Rodière says. The conveyor operates at speeds up to 15ft/s per lane.
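The outlet logic Rodière describes can be thought of as an ordered list of rules, checked in outlet order as the fruit travels down the sizer. The sketch below is purely illustrative and says nothing about how Orphea works internally; the `Fruit` fields, the weight threshold and the default outlet are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fruit:
    """Measurements for one piece of fruit (hypothetical fields)."""
    weight_g: float
    colour: str
    quality: int  # assumed scale: 1 is the best grade


def assign_outlet(fruit, rules, default_outlet):
    """Return the first outlet whose rule matches, else the default outlet.

    `rules` is a list of (outlet_number, predicate) pairs, ordered by
    position along the sizer.
    """
    for outlet, predicate in rules:
        if predicate(fruit):
            return outlet
    return default_outlet


# Example rule set echoing the quote: small fruit first, red fruit at outlet 15.
RULES = [
    (1, lambda f: f.weight_g < 120),  # 120 g is an invented cut-off
    (15, lambda f: f.colour == "red"),
]
```

Because the rules are checked in order, a small red fruit leaves at outlet 1 rather than outlet 15, so the ordering of the rules is part of the sorting policy.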

Cameras capture 20 colour and 20 infrared images per piece of fruit as it rotates. Near infrared is used to detect disease or pest damage in the fruit, such as bitter pit, russet and bruises in apples. These defects are characterised by dark spots on the skin, which are easily identified in IR images because there is no interference from the colour of the fruit.

Vision is used, firstly, for measuring the dimensions of the fruit to sort it according to the diameter, area or volume. ‘Fruit is rejected if it is the wrong shape – round lemons, for example, are removed, because lemons need to be elongated,’ Rodière explains. Secondly, the cameras will sort the fruit according to colour. ‘This isn’t a simple task and customers will want data on numerous colour criteria, such as the hue of the overall background, the hue of the coloured part of the fruit, the percentage of the surface area that is a certain colour, how well-distributed the colour is across the surface of the fruit, etc.’

Readout from the automated weeding machine developed at Wageningen University and Research Centre, which is designed to spray weed potato plants selectively in a farmer’s field

The Matrox Imaging Library (MIL) is used to process images from the cameras. Binarisation and blob analysis are used to separate the fruit from the background and obtain dimensions. Colour calculations are typically histograms that are carried out in MIL. ‘Processing separates the hue of the background (the green part of an apple, for instance) from the hue of the coloured area (any red patches), and then statistical tests are performed to determine the various criteria – the percentage area of a specific hue, for instance,’ explains Rodière.
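To make the processing chain concrete, here is a plain-Python stand-in for the steps described: threshold a grey image, find the largest connected blob, measure it, and then calculate what fraction of the blob falls within a given hue range. It does not use MIL or reproduce MAF Roda’s processing; the thresholds, the 4-connectivity and the definition of ‘red’ are assumptions.

```python
import colorsys
from collections import deque


def binarise(grey, threshold):
    """True where a pixel is brighter than the threshold (assumed to be fruit)."""
    return [[v > threshold for v in row] for row in grey]


def largest_blob(mask):
    """Return the (y, x) pixels of the largest 4-connected True region."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    best = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            blob, queue = [], deque([(y0, x0)])
            seen[y0][x0] = True
            while queue:  # breadth-first flood fill from the seed pixel
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(blob) > len(best):
                best = blob
    return best


def blob_size(blob):
    """Area in pixels, then bounding-box height and width (non-empty blob)."""
    ys = [y for y, _ in blob]
    xs = [x for _, x in blob]
    return len(blob), max(ys) - min(ys) + 1, max(xs) - min(xs) + 1


def red_fraction(rgb, blob, max_hue_deg=30.0):
    """Fraction of blob pixels whose hue is within max_hue_deg of pure red."""
    red = 0
    for y, x in blob:
        r, g, b = (c / 255 for c in rgb[y][x])
        hue_deg = colorsys.rgb_to_hsv(r, g, b)[0] * 360
        if min(hue_deg, 360 - hue_deg) <= max_hue_deg:
            red += 1
    return red / len(blob)
```

The pixel dimensions from `blob_size` would still need converting to millimetres using the camera calibration, and a production system would build a full hue histogram rather than test a single hue band.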

The third, and according to Rodière the most important, use of vision in the fruit sorting system is to identify defects, such as quality flaws on the skin – dark spots, any kind of disease, or even natural blemishes could result in the fruit being rejected. ‘The severity of the disease will differ, some of which will result in a rejection of the fruit while others will be accepted depending on the market and the requirements of the packing house,’ he says.

The vision system can define up to eight quality categories, eight colour categories, and 16 categories for size. Different combinations of characteristics are then programmed into Orphea in order to sort the fruit.

Infrared imaging is a useful tool to separate the fruit from the background, explains Rodière: ‘All fruits viewed in infrared are depicted at the same level of grey – shiny yellow apples or dark red apples will appear at the same grey level in infrared.’ In addition, he notes defects will also be more visible in infrared and will not be influenced by the colour of the fruit. Certain diseases can also be defined by colour and IR images can be used to determine a region of interest and then processed in combination with colour information.

The sorting machines have to be quick and reliable, but also must handle the fruit gently to avoid any damage. According to Rodière, the main advantage with GlobalScan 5 is its performance: ‘80 to 90 per cent of what was previously a manual process for sorting apples can be automated with this system.’ A manual final check is often carried out prior to packing the fruit in boxes to send to the supermarket, he says, but the majority of human labour is saved.

The system also accumulates data about the fruit during the processing in order to determine a price to pay the grower. Records are kept to trace the produce as it passes through the packing house to the supermarket.

Food packing

Once the produce has been sorted and graded, the final steps of food production are packaging and transport to the consumer, which, according to Dawson of Dalsa, have made extensive use of machine vision for many years – examples include optical recognition of barcodes for sorting packaged goods, or the automated visual inspection of package labels for correctness and print quality.

Dawson says that sorting organic produce presents a different set of challenges from the traditional part gauging and metrology work done by machine vision systems. ‘Machine vision can measure a machine part to a 10,000th of an inch, which is something a human cannot do,’ he says. ‘However, a human would be able to look at an apple and instantly tell if it’s good or bad.’

A large number of food inspection applications use 3D imaging. An automated system to disinfect cow teats prior to milking uses a robot arm that sprays disinfectant guided by a 3D laser-based vision system which locates the cow’s teats. ‘This is a good example of a labour-intensive process that has been almost completely automated,’ says Dawson. In contrast, many traditional machine vision tasks are effectively 2D or can be arranged to be so.

Dawson comments: ‘A combination of advances in robotics and advances in machine vision, as well as a reduction in the cost of technology, are making many agricultural applications practical, rather than simply being academic demonstrations of what could be done with no constraints on time and money.’

Most automated vision applications are and will be post-harvest, for sorting, grading and packaging where the environment can be controlled more easily. Some, however, are finding their way further upstream into the farmer’s field – the automated milking system is a good practical example – and as other systems are developed, vision looks likely to infiltrate further into this area.