Automated manual inspection refers to automating inspection tasks that would otherwise be performed by human operators. In a manual process, operators follow a standard operating procedure to visually inspect parts, and vision systems can be used to automate these tasks.

Machine vision systems used in such processes vary, but are generally based on a configuration of technologies such as sensors, cameras, and lighting to aid the inspection and measurement of different components.

For example, typical systems can be 1D (line scan inspection), 2D (image analysis) and 3D (time of flight, depth mapping).

According to Narcisa Pinzariu, Technical Lead for Computer Vision at the University of Sheffield Advanced Manufacturing Research Centre (AMRC), demand for automation and quality inspection has been rising in recent years – and vision-guided robotic systems, which use cameras and sensors to better understand their environment, are “used more and more to increase productivity for manufacturers of all sizes”.

“Machine vision aids manual assembly, [and] the errors that arise in these operations can be reduced or prevented by using a vision system to assist the human operator. This is something the AMRC has experience with – developing an entire process that allows the operator to follow a set of instructions displayed on a screen. The inspection takes place in real time and checks if the operation has been carried out correctly, ensuring the operator does the relevant checks and cannot move on to the next task before correction,” she says.
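To make the gating idea concrete, here is a minimal Python sketch of an instruction sequence in which the operator can only advance once the current step passes a vision check. It assumes the vision system has already reduced each camera frame to a dictionary of detected features; `Step`, `GuidedAssembly` and the feature names are hypothetical illustrations, not the AMRC's implementation.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional

# Hypothetical observation: features a vision system reports for the current frame,
# e.g. {"bracket_a_present": True, "screw_count": 3}.
Observation = Dict[str, Any]


@dataclass
class Step:
    instruction: str                      # text displayed to the operator on screen
    check: Callable[[Observation], bool]  # vision-based pass/fail test for this step


class GuidedAssembly:
    """Shows one instruction at a time and only advances when its check passes."""

    def __init__(self, steps: List[Step]) -> None:
        self.steps = steps
        self.index = 0

    @property
    def finished(self) -> bool:
        return self.index >= len(self.steps)

    def current_instruction(self) -> Optional[str]:
        return None if self.finished else self.steps[self.index].instruction

    def submit(self, observation: Observation) -> bool:
        """Evaluate the latest observation; return True and advance only on a pass."""
        if self.finished:
            raise RuntimeError("all steps are already complete")
        if self.steps[self.index].check(observation):
            self.index += 1
            return True
        return False  # operator stays on this step until the error is corrected


# Example job with two hypothetical steps.
job = GuidedAssembly([
    Step("Fit bracket A", lambda obs: obs.get("bracket_a_present", False)),
    Step("Insert four screws", lambda obs: obs.get("screw_count", 0) == 4),
])
```

Keeping each check as a plain function means the same workflow logic could wrap either a rule-based check or an AI model without changes.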

Reduced cycle times

The AMRC also recently worked with a customer that wanted to improve its existing process by reducing the cycle time for measuring the dimensions of a part. It was decided to use a vision system mounted on a gantry robot, and an algorithm was developed to compare the scans with computer-aided design (CAD) drawings of ideal parts to check whether the manufactured part is within tolerance. The project focused not only on developing the machine vision solution, but also on the communication between different software packages and on a graphical user interface (GUI) for the operator. As Pinzariu explains, the GUI would also output a report highlighting the required measurements and the overall decision, to aid the operator in their inspection.

“This initial stage of the project showed that a considerable reduction in the cycle time can be achieved by using the method proposed by the AMRC,” she says.
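The final stage of such a process, comparing measured dimensions with nominal CAD values and producing a report with an overall decision, might look something like the Python sketch below. It assumes the dimensions have already been extracted from the scans (the scan-to-CAD alignment itself is not shown), and the `Feature` fields, feature names and tolerance values are hypothetical rather than taken from the AMRC project.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Feature:
    name: str
    nominal_mm: float    # value taken from the CAD model of the ideal part
    lower_tol_mm: float  # allowed deviation below nominal (given as a positive number)
    upper_tol_mm: float  # allowed deviation above nominal


def inspect_part(features: List[Feature],
                 measured_mm: Dict[str, float]) -> Tuple[List[str], bool]:
    """Compare measured dimensions with CAD nominals; return report lines and overall decision."""
    lines: List[str] = []
    all_ok = True
    for f in features:
        value = measured_mm[f.name]
        deviation = value - f.nominal_mm
        ok = -f.lower_tol_mm <= deviation <= f.upper_tol_mm
        all_ok = all_ok and ok
        lines.append(
            f"{f.name}: measured {value:.3f} mm, "
            f"deviation {deviation:+.3f} mm -> {'OK' if ok else 'OUT OF TOLERANCE'}"
        )
    lines.append(f"Overall decision: {'PASS' if all_ok else 'FAIL'}")
    return lines, all_ok


# Hypothetical part with two toleranced features.
features = [
    Feature("length", 50.0, 0.1, 0.1),
    Feature("bore_diameter", 10.0, 0.0, 0.05),
]
```

The report lines mirror what the GUI is described as showing: each required measurement alongside a single overall decision for the operator.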

Computer vision-based solutions are set to become more affordable, says AMRC’s Narcisa Pinzariu

Pinzariu also cites an AMRC project that focused on helping an operator detect and classify defects on complex components. The cell built for this project uses a robot to position the components in front of a camera, which takes images from different positions. The images are then analysed using traditional machine vision algorithms, such as edge detection, to find any defects.

“Additionally, we developed other approaches using AI to classify the defects into their categories. The solution proposed offers a reduction in cycle time,” says Pinzariu.
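As an illustration of edge-based defect detection, the sketch below applies the standard 3×3 Sobel operator to a grayscale image and flags pixels whose gradient magnitude exceeds a threshold. It assumes the inspected surface should otherwise be smooth, so strong edges indicate a scratch or mark; the threshold and function names are hypothetical, and the AMRC's actual algorithms are not described in the source. A production system would more likely use an optimised image-processing library.

```python
import math
from typing import List, Tuple

Image = List[List[int]]  # grayscale, row-major, values 0-255


def sobel_magnitude(img: Image) -> List[List[float]]:
    """Gradient magnitude using the 3x3 Sobel operator (border pixels left at 0)."""
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal gradient: right column minus left column, centre row weighted 2.
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]) - (
                img[y - 1][x - 1] + 2 * img[y][x - 1] + img[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row, centre column weighted 2.
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]) - (
                img[y - 1][x - 1] + 2 * img[y - 1][x] + img[y - 1][x + 1])
            mag[y][x] = math.hypot(gx, gy)
    return mag


def defect_candidates(img: Image, threshold: float) -> List[Tuple[int, int]]:
    """(x, y) pixels whose edge strength exceeds the threshold on a surface expected to be smooth."""
    mag = sobel_magnitude(img)
    return [(x, y) for y, row in enumerate(mag) for x, v in enumerate(row) if v > threshold]
```

Classifying the flagged regions into defect categories, as in the AI approaches Pinzariu mentions, would be a separate step built on top of this kind of detection.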

Different paths

Manufacturers interested in automating manual inspection can take a few different paths. According to Ed Goffin, Senior Marketing Manager at Pleora Technologies, the first, and perhaps most complex, is automating a process to “fully remove the human” by integrating cameras, real-time imaging and processing, and often robotics. Although there is a market for this type of approach, especially in “dull, dirty and dangerous tasks, where it’s best to try and remove the human from the process”, Goffin more often finds that manufacturers are not necessarily looking at replacing humans altogether. This is largely because, in many cases, the cost of fully automating a process is too high – for example in lower-run manufacturing, or for products with a high degree of customisation.

“Instead, they would like to use technologies to help ensure humans make the same consistent, reliable, and trackable decisions in these manual processes,” he says.

A common example of such an application is camera-based visual inspection, where a manufacturer can use a combination of machine vision and AI to help an operator spot product differences. Here, basic image comparison is used first to highlight differences between a known good product and the product to be inspected. As the operator accepts or rejects highlighted differences, says Goffin, “behind the scenes, they are transparently training an AI model.”

When inspecting items such as electronics boards, AI can begin to offer decision guidance to the operator after just a few inspections

“After a few inspections, AI will start providing decision guidance to the operator. One of the key markets for this type of technology is electronics, where a human operator is verifying the components on a board and that the correct assembly steps are complete,” he adds.
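A rough Python sketch of the workflow Goffin describes: differences from a known good ("golden") image are highlighted, each operator verdict is stored as a labelled example, and once enough examples exist the system suggests a verdict for new differences. A 1-nearest-neighbour rule stands in for the AI model here; it is not Pleora's method, and the feature choice (changed area and mean intensity difference), the threshold and the `min_examples` default are assumptions. The sketch also assumes the images are already aligned and that a verdict of `True` means the operator confirmed the difference as a real defect.

```python
import math
from typing import List, Optional, Tuple

Image = List[List[int]]         # grayscale, row-major
Features = Tuple[float, float]  # (changed area in pixels, mean absolute difference)
BBox = Tuple[int, int, int, int]  # (min_x, min_y, max_x, max_y)


def difference_region(golden: Image, sample: Image,
                      threshold: int = 30) -> Optional[Tuple[Features, BBox]]:
    """Compare against the golden image; return (features, bounding box) or None if no change."""
    coords, total = [], 0
    for y, (g_row, s_row) in enumerate(zip(golden, sample)):
        for x, (g, s) in enumerate(zip(g_row, s_row)):
            d = abs(g - s)
            if d > threshold:
                coords.append((x, y))
                total += d
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    features: Features = (float(len(coords)), total / len(coords))
    return features, (min(xs), min(ys), max(xs), max(ys))


class OperatorFeedbackModel:
    """Stores operator verdicts on highlighted differences and suggests a verdict
    for new ones via 1-nearest-neighbour (a stand-in for a real AI model)."""

    def __init__(self, min_examples: int = 3) -> None:
        self.examples: List[Tuple[Features, bool]] = []
        self.min_examples = min_examples

    def record(self, features: Features, is_defect: bool) -> None:
        self.examples.append((features, is_defect))

    def suggest(self, features: Features) -> Optional[bool]:
        if len(self.examples) < self.min_examples:
            return None  # not enough inspections yet to offer guidance
        nearest = min(self.examples, key=lambda ex: math.dist(ex[0], features))
        return nearest[1]
```

The two features have very different scales, so a real system would normalise them (or learn features directly from image patches) before comparing examples.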

Data gathering

Goffin points to a few examples of manufacturers using new technologies to add decision-support for manual processes. For example, in the electronics market, one Pleora customer uses machine vision to provide a “second set of eyes” for the human inspector to help them identify missing or damaged components. Some of the most common issues are missing labels, misaligned components, and soldering defects.

“These may be issues missed by an automated inspection, or for short-run products because it’s too time-consuming and expensive to set up the system. The system is also used to visually train new employees on the inspection line, so they can more easily distinguish between good and bad products,” he says.

For Goffin, one of the interesting by-products of adding automated decision-support to manual processes is that it enables manufacturers to start gathering accurate data. For example, automated and customisable reporting tools give manufacturers data on visual inspection and manual manufacturing steps.

“Often, visual inspection is a bit of a data ‘black hole’ for manufacturers, which makes it both difficult to ensure end-to-end quality and time-consuming to resolve issues when errors occur,” he says.

“In the case of this manufacturer, they save images of every manually inspected part, as well as operator notes, to their manufacturing resource planning system. This is primarily used to help speed up root cause analysis and resolution if there is an in-field product issue,” he adds.

For Goffin, a key benefit of using vision technology to automate manual inspection for this manufacturer is that it enables it to ensure “consistent, reliable and traceable human decision-making for incoming, in-process, and outgoing inspection steps across different operators”. It also detects defects commonly missed by automated optical inspection (AOI) and “gathers actionable data on manual steps to help ensure end-to-end quality and speed issue resolution”.

“We have also worked with a distillery that is trialling visual inspection tools, as both a visual learning tool for new employees so they can learn required brand elements, and as a pass/fail QC check to verify all elements are on a bottle before it’s packaged,” he says.

Trialling visual inspection tools at a distillery, as both a visual learning tool for new employees so they can learn required brand elements, and as a pass/fail QC check to verify all elements are on a bottle

“The system helps the distillery increase production and reduce costs by avoiding rework and downtime – if they find an error on a bottle, they report it and it takes about five minutes to correct. Since they produce around 1,500 bottles per day, it reduces the risk that a poorly labelled product reaches store shelves. It also eliminates subjective, stressful and time-consuming decision-making for employees,” he adds.

Augmented reality

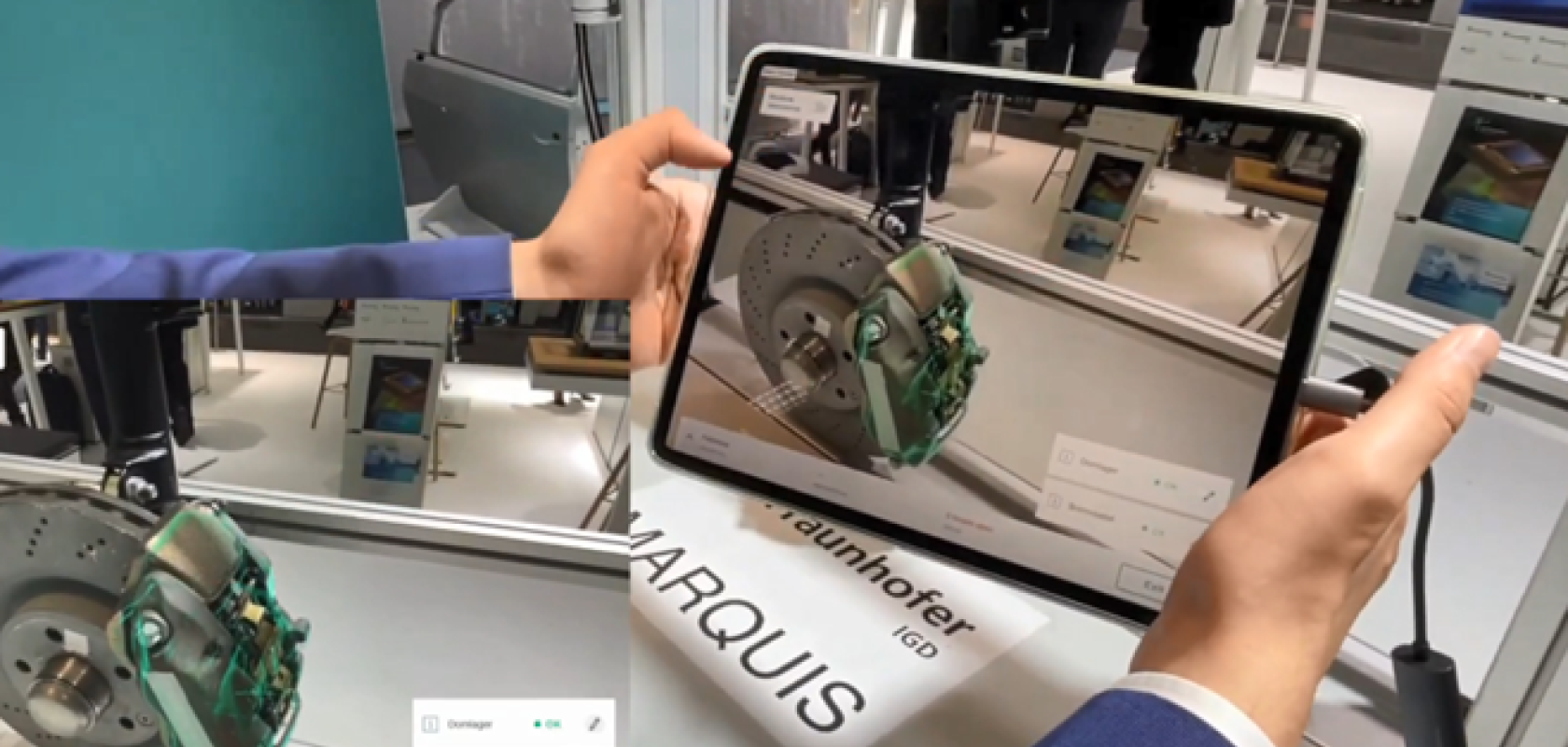

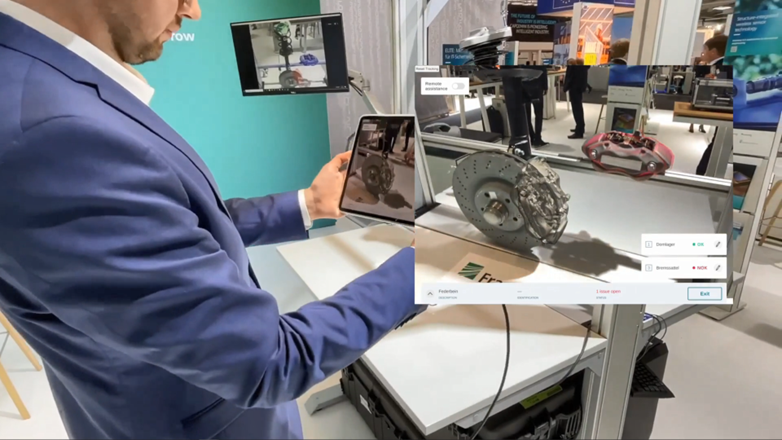

There are a growing number of examples of automated manual inspection systems featuring AI and AR technologies. In these processes, a predefined program of inspection tasks on a complex product structure is carried out and automatically evaluated, with inspection characteristics being classified and displayed to the inspection engineer via AR. As Holger Graf, Head of Virtual and Augmented Reality at the Fraunhofer Institute for Computer Graphics Research IGD, explains, the overlay of the physical objects with their digital representations in such systems facilitates a simultaneous target-actual comparison with the given CAD specification and “shows the test engineer any deviations in position or attitude directly superimposed on the object itself.”

“The training of the AI is purely ‘synthetic.’ Newly simulated training images are derived from the CAD model data and receive automated annotations and positional information of components, without the need for time-consuming photo-shooting campaigns and manual annotations. The inspection system is then able to perform object recognition, classification, and positional estimation without ever having seen the actual assembly and product configuration ‘live’ before,” he says.
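The target-actual comparison Graf describes can be reduced, per component, to a position error and an attitude (orientation) error against the CAD pose. The Python sketch below assumes the recognition step has already estimated each component's position and orientation, represents orientation as a unit quaternion, and uses hypothetical tolerances; the source does not say how Fraunhofer IGD's software represents poses internally.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # unit quaternion (w, x, y, z)


def quat_from_axis_angle(axis: Vec3, angle_deg: float) -> Quat:
    """Unit quaternion for a rotation of angle_deg degrees about the given axis."""
    n = math.sqrt(sum(a * a for a in axis))
    half = math.radians(angle_deg) / 2
    s = math.sin(half) / n
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)


@dataclass(frozen=True)
class Pose:
    position_mm: Vec3
    orientation: Quat


def pose_deviation(target: Pose, actual: Pose) -> Tuple[float, float]:
    """Position error (mm) and attitude error (degrees) between the CAD target and the actual pose."""
    position_err = math.dist(target.position_mm, actual.position_mm)
    # Angle of the relative rotation between two unit quaternions: 2 * acos(|q1 . q2|).
    dot = abs(sum(a * b for a, b in zip(target.orientation, actual.orientation)))
    attitude_err = math.degrees(2 * math.acos(min(1.0, dot)))  # clamp guards rounding above 1
    return position_err, attitude_err


def within_spec(target: Pose, actual: Pose, pos_tol_mm: float, att_tol_deg: float) -> bool:
    """True if both deviations are inside the (hypothetical) tolerances."""
    pos_err, att_err = pose_deviation(target, actual)
    return pos_err <= pos_tol_mm and att_err <= att_tol_deg
```

Quaternions are used here because the angle between two orientations has the simple closed form 2·acos(|q1·q2|), which avoids the wrap-around problems of comparing Euler angles directly. The two deviation values are what an AR overlay would then display on the object itself.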

Graf cites several areas where such technologies are used, including sorting processes, where the specific sorting tasks for objects are displayed to human operators in colour-coded form.

“They are also used in automated quality inspections, as well as at AR-supported assembly workstations, where recording is done by means of multi-camera arrays and where deviations from predefined CAD or assembly specifications must be detected and verification must take place simultaneously,” he says.

It is also possible to support virtual sampling tasks, where potential collisions between components can be detected and directly displayed to operators before product assembly begins.

“Solutions developed by Fraunhofer IGD are especially supportive in mechanical and plant engineering, as well as in the automotive sector, where they serve to reduce errors and consequently cut costs,” says Graf.

“A further interesting example is incoming goods inspection with our MARQUIS software package, where every single component can be checked for defects at variance with the CAD data. We are also currently working on the further development of an AR-supported assembly workbench, which we will present at the Hannover Messe in 2023. This will support employees working on complex product assembly lines, showing them which element is to be picked next and where it fits into the overall configuration,” he adds.

Future trends

Goffin believes there are several steps in a manual manufacturing process where an operator could use image-based analysis to ensure accurate decisions and streamline processes. One example is work checklists or job travellers, where an operator is tasked with completing multiple production or verification steps. Goffin says that by digitising this process, operators could visually compare their work against known good samples.

“The system can help make decision-suggestions around quality. Users can also capture and save images to verify they have completed work properly, and save and share notes or alerts as they spot issues,” he says.

Goffin also points out that vision technologies and AI can be used for digital learning applications. Since most humans “learn best visually”, he says image-based systems and AI decision-support tools “can help show a new operator how to properly complete a task or spot potential quality issues”. Another trend is focused on making these types of technologies easier to use on a manufacturing floor.

“Whether it’s a machine vision, AI, or AR tool, it needs to be simple for an operator to train and use. The other, maybe more immediate trend, is technologies that can help manufacturers deal with labour shortages. It’s getting hard to find people for these manufacturing roles, and there’s definitely a place to use vision-based tools to help train new employees, and take away some of the stressful decisions that lead to burnout,” he says.

“Digitising some of these decisions and processes also makes it easier and faster to onboard new employees – you can have more assurance that your new employees are making the same decisions as your long-time employees,” he adds.

Deep learning

As far as augmented reality output units are concerned, Graf says looking into the future is “always a bit like reading the coffee grounds”, but suggests there is a lot of momentum in novel eyewear developments, with “small but positive advances that currently facilitate a continuum of perceptual realities” known as eXtended Reality (xR), ranging from pure AR environments to mixed virtual/real setups and ultimately fully immersive virtual reality.

“What xR glasses will look like in 2035 depends on further developments in hardware, sensor technology and integrated software solutions. Already today, novel AI models on resource-efficient chips can be used to run object recognition processes. Neuromorphic chips, which enable energy-efficient AI-based image processing, are also becoming increasingly important,” he says.

“We are working on the assumption that xR glasses of the future will already have their own recognition and classification processes ‘built in’, enabling them to understand their surroundings independently and intelligently and to superimpose additional virtual information with correct positioning,” he adds.

Meanwhile, Pinzariu reports that adoption of machine vision technology has increased in recent years, driven by demand for automation, with manufacturers adopting automated solutions to “keep operations running more efficiently, deal with labour shortages and an increase in production targets.”

“Machine vision was used extensively in recent years, and there will be continued growth in this market. Automation of quality assurance processes in the manufacturing industry has [also] seen an increase in demand, with the use of robots and collaborative robots,” she says.

Pinzariu also observes that solutions such as object recognition (for example, personal protective equipment detection), part measurement, presence detection, location tracking, and defect detection are increasingly being developed.

“Finding defective parts already proved that it could improve cycle time, and it will continue to be used to aid the operators in their tasks and meet the increasing demand,” she says.

Other factors contributing to higher demand for vision systems are recent advances in deep learning technology, especially as algorithms become better able to process large numbers of images. Broadly speaking, Pinzariu says AI combines the advantages of traditional rule-based machine vision systems with the kind of judgement that human operators bring, in a semi- or fully automated fashion.

“Deep learning is a great tool to be used, where subjective decisions need to be made, like human inspection, where the identification of features is difficult, due to the complexity or variability of the image. Computer vision-based solutions are expected to become more affordable, with the development of low-power AI chips that process neural networks. Low-power AI processing will continue to shift to edge devices, making it more accessible, affordable, and easier to implement,” she says.

“There will also be an increase in the use of AR in computer vision. The solutions developed will increase in complexity, such as extracting information from the whole scene and not just image classification-based. Other applications would be anomaly detection [and] assembling components using instructions in AR,” she adds.