A new thermal imaging method could improve the awareness of automated robots and vehicles, especially in darkness, researchers say.

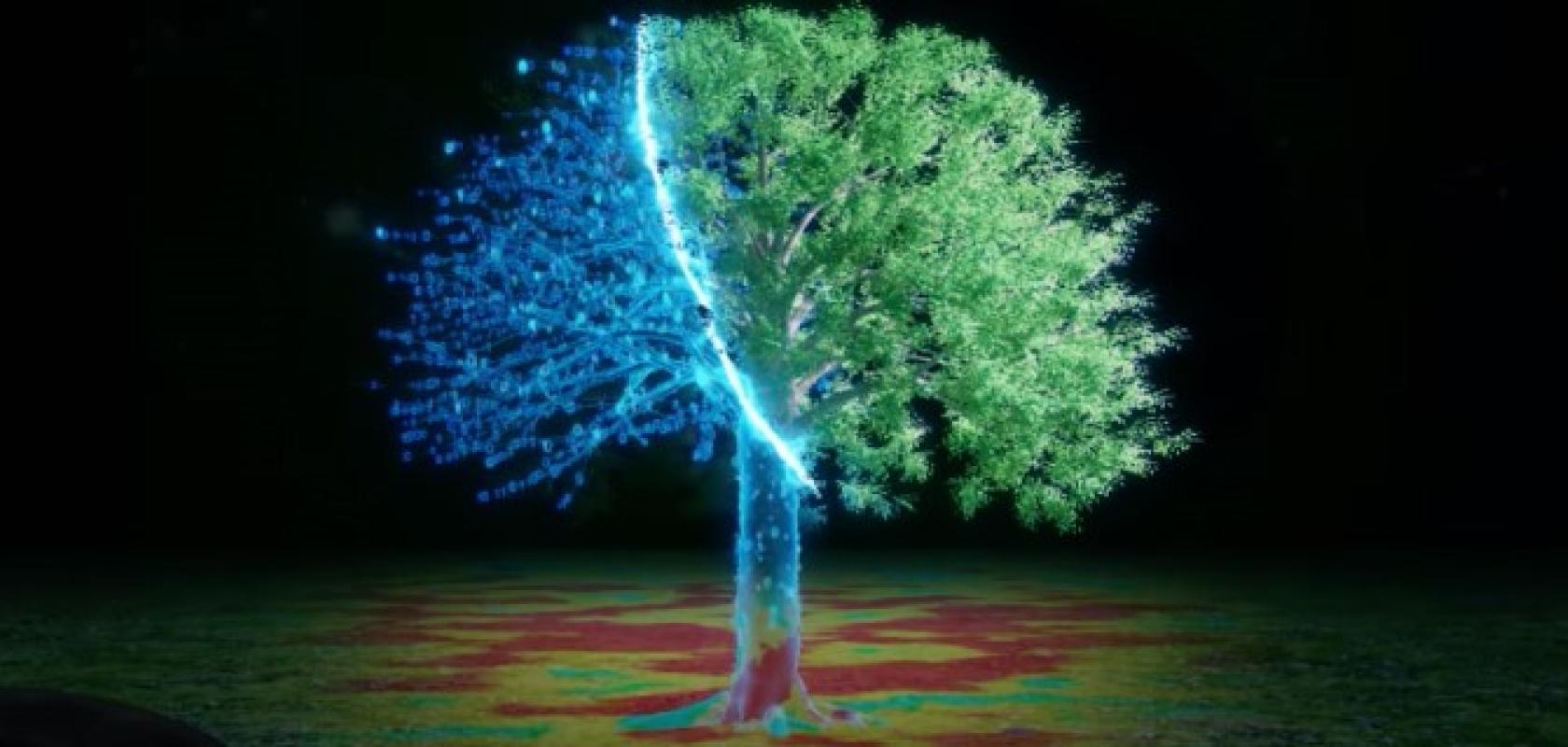

The method, called heat-assisted detection and ranging (HADAR), is being developed by researchers at Purdue University, US. It combines thermal physics, infrared imaging and machine learning in an attempt to pave the way to fully passive and physics-aware machine perception, the team says.

The initial applications of HADAR, according to the researchers, are automated vehicles and robots that interact with humans in complex environments, with further possible uses in agriculture, defence, geosciences, health care, and wildlife monitoring.

HADAR was developed by Zubin Jacob, Elmore Associate Professor of Electrical and Computer Engineering, and research scientist Fanglin Bao.

Bao said: “HADAR vividly recovers the texture from the cluttered heat signal and accurately disentangles temperature, emissivity and texture, or TeX, of all objects in a scene. It sees texture and depth through the darkness as if it were day and also perceives physical attributes beyond RGB, or red, green and blue, visible imaging or conventional thermal sensing. It is surprising that it is possible to see through pitch darkness like broad daylight.”

The team tested HADAR TeX vision using an off-road nighttime scene.

Bao added: “HADAR TeX vision recovered textures and overcame the ghosting effect. It recovered fine textures such as water ripples, bark wrinkles and culverts in addition to details about the grassy land.”

Traditional active sensors such as LiDAR, radar, and sonar emit and receive signals to gather 3D scene information, but as they scale up they encounter issues such as signal interference and eye-safety concerns, the researchers say. Video cameras, which rely on ambient illumination, have advantages but struggle in low-light scenarios such as nighttime, fog, or rain.

“Objects and their environment constantly emit and scatter thermal radiation, leading to textureless images famously known as the ‘ghosting effect,’” Bao said. “Thermal pictures of a person’s face show only contours and some temperature contrast; there are no features, making it seem like you have seen a ghost. This loss of information, texture and features is a roadblock for machine perception using heat radiation.”

“Our work builds the information-theoretic foundations of thermal perception to show that pitch darkness carries the same amount of information as broad daylight. Evolution has made human beings biased toward the daytime. Machine perception of the future will overcome this long-standing dichotomy between day and night,” Jacob said.

“The current sensor is large and heavy since HADAR algorithms require many colours of invisible infrared radiation,” Bao said. “To apply it to self-driving cars or robots, we need to bring down the size and price while also making the cameras faster. The current sensor takes around one second to create one image, but for autonomous cars, we need around 30 to 60-hertz frame rate, or frames per second.”
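To put the frame-rate gap Bao describes into numbers, the short Python sketch below works through the arithmetic. It assumes exactly one second per image for the current sensor (the quote says "around one second"), and the function names are illustrative only, not part of any HADAR software.

```python
# Rough arithmetic for the frame-rate gap described in the quote.
# Assumption: the current sensor needs exactly 1.0 s per image
# (the article says "around one second").
CURRENT_SECONDS_PER_FRAME = 1.0


def frame_budget_ms(rate_hz: float) -> float:
    """Time available to capture one frame, in milliseconds, at a given frame rate."""
    return 1000.0 / rate_hz


def required_speedup(seconds_per_frame: float, target_rate_hz: float) -> float:
    """Factor by which capture must get faster to reach the target frame rate."""
    return seconds_per_frame * target_rate_hz


if __name__ == "__main__":
    for target_hz in (30, 60):
        budget = frame_budget_ms(target_hz)
        speedup = required_speedup(CURRENT_SECONDS_PER_FRAME, target_hz)
        print(f"{target_hz} Hz: {budget:.1f} ms per frame, about {speedup:.0f}x faster than today")
```

Under that assumption, the sensor would need to become roughly 30 to 60 times faster, leaving between about 33 and 17 milliseconds to capture each frame.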