Caption: MiniZed with Flir Lepton

Ahead of his presentation at the Embedded World conference in Nuremberg, Germany, on 28 February, Adam Taylor of consultancy firm Adiuvo Engineering and Training sets out what engineers need to consider when building an embedded infrared vision system for IoT and IIoT.

Imaging within the infrared domain provides a significant benefit in many applications that make use of the Internet of Things (IoT) and its industrial counterpart, the Industrial Internet of Things (IIoT). Creating an imaging system based on an uncooled thermal imager presents several challenges in interfacing, security, power efficiency and performance.

A heterogeneous system-on-chip allows the creation of a solution that is flexible, secure and power efficient. Prototyping is key to development, because it allows these challenges to be addressed early and time-to-market to be reduced.

Infrared imagers fall into two categories: cooled and uncooled. Cooled thermal cameras use image sensors based on HgCdTe or InSb semiconductors that must be cooled to between 70 and 100 kelvin to reduce the thermal noise generated by the device. A cooled sensor therefore brings increased complexity, cost and weight; the system also takes several minutes to reach operating temperature.

Uncooled infrared sensors operate at room temperature and use microbolometers in place of an HgCdTe or InSb sensor. Microbolometer-based thermal imagers typically have lower resolution than cooled cameras, but they make thermal imaging systems simpler, lighter and less costly to build.

The Flir Lepton is an uncooled thermal imager operating in the longwave infrared spectrum. It is a self-contained camera module with a resolution of 80 x 60 pixels (Lepton 2) or 160 x 120 pixels (Lepton 3). The module is configured via an I2C bus, while the video is streamed over SPI using the video-over-SPI (VoSPI) protocol. These standard interfaces make it well suited to many embedded infrared imaging systems.
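To give a feel for what receiving VoSPI involves, the Python sketch below splits a single packet into its fields. It assumes the Lepton 2 packet layout of 164 bytes (a 2-byte ID, a 2-byte CRC and 160 bytes carrying one 80-pixel line as 16-bit big-endian values) and treats an ID with 0xF in the low nibble of its first byte as a discard packet; these details should be checked against the Lepton datasheet. The function name is illustrative, and the CRC is read but not verified.

```python
import struct

PACKET_SIZE = 164      # assumed bytes per VoSPI packet (Lepton 2, 16-bit pixels)
PIXELS_PER_LINE = 80   # one packet carries one video line


def parse_vospi_packet(packet: bytes):
    """Return (line_number, pixels), or None if this is a discard packet.

    Illustrative helper only: the CRC field is extracted but not checked.
    """
    if len(packet) != PACKET_SIZE:
        raise ValueError("expected a 164-byte VoSPI packet")

    # Header: 16-bit ID followed by 16-bit CRC, assumed big-endian.
    packet_id, _crc = struct.unpack(">HH", packet[:4])

    # Discard packets are assumed to carry 0xF in the low nibble of the
    # first ID byte, i.e. bits 11..8 of the 16-bit ID.
    if (packet_id & 0x0F00) == 0x0F00:
        return None

    line_number = packet_id & 0x0FFF  # lower 12 bits hold the packet number
    pixels = list(struct.unpack(f">{PIXELS_PER_LINE}H", packet[4:]))
    return line_number, pixels
```

A receiver would call this once per packet, skipping any `None` results until a valid line arrives.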

Prototype imaging in the infrared domain

Creating an IoT or IIoT solution that works within the infrared domain faces the following high-level challenges and needs:

- High-performance processing systems: to implement image processing algorithms, communication and application security;

- Security: the ability to implement secure configuration, access authentication, secure communication and anti-tamper features to prevent unauthorised access;

- Flexible interface capabilities: the system has to be able to interface with the infrared modules and local displays, and to support wired and wireless communication, using both industry-standard and proprietary interfaces; and

- Power efficiency: the solution must not only be able to reduce power consumption according to the operating mode, but also offer the most power-efficient implementation.

One class of device that addresses all of the above challenges is the heterogeneous system-on-chip. These devices combine high-performance ARM processor cores with programmable logic.

A flexible prototyping solution is usually preferred when developing embedded infrared imaging devices. One platform that addresses each of these challenges in a compact form factor is the MiniZed. Users can combine the Flir Lepton or another imager with the Xilinx Zynq Z7007S device mounted on a MiniZed development board. As the MiniZed board supports WiFi and Bluetooth, it is possible to prototype IIoT and IoT applications as well as traditional imaging solutions with a local display.

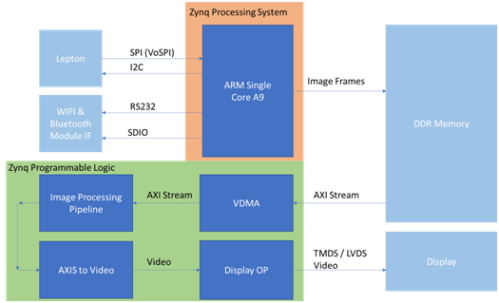

To create a tightly integrated solution, the engineer can configure the Lepton from the Zynq processing system over the I2C bus. The processing system can also handle the IoT or IIoT stacks and security, running an operating system such as Linux or FreeRTOS. The programmable logic receives the VoSPI stream and transfers the images into DDR memory, where the operating system can access them. The Zynq processing system also outputs video for a local display.
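The data flow described here, in which video lines are written into a frame buffer that software later reads, can be modelled in a few lines of Python. This is a software model only, not the programmable logic design itself: the `FrameBuffer` class and its methods are hypothetical names, and the 80 x 60 frame of 16-bit big-endian pixels is an assumption based on the Lepton 2 resolution.

```python
import struct

WIDTH, HEIGHT = 80, 60            # assumed Lepton 2 frame size
BYTES_PER_PIXEL = 2               # assumed 16-bit pixels
LINE_BYTES = WIDTH * BYTES_PER_PIXEL


class FrameBuffer:
    """Hypothetical stand-in for the image region held in DDR memory."""

    def __init__(self):
        self.data = bytearray(WIDTH * HEIGHT * BYTES_PER_PIXEL)
        self.lines_seen = set()

    def write_line(self, line_number: int, payload: bytes) -> None:
        """Copy one video line to its offset, as the receiving logic would."""
        if not 0 <= line_number < HEIGHT:
            raise ValueError("line number out of range")
        if len(payload) != LINE_BYTES:
            raise ValueError("unexpected line length")
        offset = line_number * LINE_BYTES
        self.data[offset:offset + LINE_BYTES] = payload
        self.lines_seen.add(line_number)

    def complete(self) -> bool:
        """True once every line of the frame has been written."""
        return len(self.lines_seen) == HEIGHT

    def pixel(self, x: int, y: int) -> int:
        """Read one pixel, as application software would from memory."""
        offset = (y * WIDTH + x) * BYTES_PER_PIXEL
        return struct.unpack_from(">H", self.data, offset)[0]
```

In this model the application would wait for `complete()` to return true before reading pixels, which mirrors the need to hand over only whole frames to the operating system.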

The application software must also provide the required power management, powering down elements of the design such as the programmable logic when the system is not in use. The high-level architectural concept of the approach is demonstrated in the figure below.

High-level architecture

If required, the engineer can generate custom image processing functions using high-level synthesis, or use pre-existing IP blocks such as the image enhancement core, which provides noise filtering, edge enhancement and halo suppression.
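As an illustration of the kind of function involved, the sketch below is a plain Python model of a 3 x 3 median filter, a common way of suppressing isolated noisy pixels. It is not the algorithm used by the image enhancement core, and the function name is illustrative; a model like this could serve as a reference when checking a hardware implementation.

```python
from statistics import median


def median_filter_3x3(image):
    """Return a copy of a 2D list of pixel values with a 3 x 3 median applied.

    Border pixels are copied unchanged, which keeps the sketch short.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Gather the pixel and its eight neighbours.
            window = [image[y + dy][x + dx]
                      for dy in (-1, 0, 1)
                      for dx in (-1, 0, 1)]
            out[y][x] = int(median(window))
    return out
```

A single hot pixel surrounded by uniform values is replaced by the value of its neighbours, while flat regions pass through unchanged.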